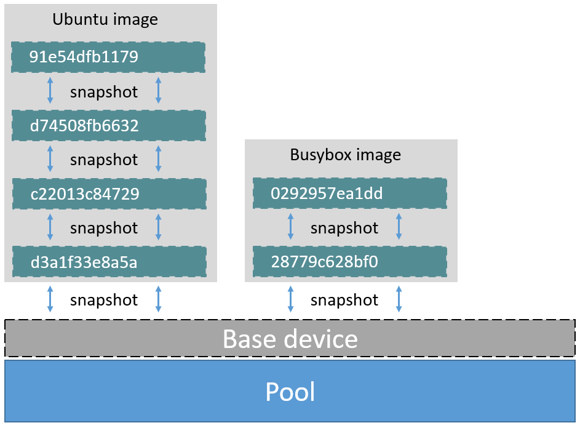

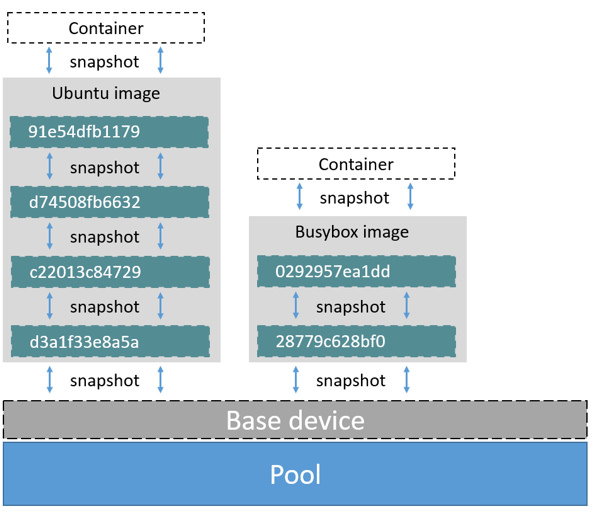

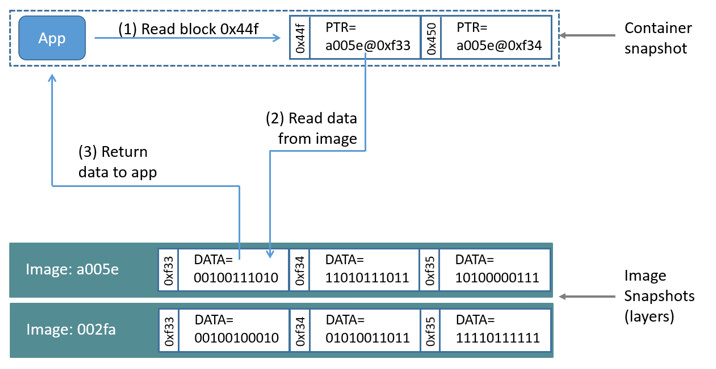

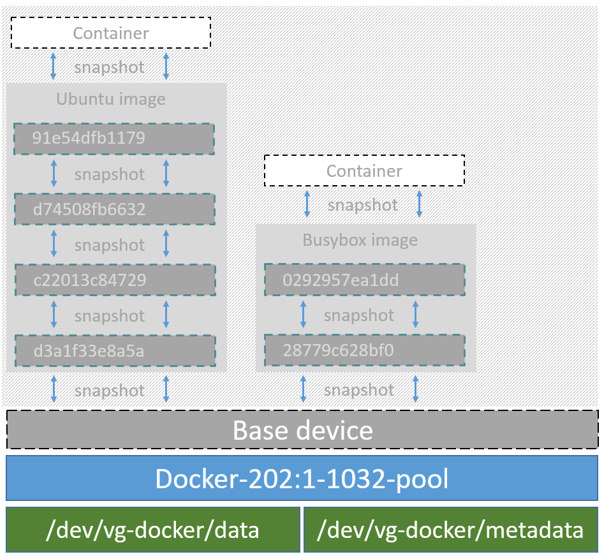

<!--[metadata]>
+++
title="Device mapper storage in practice"
description="Learn how to optimize your use of device mapper driver."
keywords=["container, storage, driver, device mapper"]
[menu.main]
parent="engine_driver"
+++
<![end-metadata]-->

# Docker and the Device Mapper storage driver

Device Mapper is a kernel-based framework that underpins many advanced
volume management technologies on Linux. Docker's `devicemapper` storage driver
leverages the thin provisioning and snapshotting capabilities of this framework
for image and container management. This article refers to the Device Mapper
storage driver as `devicemapper`, and the kernel framework as `Device Mapper`.

>**Note**: The [Commercially Supported Docker Engine (CS-Engine) running on RHEL
and CentOS Linux](https://www.docker.com/compatibility-maintenance) requires
that you use the `devicemapper` storage driver.

## An alternative to AUFS

Docker originally ran on Ubuntu and Debian Linux and used AUFS for its storage
backend. As Docker became popular, many of the companies that wanted to use it
were using Red Hat Enterprise Linux (RHEL). Unfortunately, because the upstream
mainline Linux kernel did not include AUFS, RHEL did not use AUFS either.

To correct this, Red Hat developers investigated getting AUFS into the mainline
kernel. Ultimately, though, they decided a better idea was to develop a new
storage backend. Moreover, they would base this new storage backend on existing
`Device Mapper` technology.

Red Hat collaborated with Docker Inc. to contribute this new driver. As a result
of this collaboration, Docker's Engine was re-engineered to make the storage
backend pluggable. So it was that the `devicemapper` became the second storage
driver Docker supported.

Device Mapper has been included in the mainline Linux kernel since version
2.6.9. It is a core part of the RHEL family of Linux distributions. This means
that the `devicemapper` storage driver is based on stable code that has a lot of
real-world production deployments and strong community support.

## Image layering and sharing

The `devicemapper` driver stores every image and container on its own virtual
device. These devices are thin-provisioned copy-on-write snapshot devices.
Device Mapper technology works at the block level rather than the file level.
This means that the `devicemapper` storage driver's thin provisioning and
copy-on-write operations work with blocks rather than entire files.

>**Note**: Snapshots are also referred to as *thin devices* or *virtual
>devices*. They all mean the same thing in the context of the `devicemapper`
>storage driver.

With `devicemapper` the high level process for creating images is as follows:

1. The `devicemapper` storage driver creates a thin pool.

    The pool is created from block devices or loop mounted sparse files (more
    on this later).

2. Next it creates a *base device*.

    A base device is a thin device with a filesystem. You can see which
    filesystem is in use by running the `docker info` command and checking the
    `Backing Filesystem` value.

3. Each new image (and image layer) is a snapshot of this base device.

    These are thin provisioned copy-on-write snapshots. This means that they
    are initially empty and only consume space from the pool when data is written
    to them.

With `devicemapper`, container layers are snapshots of the image they are
created from. Just as with images, container snapshots are thin provisioned
copy-on-write snapshots. The container snapshot stores all updates to the
container.
The `devicemapper` driver allocates space to these snapshots on demand from the
pool as data is written to the container.

The high level diagram below shows a thin pool with a base device and two
images.

If you look closely at the diagram you'll see that it's snapshots all the way
down. Each image layer is a snapshot of the layer below it. The lowest layer of
each image is a snapshot of the base device that exists in the pool. This
base device is a `Device Mapper` artifact and not a Docker image layer.

A container is a snapshot of the image it is created from. The diagram below
shows two containers - one based on the Ubuntu image and the other based on the
Busybox image.

## Reads with the devicemapper

Let's look at how reads and writes occur using the `devicemapper` storage
driver. The diagram below shows the high level process for reading a single
block (`0x44f`) in an example container.

1. An application makes a read request for block `0x44f` in the container.

    Because the container is a thin snapshot of an image it does not have the
    data. Instead, it has a pointer (PTR) to where the data is stored in the image
    snapshot lower down in the image stack.

2. The storage driver follows the pointer to block `0xf33` in the snapshot
relating to image layer `a005...`.

3. The `devicemapper` driver copies the contents of block `0xf33` from the image
snapshot to memory in the container.

4. The storage driver returns the data to the requesting application.

## Write examples

With the `devicemapper` driver, writing new data to a container is accomplished
by an *allocate-on-demand* operation. Updating existing data uses a
copy-on-write operation. Because Device Mapper is a block-based technology
these operations occur at the block level.
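
As a rough illustration of what block-level allocation means for space usage,
the sketch below rounds a write size up to whole blocks. It assumes the 64KB
pool block size used throughout this article; `BLOCK_SIZE` and the
`blocks_for_write` helper are hypothetical names for this example only and are
not part of Docker or Device Mapper.

```bash
# Illustrative only: assumes a 64KB thin pool block size.
BLOCK_SIZE=$((64 * 1024))

# Print how many whole blocks a write of the given size (in bytes) occupies.
# Integer ceiling division: any partial block still costs a full block.
blocks_for_write() {
  echo $(( ($1 + BLOCK_SIZE - 1) / BLOCK_SIZE ))
}

blocks_for_write $((56 * 1024))    # prints 1
blocks_for_write $((200 * 1024))   # prints 4
```

A 56KB write therefore still consumes one full 64KB block from the pool, while
a 200KB write consumes four.
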

For example, when making a small change to a large file in a container, the
`devicemapper` storage driver does not copy the entire file. It only copies the
blocks to be modified. Each block is 64KB.

### Writing new data

To write 56KB of new data to a container:

1. An application makes a request to write 56KB of new data to the container.

2. The allocate-on-demand operation allocates a single new 64KB block to the
container's snapshot.

    If the write operation is larger than 64KB, multiple new blocks are
    allocated to the container's snapshot.

3. The data is written to the newly allocated block.

### Overwriting existing data

To modify existing data for the first time:

1. An application makes a request to modify some data in the container.

2. A copy-on-write operation locates the blocks that need updating.

3. The operation allocates new empty blocks to the container snapshot and
copies the data into those blocks.

4. The modified data is written into the newly allocated blocks.

The application in the container is unaware of any of these
allocate-on-demand and copy-on-write operations. However, they may add latency
to the application's read and write operations.

## Configure Docker with devicemapper

The `devicemapper` is the default Docker storage driver on some Linux
distributions. This includes RHEL and most of its forks. Currently, the
following distributions support the driver:

* RHEL/CentOS/Fedora
* Ubuntu 12.04
* Ubuntu 14.04
* Debian

Docker hosts running the `devicemapper` storage driver default to a
configuration mode known as `loop-lvm`. This mode uses sparse files to build
the thin pool used by image and container snapshots. The mode is designed to
work out-of-the-box with no additional configuration.
However, production deployments should not run under `loop-lvm` mode.

You can detect the mode by viewing the output of the `docker info` command:

```bash
$ sudo docker info

Containers: 0
Images: 0
Storage Driver: devicemapper
Pool Name: docker-202:2-25220302-pool
Pool Blocksize: 65.54 kB
Backing Filesystem: xfs
[...]
Data loop file: /var/lib/docker/devicemapper/devicemapper/data
Metadata loop file: /var/lib/docker/devicemapper/devicemapper/metadata
Library Version: 1.02.93-RHEL7 (2015-01-28)
[...]
```

The output above shows a Docker host running with the `devicemapper` storage
driver operating in `loop-lvm` mode. This is indicated by the fact that the
`Data loop file` and `Metadata loop file` values point to files under
`/var/lib/docker/devicemapper/devicemapper`. These are loopback mounted sparse
files.

### Configure direct-lvm mode for production

The preferred configuration for production deployments is `direct-lvm`. This
mode uses block devices to create the thin pool. The following procedure shows
you how to configure a Docker host to use the `devicemapper` storage driver in
a `direct-lvm` configuration.

> **Caution:** If you have already run the Docker daemon on your Docker host
> and have images you want to keep, `push` them to Docker Hub or your private
> Docker Trusted Registry before attempting this procedure.

The procedure below will create a logical volume configured as a thin pool to
use as backing for the storage pool. It assumes that you have a spare block
device at `/dev/xvdf` with enough free space to complete the task. The device
identifier and volume sizes may be different in your environment and you
should substitute your own values throughout the procedure. The procedure also
assumes that the Docker daemon is in the `stopped` state.

1. Log in to the Docker host you want to configure and stop the Docker daemon.

2. Install the LVM2 package.

    The LVM2 package includes the userspace toolset that provides logical volume
    management facilities on Linux.

3. Create a physical volume, replacing `/dev/xvdf` with your block device.

    ```bash
    $ pvcreate /dev/xvdf
    ```

4. Create a 'docker' volume group.

    ```bash
    $ vgcreate docker /dev/xvdf
    ```

5. Create a logical volume named `thinpool` for data and one named
`thinpoolmeta` for metadata.

    In this example, the data logical volume is 95% of the 'docker' volume group
    size. Leaving this free space allows for auto expanding of either the data or
    metadata if space runs low, as a temporary stopgap.

    ```bash
    $ lvcreate --wipesignatures y -n thinpool docker -l 95%VG
    $ lvcreate --wipesignatures y -n thinpoolmeta docker -l 1%VG
    ```

6. Convert the pool to a thin pool.

    ```bash
    $ lvconvert -y --zero n -c 512K --thinpool docker/thinpool --poolmetadata docker/thinpoolmeta
    ```

7. Configure autoextension of thin pools via an `lvm` profile.

    ```bash
    $ vi /etc/lvm/profile/docker-thinpool.profile
    ```

8. Specify the `thin_pool_autoextend_threshold` value.

    The value should be the percentage of space used before `lvm` attempts
    to autoextend the available space (100 = disabled).

    ```
    thin_pool_autoextend_threshold = 80
    ```

9. Modify the `thin_pool_autoextend_percent` value to set how much the thin pool
grows when autoextension occurs.

    The value is the percentage of space by which to increase the thin pool
    (0 = disabled).

    ```
    thin_pool_autoextend_percent = 20
    ```

10. Check your work. Your `docker-thinpool.profile` file should appear similar
to the following.

    An example `/etc/lvm/profile/docker-thinpool.profile` file:

    ```
    activation {
        thin_pool_autoextend_threshold=80
        thin_pool_autoextend_percent=20
    }
    ```

11. Apply your new lvm profile.

    ```bash
    $ lvchange --metadataprofile docker-thinpool docker/thinpool
    ```

12. Verify the `lv` is monitored.

    ```bash
    $ lvs -o+seg_monitor
    ```

13. If the Docker daemon was previously started, clear your graph driver directory.

    Clearing your graph driver removes any images, containers, and volumes in your
    Docker installation.

    ```bash
    $ rm -rf /var/lib/docker/*
    ```

14. Configure the Docker daemon with specific devicemapper options.

    There are two ways to do this. You can set options on the command line if you
    start the daemon there:

    ```bash
    --storage-driver=devicemapper --storage-opt=dm.thinpooldev=/dev/mapper/docker-thinpool --storage-opt dm.use_deferred_removal=true
    ```

    You can also set them for startup in the `daemon.json` configuration, for example:

    ```json
    {
      "storage-driver": "devicemapper",
      "storage-opts": [
        "dm.thinpooldev=/dev/mapper/docker-thinpool",
        "dm.use_deferred_removal=true"
      ]
    }
    ```

15. If using systemd and modifying the daemon configuration via unit or drop-in
file, reload systemd to scan for changes.

    ```bash
    $ systemctl daemon-reload
    ```

16. Start the Docker daemon.

    ```bash
    $ systemctl start docker
    ```

After you start the Docker daemon, ensure you monitor your thin pool and volume
group free space. While the volume group will auto-extend, it can still fill
up. To monitor logical volumes, use `lvs` without options or `lvs -a` to see the
data and metadata sizes. To monitor volume group free space, use the `vgs` command.
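
The monitoring described above could be scripted along the following lines.
This is a minimal sketch, not an official tool: it assumes the `docker/thinpool`
logical volume created in the procedure, and the `THRESHOLD` value and
`over_threshold` helper are hypothetical names chosen for this example. It
relies on the `data_percent` and `metadata_percent` report fields of `lvs`, and
usually needs to run as root.

```bash
# Illustrative warning level, in percent; pick a value that suits your host.
THRESHOLD=80

# Succeeds (exit status 0) when the given percentage is at or above THRESHOLD.
over_threshold() {
  awk -v p="$1" -v t="$THRESHOLD" 'BEGIN { exit !((p + 0) >= (t + 0)) }'
}

# Only query LVM when the lvs tool is actually installed.
if command -v lvs >/dev/null 2>&1; then
  # Prints two columns: data usage and metadata usage of the thin pool.
  usage=$(lvs --noheadings -o data_percent,metadata_percent docker/thinpool 2>/dev/null)
  set -- $usage
  if over_threshold "${1:-0}"; then echo "thin pool data usage is high: $1%"; fi
  if over_threshold "${2:-0}"; then echo "thin pool metadata usage is high: $2%"; fi
fi
```

Running a check like this from cron or a systemd timer is one way to get an
early warning before the pool fills. The thresholds that `lvm` itself uses for
autoextension are still the ones set in the profile above.
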

Logs can show the auto-extension of the thin pool when it hits the threshold.
To view the logs, use:

```bash
$ journalctl -fu dm-event.service
```

If you run into repeated problems with the thin pool, you can use the
`dm.min_free_space` option to tune the Engine behavior. This value ensures that
operations fail with a warning when the free space is at or near the minimum.
For information, see <a
href="../../../reference/commandline/dockerd/#storage-driver-options"
target="_blank">the storage driver options in the Engine daemon reference</a>.

### Examine devicemapper structures on the host

You can use the `lsblk` command to see the device files created above and the
`pool` that the `devicemapper` storage driver creates on top of them.

```bash
$ sudo lsblk
NAME                       MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
xvda                       202:0    0    8G  0 disk
└─xvda1                    202:1    0    8G  0 part /
xvdf                       202:80   0   10G  0 disk
├─vg--docker-data          253:0    0   90G  0 lvm
│ └─docker-202:1-1032-pool 253:2    0   10G  0 dm
└─vg--docker-metadata      253:1    0    4G  0 lvm
  └─docker-202:1-1032-pool 253:2    0   10G  0 dm
```

The diagram below shows the image from prior examples updated with the detail
from the `lsblk` command above.

In the diagram, the pool is named `docker-202:1-1032-pool` and spans the `data`
and `metadata` devices created earlier. The `devicemapper` driver constructs the
pool name as follows:

```
docker-MAJ:MIN-INO-pool
```

`MAJ`, `MIN` and `INO` refer to the major and minor device numbers and inode.

Because Device Mapper operates at the block level it is more difficult to see
diffs between image layers and containers. Docker 1.10 and later no longer
matches image layer IDs with directory names in `/var/lib/docker`. However,
there are two key directories.
The `/var/lib/docker/devicemapper/mnt` directory
contains the mount points for image and container layers. The
`/var/lib/docker/devicemapper/metadata` directory contains one file for every
image layer and container snapshot. The files contain metadata about each
snapshot in JSON format.

## Increase capacity on a running device

You can increase the capacity of the pool on a running thin-pool device. This is
useful if the data's logical volume is full and the volume group is at full
capacity.

### For a loop-lvm configuration

In this scenario, the thin pool is configured to use `loop-lvm` mode. To show
the specifics of the existing configuration use `docker info`:

```bash
$ sudo docker info

Containers: 0
Running: 0
Paused: 0
Stopped: 0
Images: 2
Server Version: 1.11.0
Storage Driver: devicemapper
Pool Name: docker-8:1-123141-pool
Pool Blocksize: 65.54 kB
Base Device Size: 10.74 GB
Backing Filesystem: ext4
Data file: /dev/loop0
Metadata file: /dev/loop1
Data Space Used: 1.202 GB
Data Space Total: 107.4 GB
Data Space Available: 4.506 GB
Metadata Space Used: 1.729 MB
Metadata Space Total: 2.147 GB
Metadata Space Available: 2.146 GB
Udev Sync Supported: true
Deferred Removal Enabled: false
Deferred Deletion Enabled: false
Deferred Deleted Device Count: 0
Data loop file: /var/lib/docker/devicemapper/devicemapper/data
WARNING: Usage of loopback devices is strongly discouraged for production use. Use `--storage-opt dm.thinpooldev` to specify a custom block storage device.
Metadata loop file: /var/lib/docker/devicemapper/devicemapper/metadata
Library Version: 1.02.90 (2014-09-01)
Logging Driver: json-file
[...]
```

The `Data Space` values show that the pool is 100GB total. This example extends
the pool to 200GB.

1. List the sizes of the devices.

    ```bash
    $ sudo ls -lh /var/lib/docker/devicemapper/devicemapper/

    total 1175492
    -rw------- 1 root root 100G Mar 30 05:22 data
    -rw------- 1 root root 2.0G Mar 31 11:17 metadata
    ```

2. Truncate the `data` file to the new size (approximately 200GB).

    ```bash
    $ sudo truncate -s 214748364800 /var/lib/docker/devicemapper/devicemapper/data
    ```

3. Verify the file size changed.

    ```bash
    $ sudo ls -lh /var/lib/docker/devicemapper/devicemapper/

    total 1.2G
    -rw------- 1 root root 200G Apr 14 08:47 data
    -rw------- 1 root root 2.0G Apr 19 13:27 metadata
    ```

4. Reload the data loop device.

    ```bash
    $ sudo blockdev --getsize64 /dev/loop0

    107374182400

    $ sudo losetup -c /dev/loop0

    $ sudo blockdev --getsize64 /dev/loop0

    214748364800
    ```

5. Reload the devicemapper thin pool.

    a. Get the pool name first.

    ```bash
    $ sudo dmsetup status | grep pool

    docker-8:1-123141-pool: 0 209715200 thin-pool 91
    422/524288 18338/1638400 - rw discard_passdown queue_if_no_space -
    ```

    The name is the string before the colon.

    b. Dump the device mapper table.

    ```bash
    $ sudo dmsetup table docker-8:1-123141-pool

    0 209715200 thin-pool 7:1 7:0 128 32768 1 skip_block_zeroing
    ```

    c. Calculate the real total sectors of the thin pool now.

    Change the second number of the table info (i.e. the disk end sector) to
    reflect the new number of 512 byte sectors in the disk. For example, as the
    new loop size is 200GB (214748364800 bytes), change the second number to
    419430400 (214748364800 / 512).

    d. Reload the thin pool with the new sector number.

    ```bash
    $ sudo dmsetup suspend docker-8:1-123141-pool \
        && sudo dmsetup reload docker-8:1-123141-pool --table '0 419430400 thin-pool 7:1 7:0 128 32768 1 skip_block_zeroing' \
        && sudo dmsetup resume docker-8:1-123141-pool
    ```

#### The device_tool

The Docker project's `contrib` directory contains tools that are not part of the
core distribution. These tools are often useful but can also be out-of-date. <a
href="https://goo.gl/wNfDTi">In this directory is `device_tool.go`</a>,
which you can also use to resize the loop-lvm thin pool.

To use the tool, compile it first. Then, do the following to resize the pool:

```bash
$ ./device_tool resize 200GB
```

### For a direct-lvm mode configuration

In this example, you extend the capacity of a running device that uses the
`direct-lvm` configuration. This example assumes you are using the `/dev/sdh1`
disk partition.

1. Extend the volume group (VG) `vg-docker`.

    ```bash
    $ sudo vgextend vg-docker /dev/sdh1

    Volume group "vg-docker" successfully extended
    ```

    Your volume group may use a different name.

2. Extend the `data` logical volume (LV) `vg-docker/data`.

    ```bash
    $ sudo lvextend -l+100%FREE -n vg-docker/data

    Extending logical volume data to 200 GiB
    Logical volume data successfully resized
    ```

3. Reload the devicemapper thin pool.

    a. Get the pool name.

    ```bash
    $ sudo dmsetup status | grep pool

    docker-253:17-1835016-pool: 0 96460800 thin-pool 51593 6270/1048576 701943/753600 - rw no_discard_passdown queue_if_no_space
    ```

    The name is the string before the colon.

    b. Dump the device mapper table.

    ```bash
    $ sudo dmsetup table docker-253:17-1835016-pool

    0 96460800 thin-pool 252:0 252:1 128 32768 1 skip_block_zeroing
    ```

    c. Calculate the real total sectors of the thin pool now. You can use
    `blockdev` to get the real size of the data LV.

    Change the second number of the table info (i.e. the number of sectors) to
    reflect the new number of 512 byte sectors in the disk. For example, as the
    new data `lv` size is `264132100096` bytes, change the second number to
    `515883008` (264132100096 / 512).

    ```bash
    $ sudo blockdev --getsize64 /dev/vg-docker/data

    264132100096
    ```

    d. Then reload the thin pool with the new sector number.

    ```bash
    $ sudo dmsetup suspend docker-253:17-1835016-pool \
        && sudo dmsetup reload docker-253:17-1835016-pool --table '0 515883008 thin-pool 252:0 252:1 128 32768 1 skip_block_zeroing' \
        && sudo dmsetup resume docker-253:17-1835016-pool
    ```

## Device Mapper and Docker performance

It is important to understand the impact that allocate-on-demand and
copy-on-write operations can have on overall container performance.

### Allocate-on-demand performance impact

The `devicemapper` storage driver allocates new blocks to a container via an
allocate-on-demand operation. This means that each time an app writes to
somewhere new inside a container, one or more empty blocks have to be located
from the pool and mapped into the container.

All blocks are 64KB. A write that uses less than 64KB still results in a single
64KB block being allocated. Writing more than 64KB of data uses multiple 64KB
blocks. This can impact container performance, especially in containers that
perform lots of small writes. However, once a block is allocated to a container
subsequent reads and writes can operate directly on that block.

### Copy-on-write performance impact

Each time a container updates existing data for the first time, the
`devicemapper` storage driver has to perform a copy-on-write operation.
This copies the data from the image snapshot to the container's snapshot. This
process can have a noticeable impact on container performance.

All copy-on-write operations have a 64KB granularity. As a result, updating
32KB of a 1GB file causes the driver to copy a single 64KB block into the
container's snapshot. This has obvious performance advantages over file-level
copy-on-write operations which would require copying the entire 1GB file into
the container layer.

In practice, however, containers that perform lots of small block writes
(<64KB) can perform worse with `devicemapper` than with AUFS.

### Other device mapper performance considerations

There are several other things that impact the performance of the
`devicemapper` storage driver.

- **The mode.** The default mode for Docker running the `devicemapper` storage
driver is `loop-lvm`. This mode uses sparse files and suffers from poor
performance. It is **not recommended for production**. The recommended mode for
production environments is `direct-lvm` where the storage driver writes
directly to raw block devices.

- **High speed storage.** For best performance you should place the `Data file`
and `Metadata file` on high speed storage such as SSD. This can be direct
attached storage or from a SAN or NAS array.

- **Memory usage.** `devicemapper` is not the most memory efficient Docker
storage driver. Launching *n* copies of the same container loads *n* copies of
its files into memory. This can have a memory impact on your Docker host. As a
result, the `devicemapper` storage driver may not be the best choice for PaaS
and other high density use cases.

One final point: data volumes provide the best and most predictable
performance.
This is because they bypass the storage driver and do not incur
any of the potential overheads introduced by thin provisioning and
copy-on-write. For this reason, you should place heavy write workloads on
data volumes.

## Related Information

* [Understand images, containers, and storage drivers](imagesandcontainers.md)
* [Select a storage driver](selectadriver.md)
* [AUFS storage driver in practice](aufs-driver.md)
* [Btrfs storage driver in practice](btrfs-driver.md)
* [daemon reference](../../reference/commandline/dockerd.md#storage-driver-options)