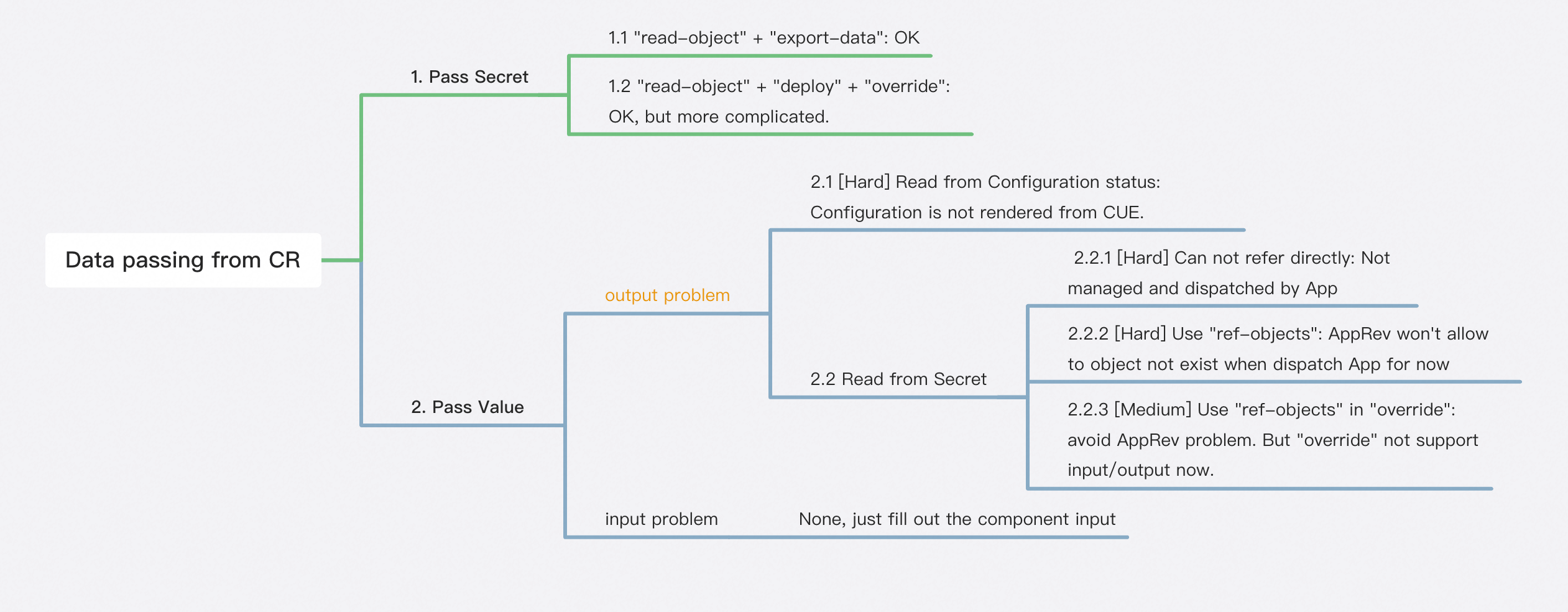

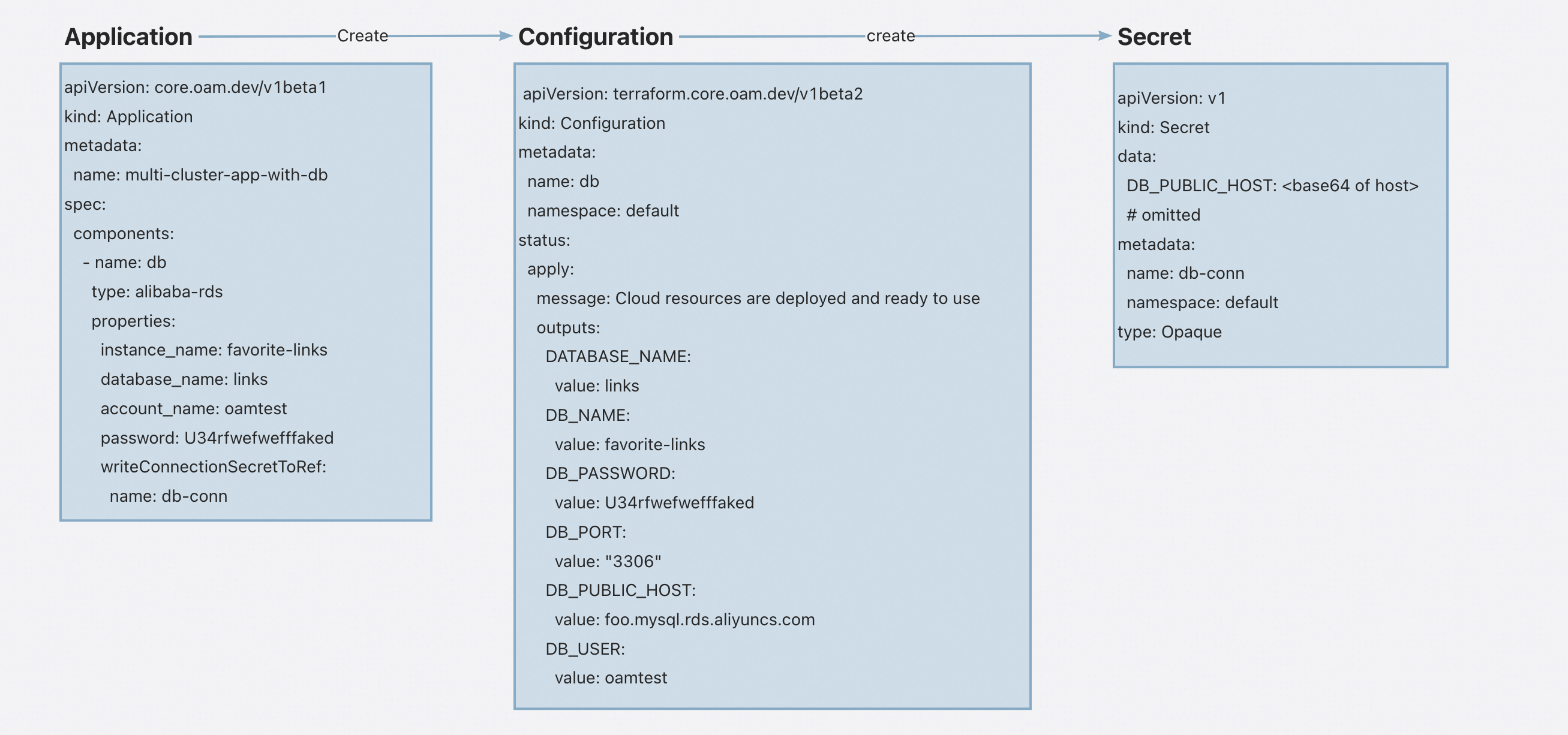

# Data Passing From Cloud Resource

## Introduction

This proposal explains how to pass data from a cloud resource to other workloads.

## Background

In issue [#4339](https://github.com/kubevela/kubevela/issues/4339), we discussed how to pass data from a cloud
resource to other workloads. Here's a summary of the discussion.

### Why

KubeVela leverages the Terraform controller to provision cloud resources within a Kubernetes environment.
When cloud databases are involved, the connection information must be passed to the related workloads.
The Terraform controller writes this connection information to a Kubernetes Secret.

### Goals

Passing data from a cloud resource can be viewed from two perspectives: the method and the workload location.

**Workload Location**

1. Local cluster
2. Managed cluster

**Method**

1. Pass Secret: a Secret object is created in the destination cluster. The workload refers to the Secret name and key.
2. Pass Value: a Secret object is **NOT** created in the destination cluster. KubeVela fills the workload spec directly
   with the connection information values.

## Proposal

There are four combinations of workload location and method. The implementation status is listed below. We discuss
each of them separately.

|             | Local cluster | Managed cluster |
|-------------|---------------|-----------------|
| Pass Secret | ✅            | ✅              |
| Pass Value  | ✅            |                 |

### Workload in local cluster

#### Pass Secret

This combination is the most straightforward one. The Secret object already exists in the local cluster once the
Configuration (cloud resource) is available, so the workload can simply refer to the Secret name in the Application spec.
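For reference, here is a sketch of what such a connection Secret might look like. The key names (`DB_PUBLIC_HOST`, `DATABASE_NAME`, `DB_USER`, `DB_PASSWORD`) are the ones consumed by the examples in this document. The namespace and all values are placeholder assumptions, and the real set of keys depends on the Terraform module behind the cloud resource. Values under `data` are base64-encoded, which matters whenever a workflow step reads the Secret directly.

```yaml
# Hypothetical connection Secret: key names match the examples in this
# document; the namespace and the values are placeholders.
apiVersion: v1
kind: Secret
metadata:
  name: db-conn          # the name set in writeConnectionSecretToRef
  namespace: default     # assumption: same namespace as the Application
type: Opaque
data:
  DB_PUBLIC_HOST: ZGIuZXhhbXBsZS5jb20=   # base64 of "db.example.com"
  DATABASE_NAME: bGlua3M=                # base64 of "links"
  DB_USER: b2FtdGVzdA==                  # base64 of "oamtest"
  DB_PASSWORD: Y2hhbmdlbWU=              # base64 of "changeme"
```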
```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: local-pass-secret
spec:
  components:
    - name: db
      type: alibaba-rds
      properties:
        instance_name: favorite-links
        database_name: links
        account_name: oamtest
        password: U34rfwefwefffaked
        writeConnectionSecretToRef:
          name: db-conn
    - name: favorite-links
      type: webservice
      dependsOn:
        - db
      properties:
        image: oamdev/nodejs-mysql-links:v0.0.1
        port: 4000
      traits:
        - type: service-binding
          properties:
            envMappings:
              DATABASE_HOST:
                secret: db-conn
                key: DB_PUBLIC_HOST
              DATABASE_NAME:
                secret: db-conn
                key: DATABASE_NAME
              DATABASE_USER:
                secret: db-conn
                key: DB_USER
              DATABASE_PASSWORD:
                secret: db-conn
                key: DB_PASSWORD
```

#### Pass Value

This combination is similar to the previous one. The difference is that we have to read the Secret object's values in
the workflow. We then use the component-level input/output mechanism to pass those values to the workload.

**Note**: `apply-component` can pass values from workflow-level outputs to component-level inputs. This only works for
the local cluster, so we can use it here to pass values to the workload. The [_Pass Value_ + _Managed
cluster_](#workload-in-managed-cluster) section explains why it doesn't work in the multi-cluster scenario.
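In the example below, the Secret is read by a `read-secret` step. If that step type is not available, the sketch below shows an equivalent step built on `read-object`, the step used in the managed-cluster example later in this document. Because `read-object` returns the raw Secret object, each value under `data` is base64-encoded and is decoded with CUE's `encoding/base64` package, mirroring that example. The output names are chosen to match the `inputs` declared on the `receiver` component.

```yaml
# Sketch: a possible drop-in replacement for the `read-secret` step below.
- name: read-secret
  type: read-object
  properties:
    apiVersion: v1
    kind: Secret
    name: db-conn
  outputs:
    # read-object returns the raw Secret, so each data value is base64-decoded.
    - name: host
      valueFrom: |
        import "encoding/base64"
        base64.Decode(null, output.value.data.DB_PUBLIC_HOST)
    - name: username
      valueFrom: |
        import "encoding/base64"
        base64.Decode(null, output.value.data.DB_USER)
    - name: password
      valueFrom: |
        import "encoding/base64"
        base64.Decode(null, output.value.data.DB_PASSWORD)
```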
```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: local-pass-value
spec:
  components:
    - name: sample-db
      type: alibaba-rds
      properties:
        instance_name: sample-db
        account_name: oamtest
        password: U34rfwefwefffaked
        writeConnectionSecretToRef:
          name: db-conn
    # Example from https://kubevela.io/docs/end-user/components/cloud-services/provision-and-consume-database
    - name: receiver
      type: webservice
      properties:
        image: zzxwill/flask-web-application:v0.3.1-crossplane
        port: 80
        env:
          - name: endpoint
          - name: DB_PASSWORD # keep the style to suit image
          - name: username
      inputs:
        - from: host
          parameterKey: env[0].value
        - from: password
          parameterKey: env[1].value
        - from: username
          parameterKey: env[2].value

  workflow:
    steps:
      - name: apply-db
        type: apply-component
        properties:
          component: sample-db
      - name: read-secret
        type: read-secret
        properties:
          name: db-conn
        outputs:
          - name: host
            valueFrom: output.value.DB_PUBLIC_HOST
          - name: username
            valueFrom: output.value.DB_USER
          - name: password
            valueFrom: output.value.DB_PASSWORD
      - name: apply-receiver
        type: apply-component
        properties:
          component: receiver
```

### Workload in managed cluster

For workloads in a managed cluster, passing a Secret can be implemented, but passing values runs into some issues.

As the mind map shows, the main problem with **passing values** is reading data from either the Configuration or the
Secret it created. The diagram of the relationship between Application, Configuration and Secret helps to explain
items 2.1 and 2.2.1 of the mind map.

#### Pass Secret

Here's a demo of passing a Secret from a cloud resource component to other workloads. In this demo, the application
first creates a database and then creates two workloads in different clusters. Both workloads use the same database.

```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: multi-cluster-app-with-db
spec:
  components:
    - name: db
      type: alibaba-rds
      properties:
        instance_name: favorite-links
        database_name: links
        account_name: oamtest
        password: U34rfwefwefffaked
        writeConnectionSecretToRef:
          name: db-conn
    - name: favorite-links
      type: webservice
      properties:
        image: oamdev/nodejs-mysql-links:v0.0.1
        port: 4000
      traits:
        - type: service-binding
          properties:
            envMappings:
              DATABASE_HOST:
                secret: db-conn
                key: DB_PUBLIC_HOST
              DATABASE_NAME:
                secret: db-conn
                key: DATABASE_NAME
              DATABASE_USER:
                secret: db-conn
                key: DB_USER
              DATABASE_PASSWORD:
                secret: db-conn
                key: DB_PASSWORD
  policies:
    - name: worker
      type: topology
      properties:
        clusters:
          - cluster-worker
    - name: all-cluster
      type: topology
      properties:
        clusters:
          - cluster-worker
          - local
    - name: links-comp
      type: override
      properties:
        selector:
          - favorite-links
  workflow:
    steps:
      - name: create-rds
        type: apply-component
        properties:
          component: db
      - name: read-secret
        type: read-object
        properties:
          apiVersion: v1
          kind: Secret
          name: db-conn
        outputs:
          - name: db-host
            valueFrom: |
              import "encoding/base64"
              base64.Decode(null, output.value.data.DB_PUBLIC_HOST)
          - name: db-user
            valueFrom: |
              import "encoding/base64"
              base64.Decode(null, output.value.data.DB_USER)
          - name: db-password
            valueFrom: |
              import "encoding/base64"
              base64.Decode(null, output.value.data.DB_PASSWORD)
          - name: db-name
            valueFrom: |
              import "encoding/base64"
              base64.Decode(null, output.value.data.DATABASE_NAME)
      - name: export-secret
        type: export-data
        properties:
          name: db-conn
          namespace: default
          kind: Secret
          topology: worker
        inputs:
          - from: db-host
            parameterKey: data.DB_PUBLIC_HOST
          - from: db-user
            parameterKey: data.DB_USER
          - from: db-password
            parameterKey: data.DB_PASSWORD
          - from: db-name
            parameterKey: data.DATABASE_NAME

      - name: deploy-web
        type: deploy
        properties:
          policies: [ "all-cluster", "links-comp" ]
```

#### Discussion About Passing Value

There are still some scenarios where we need to pass values to a workload in a sub-cluster. For example, an
off-the-shelf Helm chart that can't be modified, like
[wordpress](https://github.com/helm/charts/blob/master/stable/wordpress/values.yaml), requires the database endpoint
to be passed in the chart values. In this case, we can't pass a Secret to it.

To fill the last cell of the table, we have to solve either 2.1 or 2.2 of the mind map.

- To solve 2.1, we need to refactor the Configuration rendering logic and unify it into CUE.
- To solve 2.2, option 2.2.3 is the easier route and needs less effort.

Before we put effort into solving this problem, we need to collect more requirements to see whether it's necessary.

##### Apply-component and Deploy

Here's a further discussion of why *apply-component* can't work for workloads in a managed cluster. In the
[Pass Value + Local cluster](#workload-in-local-cluster) combination, we read the Secret in a workflow step
(`read-secret` in the example above) and use `apply-component` to overcome the problem that Secret values can be read
in the `workflow` but not in the `component` of an Application.

In the multi-cluster scenario, `apply-component` doesn't work because it was designed before KubeVela had
multi-cluster capability. Once KubeVela gained that capability, the `deploy` workflow step was introduced to dispatch
components to multiple clusters.
Unlike `apply-component`, which has a built-in capability to convert workflow-level inputs/outputs into component-level
ones, `deploy` applies the inputs/outputs it accepts to the step's own properties, like most other workflow steps.
In other words, an input to `deploy` can only change this part of the step's parameters:

```cue
parameter: {
	//+usage=If set to false, the workflow will suspend automatically before this step, default to be true.
	auto: *true | bool
	//+usage=Declare the policies that used for this deployment. If not specified, the components will be deployed to the hub cluster.
	policies: *[] | [...string]
	//+usage=Maximum number of concurrent delivered components.
	parallelism: *5 | int
	//+usage=If set false, this step will apply the components with the terraform workload.
	ignoreTerraformComponent: *true | bool
}
```

### Conclusion

For cloud resource data passing, we have solutions for three of the four scenarios. The last one (Pass Value to a
workload in a managed cluster) still needs discussion and is waiting for more requirements.