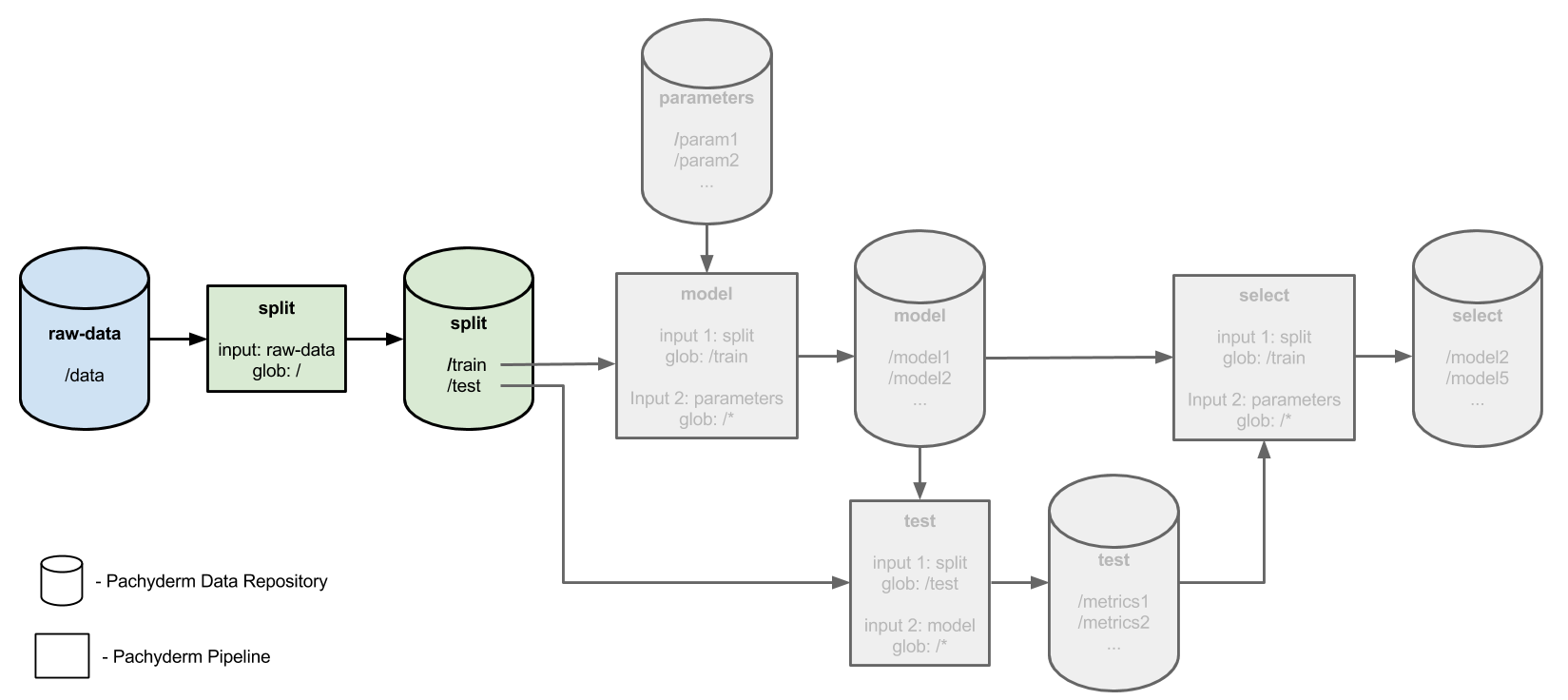

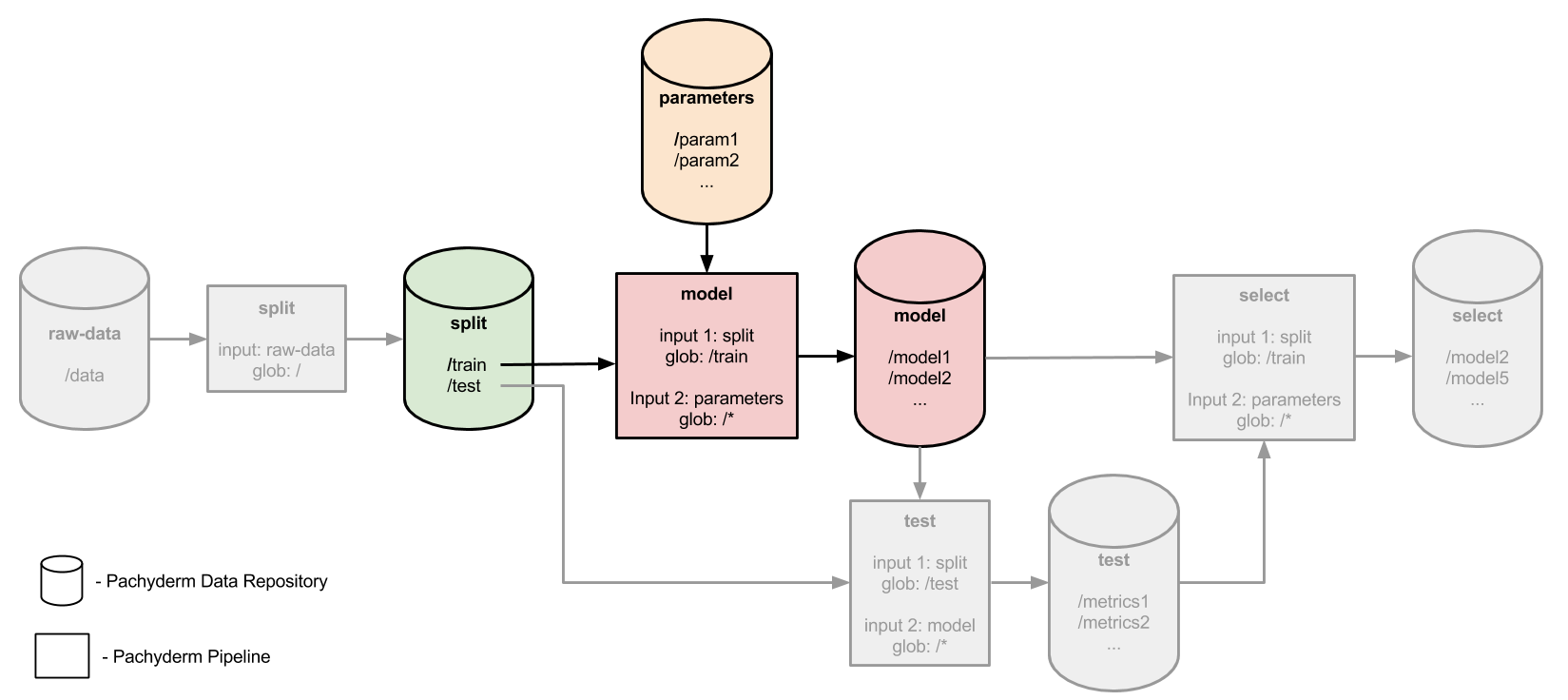

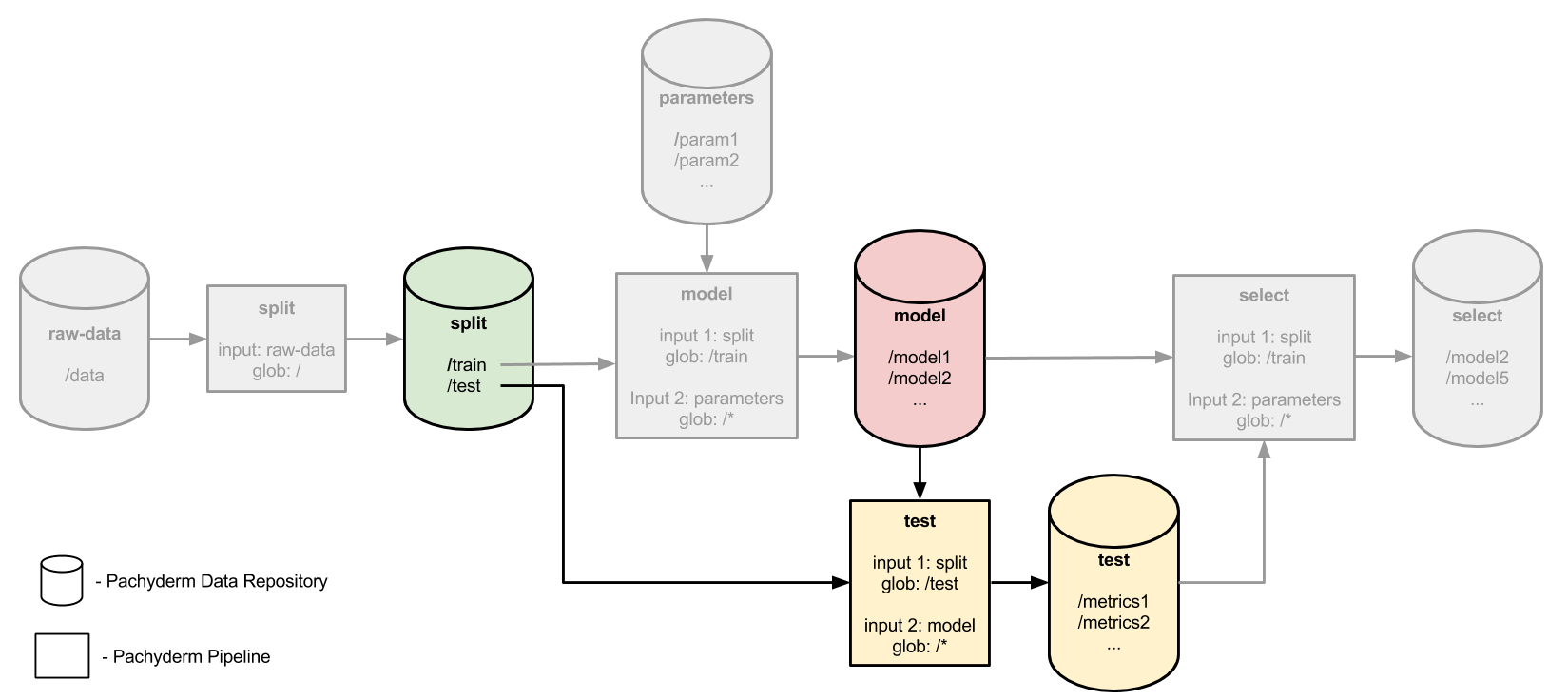

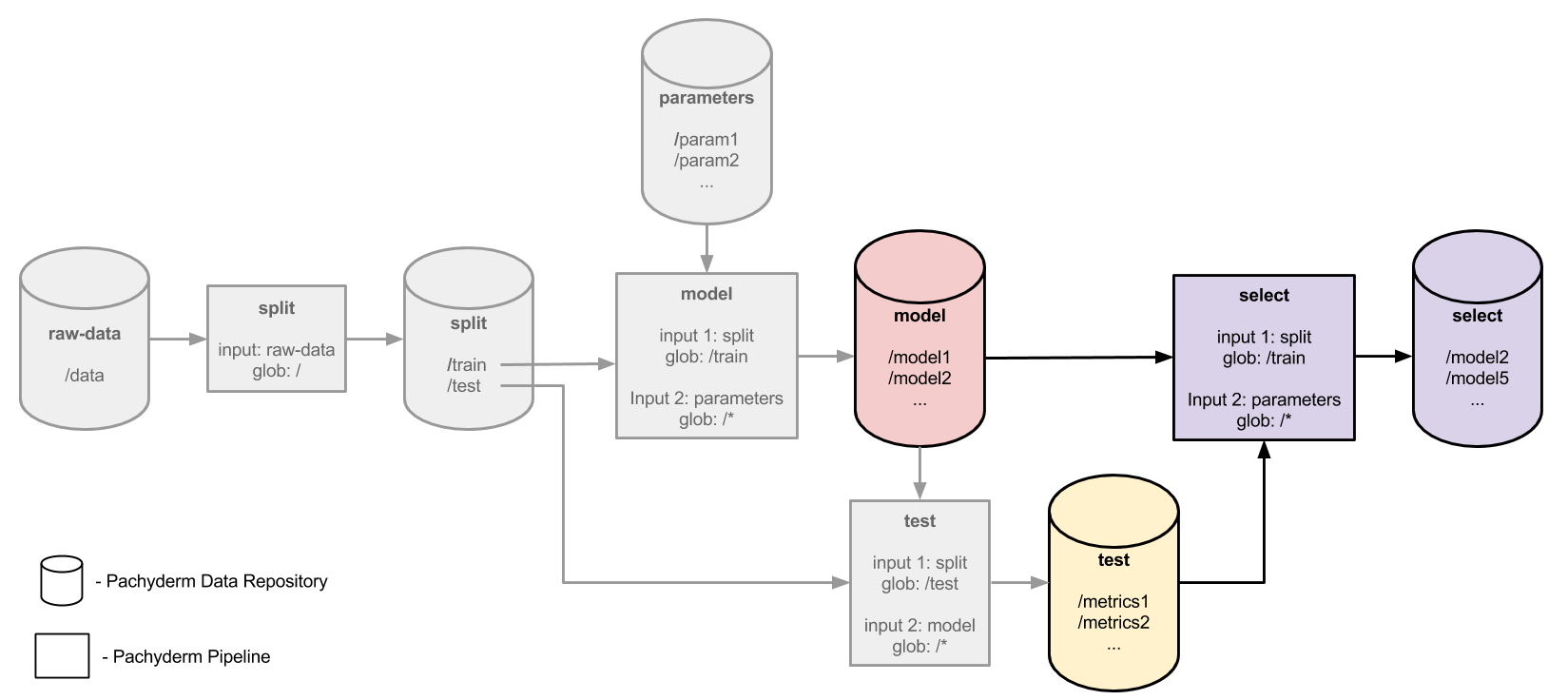

github.com/pachyderm/pachyderm@v1.13.4/examples/ml/hyperparameter/README.md (about) 1 > INFO - Pachyderm 2.0 introduces profound architectural changes to the product. As a result, our examples pre and post 2.0 are kept in two separate branches: 2 > - Branch Master: Examples using Pachyderm 2.0 and later versions - https://github.com/pachyderm/pachyderm/tree/master/examples 3 > - Branch 1.13.x: Examples using Pachyderm 1.13 and older versions - https://github.com/pachyderm/pachyderm/tree/1.13.x/examples 4 # Distributed hyperparameter tuning 5 6 This example demonstrates how you can evaluate a model or function in a distributed manner on multiple sets of parameters. In this particular case, we will evaluate many machine learning models, each configured uses different sets of parameters (aka hyperparameters), and we will output only the best performing model or models. 7 8 The models trained and evaluated in the example will attempt to predict the species of iris flowers using the iris data set, which is often used to demonstrate ML methods. The different sets of parameters used in the example are the *C* and *Gamma* parameters of an SVM machine learning model. If you aren't familiar with that model or those parameters, don't worry about them too much. The point here is that *C* and *Gamma* are parameters of this model, and we want to search over many combinations of *C* and *Gamma* to determine which combination best predicts iris flower species. 9 10 The example assumes that you have: 11 12 - A Pachyderm cluster running - see [Local Installation](https://docs.pachyderm.com/1.13.x/getting_started/local_installation/) to get up and running with a local Pachyderm cluster in just a few minutes. 13 - The `pachctl` CLI tool installed and connected to your Pachyderm cluster - see [any of our deploy docs](https://docs.pachyderm.com/1.13.x/deploy-manage/deploy/) for instructions. 14 15 ## The pipelines 16 17 The example uses 4 pipeline stages to accomplish this distributed hyperparameter tuning/search. First we will split our iris data set into a training and test data set. The training set will be used to train or fit our model with the various sets of parameters and the test set will be used later to evaluate each trained model. 18 19  20 21 Next, we will train a model for each combination of *C* and *Gamma* parameters in a `parameters` repo. The trained models will be serialized and output to the `model` repo. 22 23  24 25 In a `test` stage we will pair each trained/fit model in `model` with our test data set. Using the test data set we will generate an evaluation metric, or score, for each of the train models. 26 27  28 29 Finally, in a `select` stage we will determine which of the evaluated metrics in `test` is the best, select out the models corresponding to those metrics, and output them to the `select` repo. 30 31  32 33 ## Preparing the input data 34 35 The two input data repositories for this example are `raw-data` containing the raw iris data set and `parameters` containing all of our *C* and *Gamma* parameters. First let's create these repositories: 36 37 ```shell 38 $ pachctl create repo raw_data 39 $ pachctl create repo parameters 40 $ pachctl list repo 41 NAME CREATED SIZE 42 parameters 47 seconds ago 0B 43 raw-data 52 seconds ago 0B 44 ``` 45 46 Then, we can put our iris data set into `raw-data`. We are going to use a version of the iris data set that includes a little bit of noise to make the classification problem more difficult. This data set is included under [data/noisy_iris.csv](data/noisy_iris.csv). To commit this data set into Pachyderm: 47 48 ```shell 49 $ cd data 50 $ pachctl put file raw_data@master:iris.csv -f noisy_iris.csv 51 $ pachctl list file raw_data@master 52 NAME TYPE SIZE 53 iris.csv file 10.29KiB 54 ``` 55 56 The *C* and *Gamma* parameters that we will be searching over are included in [data/parameters](data/parameters) under two respective files. In order to process each combination of these parameters in parallel, we are going to use Pachyderm's built in splitting capability to split each parameter value into a separate file: 57 58 ```shell 59 $ cd parameters 60 $ pachctl put file parameters@master -f c_parameters.txt --split line --target-file-datums 1 61 $ pachctl put file parameters@master -f gamma_parameters.txt --split line --target-file-datums 1 62 $ pachctl list file parameters@master 63 NAME TYPE SIZE 64 c_parameters.txt dir 81B 65 gamma_parameters.txt dir 42B 66 $ pachctl list file parameters@master:c_parameters.txt 67 NAME TYPE SIZE 68 c_parameters.txt/0000000000000000 file 6B 69 c_parameters.txt/0000000000000001 file 6B 70 c_parameters.txt/0000000000000002 file 6B 71 c_parameters.txt/0000000000000003 file 6B 72 c_parameters.txt/0000000000000004 file 6B 73 c_parameters.txt/0000000000000005 file 7B 74 c_parameters.txt/0000000000000006 file 8B 75 c_parameters.txt/0000000000000007 file 8B 76 c_parameters.txt/0000000000000008 file 9B 77 c_parameters.txt/0000000000000009 file 9B 78 c_parameters.txt/000000000000000a file 10B 79 $ pachctl list file parameters@master:gamma_parameters.txt 80 NAME TYPE SIZE 81 gamma_parameters.txt/0000000000000000 file 6B 82 gamma_parameters.txt/0000000000000001 file 6B 83 gamma_parameters.txt/0000000000000002 file 6B 84 gamma_parameters.txt/0000000000000003 file 6B 85 gamma_parameters.txt/0000000000000004 file 6B 86 gamma_parameters.txt/0000000000000005 file 6B 87 gamma_parameters.txt/0000000000000006 file 6B 88 ``` 89 90 As you can see, each of the parameter files has been split into a file per line, and thus a file per parameter. This can be seen by looking at the file contents: 91 92 ```shell 93 $ pachctl get file parameters@master:c_parameters.txt/0000000000000000 94 0.031 95 $ pachctl get file parameters@master:c_parameters.txt/0000000000000001 96 0.125 97 $ pachctl get file parameters@master:c_parameters.txt/0000000000000002 98 0.500 99 ``` 100 101 For more information on splitting data files, see our [splitting data for distributed processing](https://docs.pachyderm.com/1.13.x/how-tos/splitting-data/splitting/). 102 103 ## Creating the pipelines 104 105 To create the four pipelines mentioned and illustrated above: 106 107 ```shell 108 $ cd ../../ 109 $ pachctl create pipeline -f split.json 110 $ pachctl create pipeline -f model.json 111 $ pachctl create pipeline -f test.json 112 $ pachctl create pipeline -f select.json 113 ``` 114 115 The pipelines should soon all be in the "running" state: 116 117 ```shell 118 pachctl list pipeline 119 NAME VERSION INPUT CREATED STATE / LAST JOB DESCRIPTION 120 select 1 (model:/ ⨯ test:/) 9 seconds ago running / starting A pipeline that selects the best evaluation metrics from the results of the `test` pipeline. 121 test 1 (model:/* ⨯ split:/test.csv) 9 seconds ago running / starting A pipeline that scores each of the trained models. 122 model 1 (parameters:/c_parameters.txt/* ⨯ parameters:/gamma_parameters.txt/* ⨯ raw_data:/iris.csv) 9 seconds ago running / starting A pipeline that trains the model for each combination of C and Gamma parameters. 123 split 1 raw_data:/ 9 seconds ago running / starting A pipeline that splits the `iris` data set into the `training` and `test` data sets. 124 ``` 125 126 127 And, after waiting a few minutes, you should see the successful jobs that did our distributed hyperparameter tuning: 128 129 ```shell 130 $ pachctl list job 131 ID OUTPUT COMMIT STARTED DURATION RESTART PROGRESS DL UL STATE 132 e2b75a61-13e2-4067-88b7-adec4d32f830 select/f38eae7cea574fc6a90adda706d4714e 18 seconds ago Less than a second 0 1 + 0 / 1 243.2KiB 82.3KiB success 133 4116af2b-efa5-405e-ba04-f850a656e25d test/1e379911118c4492932a2dd9eb198e9a About a minute ago About a minute 0 77 + 0 / 77 400.3KiB 924B success 134 f628028e-2c88-439e-8738-823fe0441e1b model/6a877b93e3e2445e92a11af8bde6dddf 3 minutes ago About a minute 0 77 + 0 / 77 635.1KiB 242.3KiB success 135 a2ba2024-db12-4a78-9383-82adba5a4c3d split/04955ad7fda64a66820db5578478c1d6 5 minutes ago Less than a second 0 1 + 0 / 1 10.29KiB 10.29KiB success 136 ``` 137 138 ## Looking at the results 139 140 If we look at the models that were trained based on our training data, we will see one model for each of the combinations of *C* and *Gamma* parameters: 141 142 ```shell 143 $ pachctl list file model@master 144 NAME TYPE SIZE 145 model_C0.031_G0.001.pkl file 6.908KiB 146 model_C0.031_G0.004.pkl file 6.908KiB 147 model_C0.031_G0.016.pkl file 6.908KiB 148 model_C0.031_G0.063.pkl file 6.908KiB 149 model_C0.031_G0.25.pkl file 6.908KiB 150 model_C0.031_G1.0.pkl file 6.908KiB 151 model_C0.031_G4.0.pkl file 6.908KiB 152 model_C0.125_G0.001.pkl file 4.85KiB 153 model_C0.125_G0.004.pkl file 4.85KiB 154 model_C0.125_G0.016.pkl file 4.85KiB 155 model_C0.125_G0.063.pkl file 4.85KiB 156 model_C0.125_G0.25.pkl file 4.85KiB 157 model_C0.125_G1.0.pkl file 4.85KiB 158 etc... 159 ``` 160 161 There should be 77 of these models: 162 163 ```shell 164 $ pachctl list file model@master | wc -l 165 78 166 ``` 167 168 But not all of these models are ideal for making our predictions. Our `select` pipeline stage automatically selected out the best of these models (based on the evaluation metrics generated by the `test` stage). We can see which of the models are ideal for our predictions as follows: 169 170 ```shell 171 $ pachctl list file select@master | wc -l 172 36 173 $ pachctl list file select@master 174 NAME TYPE SIZE 175 model_C0.031_G0.001.pkl file 5.713KiB 176 model_C0.031_G0.004.pkl file 5.713KiB 177 model_C0.031_G0.016.pkl file 5.713KiB 178 model_C0.031_G0.063.pkl file 5.713KiB 179 model_C0.031_G0.25.pkl file 5.713KiB 180 model_C0.031_G1.0.pkl file 5.713KiB 181 model_C0.031_G4.0.pkl file 5.713KiB 182 etc... 183 ``` 184 185 *Note* - Here, 36 of the 77 models were selected as ideal. Due to the fact that we are randomly shuffling our training/test data, your results may vary slightly. 186 187 *Note* - The pipeline we've built here is very easy to generalize for any sort of parameter space exploration. As long as you break up your parameters into individual files (as shown above), you can test the whole parameter space in a massively distributed way and simply pick out the best results.