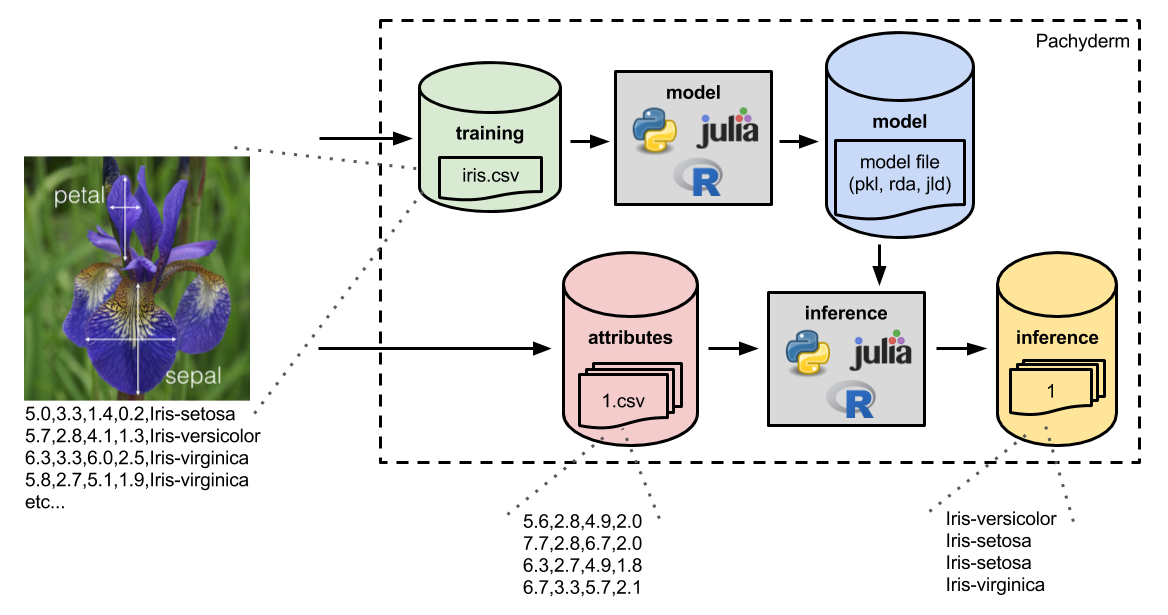

github.com/pachyderm/pachyderm@v1.13.4/examples/ml/iris/README.md (about) 1 > INFO - Pachyderm 2.0 introduces profound architectural changes to the product. As a result, our examples pre and post 2.0 are kept in two separate branches: 2 > - Branch Master: Examples using Pachyderm 2.0 and later versions - https://github.com/pachyderm/pachyderm/tree/master/examples 3 > - Branch 1.13.x: Examples using Pachyderm 1.13 and older versions - https://github.com/pachyderm/pachyderm/tree/1.13.x/examples 4 # ML pipeline for Iris Classification - R, Python, or Julia 5 6  7 8 This machine learning pipeline implements a "hello world" ML pipeline that trains a model to predict the species of Iris flowers (based on measurements of those flowers) and then utilizes that trained model to perform predictions. The pipeline can be deployed with R-based components, Python-based components, or Julia-based components. In fact, you can even deploy an R-based pipeline, for example, and then switch out the R pipeline stages with Julia or Python pipeline stages. This illustrates the language agnostic nature of Pachyderm's containerized pipelines. 9 10 1. [Make sure Pachyderm is running](README.md#1-make-sure-pachyderm-is-running) 11 2. [Create the input "data repositories"](README.md#2-create-the-input-data-repositories) 12 3. [Commit the training data set into Pachyderm](README.md#3-commit-the-training-data-set-into-pachyderm) 13 4. [Create the training pipeline](README.md#4-create-the-training-pipeline) 14 5. [Commit input attributes](README.md#5-commit-input-attributes) 15 6. [Create the inference pipeline](README.md#6-create-the-inference-pipeline) 16 7. [Examine the results](README.md#7-examine-the-results) 17 18 Bonus: 19 20 8. [Parallelize the inference](README.md#8-parallelize-the-inference) 21 9. [Update the model training](README.md#9-update-the-model-training) 22 10. [Update the training data set](README.md#10-update-the-training-data-set) 23 11. [Examine pipeline provenance](README.md#11-examine-pipeline-provenance) 24 25 Finally, we provide some [Resources](README.md#resources) for you for further exploration. 26 27 ## Getting Started 28 29 - Clone this repo. 30 - Install Pachyderm as described in [Local Installation](https://docs.pachyderm.com/1.13.x/getting_started/local_installation/). 31 32 ## 1. Make sure Pachyderm is running 33 34 You should be able to connect to your Pachyderm cluster via the `pachctl` CLI. To verify that everything is running correctly on your machine, you should be able to run the following with similar output: 35 36 ``` 37 $ pachctl version 38 COMPONENT VERSION 39 pachctl 1.7.0 40 pachd 1.7.0 41 ``` 42 43 ## 2. Create the input data repositories 44 45 On the Pachyderm cluster running in your remote machine, we will need to create the two input data repositories (for our training data and input iris attributes). To do this run: 46 47 ``` 48 $ pachctl create repo training 49 $ pachctl create repo attributes 50 ``` 51 52 As a sanity check, we can list out the current repos, and you should see the two repos you just created: 53 54 ``` 55 $ pachctl list repo 56 NAME CREATED SIZE 57 attributes 5 seconds ago 0 B 58 training 8 seconds ago 0 B 59 ``` 60 61 ## 3. Commit the training data set into pachyderm 62 63 We have our training data repository, but we haven't put our training data set into this repository yet. The training data set, `iris.csv`, is included here in the [data](data) directory. 64 65 To get this data into Pachyderm, navigate to this directory and run: 66 67 ``` 68 $ cd data 69 $ pachctl put file training@master -f iris.csv 70 ``` 71 72 Then, you should be able to see the following: 73 74 ``` 75 $ pachctl list repo 76 NAME CREATED SIZE 77 training 3 minutes ago 4.444 KiB 78 attributes 3 minutes ago 0 B 79 $ pachctl list file training@master 80 NAME TYPE SIZE 81 iris.csv file 4.444 KiB 82 ``` 83 84 ## 4. Create the training pipeline 85 86 Next, we can create the `model` pipeline stage to process the data in the training repository. To do this, we just need to provide Pachyderm with a JSON pipeline specification that tells Pachyderm how to process the data. This `model` pipeline can be specified to train a model with R, Python, or Julia and with a variety of types of models. The following Docker images are available for the training: 87 88 - `pachyderm/iris-train:python-svm` - Python-based SVM implemented in [python/iris-train-python-svm/pytrain.py](python/iris-train-python-svm/pytrain.py) 89 - `pachyderm/iris-train:python-lda` - Python-based LDA implemented in [python/iris-train-python-lda/pytrain.py](python/iris-train-python-lda/pytrain.py) 90 - `pachyderm/iris-train:rstats-svm` - R-based SVM implemented in [rstats/iris-train-r-svm/train.R](rstats/iris-train-r-svm/train.R) 91 - `pachyderm/iris-train:rstats-lda` - R-based LDA implemented in [rstats/iris-train-r-lda/train.R](rstats/iris-train-r-lda/train.R) 92 - `pachyderm/iris-train:julia-tree` - Julia-based decision tree implemented in [julia/iris-train-julia-tree/train.jl](julia/iris-train-julia-tree/train.jl) 93 - `pachyderm/iris-train:julia-forest` - Julia-based random forest implemented in [julia/iris-train-julia-forest/train.jl](julia/iris-train-julia-forest/train.jl) 94 95 You can utilize any one of these images in your model training by using specification corresponding to the language of interest, `<julia, python, rstats>_train.json`, and making sure that the particular image is specified in the `image` field. For example, if we wanted to train a random forest model with Julia, we would use [julia_train.json](julia_train.json) and make sure that the `image` field read as follows: 96 97 ``` 98 "transform": { 99 "image": "pachyderm/iris-train:julia-forest", 100 ... 101 ``` 102 103 Once you have specified your choice of modeling in the pipeline spec (the below output was generated with the Julia images, but you would see similar output with the Python/R equivalents), create the training pipeline: 104 105 ``` 106 $ cd .. 107 $ pachctl create pipeline -f <julia, python, rstats>_train.json 108 ``` 109 110 Immediately you will notice that Pachyderm has kicked off a job to perform the model training: 111 112 ``` 113 $ pachctl list job 114 ID OUTPUT COMMIT STARTED DURATION RESTART PROGRESS DL UL STATE 115 1a8225537992422f87c8468a16d0718b model/6e7cf823910b4ae68c8d337614654564 41 seconds ago - 0 0 + 0 / 1 0B 0B running 116 ``` 117 118 This job should run for about a minute (it will actually run much faster after this, but we have to pull the Docker image on the first run). After your model has successfully been trained, you should see: 119 120 ``` 121 $ pachctl list job 122 ID OUTPUT COMMIT STARTED DURATION RESTART PROGRESS DL UL STATE 123 1a8225537992422f87c8468a16d0718b model/6e7cf823910b4ae68c8d337614654564 2 minutes ago About a minute 0 1 + 0 / 1 4.444KiB 49.86KiB success 124 $ pachctl list repo 125 NAME CREATED SIZE 126 model 2 minutes ago 43.67 KiB 127 training 8 minutes ago 4.444 KiB 128 attributes 7 minutes ago 0 B 129 $ pachctl list file model@master 130 NAME TYPE SIZE 131 model.jld file 43.67 KiB 132 ``` 133 134 (This is the output for the Julia code. Python and R will have similar output, but the file types will be different.) 135 136 ## 5. Commit input attributes 137 138 Great! We now have a trained model that will infer the species of iris flowers. Let's commit some iris attributes into Pachyderm that we would like to run through the inference. We have a couple examples under [test](data/test). Feel free to use these, find your own, or even create your own. To commit our samples (assuming you have cloned this repo on the remote machine), you can run: 139 140 ``` 141 $ cd data/test/ 142 $ pachctl put file attributes@master -r -f . 143 ``` 144 145 You should then see: 146 147 ``` 148 $ pachctl list file attributes@master 149 NAME TYPE SIZE 150 1.csv file 16 B 151 2.csv file 96 B 152 ``` 153 154 ## 6. Create the inference pipeline 155 156 We have another JSON blob, `<julia, python, rstats>_infer.json`, that will tell Pachyderm how to perform the processing for the inference stage. This is similar to our last JSON specification except, in this case, we have two input repositories (the `attributes` and the `model`) and we are using a different Docker image. Similar to the training pipeline stage, this can be created in R, Python, or Julia. However, you should create it in the language that was used for training (because the model output formats aren't standardized across the languages). The available docker images are as follows: 157 158 - `pachyderm/iris-infer:python` - Python-based inference implemented in [python/iris-infer-python/infer.py](python/iris-infer-python/pyinfer.py) 159 - `pachyderm/iris-infer:rstats` - R-based inferenced implemented in [rstats/iris-infer-rstats/infer.R](rstats/iris-infer-r/infer.R) 160 - `pachyderm/iris-infer:julia` - Julia-based inference implemented in [julia/iris-infer-julia/infer.jl](julia/iris-infer-julia/infer.jl) 161 162 Then, to create the inference stage, we simply run: 163 164 ``` 165 $ cd ../../ 166 $ pachctl create pipeline -f <julia, python, rstats>_infer.json 167 ``` 168 169 where `<julia, python, rstats>` is replaced by the language you are using. This will immediately kick off an inference job, because we have committed unprocessed reviews into the `reviews` repo. The results will then be versioned in a corresponding `inference` data repository: 170 171 ``` 172 $ pachctl list job 173 ID OUTPUT COMMIT STARTED DURATION RESTART PROGRESS DL UL STATE 174 a139434b1b554443aceaf1424f119242 inference/15ef7bfe8e7d4df18a77f35b0019e119 8 seconds ago - 0 0 + 0 / 2 0B 0B running 175 1a8225537992422f87c8468a16d0718b model/6e7cf823910b4ae68c8d337614654564 6 minutes ago About a minute 0 1 + 0 / 1 4.444KiB 49.86KiB success 176 $ pachctl list job 177 ID OUTPUT COMMIT STARTED DURATION RESTART PROGRESS DL UL STATE 178 a139434b1b554443aceaf1424f119242 inference/15ef7bfe8e7d4df18a77f35b0019e119 2 minutes ago 2 minutes 0 2 + 0 / 2 99.83KiB 100B success 179 1a8225537992422f87c8468a16d0718b model/6e7cf823910b4ae68c8d337614654564 9 minutes ago About a minute 0 1 + 0 / 1 4.444KiB 49.86KiB success 180 $ pachctl list repo 181 NAME CREATED SIZE 182 inference About a minute ago 100 B 183 attributes 13 minutes ago 112 B 184 model 8 minutes ago 43.67 KiB 185 training 13 minutes ago 4.444 KiB 186 ``` 187 188 ## 7. Examine the results 189 190 We have created results from the inference, but how do we examine those results? There are multiple ways, but an easy way is to just "get" the specific files out of Pachyderm's data versioning: 191 192 ``` 193 $ pachctl list file inference@master 194 NAME TYPE SIZE 195 1 file 15 B 196 2 file 85 B 197 $ pachctl get file inference@master:1 198 Iris-virginica 199 $ pachctl get file inference@master:2 200 Iris-versicolor 201 Iris-virginica 202 Iris-virginica 203 Iris-virginica 204 Iris-setosa 205 Iris-setosa 206 ``` 207 208 Here we can see that each result file contains a predicted iris flower species corresponding to each set of input attributes. 209 210 ## Bonus exercises 211 212 ### 8. Parallelize the inference 213 214 You may have noticed that our pipeline specs included a `parallelism_spec` field. This tells Pachyderm how to parallelize a particular pipeline stage. Let's say that in production we start receiving a huge number of attribute files, and we need to keep up with our inference. In particular, let's say we want to spin up 10 inference workers to perform inference in parallel. 215 216 This actually doesn't require any change to our code. We can simply change our `parallelism_spec` in `<julia, python, rstats>_infer.json` to: 217 218 ``` 219 "parallelism_spec": { 220 "constant": "5" 221 }, 222 ``` 223 224 Pachyderm will then spin up 5 inference workers, 225 each running our same script, 226 to perform inference in parallel. 227 228 You can edit the pipeline in-place and then examine the pipeline to see how many workers it has. 229 230 `pachctl edit` will use your default editor, 231 which can be customized using the `EDITOR` environment variable, 232 to edit a pipeline specification in place. 233 Run this command and change the `parallelism_spec` from `1` to `5`. 234 235 ``` 236 $ pachctl edit pipeline inference 237 ``` 238 239 Then inspect the pipeline to see how many workers it has. 240 Here's an example of a python version of the inference pipeline. 241 See that `Workers Available` is `5/5`. 242 243 ``` 244 $ pachctl inspect pipeline inference 245 Name: inference 246 Description: An inference pipeline that makes a prediction based on the trained model by using a Python script. 247 Created: 57 seconds ago 248 State: running 249 Reason: 250 Workers Available: 5/5 251 Stopped: false 252 Parallelism Spec: constant:5 253 254 255 Datum Timeout: (duration: nil Duration) 256 Job Timeout: (duration: nil Duration) 257 Input: 258 { 259 "cross": [ 260 { 261 "pfs": { 262 "name": "attributes", 263 "repo": "attributes", 264 "branch": "master", 265 "glob": "/*" 266 } 267 }, 268 { 269 "pfs": { 270 "name": "model", 271 "repo": "model", 272 "branch": "master", 273 "glob": "/" 274 } 275 } 276 ] 277 } 278 279 280 Output Branch: master 281 Transform: 282 { 283 "image": "pachyderm/iris-infer:python", 284 "cmd": [ 285 "python3", 286 "/code/pyinfer.py", 287 "/pfs/model/", 288 "/pfs/attributes/", 289 "/pfs/out/" 290 ] 291 } 292 ``` 293 294 If you have Kubernetes access to your cluster, 295 you can use a variation of `kubectl get pods` to also see all 5 workers. 296 297 ### 9. Update the model training 298 299 Let's now imagine that we want to update our model from random forest to decision tree, SVM to LDA, etc. To do this, modify the image tag in `train.json`. For example, to run a Julia-based decision tree instead of a random forest: 300 301 ``` 302 "image": "pachyderm/iris-train:julia-tree", 303 ``` 304 305 Once you modify the spec, you can update the pipeline by running `pachctl update pipeline ...`. By default, Pachyderm will then utilize this updated model on any new versions of our training data. However, let's say that we want to update the model and reprocess the training data that is already in the `training` repo. To do this we will run the update with the `--reprocess` flag: 306 307 ``` 308 $ pachctl update pipeline -f <julia, python, rstats>_train.json --reprocess 309 ``` 310 311 Pachyderm will then automatically kick off new jobs to retrain our model with the new model code and update our inferences: 312 313 ``` 314 $ pachctl list job 315 ID OUTPUT COMMIT STARTED DURATION RESTART PROGRESS DL UL STATE 316 95ffe60f94914522bccfff52e9f8d064 inference/be361c6b2c294aaea72ed18cbcfda644 6 seconds ago - 0 0 + 0 / 0 0B 0B starting 317 81cd82538e584c3d9edb901ab62e8f60 model/adb293f8a4604ed7b081c1ff030c0480 6 seconds ago - 0 0 + 0 / 1 0B 0B running 318 aee1e950a22547d8bfaea397fc6bd60a inference/2e9d4707aadc4a9f82ef688ec11505c4 6 seconds ago Less than a second 0 0 + 2 / 2 0B 0B success 319 cffd4d2cbd494662814edf4c80eb1524 inference/ef0904d302ae4116aa8e44e73fa2b541 4 minutes ago 17 seconds 0 0 + 2 / 2 0B 0B success 320 5f672837be1844f58900b9cb5b984af8 inference/5bbf6da576694d2480add9bede69a0af 4 minutes ago 17 seconds 0 0 + 2 / 2 0B 0B success 321 a139434b1b554443aceaf1424f119242 inference/15ef7bfe8e7d4df18a77f35b0019e119 9 minutes ago 2 minutes 0 2 + 0 / 2 99.83KiB 100B success 322 1a8225537992422f87c8468a16d0718b model/6e7cf823910b4ae68c8d337614654564 16 minutes ago About a minute 0 1 + 0 / 1 4.444KiB 49.86KiB success 323 ``` 324 325 ### 10. Update the training data set 326 327 Let's say that one or more observations in our training data set were corrupt or unwanted. Thus, we want to update our training data set. To simulate this, go ahead and open up `iris.csv` (e.g., with `vim`) and remove a couple of the rows (non-header rows). Then, let's replace our training set (`-o` tells Pachyderm to overwrite the file): 328 329 ``` 330 $ pachctl put file training@master -o -f ./data/iris.csv 331 ``` 332 333 Immediately, Pachyderm "knows" that the data has been updated, and it starts new jobs to update the model and inferences. 334 335 ### 11. Examine pipeline provenance 336 337 Let's say that we have updated our model or training set in one of the above scenarios (step 11 or 12). Now we have multiple inferences that were made with different models and/or training data sets. How can we know which results came from which specific models and/or training data sets? This is called "provenance," and Pachyderm gives it to you out of the box. 338 339 Suppose we have run the following jobs: 340 341 ``` 342 $ pachctl list job 343 95ffe60f94914522bccfff52e9f8d064 inference/be361c6b2c294aaea72ed18cbcfda644 3 minutes ago 3 minutes 0 2 + 0 / 2 72.61KiB 100B success 344 81cd82538e584c3d9edb901ab62e8f60 model/adb293f8a4604ed7b081c1ff030c0480 3 minutes ago About a minute 0 1 + 0 / 1 4.444KiB 36.25KiB success 345 aee1e950a22547d8bfaea397fc6bd60a inference/2e9d4707aadc4a9f82ef688ec11505c4 3 minutes ago Less than a second 0 0 + 2 / 2 0B 0B success 346 cffd4d2cbd494662814edf4c80eb1524 inference/ef0904d302ae4116aa8e44e73fa2b541 7 minutes ago 17 seconds 0 0 + 2 / 2 0B 0B success 347 5f672837be1844f58900b9cb5b984af8 inference/5bbf6da576694d2480add9bede69a0af 7 minutes ago 17 seconds 0 0 + 2 / 2 0B 0B success 348 a139434b1b554443aceaf1424f119242 inference/15ef7bfe8e7d4df18a77f35b0019e119 12 minutes ago 2 minutes 0 2 + 0 / 2 99.83KiB 100B success 349 1a8225537992422f87c8468a16d0718b model/6e7cf823910b4ae68c8d337614654564 19 minutes ago About a minute 0 1 + 0 / 1 4.444KiB 49.86KiB success 350 ``` 351 352 If we want to know which model and training data set was used for the latest inference, commit id `be361c6b2c294aaea72ed18cbcfda644`, we just need to inspect the particular commit: 353 354 ``` 355 $ pachctl inspect commit inference@be361c6b2c294aaea72ed18cbcfda644 356 Commit: inference/be361c6b2c294aaea72ed18cbcfda644 357 Parent: 2e9d4707aadc4a9f82ef688ec11505c4 358 Started: 3 minutes ago 359 Finished: 39 seconds ago 360 Size: 100B 361 Provenance: attributes/2757a902762e456a89852821069a33aa model/adb293f8a4604ed7b081c1ff030c0480 spec/d64feabfc97d41db849a50e8613816b5 spec/91e0832aec7141a4b20e832553afdffb training/76e4250d5e584f1f9c2505ffd763e64a 362 ``` 363 364 The `Provenance` tells us exactly which model and training set was used (along with which commit to attributes triggered the inference). For example, if we wanted to see the exact model used, we would just need to reference commit `adb293f8a4604ed7b081c1ff030c0480` to the `model` repo: 365 366 ``` 367 $ pachctl list file model@adb293f8a4604ed7b081c1ff030c0480 368 NAME TYPE SIZE 369 model.pkl file 3.448KiB 370 model.txt file 226B 371 ``` 372 373 We could get this model to examine it, rerun it, revert to a different model, etc. 374 375 ## Resources 376 377 - Join the [Pachyderm Slack team](http://slack.pachyderm.io/) to ask questions, get help, and talk about production deploys. 378 - Follow [Pachyderm on Twitter](https://twitter.com/pachyderminc), 379 - Find [Pachyderm on GitHub](https://github.com/pachyderm/pachyderm), and 380 - [Spin up Pachyderm](https://docs.pachyderm.com/1.13.x/getting_started/) by running just a few commands to try this and [other examples](https://docs.pachyderm.com/1.13.x/examples/examples/) locally.