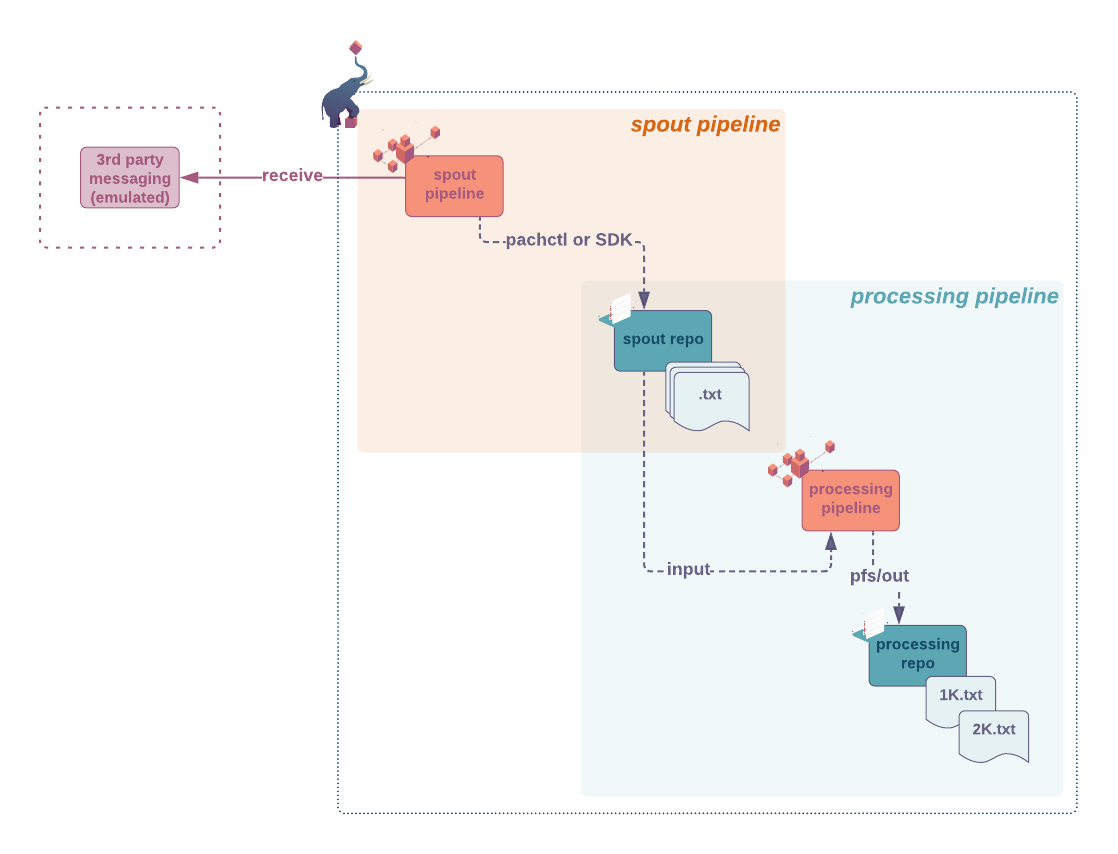

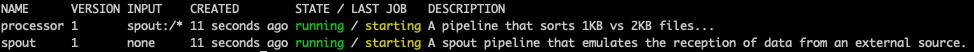

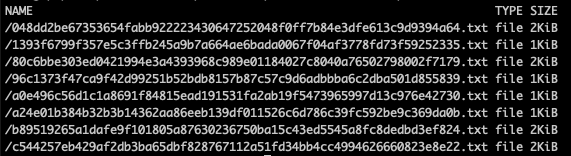

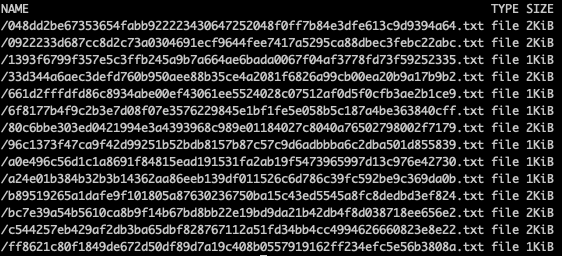

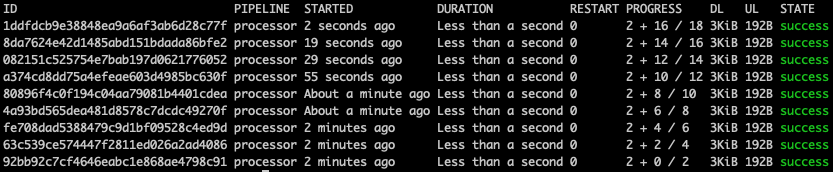

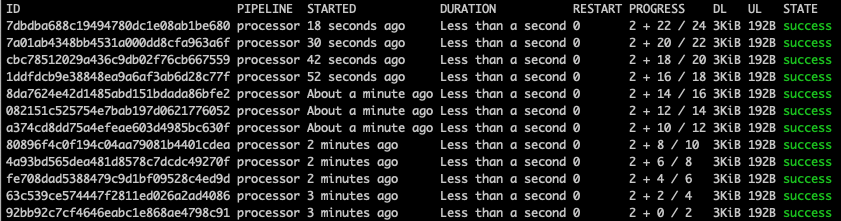

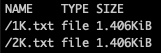

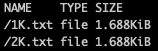

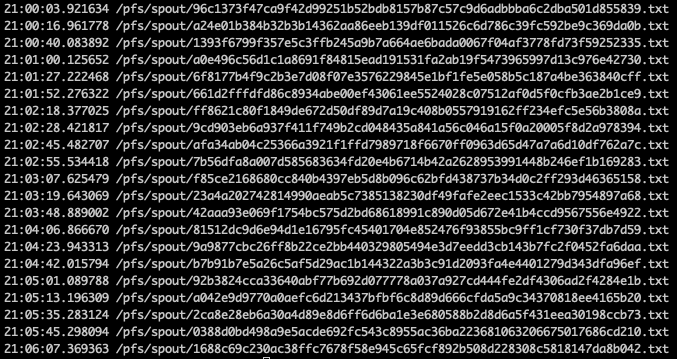

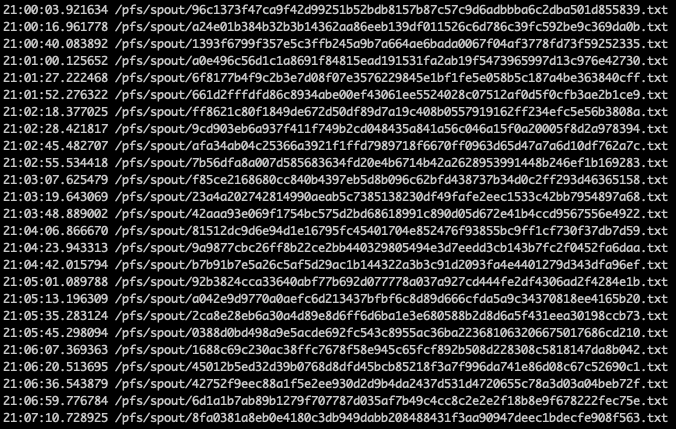

github.com/pachyderm/pachyderm@v1.13.4/examples/spouts/spout101/README.md (about) 1 > INFO - Pachyderm 2.0 introduces profound architectural changes to the product. As a result, our examples pre and post 2.0 are kept in two separate branches: 2 > - Branch Master: Examples using Pachyderm 2.0 and later versions - https://github.com/pachyderm/pachyderm/tree/master/examples 3 > - Branch 1.13.x: Examples using Pachyderm 1.13 and older versions - https://github.com/pachyderm/pachyderm/tree/1.13.x/examples 4 5 # Spout Pipelines - An introductory example 6 > This new implementation of the spout functionality is available in version **1.12 and higher**. 7 8 9 ## Intro 10 A spout is a type of pipeline that ingests 11 streaming data (message queue, database transactions logs, 12 event notifications... ), 13 acting as **a bridge 14 between an external stream of data and Pachyderm's repo**. 15 16 For those familiar with enterprise integration patterns, 17 a Pachyderm spout implements the 18 *[Polling Consumer](https://www.enterpriseintegrationpatterns.com/patterns/messaging/PollingConsumer.html)* 19 (subscribes to a stream of data, 20 reads its published messages, 21 then push them to -in our case- the spout's output repository). 22 23 For more information about spout pipelines, 24 we recommend to read the following page in our documentation: 25 26 - [Spout](https://docs.pachyderm.com/1.13.x/concepts/pipeline-concepts/pipeline/spout/) concept. 27 - [Spout](https://docs.pachyderm.com/1.13.x/reference/pipeline_spec/#spout-optional) configuration 28 29 30 In this example, we have emulated the reception 31 of messages from a third-party messaging system 32 to focus on the specificities of the spout pipeline. 33 34 Note that we used [`python-pachyderm`](https://github.com/pachyderm/python-pachyderm)'s Client to connect to Pachyderm's API. 35  36 Feel free to explore more of our examples 37 to discover how we used spout to listen 38 for new S3 objects notifications via an Amazon™ SQS queue 39 or connected to an IMAP email account 40 to analyze the polarity of its emails. 41 42 ## Getting ready 43 ***Prerequisite*** 44 - A workspace on [Pachyderm Hub](https://docs.pachyderm.com/1.13.x/hub/hub_getting_started/) (recommended) or Pachyderm running [locally](https://docs.pachyderm.com/1.13.x/getting_started/local_installation/). 45 - [pachctl command-line ](https://docs.pachyderm.com/1.13.x/getting_started/local_installation/#install-pachctl) installed, and your context created (i.e., you are logged in) 46 47 ***Getting started*** 48 - Clone this repo. 49 - Make sure Pachyderm is running. You should be able to connect to your Pachyderm cluster via the `pachctl` CLI. 50 Run a quick: 51 ```shell 52 $ pachctl version 53 54 COMPONENT VERSION 55 pachctl 1.12.0 56 pachd 1.12.0 57 ``` 58 Ideally, have your pachctl and pachd versions match. At a minimum, you should always use the same major & minor versions of your pachctl and pachd. 59 60 ## Example - Spout 101 61 ***Goal*** 62 In this example, 63 we will keep generating two random strings, 64 one of 1KB and one of 2KB, 65 at intervals varying between 10s and 30s. 66 Our spout pipeline will actively receive 67 those events and commit them as text files 68 to the output repo 69 using the **put file** command 70 of `pachyderm-python`'s library. 71 A second pipeline will then process those commits 72 and log an entry in a separate log file 73 depending on their size. 74 75 76 1. **Pipeline input repository**: None 77 78 1. **Spout and processing pipelines**: [`spout.json`](./pipelines/spout.json) polls and commits to its output repo using `pachctl put file` from the pachyderm-python library. [`processor.json`](./pipelines/processor.json) then reads the files from its spout input repo and log their content separately depending on their size. 79 80 > Have a quick look at the source code of our spout pipeline in [`./src/consumer/main.py`](./src/consumer/main.py) and notice that we used `client = python_pachyderm.Client()` to connect to pachd and `client.put_file_bytes` to write files to the spout output repo. 81 82 83 1. **Pipeline output repository**: `spout` will contain one commit per set of received messages. Each message has been written to a txt file named after its hash (for uniqueness). `processor` will contain two files (1K.txt and 2K.txt) listing the messages received according to their size. 84 85 86 ***Example walkthrough*** 87 88 1. We have a Docker Hub image of this example ready for you. 89 However, you can choose to build your own and push it to your repository. 90 91 In the `examples/spouts/spout101` directory, 92 make sure to update the 93 `CONTAINER_TAG` in the `Makefile` accordingly 94 as well as your pipelines' specifications, 95 then run: 96 ```shell 97 $ make docker-image 98 ``` 99 > Need a refresher on building, tagging, pushing your image on Docker Hub? Take a look at this [how-to](https://docs.pachyderm.com/1.13.x/how-tos/developer-workflow/working-with-pipelines/). 100 101 1. Let's deploy our spout and processing pipelines: 102 103 Update the `image` field of the `transform` attribute in your pipelines specifications `./pipelines/spout.json` and `./pipelines/processor.json`. 104 105 In the `examples/spouts/spout101` directory, run: 106 ```shell 107 $ pachctl create pipeline -f ./pipelines/spout.json 108 $ pachctl create pipeline -f ./pipelines/processor.json 109 ``` 110 Or, run the following target: 111 ```shell 112 $ make deploy 113 ``` 114 Your pipelines should all be running: 115  116 117 1. Now that the spout pipeline is up, check its output repo once or twice. 118 You should be able to see that new commits are coming in. 119 120 ```shell 121 $ pachctl list file spout@master 122 ``` 123  124 125 and some time later... 126 127  128 129 Each of those commits triggers a job in the `processing` pipeline: 130 ```shell 131 $ pachctl list job 132 ``` 133 134  135 136 and... 137 138  139 140 1. Take a look at the output repository of your second pipeline: `processor`: 141 ```shell 142 $ pachctl list file processor@master 143 ``` 144 145  146 147 New entry keep being added to each of the two log files: 148 149  150 151 Zoom into one of them: 152 ```shell 153 $ pachctl get file processor@master:/1K.txt 154 ``` 155  156 157 ... 158 159  160 161 That is it. 162 163 1. When you are done, think about deleting your pipelines. 164 Remember, a spout pipeline keeps running: 165 166 In the `examples/spouts/spout101` directory, run:. 167 ```shell 168 $ pachctl delete all 169 ``` 170 You will be prompted to make sure the delete is intentional. Yes it is. 171 172 > Hub users, try `pachctl delete pipeline --all` and `pachctl delete repo --all`. 173 174 175 176 A final check at your pipelines: the list should be empty. You are good to go. 177 ```shell 178 $ pachctl list pipeline 179 ```