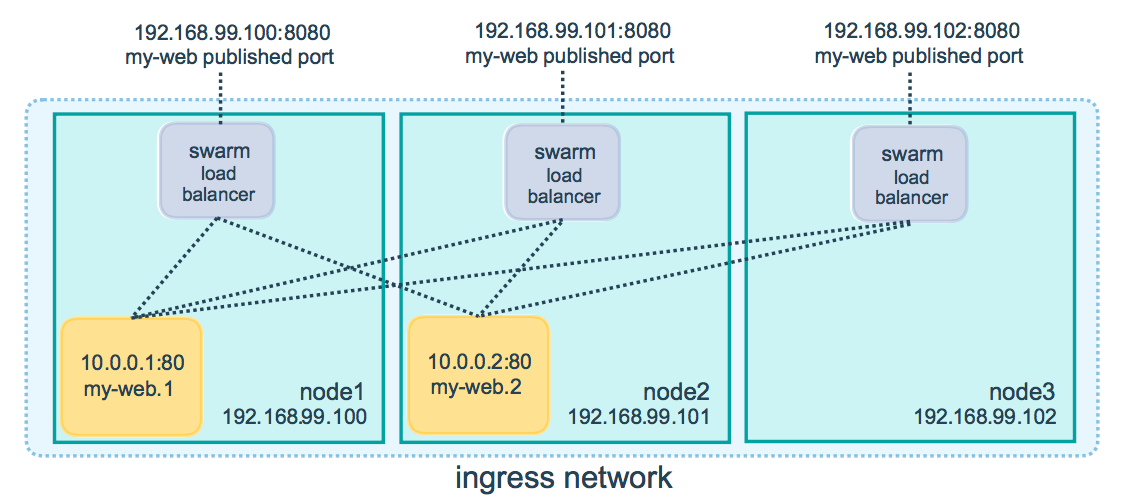

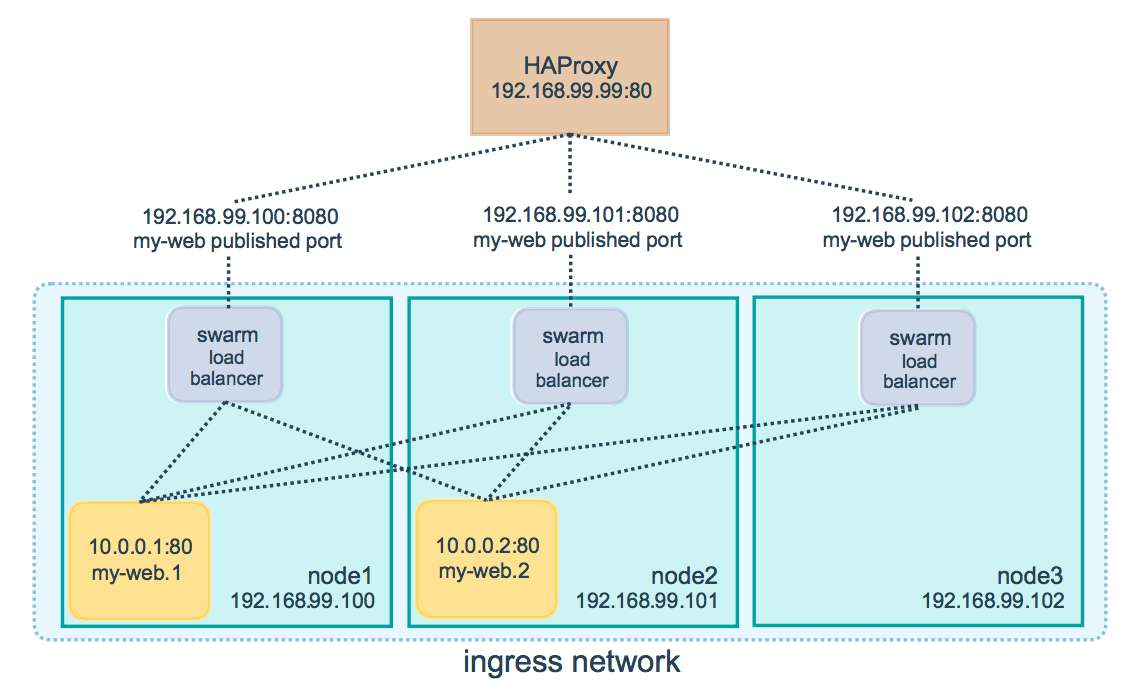

<!--[metadata]>
+++
title = "Use swarm mode routing mesh"
description = "Use the routing mesh to publish services externally to a swarm"
keywords = ["guide", "swarm mode", "swarm", "network", "ingress", "routing mesh"]
[menu.main]
identifier="ingress-guide"
parent="engine_swarm"
weight=17
+++
<![end-metadata]-->

# Use swarm mode routing mesh

Docker Engine swarm mode makes it easy to publish ports for services to make
them available to resources outside the swarm. All nodes participate in an
ingress **routing mesh**. The routing mesh enables each node in the swarm to
accept connections on published ports for any service running in the swarm, even
if there's no task running on the node. The routing mesh routes every incoming
request on a published port, whichever available node receives it, to an active
container.

To use the ingress network in the swarm, open the following ports between the
swarm nodes before you enable swarm mode:

* Port `7946` TCP/UDP for container network discovery.
* Port `4789` UDP for the container ingress network.

You must also open the published port between the swarm nodes and any external
resources, such as an external load balancer, that require access to the port.

## Publish a port for a service

Use the `--publish` flag to publish a port when you create a service:

```bash
$ docker service create \
  --name <SERVICE-NAME> \
  --publish <PUBLISHED-PORT>:<TARGET-PORT> \
  <IMAGE>
```

The `<TARGET-PORT>` is the port where the container listens.
The `<PUBLISHED-PORT>` is the port where the swarm makes the service available.
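Every node therefore needs both the routing mesh ports and each service's `<PUBLISHED-PORT>` open. How you open them depends on your firewall. As one illustration only, the sketch below assumes Ubuntu hosts that use `ufw`; the function name `swarm_mesh_ufw_rules` is hypothetical and not part of Docker. It prints the `ufw` commands rather than running them, so you can review the output and then run it as root (for example, by piping it to `sudo sh`).

```bash
# Hypothetical helper (not part of Docker): print the ufw commands that open
# the routing mesh ports on a swarm node, plus any published service ports.
# Assumes the node uses ufw as its firewall; nothing is executed here.
swarm_mesh_ufw_rules() {
  echo "ufw allow 7946/tcp"   # container network discovery
  echo "ufw allow 7946/udp"   # container network discovery
  echo "ufw allow 4789/udp"   # container ingress network
  # Each argument is a <PUBLISHED-PORT>, for example 8080.
  for port in "$@"; do
    echo "ufw allow ${port}/tcp"
  done
}

# Print the rules for a service published on port 8080.
swarm_mesh_ufw_rules 8080
```

A bare `ufw allow <port>` rule accepts traffic from any source address. If only a load balancer should reach the published port, restrict that rule to the load balancer's address instead.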

For example, the following command publishes port 80 in the nginx container to
port 8080 for any node in the swarm:

```bash
$ docker service create \
  --name my-web \
  --publish 8080:80 \
  --replicas 2 \
  nginx
```

When you access port 8080 on any node, the swarm load balancer routes your
request to an active container.

The routing mesh listens on the published port for any IP address assigned to
the node. For externally routable IP addresses, the port is available from
outside the host. For all other IP addresses, the port is only accessible from
within the host.

You can publish a port for an existing service using the following command:

```bash
$ docker service update \
  --publish-add <PUBLISHED-PORT>:<TARGET-PORT> \
  <SERVICE>
```

You can use `docker service inspect` to view the service's published port. For
instance:

```bash
$ docker service inspect --format="{{json .Endpoint.Spec.Ports}}" my-web

[{"Protocol":"tcp","TargetPort":80,"PublishedPort":8080}]
```

The output shows the `<TARGET-PORT>` where the containers listen and the
`<PUBLISHED-PORT>` where nodes listen for requests for the service.

## Configure an external load balancer

You can configure an external load balancer to route requests to a swarm
service. For example, you could configure [HAProxy](http://www.haproxy.org) to
balance requests to an nginx service published to port 8080.

In this case, port 8080 must be open between the load balancer and the nodes in
the swarm. The swarm nodes can reside on a private network that is accessible to
the proxy server, but that is not publicly accessible.

You can configure the load balancer to balance requests between every node in
the swarm even if there are no tasks scheduled on the node.
For example, you could have the following HAProxy configuration in
`/etc/haproxy/haproxy.cfg`:

```
global
        log /dev/log    local0
        log /dev/log    local1 notice
...snip...

# Configure HAProxy to listen on port 80
frontend http_front
   bind *:80
   stats uri /haproxy?stats
   default_backend http_back

# Configure HAProxy to route requests to swarm nodes on port 8080
backend http_back
   balance roundrobin
   server node1 192.168.99.100:8080 check
   server node2 192.168.99.101:8080 check
   server node3 192.168.99.102:8080 check
```

When you access the HAProxy load balancer on port 80, it forwards requests to
nodes in the swarm. The swarm routing mesh routes each request to an active task.
If, for any reason, the swarm scheduler dispatches tasks to different nodes, you
don't need to reconfigure the load balancer.

You can configure any type of load balancer to route requests to swarm nodes.
To learn more about HAProxy, see the [HAProxy documentation](https://cbonte.github.io/haproxy-dconv/).

## Learn more

* [Deploy services to a swarm](services.md)