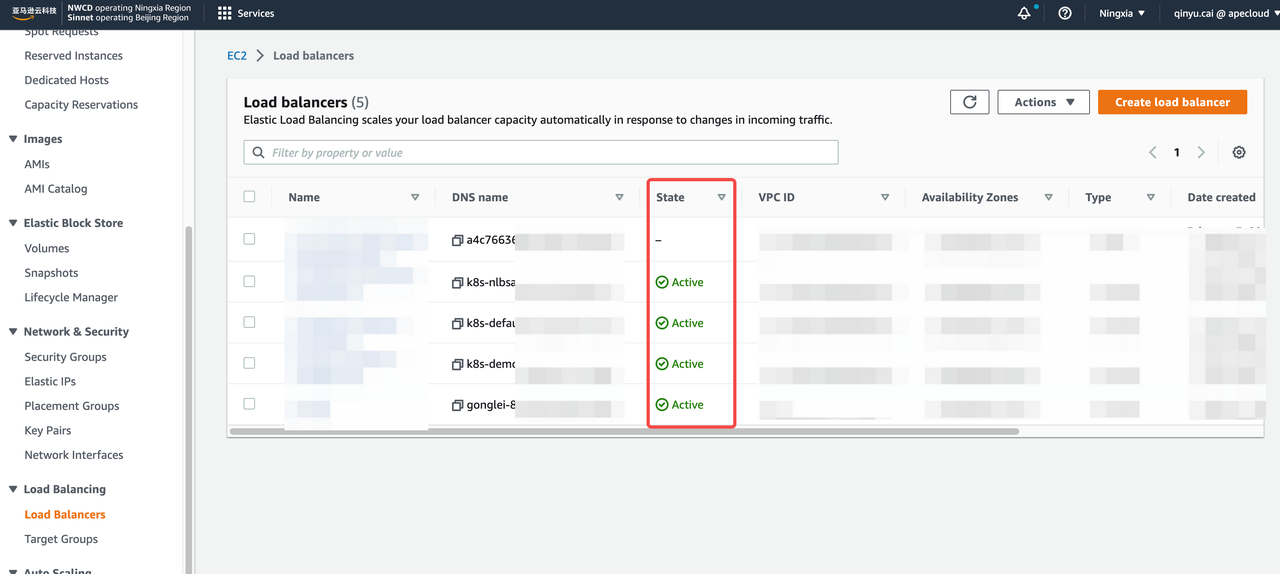

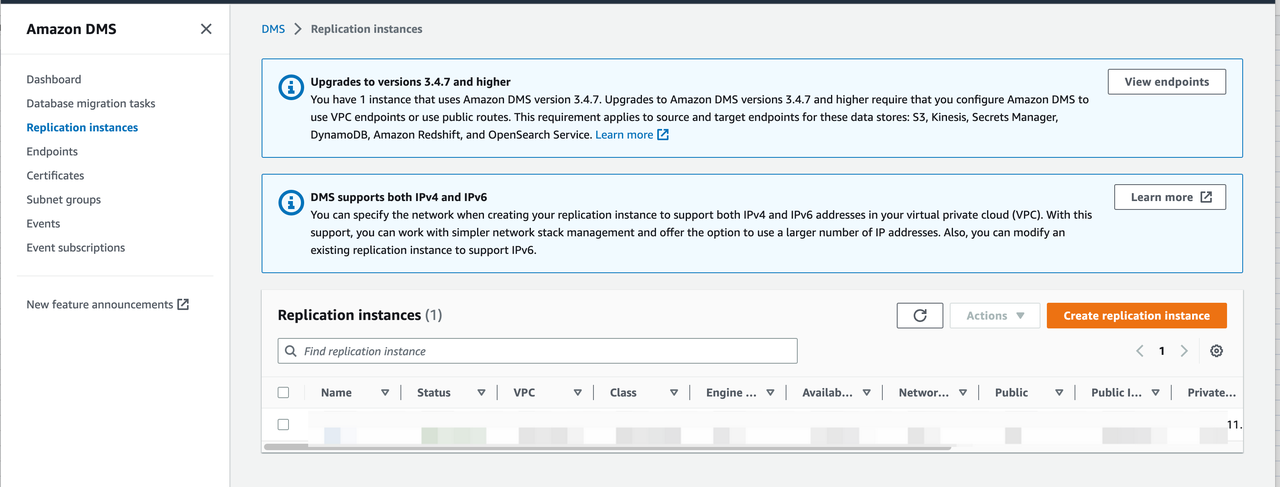

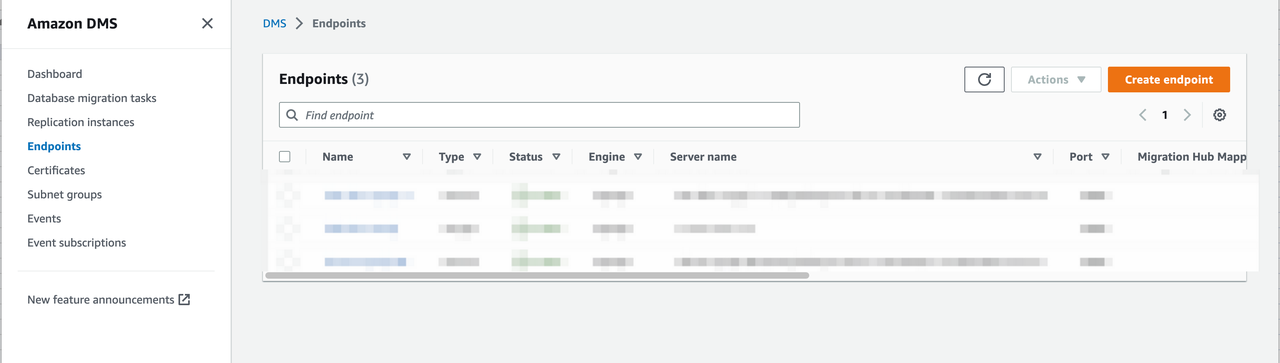

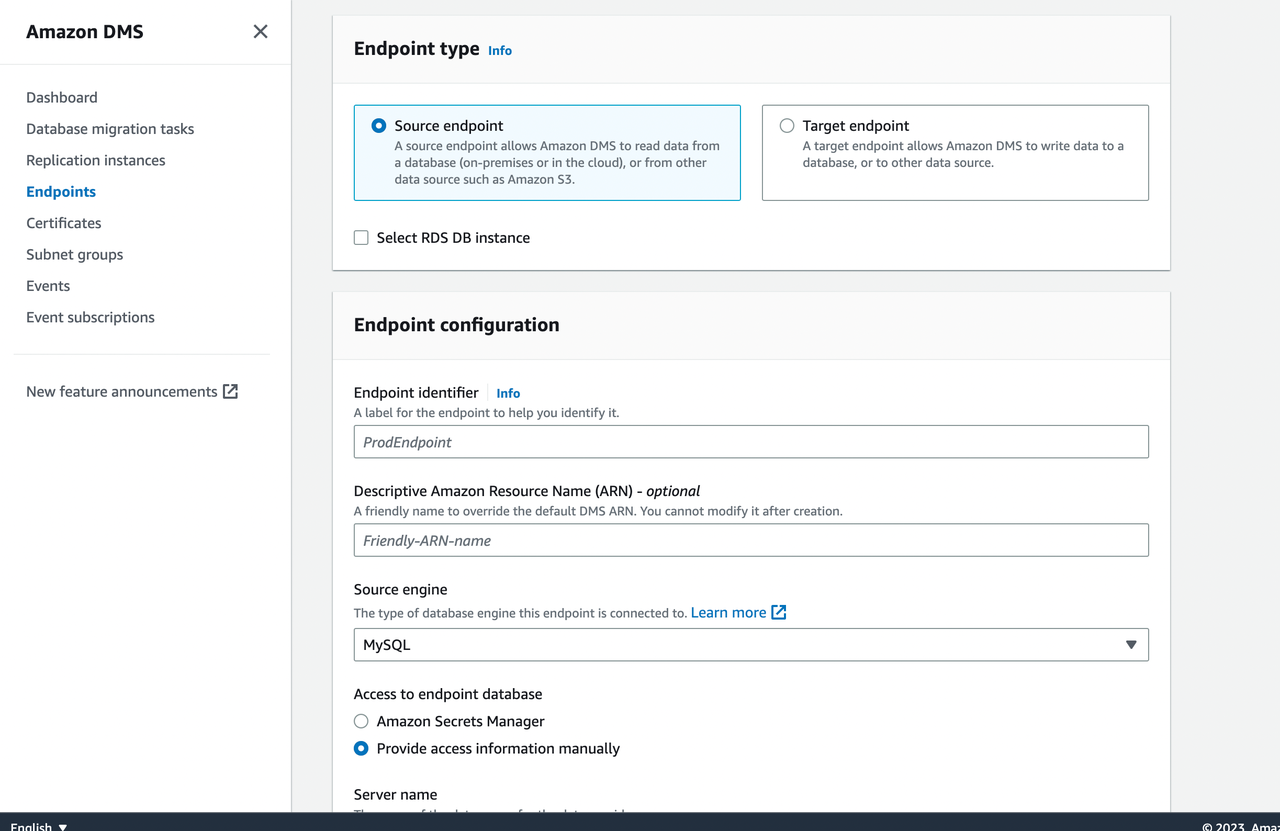

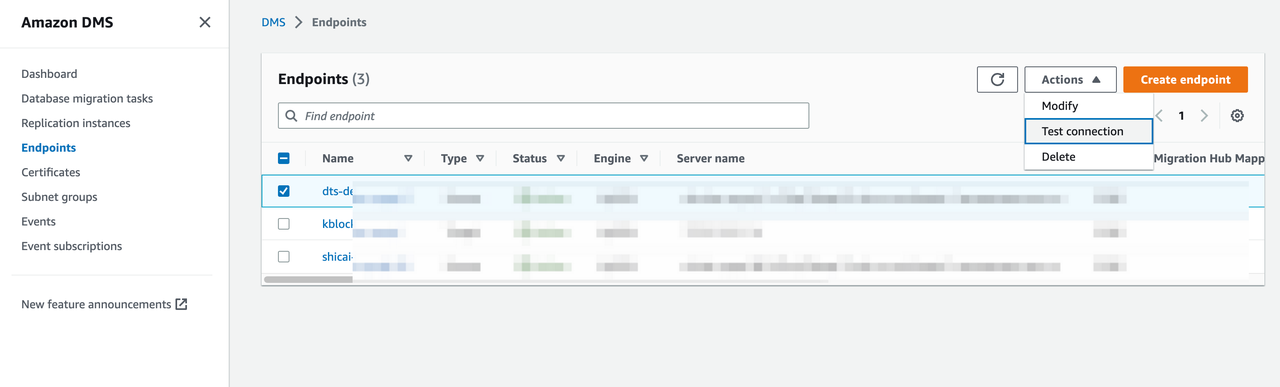

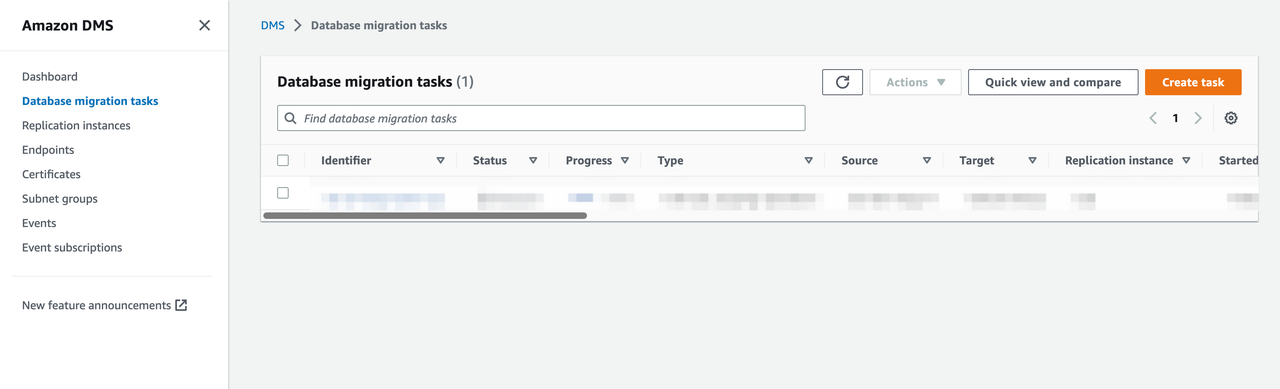

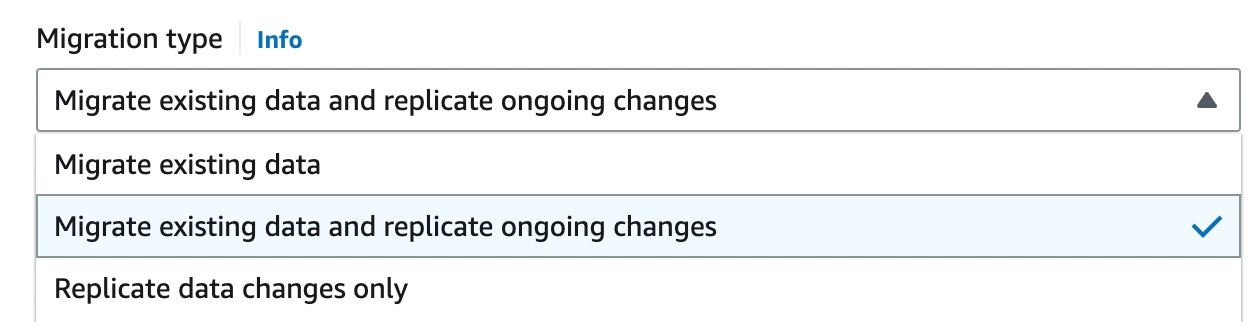

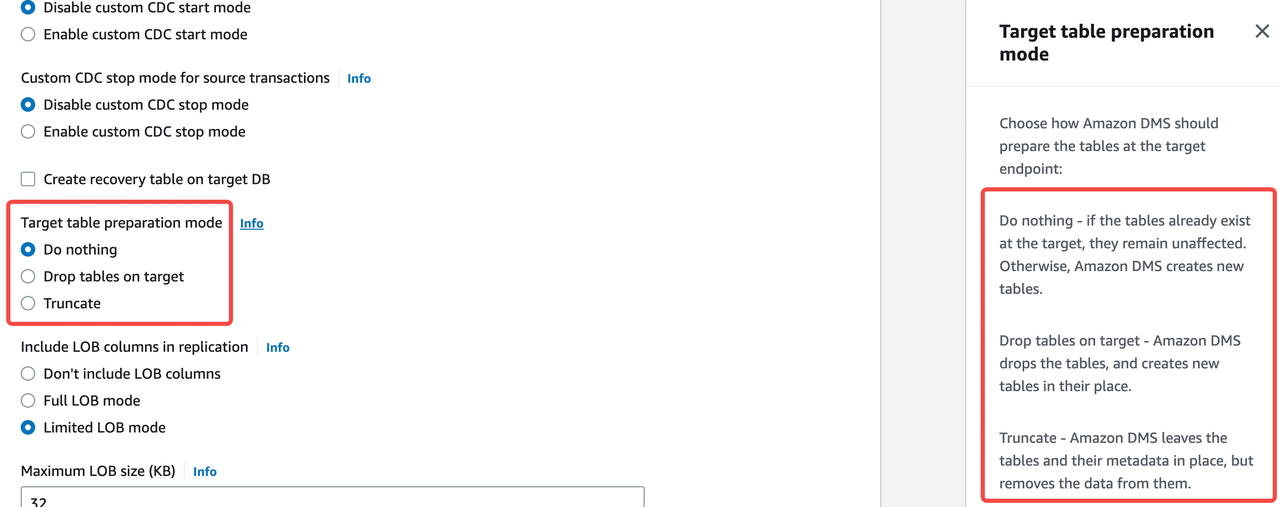

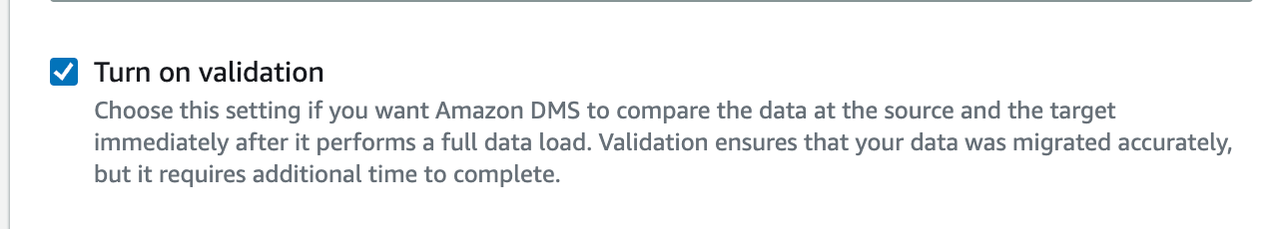

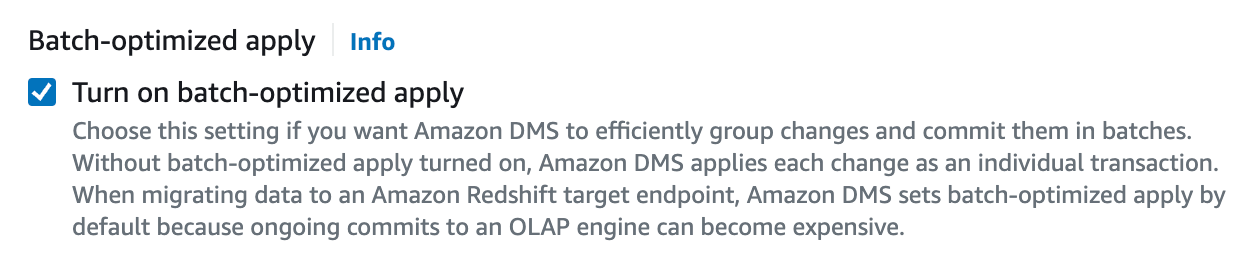

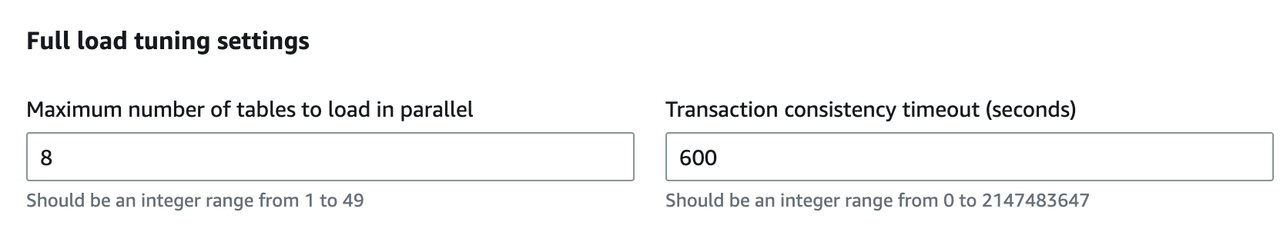

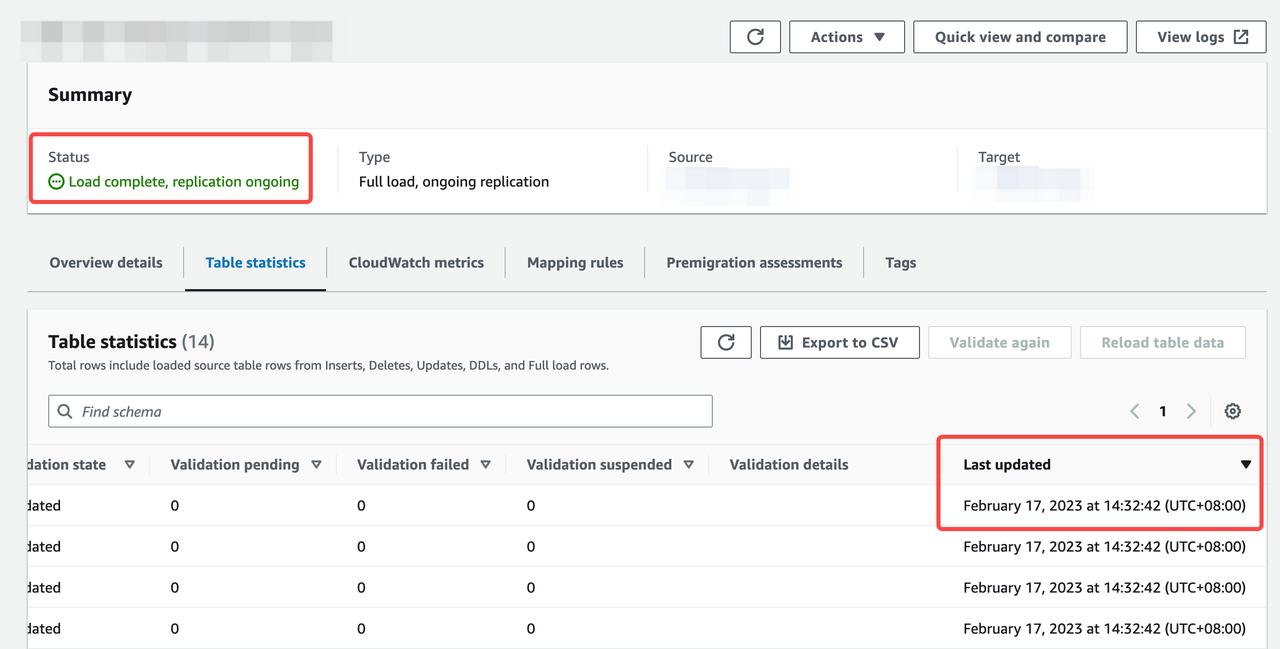

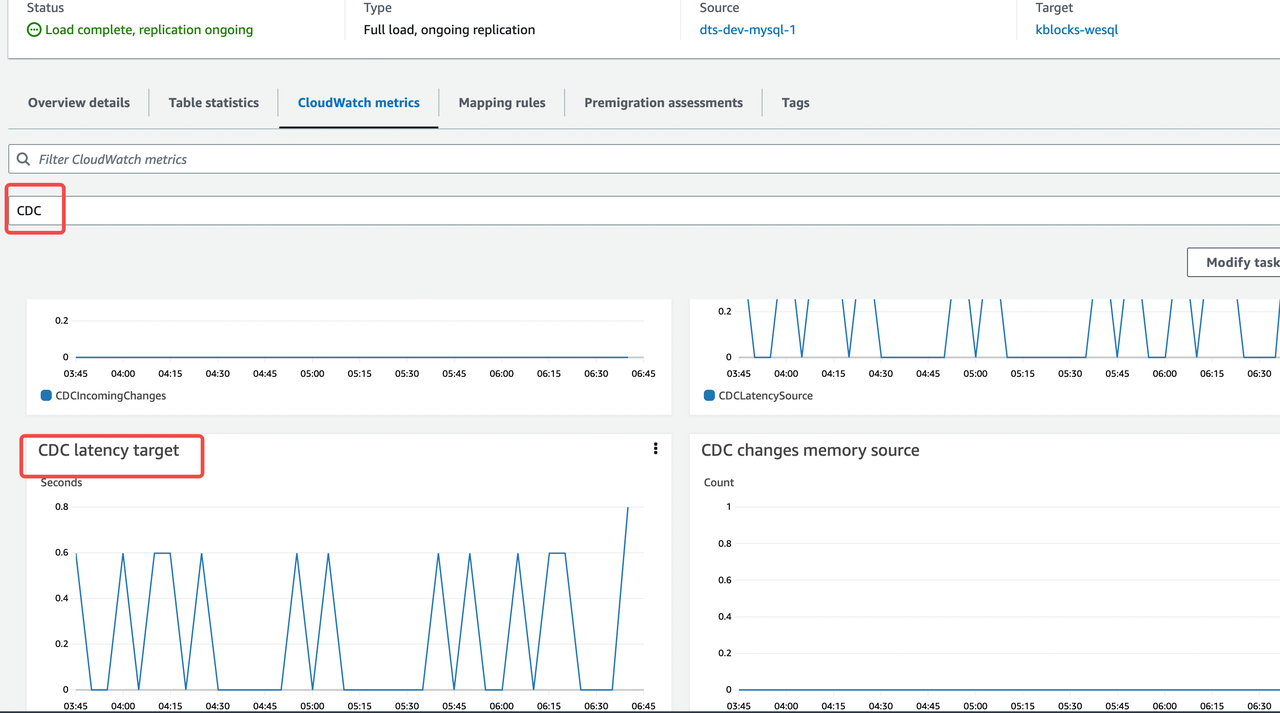

---
title: Migrate data to ApeCloud MySQL by AWS DMS
description: How to migrate data to ApeCloud MySQL by AWS DMS
keywords: [mysql, migration, aws dms]
sidebar_position: 1
sidebar_label: Migration by AWS DMS
---

# Migrate data to ApeCloud MySQL by AWS DMS

:::note

* Using the public network and a network load balancer may incur expenses.
* This tutorial assumes that ApeCloud MySQL is deployed on AWS EKS. Deploying ApeCloud MySQL on other Kubernetes clusters is not covered.

:::

## Network configuration

### Expose the target ApeCloud MySQL network

By default, ApeCloud MySQL is exposed through a Kubernetes ClusterIP in the EKS environment. However, an AWS DMS (Database Migration Service) migration task runs on an independent replication instance. Even if the replication instance is placed in the same VPC as the Kubernetes cluster, it cannot reach the ClusterIP. The solutions below connect the replication instance to the network of the target cluster.

#### KubeBlocks native solution

***Before you start***

* [Install kbcli](./../../installation/install-with-kbcli/install-kbcli.md)
* Install KubeBlocks: You can install KubeBlocks by [kbcli](./../../installation/install-with-kbcli/install-kubeblocks-with-kbcli.md) or by [Helm](./../../installation/install-with-helm/install-kubeblocks-with-helm.md).
* Enable the AWS Load Balancer Controller add-on.

  ```bash
  kbcli addon list

  kbcli addon enable aws-load-balancer-controller
  >
  addon.extensions.kubeblocks.io/aws-load-balancer-controller enabled
  ```

  If the add-on fails to be enabled, the cause may lie in your environment, because the add-on depends on EKS.

  Check your EKS environment and enable this add-on again.
  For details on enabling add-ons, refer to [Enable add-ons](./../../overview/supported-addons.md).

***Steps***

1. Create an ApeCloud MySQL cluster on AWS. Refer to [Create an ApeCloud MySQL cluster](./../cluster-management/create-and-connect-a-mysql-cluster.md) for details.
2. Run the command below to expose the external IP of the cluster. Replace `mysql-cluster` with your cluster name.

   ```bash
   kbcli cluster expose mysql-cluster --enable=true --type='vpc'
   ```

   :::note

   For the `kbcli cluster expose` command above, the available values for `--type` are `vpc` and `internet`. Use `--type=vpc` for access within the same VPC and `--type=internet` for cross-VPC access over the public network.

   :::

   Run the command below to view the external IP:Port address. Machines in the same VPC but outside the EKS cluster can access this address.

   ```bash
   kbcli cluster describe mysql-cluster | grep -A 3 Endpoints
   >
   Endpoints:
   COMPONENT   MODE        INTERNAL             EXTERNAL
   mysql       ReadWrite   10.100.51.xxx:3306   172.31.35.xxx:3306
   ```

3. Configure the external IP:Port as the target endpoint in AWS DMS.

   This operation creates an ENI (Elastic Network Interface) on EC2. Low-spec instance types have a small ENI quota, so check that enough ENIs are available.

   For the ENI limits of each instance type, refer to [Elastic network interfaces - Amazon Elastic Compute Cloud](https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/using-eni.html).

#### Use Network Load Balancer (NLB) to expose the service

1. Install the AWS Load Balancer Controller on EKS.

   For installation details, refer to [Installing the AWS Load Balancer Controller add-on](https://docs.aws.amazon.com/eks/latest/userguide/aws-load-balancer-controller.html).

   For how to create an NLB in a cluster, refer to [Network load balancing on Amazon EKS](https://docs.aws.amazon.com/eks/latest/userguide/network-load-balancing.html).
2. Create a service that uses an NLB to expose the ApeCloud MySQL service.

   Configure `metadata.name`, `metadata.annotations`, `metadata.labels`, and `spec.selector` according to your actual environment.

   ```bash
   cat <<EOF | kubectl apply -f -
   kind: Service
   apiVersion: v1
   metadata:
     name: apecloud-mysql-service
     annotations:
       service.beta.kubernetes.io/aws-load-balancer-type: nlb-ip
       service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
       service.beta.kubernetes.io/aws-load-balancer-subnets: <subnet name1>,<subnet name2>
     labels:
       apps.kubeblocks.io/component-name: mysql
       app.kubernetes.io/instance: <apecloud-mysql clustername>
       app.kubernetes.io/managed-by: kubeblocks
       app.kubernetes.io/name: apecloud-mysql
   spec:
     externalTrafficPolicy: Cluster
     type: LoadBalancer
     selector:
       apps.kubeblocks.io/component-name: mysql
       app.kubernetes.io/instance: <apecloud-mysql clustername>
       app.kubernetes.io/managed-by: kubeblocks
       kubeblocks.io/role: leader
     ports:
       - name: http
         protocol: TCP
         port: 3306
         targetPort: mysql
   EOF
   ```

3. Check whether the new service and the NLB run normally.

   ```bash
   kubectl get svc
   >
   NAME                     TYPE           CLUSTER-IP     EXTERNAL-IP                                        PORT(S)
   apecloud-mysql-service   LoadBalancer   10.100.xx.xx   k8s-xx-xx-xx.elb.cn-northwest-1.amazonaws.com.cn   3306:xx/TCP
   ```

   Make sure the service runs normally and an EXTERNAL-IP is assigned. Then verify in the AWS console that the NLB state is `Active`. After that, you can access the cluster via EXTERNAL-IP:Port.

### Expose the source network

The source network falls into one of the four scenarios below. Choose the method that matches your actual environment.

* Alibaba Cloud ApsaraDB RDS

  Use the public network.
  Refer to [Apply for or release a public endpoint for an ApsaraDB RDS for MySQL instance](https://www.alibabacloud.com/help/en/apsaradb-for-rds/latest/apply-for-or-release-a-public-endpoint-for-an-apsaradb-rds-for-mysql-instance) to apply for a public endpoint. Then create an endpoint in AWS DMS.

* RDS within the same VPC in AWS

  Specify the RDS instance when creating an endpoint in DMS. No extra operation is required.

  For creating an endpoint, refer to step 2 in [Configure AWS DMS tasks](#configure-aws-dms-tasks).

* RDS within different VPCs in AWS

  Use the public network to create an endpoint. Refer to [this document](https://aws.amazon.com/premiumsupport/knowledge-center/aurora-mysql-connect-outside-vpc/?nc1=h_ls) to enable public network access, then create an endpoint in AWS DMS.

  For creating an endpoint, refer to step 2 in [Configure AWS DMS tasks](#configure-aws-dms-tasks).

* MySQL in AWS EKS

  Use an NLB to expose the service.

  1. Install the AWS Load Balancer Controller.

     For installation details, refer to [Installing the AWS Load Balancer Controller add-on](https://docs.aws.amazon.com/eks/latest/userguide/aws-load-balancer-controller.html).

     For how to create an NLB in a cluster, refer to [Network load balancing on Amazon EKS](https://docs.aws.amazon.com/eks/latest/userguide/network-load-balancing.html).
  2. Create a service that uses an NLB.

     Replace `some.label.key: some-label-value` in `metadata.labels` and `spec.selector` with a label that matches the pods of the source MySQL you want to expose.

     Configure `port` and `targetPort` in `spec.ports` according to your current environment.
     ```bash
     cat <<EOF | kubectl apply -f -
     kind: Service
     apiVersion: v1
     metadata:
       name: mysql-local-service
       annotations:
         service.beta.kubernetes.io/aws-load-balancer-type: nlb-ip
         service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
         service.beta.kubernetes.io/aws-load-balancer-subnets: <subnet name1>,<subnet name2>
       labels:
         some.label.key: some-label-value
     spec:
       externalTrafficPolicy: Cluster
       type: LoadBalancer
       selector:
         some.label.key: some-label-value
       ports:
         - name: http
           protocol: TCP
           port: 3306
           targetPort: 3306
     EOF
     ```

  3. Make sure the service and the NLB run normally.

     Refer to step 3 in [Use Network Load Balancer (NLB) to expose the service](#use-network-load-balancer-nlb-to-expose-the-service) for details.

## Configure AWS DMS tasks

Pay attention to the following potential issues during the migration task.

* Double write

  During the migration, make sure no application writes to the target instance. Otherwise, double writes occur.

* Disk space of the target instance

  The transfer tool writes to the target database concurrently, so rows may arrive out of order. This can trigger page splits and make the data on the target database take up slightly more space than on the source instance. Plan the storage size of the target database accordingly. For example, allocate at least 1.5 times the current storage size of the source database.

* DDL and online DDL

  Schema changes that take locks often slow down data migration.

  Lock-free (online) schema changes work by renaming a temporary table. This causes data problems if you do not migrate the whole database.
  For example, suppose you migrate only db1.table1 to the target, and an online DDL runs on db1.table1 in the source database during the migration. The data of db1.table1 in the target database then becomes inconsistent with the source database.

  Note that some database management tools perform DDL as lock-free (online) changes by default.

  Migration is a short-term operation. To avoid unnecessary trouble, do not perform DDL operations during the migration.

* Binlog retention hours

  Incremental migration relies on the binlog of the source database.

  Extend the binlog retention hours. Otherwise, if the task is interrupted for a long time, the source database may purge the binlog before the task recovers, and the migration cannot be resumed.

  For example, in AWS RDS, connect to the database and run the commands below:

  ```sql
  # View configuration
  # Input:
  call mysql.rds_show_configuration;

  # Output: Pay attention to the binlog retention hours.
  +------------------------+-------+-----------------------------------------------------------------------------------------------------------+
  | name                   | value | description                                                                                               |
  +------------------------+-------+-----------------------------------------------------------------------------------------------------------+
  | binlog retention hours | 8     | binlog retention hours specifies the duration in hours before binary logs are automatically deleted.      |
  | source delay           | 0     | source delay specifies replication delay in seconds between current instance and its master.              |
  | target delay           | 0     | target delay specifies replication delay in seconds between current instance and its future read-replica. |
  +------------------------+-------+-----------------------------------------------------------------------------------------------------------+

  # Adjust the retention hours to 72 hours
  # Input:
  call mysql.rds_set_configuration('binlog retention hours', 72);
  ```

***Steps:***

1. Create a replication instance for migration.

   Go to **DMS** -> **Replication Instance** and click **Create replication instance**.

   :::caution

   Select the VPC that you have configured in EKS.

   :::

2. Create endpoints.

   Go to **DMS** -> **Endpoints** and click **Create endpoint**.

   Create the source endpoint and the target endpoint separately. If the source endpoint is an RDS instance, check **Select RDS DB instance** to configure it.

   After configuration, specify a replication instance to test the connection.

3. Create migration tasks.

   Click **Create task** and configure the task according to the instructions.

   Pay attention to the following parameters.

   * Migration Type

     AWS DMS provides three migration types:

     * Migrate existing data: AWS DMS migrates only your existing data. Changes to your source data aren't captured and applied to your target.
     * Migrate existing data and replicate ongoing changes: AWS DMS migrates both existing data and ongoing data changes. The data that exists before the migration task starts and the changes made during the task are both synchronized to the target instance.
     * Replicate data changes only: AWS DMS migrates only ongoing data changes. If you select this type, you can use **CDC start mode for source transactions** to specify a position from which to migrate data changes.

     For this tutorial, select **Migrate existing data and replicate ongoing changes**.
   * Target table preparation mode

     The target table preparation mode specifies how DMS prepares the table structure on the target at the start of the task. Click the **Info** link beside the options to view the definition of each mode. For example, if ApeCloud MySQL is a newly created empty instance, you can select **Do nothing** mode.

     In addition, create the database on ApeCloud MySQL before migration, because AWS DMS does not create databases.

   * Turn on validation

     It is recommended to enable this option.

   * Batch-optimized apply

     It is recommended to enable this option. It writes to the target instance in batches, which can improve write speed.

   * Full load tuning settings: Maximum number of tables to load in parallel

     This number determines how many tables DMS loads from the source in parallel. A higher value puts more load on the source database during the full load, so lower this number when the source workload is sensitive to extra load.

   * Table Mapping

     Table mapping determines which tables in the database are migrated and can also apply simple transformations. It is recommended to use **Wizard** mode to configure this parameter.
4. Start the migration task.

## Switch applications

***Before you start***

* Make sure the DMS migration tasks run normally. If you run a validation task, make sure the results are as expected.
* To separate migration sessions from business sessions and improve data security, it is recommended to create a dedicated database account for the migration and grant it the required privileges.
* It is recommended to switch applications during off-peak hours, because business writes must be stopped during the switchover for safety.

***Steps:***

1. Make sure the transmission task runs normally.
   Check the task **Status**, the **Last updated** time in **Table statistics**, and **CDC latency target** in **CloudWatch metrics**.

   You can also refer to [this document](https://aws.amazon.com/premiumsupport/knowledge-center/dms-stuck-task-progress/?nc1=h_ls) to verify the migration task.

2. Pause the business and block new writes to the source database.
3. Verify the transmission task status again. Make sure the task runs normally and has kept running for at least 1 minute.

   As in step 1, check that the task is healthy and that there is no replication latency.
4. Resume the business on the target database.
5. Verify the migration with your business workload.
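The status checks in steps 1 and 3 can also be run from the command line. The script below is a minimal sketch and is not part of KubeBlocks or AWS DMS tooling.

It makes the following assumptions:

* The AWS CLI is installed and configured.
* `TASK_ARN` (a hypothetical variable) holds the ARN of your migration task.
* `get_task_status` and `wait_until_stable` are illustrative helper names.

The script polls `aws dms describe-replication-tasks`. It succeeds only if the task reports `running` at every check within the window, which defaults to 60 seconds to match step 3. It does not check **CDC latency target**, so still review that metric in CloudWatch.

```bash
#!/usr/bin/env bash
# Minimal sketch: confirm that a DMS task keeps the status "running" for a time window.
# Assumption: TASK_ARN is set to your replication task ARN (hypothetical placeholder).

# Print the current status of the DMS task, for example "running" or "stopped".
get_task_status() {
  aws dms describe-replication-tasks \
    --filters "Name=replication-task-arn,Values=${TASK_ARN}" \
    --query 'ReplicationTasks[0].Status' \
    --output text
}

# Usage: wait_until_stable [window_seconds] [interval_seconds]
# Returns 0 if every check within the window reports "running", 1 otherwise.
wait_until_stable() {
  local window="${1:-60}" interval="${2:-10}" elapsed=0 status
  while [ "$elapsed" -lt "$window" ]; do
    status="$(get_task_status)"
    if [ "$status" != "running" ]; then
      echo "DMS task status is '${status}', not 'running'" >&2
      return 1
    fi
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
  echo "DMS task has been running for at least ${window}s"
}
```

To use it:

1. Source the script.
2. Run `export TASK_ARN=<your task ARN>`.
3. Run `wait_until_stable 60 10`.

Switch applications only if the command exits with status 0. Polling at a fixed interval keeps the sketch simple, but a brief status change between two polls can go unnoticed. Treat the script as an extra check rather than a replacement for the console and CloudWatch views.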