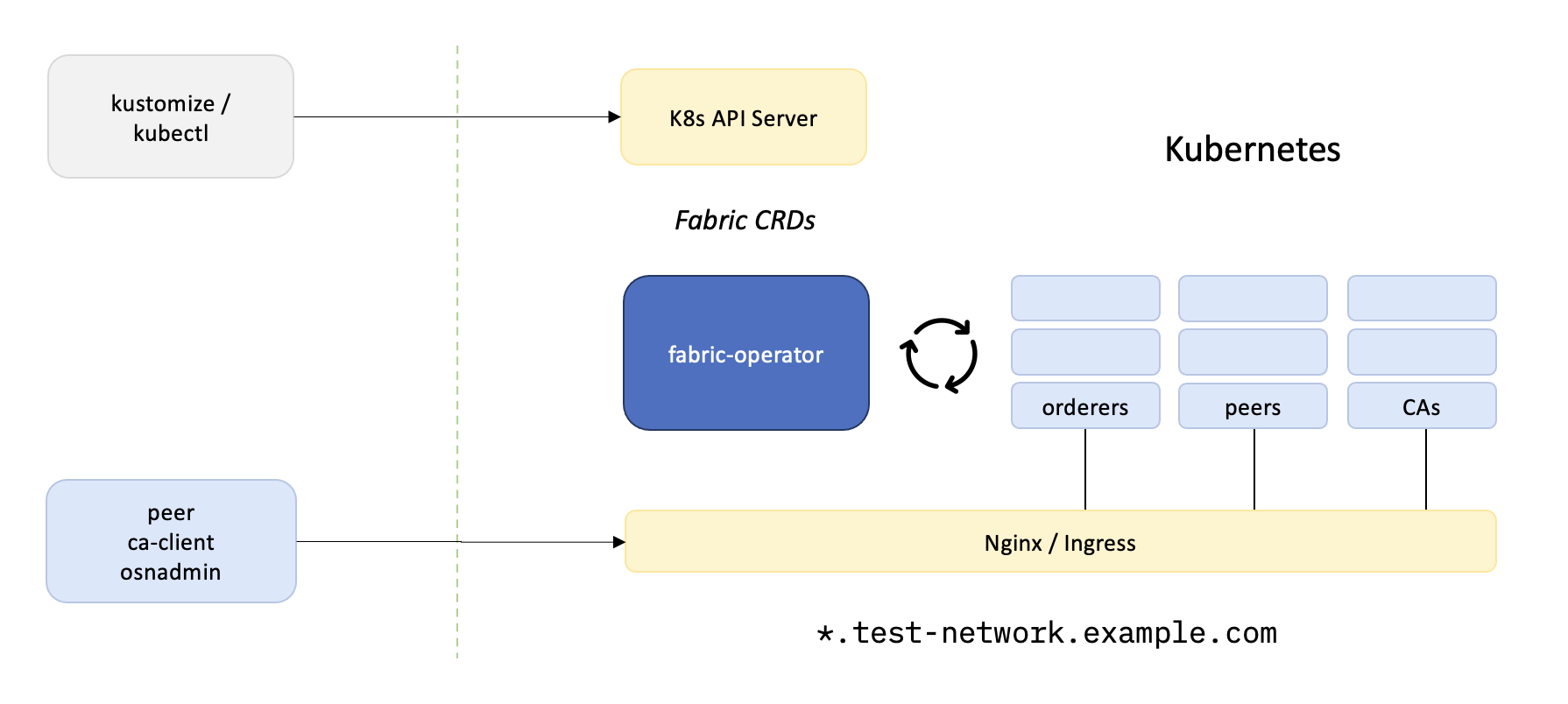

# Sample Network

Create a sample network with CRDs, fabric-operator, and the Kube API server:

- Apply `kustomization` overlays to install the Operator
- Apply `kustomization` overlays to construct a Fabric Network
- Call `peer` CLI and channel participation SDKs to administer the network
- Deploy _Chaincode-as-a-Service_ smart contracts
- Develop _Gateway Client_ applications on a local workstation

Feedback, comments, questions, etc. on Discord: [#fabric-kubernetes](https://discord.gg/hyperledger)

## Prerequisites:

### General

- [kubectl](https://kubernetes.io/docs/tasks/tools/)
- [jq](https://stedolan.github.io/jq/)
- [envsubst](https://www.gnu.org/software/gettext/manual/html_node/envsubst-Invocation.html) (`brew install gettext` on OSX)
- [k9s](https://k9scli.io) (recommended)
- Fabric binaries (peer, osnadmin, etc.) will be installed into the local `bin` folder. Add these to your PATH:

```shell
export PATH=$PWD:$PWD/bin:$PATH
```


### Kubernetes

If you do not have access to a Kubernetes cluster, create a local instance with [KIND](https://kind.sigs.k8s.io/docs/user/quick-start/#installation)
and [Docker](https://www.docker.com) (with resources increased to 8 CPU / 8 GB RAM):
```shell
network kind
```

For additional cluster options, see the detailed guidelines for:
- [Rancher Desktop](#rancher-desktop): k3s
- [fabric-devenv](#vagrant-fabric-devenv): vagrant VM
- [IKS](#iks)
- [EKS](#eks)
- [OCP](#ocp)


### DNS Domain

The operator utilizes Kubernetes `Ingress` resources to expose Fabric services at a common [DNS wildcard domain](https://en.wikipedia.org/wiki/Wildcard_DNS_record)
(e.g. `*.test-network.example.com`).
For convenience, the sample network includes an Nginx ingress controller,
pre-configured with [ssl-passthrough](https://kubernetes.github.io/ingress-nginx/user-guide/tls/#ssl-passthrough)
for TLS termination at the node endpoints.

For **local clusters**, set the ingress wildcard domain to the host loopback interface (127.0.0.1):
```shell
export TEST_NETWORK_INGRESS_DOMAIN=localho.st
```

For **cloud-based clusters**, set the ingress wildcard domain to the public DNS A record:
```shell
export TEST_NETWORK_INGRESS_DOMAIN=test-network.example.com
```

For additional guidelines on configuring ingress and DNS, see [Considerations for Kubernetes Distributions](https://cloud.ibm.com/docs/blockchain-sw-252?topic=blockchain-sw-252-deploy-k8#console-deploy-k8-considerations).


## Sample Network

Install the Nginx controller and Fabric CRDs:
```shell
network cluster init
```

Launch the operator and `kustomize` a network of [CAs](config/cas), [peers](config/peers), and [orderers](config/orderers):
```shell
network up
```

Explore Kubernetes `Pods`, `Deployments`, `Services`, `Ingress`, etc.:
```shell
kubectl -n test-network get all
```


## Chaincode

In the examples below, the `peer` binary will be used to invoke smart contracts on the org1-peer1 ledger. Set the CLI context with:
```shell
export FABRIC_CFG_PATH=${PWD}/temp/config
export CORE_PEER_LOCALMSPID=Org1MSP
export CORE_PEER_ADDRESS=test-network-org1-peer1-peer.${TEST_NETWORK_INGRESS_DOMAIN}:443
export CORE_PEER_TLS_ENABLED=true
export CORE_PEER_MSPCONFIGPATH=${PWD}/temp/enrollments/org1/users/org1admin/msp
export CORE_PEER_TLS_ROOTCERT_FILE=${PWD}/temp/channel-msp/peerOrganizations/org1/msp/tlscacerts/tlsca-signcert.pem
```

### Chaincode as a Service

The operator is compatible with sample _Chaincode-as-a-Service_ smart contracts and the `ccaas` external builder.
When using the ccaas builder, the chaincode pods must be [started and running](scripts/chaincode.sh#L183) in the cluster
before the contract can be approved on the channel.

Clone the [fabric-samples](https://github.com/hyperledger/fabric-samples) git repository:
```shell
git clone https://github.com/hyperledger/fabric-samples.git /tmp/fabric-samples
```

Create a channel:
```shell
network channel create
```

Deploy a sample contract:
```shell
network cc deploy   asset-transfer-basic basic_1.0 /tmp/fabric-samples/asset-transfer-basic/chaincode-java

network cc metadata asset-transfer-basic
network cc invoke   asset-transfer-basic '{"Args":["InitLedger"]}'
network cc query    asset-transfer-basic '{"Args":["ReadAsset","asset1"]}' | jq
```

Or use the native `peer` CLI to query the contract installed on org1 / peer1:
```shell
peer chaincode query -n asset-transfer-basic -C mychannel -c '{"Args":["org.hyperledger.fabric:GetMetadata"]}'
```


### K8s Chaincode Builder

The operator can also be configured for use with [fabric-builder-k8s](https://github.com/hyperledgendary/fabric-builder-k8s),
providing smooth and immediate _Chaincode Right Now!_ deployments. With the `k8s` builder, the peer node will directly
manage the lifecycle of the chaincode pods.

Reconstruct the network with the "k8s-fabric-peer" image:
```shell
network down

export TEST_NETWORK_PEER_IMAGE=ghcr.io/hyperledgendary/k8s-fabric-peer
export TEST_NETWORK_PEER_IMAGE_LABEL=v0.6.0

network up
network channel create
```

Download a "k8s" chaincode package:
```shell
curl -fsSL https://github.com/hyperledgendary/conga-nft-contract/releases/download/v0.1.1/conga-nft-contract-v0.1.1.tgz -o conga-nft-contract-v0.1.1.tgz
```

Install the smart contract:
```shell
peer lifecycle chaincode install conga-nft-contract-v0.1.1.tgz

export PACKAGE_ID=$(peer lifecycle chaincode calculatepackageid conga-nft-contract-v0.1.1.tgz) && echo $PACKAGE_ID

peer lifecycle \
  chaincode approveformyorg \
  --channelID mychannel \
  --name conga-nft-contract \
  --version 1 \
  --package-id ${PACKAGE_ID} \
  --sequence 1 \
  --orderer test-network-org0-orderersnode1-orderer.${TEST_NETWORK_INGRESS_DOMAIN}:443 \
  --tls --cafile $PWD/temp/channel-msp/ordererOrganizations/org0/orderers/org0-orderersnode1/tls/signcerts/tls-cert.pem \
  --connTimeout 15s

peer lifecycle \
  chaincode commit \
  --channelID mychannel \
  --name conga-nft-contract \
  --version 1 \
  --sequence 1 \
  --orderer test-network-org0-orderersnode1-orderer.${TEST_NETWORK_INGRESS_DOMAIN}:443 \
  --tls --cafile $PWD/temp/channel-msp/ordererOrganizations/org0/orderers/org0-orderersnode1/tls/signcerts/tls-cert.pem \
  --connTimeout 15s
```

Inspect chaincode pods:
```shell
kubectl -n test-network describe pods -l app.kubernetes.io/created-by=fabric-builder-k8s
```

Query the smart contract:
```shell
peer chaincode query -n conga-nft-contract -C mychannel -c '{"Args":["org.hyperledger.fabric:GetMetadata"]}'
```


## Teardown

Invariably, something in the recipe above will go awry.
Look for additional diagnostics in `network-debug.log` and
reset the stage with:

```shell
network down
```
or
```shell
network unkind
```


## Appendix: Operations Console

Launch the [Fabric Operations Console](https://github.com/hyperledger-labs/fabric-operations-console):
```shell
network console
```

- Open `https://test-network-hlf-console-console.${TEST_NETWORK_INGRESS_DOMAIN}`
- Accept the self-signed TLS certificate
- Log in as `admin:password`
- [Build a network](https://cloud.ibm.com/docs/blockchain?topic=blockchain-ibp-console-build-network)


## Appendix: Alternate k8s Runtimes

### Rancher Desktop

An excellent alternative for local development is the k3s distribution bundled with [Rancher Desktop](https://rancherdesktop.io).

1. Increase cluster resources to 8 CPU / 8 GB RAM
2. Select mobyd or containerd runtime
3. Disable the Traefik ingress
4. Restart Kubernetes

For use with mobyd / Docker container:
```shell
export TEST_NETWORK_CLUSTER_RUNTIME="k3s"
export TEST_NETWORK_STAGE_DOCKER_IMAGES="false"
export TEST_NETWORK_STORAGE_CLASS="local-path"
```

For use with containerd:
```shell
export TEST_NETWORK_CLUSTER_RUNTIME="k3s"
export TEST_NETWORK_CONTAINER_CLI="nerdctl"
export TEST_NETWORK_CONTAINER_NAMESPACE="--namespace k8s.io"
export TEST_NETWORK_STAGE_DOCKER_IMAGES="false"
export TEST_NETWORK_STORAGE_CLASS="local-path"
```


### IKS

For installations at IBM Cloud, use the following configuration settings:

```shell
export TEST_NETWORK_CLUSTER_RUNTIME="k3s"
export TEST_NETWORK_COREDNS_DOMAIN_OVERRIDE="false"
export TEST_NETWORK_STAGE_DOCKER_IMAGES="false"
export TEST_NETWORK_STORAGE_CLASS="ibm-file-gold"
```

To determine the external IP address for the Nginx ingress controller:

1. Run `network cluster init` to create the Nginx resources.
2. Determine the IP address for the Nginx EXTERNAL-IP:
   ```shell
   INGRESS_IPADDR=$(kubectl -n ingress-nginx get svc/ingress-nginx-controller -o json | jq -r '.status.loadBalancer.ingress[0].ip')
   ```
3. Set a virtual host domain resolving `*.EXTERNAL-IP.nip.io` or a public DNS wildcard resolver:
   ```shell
   export TEST_NETWORK_INGRESS_DOMAIN=$(echo $INGRESS_IPADDR | tr -s '.' '-').nip.io
   ```

For additional guidelines on configuring ingress and DNS, see [Considerations for Kubernetes Distributions](https://cloud.ibm.com/docs/blockchain-sw-252?topic=blockchain-sw-252-deploy-k8#console-deploy-k8-considerations).


### EKS

For installations at Amazon's Elastic Kubernetes Service, use the following settings:
```shell
export TEST_NETWORK_CLUSTER_RUNTIME="k3s"
export TEST_NETWORK_COREDNS_DOMAIN_OVERRIDE="false"
export TEST_NETWORK_STAGE_DOCKER_IMAGES="false"
export TEST_NETWORK_STORAGE_CLASS="gp2"
```

As an alternative to registering a public DNS domain with Route 53, the [Dead simple wildcard DNS for any IP Address](https://nip.io)
service may be used to associate the Nginx external IP with an `nip.io` domain.

To determine the external IP address for the ingress controller:

1. Run `network cluster init` to create the Nginx resources.
2. Wait for the ingress to come up and the hostname to propagate through public DNS (this will take a few minutes).
3. Determine the IP address for the Nginx EXTERNAL-IP:
   ```shell
   INGRESS_HOSTNAME=$(kubectl -n ingress-nginx get svc/ingress-nginx-controller -o json | jq -r '.status.loadBalancer.ingress[0].hostname')
   INGRESS_IPADDR=$(dig $INGRESS_HOSTNAME +short)
   ```
4. Set a virtual host domain resolving `*.EXTERNAL-IP.nip.io` to the ingress IP:
   ```shell
   export TEST_NETWORK_INGRESS_DOMAIN=$(echo $INGRESS_IPADDR | tr -s '.' '-').nip.io
   ```

For additional guidelines on configuring ingress and DNS, see [Considerations for Kubernetes Distributions](https://cloud.ibm.com/docs/blockchain-sw-252?topic=blockchain-sw-252-deploy-k8#console-deploy-k8-considerations).


### Vagrant: fabric-devenv

The [fabric-devenv](https://github.com/hyperledgendary/fabric-devenv) project will create a local development Virtual
Machine, including all required prerequisites for running a KIND cluster and the sample network.

To work around an issue resolving the kube DNS hostnames in vagrant, override the internal DNS name for Fabric services with:

```shell
export TEST_NETWORK_KUBE_DNS_DOMAIN=test-network
```


## Troubleshooting Tips

- The `network` script prints output and progress to a `network-debug.log` file. In a second shell:
```shell
tail -f network-debug.log
```

- Tail the operator logging output:
```shell
kubectl -n test-network logs -f deployment/fabric-operator
```

- On OSX, there is a bug in the Golang DNS resolver ([Fabric #3372](https://github.com/hyperledger/fabric/issues/3372) and [Golang #43398](https://github.com/golang/go/issues/43398)),
  causing the Fabric binaries to occasionally stall out when querying DNS.
  This issue can cause `osnadmin` / channel join to time out, throwing an error when joining the channel.
  Fix this by running a build of the [fabric](https://github.com/hyperledger/fabric) binaries and copying the build outputs
  from `fabric/build/bin/*` to `sample-network/bin`.

- Both Fabric and Kubernetes are complex systems. Things don't always work as they should, and it's
  impossible to enumerate all failure cases that can come up in the wild. When something in kube doesn't come up
  correctly, use [k9s](https://k9scli.io) (or another K8s navigator) to browse deployments, pods, services,
  and logs.
  Usually hitting `d` (describe) on a stuck resource in the `test-network` namespace is enough to
  determine the source of the error.
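- When k9s is not available, the same describe step can be approximated with plain `kubectl`. The sketch below is illustrative only: `list_stuck_pods` and `describe_stuck_pods` are hypothetical helper names, not part of the `network` script, and the filter assumes the default `kubectl get pods` column layout (NAME, READY, STATUS, ...):
```shell
# Hypothetical helpers for illustration; they are not part of the `network` script.

# Print the names of pods whose STATUS column is neither Running nor Completed.
# Assumes the default `kubectl get pods` columns: NAME READY STATUS RESTARTS AGE.
list_stuck_pods() {
  kubectl -n "${1:-test-network}" get pods --no-headers \
    | awk '$3 != "Running" && $3 != "Completed" { print $1 }'
}

# Describe each stuck pod, then list namespace events sorted by last timestamp.
describe_stuck_pods() {
  ns="${1:-test-network}"
  for pod in $(list_stuck_pods "$ns"); do
    kubectl -n "$ns" describe pod "$pod"
  done
  kubectl -n "$ns" get events --sort-by=.lastTimestamp
}
```

Run `describe_stuck_pods` with no arguments to inspect the `test-network` namespace, or pass another namespace as the first argument. A pod that reports `Running` but is not yet ready will not be picked up by this filter, so check the READY column as well.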