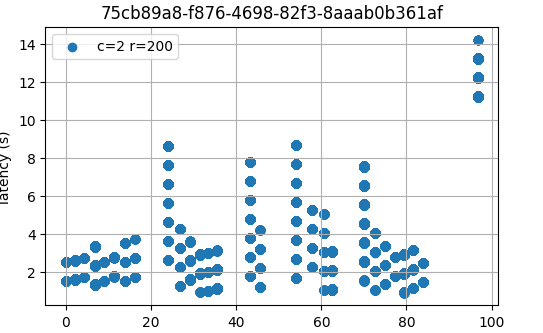

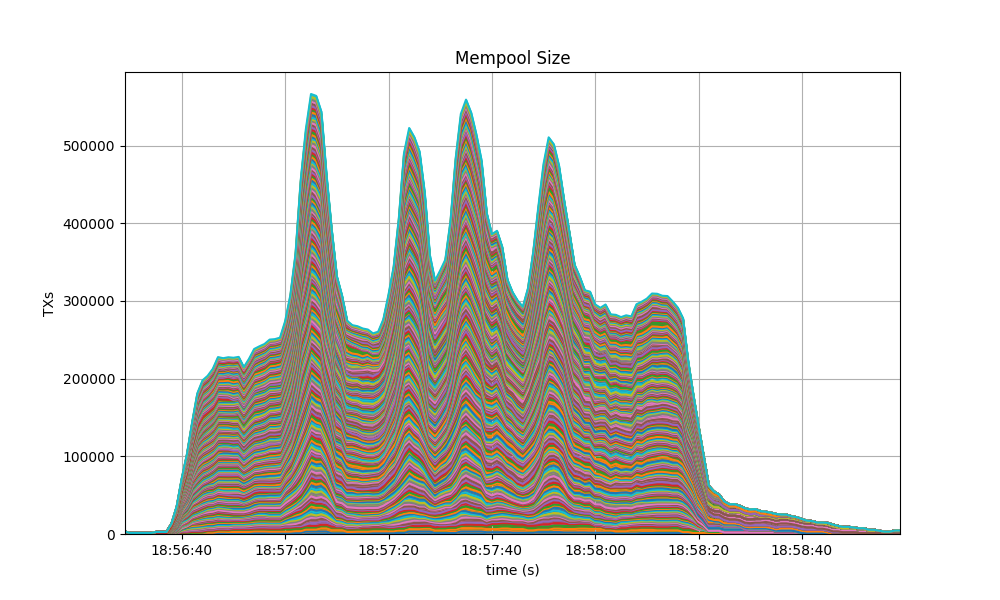

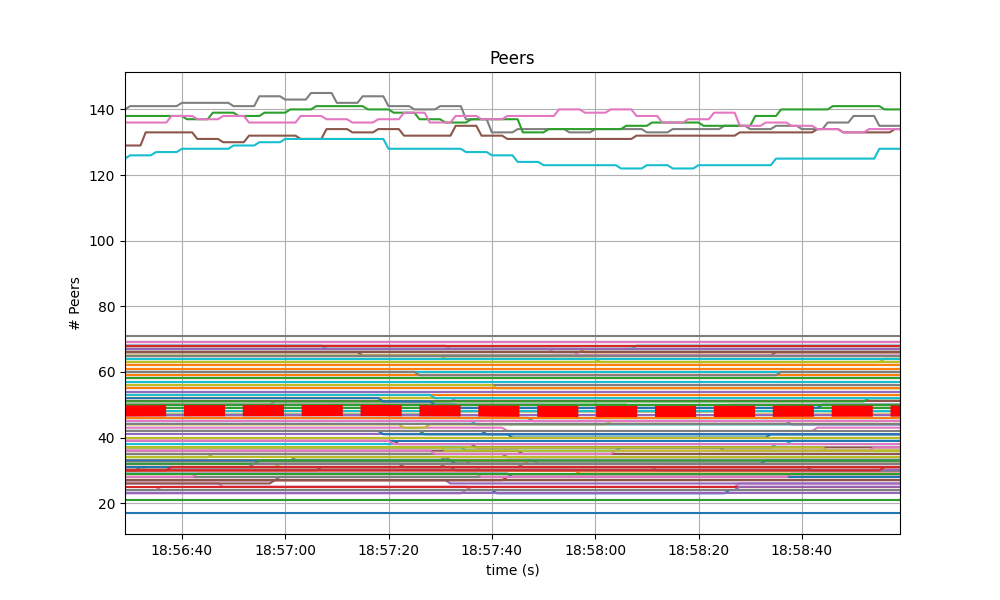

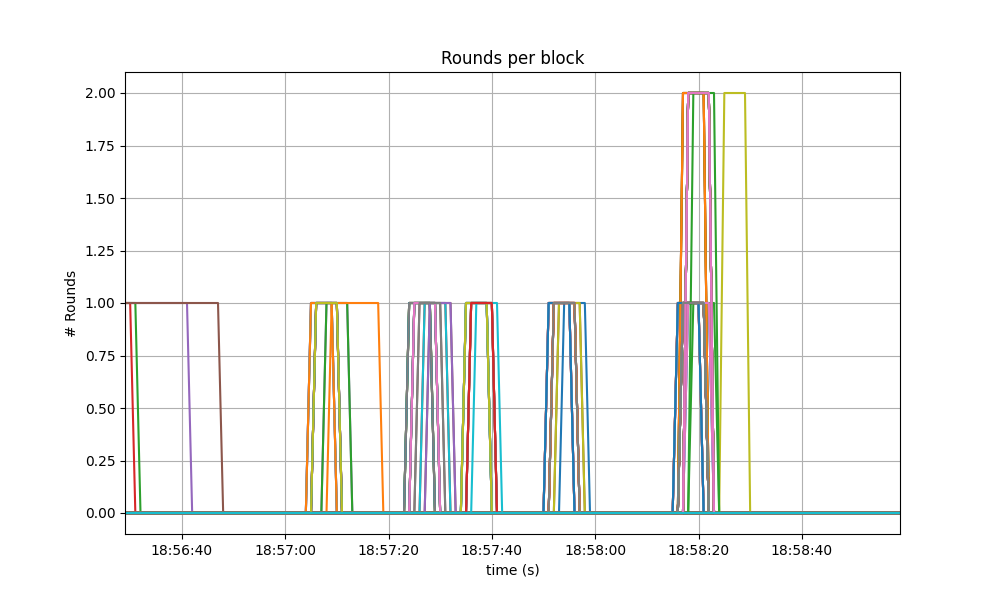

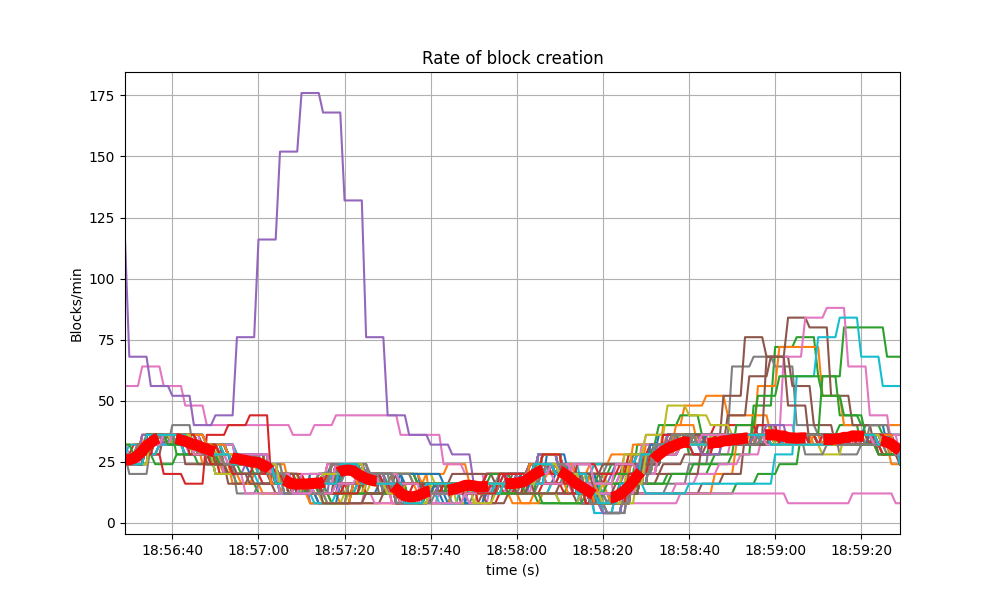

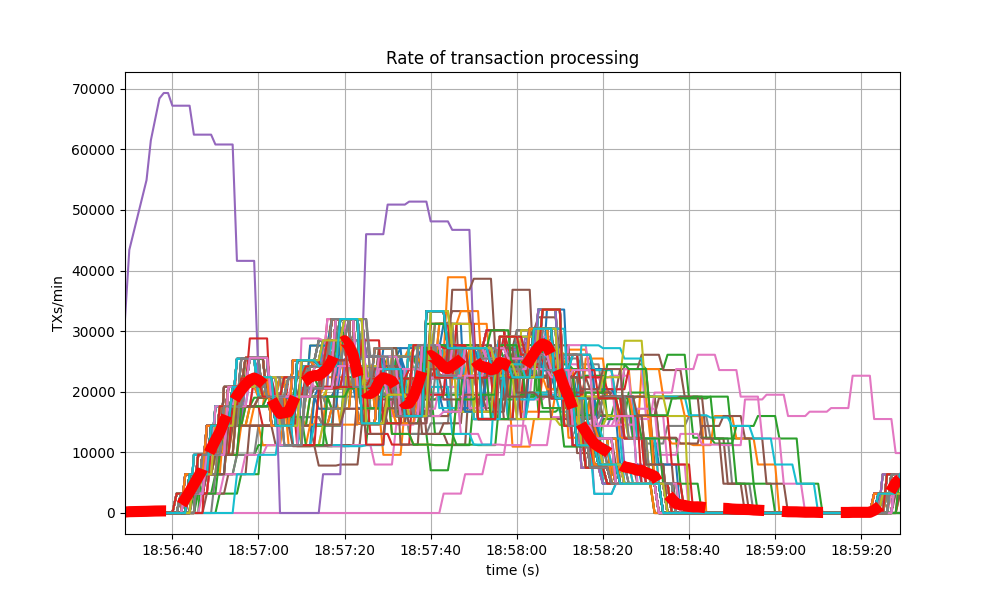

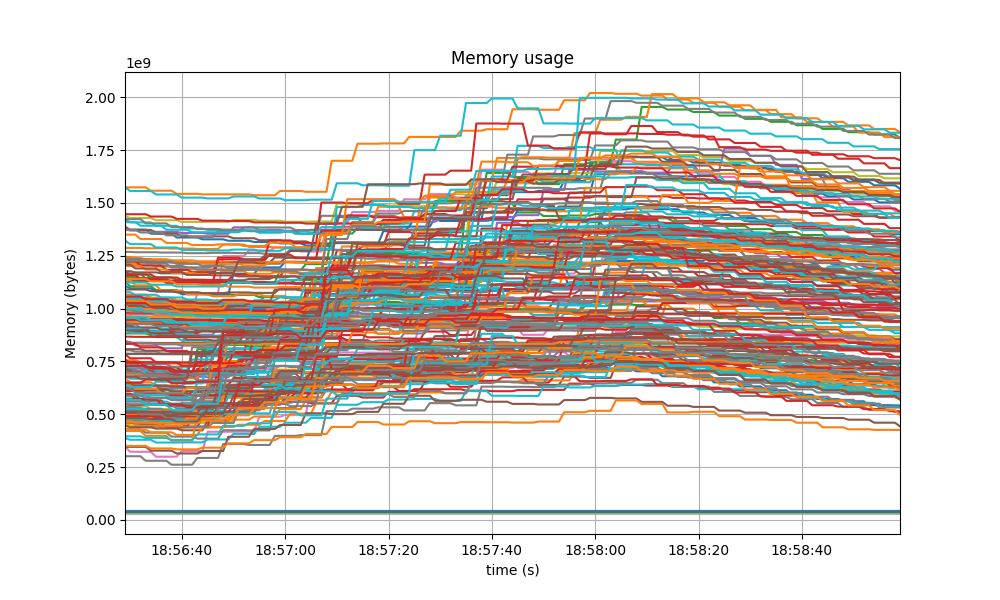

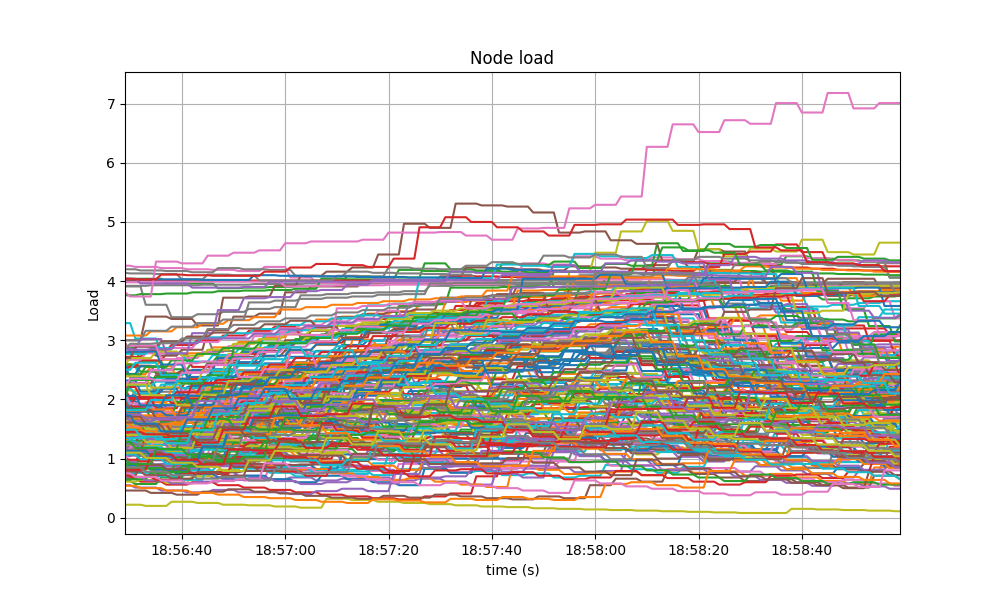

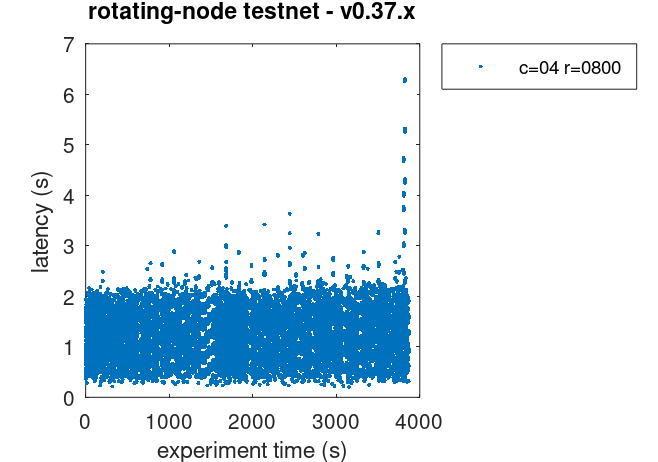

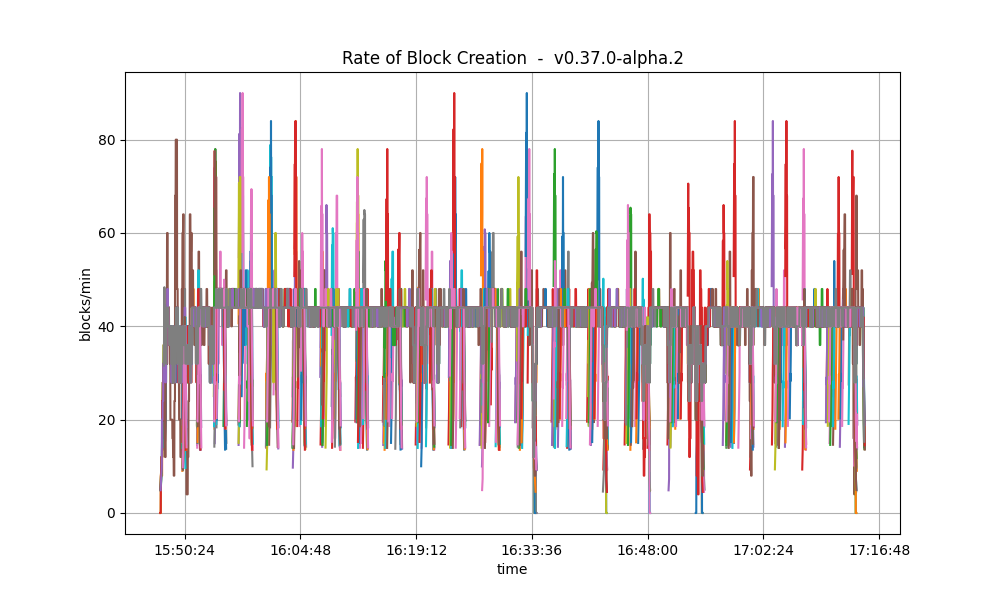

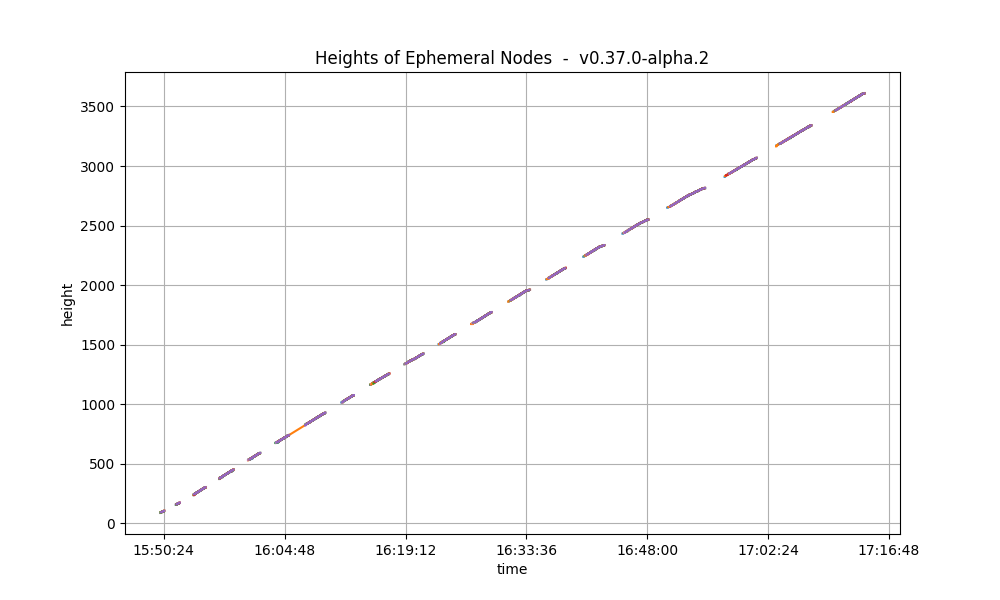

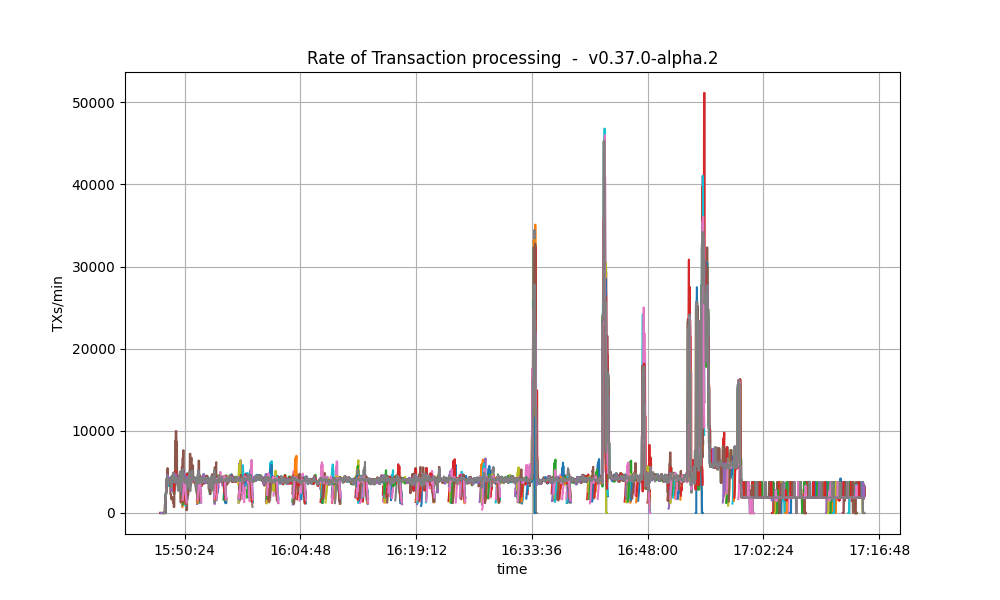

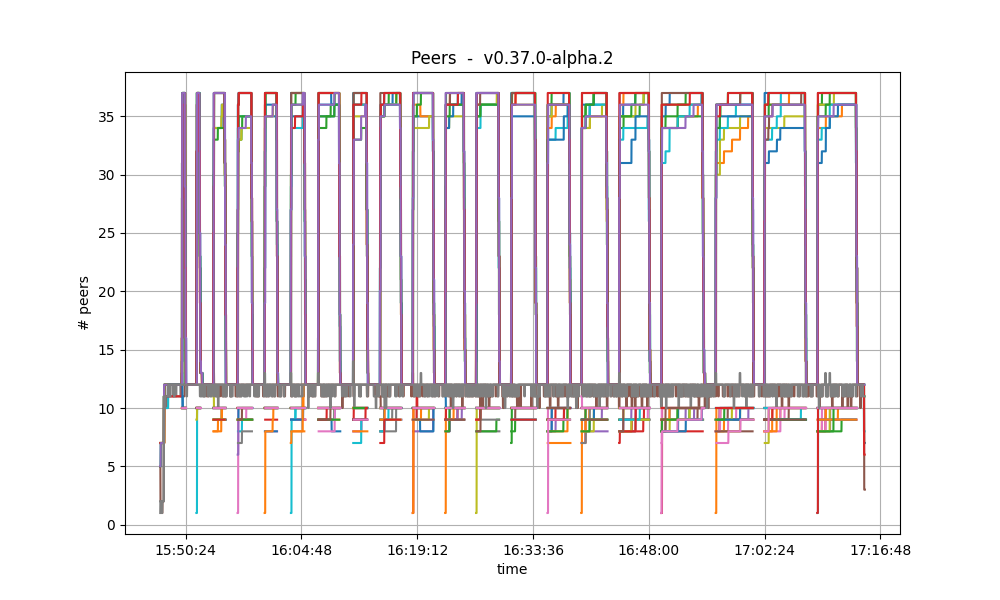

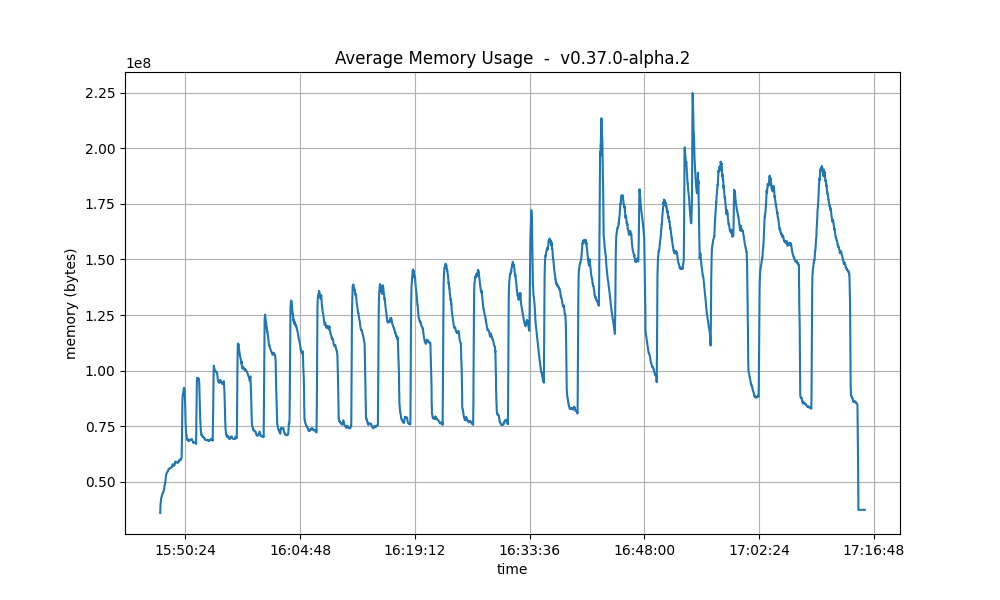

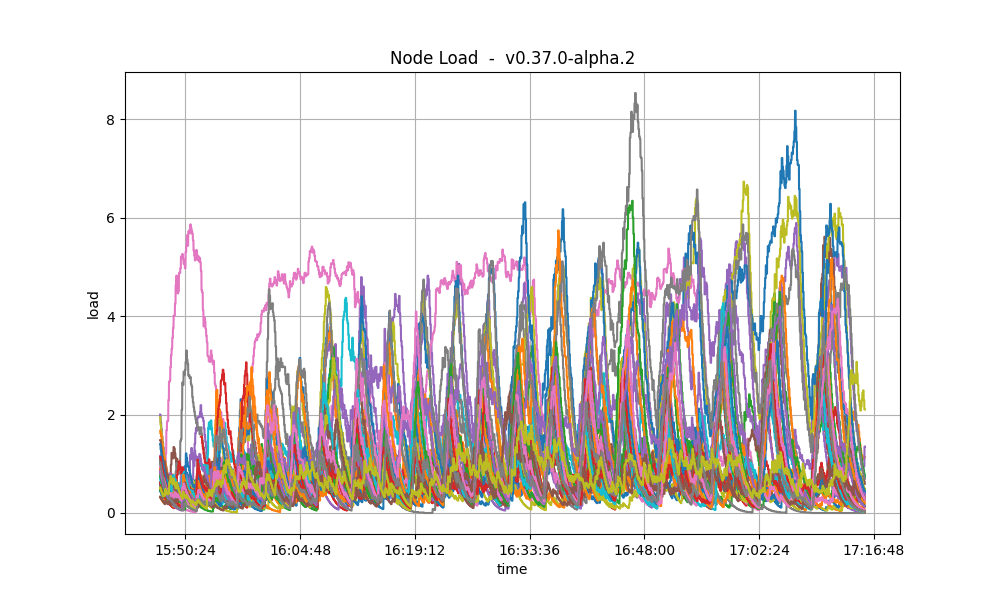

---
order: 1
parent:
  title: CometBFT QA Results v0.38.x
  description: This is a report on the results obtained when running CometBFT v0.38.x on testnets
  order: 5
---

# CometBFT QA Results v0.38.x

This iteration of the QA was run on CometBFT `v0.38.0-alpha.2`, the second
`v0.38.x` version from the CometBFT repository.

The changes with respect to the baseline, `v0.37.0-alpha.3` from Feb 21, 2023,
include the introduction of the `FinalizeBlock` method to complete the full
range of ABCI++ functionality (ABCI 2.0), and several other improvements
described in the
[CHANGELOG](https://github.com/KYVENetwork/cometbft/v38/blob/v0.38.0-alpha.2/CHANGELOG.md).

## Issues discovered

* (critical, fixed) [\#539] and [\#546] - These bugs caused the proposer to crash in
  `PrepareProposal` because it did not have vote extensions when it should have.
  This happened mainly when the proposer was catching up.
* (critical, fixed) [\#562] - There were several bugs in the metrics-related
  logic that were causing panics when the testnets were started.

## 200 Node Testnet

As in other iterations of our QA process, we have used a 200-node network as
testbed, plus nodes to introduce load and collect metrics.

### Saturation point

As in previous iterations of our QA experiments, we first find the transaction
load at which the system begins to show degraded performance. Then we run the
experiments with the system subjected to a load slightly under the saturation
point. The method to identify the saturation point is explained
[here](CometBFT-QA-34.md#saturation-point) and its application to the baseline
is described [here](TMCore-QA-37.md#finding-the-saturation-point).

The following table summarizes the results for the different experiments
(extracted from
[`v038_report_tabbed.txt`](img38/200nodes/v038_report_tabbed.txt)). The X axis
(`c`) is the number of connections created by the load runner process to the
target node. The Y axis (`r`) is the rate or number of transactions issued per
second.

|        |       c=1 |       c=2 |   c=4 |
| ------ | --------: | --------: | ----: |
| r=200  |     17800 | **33259** | 33259 |
| r=400  | **35600** |     41565 | 41384 |
| r=800  |     36831 |     38686 | 40816 |
| r=1600 |     40600 |     45034 | 39830 |

We can observe in the table that the system is saturated beyond the diagonal
defined by the entries `c=1,r=400` and `c=2,r=200`. Entries in the diagonal have
the same amount of transaction load, so we can consider them equivalent. For the
chosen diagonal, the expected number of processed transactions is `1 * 400 tx/s * 89 s = 35600`.
(Note that we use 89 out of 90 seconds of the experiment because the last transaction batch
coincides with the end of the experiment and is thus not sent.) The experiments in the diagonal
below expect double that number, that is, `1 * 800 tx/s * 89 s = 71200`, but the
system is not able to process such load, thus it is saturated.

Therefore, for the rest of these experiments, we chose `c=1,r=400` as the
configuration. We could have chosen the equivalent `c=2,r=200`, which is the same
configuration used in our baseline version, but for simplicity we decided to use
the one with only one connection.

Also note that, compared to the previous QA tests, we have tried to find the
saturation point within a higher range of load values for the rate `r`. In
particular, we ran tests with `r` equal to or above `200`, while in the previous
tests `r` was `200` or lower. Consequently, for our baseline version we did not
run the experiment on the configuration `c=1,r=400`.
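
The diagonal reasoning above can be checked mechanically. The following Python sketch groups the cells of the table by total load `c * r`, compares each cell against the expected `c * r * 89` transactions, and reports the highest load whose cells all processed (nearly) everything they were sent. The function names and the 0.9 tolerance are our own illustrative assumptions, not part of the QA tooling; some tolerance is needed because the `c=2,r=200` cell processed about 93% of its expected 35600 transactions.

```python
from __future__ import annotations

# Hypothetical helper, not part of the QA tooling: it re-derives the
# saturation diagonal from the v0.38 table above.
EXPERIMENT_SECONDS = 89  # 90 s runs; the last batch is never sent
THRESHOLD = 0.9  # assumed tolerance, chosen for illustration only

# (c, r) -> transactions processed, copied from the table above
OBSERVED = {
    (1, 200): 17800, (2, 200): 33259, (4, 200): 33259,
    (1, 400): 35600, (2, 400): 41565, (4, 400): 41384,
    (1, 800): 36831, (2, 800): 38686, (4, 800): 40816,
    (1, 1600): 40600, (2, 1600): 45034, (4, 1600): 39830,
}


def expected_txs(c: int, r: int) -> int:
    """Transactions injected: c connections * r tx/s * 89 s."""
    return c * r * EXPERIMENT_SECONDS


def diagonals(table: dict[tuple[int, int], int]) -> dict[int, list[float]]:
    """Group cells by total load c*r; each entry is observed/expected."""
    groups: dict[int, list[float]] = {}
    for (c, r), processed in table.items():
        groups.setdefault(c * r, []).append(processed / expected_txs(c, r))
    return groups


def highest_unsaturated_load(table: dict[tuple[int, int], int]) -> int | None:
    """Largest c*r such that every cell on that diagonal meets THRESHOLD."""
    ok = [load for load, ratios in diagonals(table).items()
          if all(ratio >= THRESHOLD for ratio in ratios)]
    return max(ok) if ok else None


if __name__ == "__main__":
    for load, ratios in sorted(diagonals(OBSERVED).items()):
        print(f"c*r={load:>5}: " + ", ".join(f"{x:.2f}" for x in ratios))
    print("highest unsaturated c*r:", highest_unsaturated_load(OBSERVED))
```

Run as a script, it prints the observed/expected ratio for each diagonal and reports a highest unsaturated load of `c*r = 400`, which is the `c=1,r=400` / `c=2,r=200` diagonal chosen above; every cell on the `c*r = 800` diagonal stays below 60% of its expected count.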

For comparison, this is the table with the baseline version, where the
saturation point is beyond the diagonal defined by `r=200,c=2` and `r=100,c=4`.

|       |   c=1 |       c=2 |       c=4 |
| ----- | ----: | --------: | --------: |
| r=25  |  2225 |      4450 |      8900 |
| r=50  |  4450 |      8900 |     17800 |
| r=100 |  8900 |     17800 | **35600** |
| r=200 | 17800 | **35600** |     38660 |

### Latencies

The following figure plots the latencies of the experiment carried out with the
configuration `c=1,r=400`.

For reference, the following figure shows the latencies of one of the
experiments for `c=2,r=200` in the baseline.

As can be seen, in most cases the latencies are very similar, and in some cases,
the baseline has slightly higher latencies than the version under test. Thus,
from this small experiment, we can say that the latencies measured on the two
versions are equivalent, or at least that the version under test is not worse
than the baseline.

### Prometheus Metrics on the Chosen Experiment

This section further examines key metrics extracted from Prometheus data for the
chosen experiment with configuration `c=1,r=400`.

#### Mempool Size

The mempool size, a count of the number of transactions in the mempool, was
shown to be stable and homogeneous at all full nodes. It did not exhibit any
unconstrained growth. The plot below shows the evolution over time of the
cumulative number of transactions inside all full nodes' mempools at a given
time.

The following picture shows the evolution of the average mempool size over all
full nodes, which mostly oscillates between 1000 and 2500 outstanding
transactions.

The peaks observed coincide with the moments when some nodes reached round 1 of
consensus (see below).

The behavior is similar to that observed in the baseline, presented next.

#### Peers

The number of peers was stable at all nodes. It was higher for the seed nodes
(around 140) than for the rest (between 20 and 70 for most nodes). The red
dashed line denotes the average value.

Just as in the baseline, shown next, the fact that non-seed nodes reach more
than 50 peers is due to [\#9548].

#### Consensus Rounds per Height

Most heights took just one round, that is, round 0, but some nodes needed to
advance to round 1.

The following specific run of the baseline required some nodes to reach round 1.

#### Blocks Produced per Minute, Transactions Processed per Minute

The following plot shows the rate at which blocks were created, from the point
of view of each node. That is, it shows when each node learned that a new block
had been agreed upon.

For most of the time when load was being applied to the system, most of the
nodes stayed around 20 blocks/minute.

The spike to more than 100 blocks/minute is due to a slow node catching up.

The baseline exhibited similar behavior.

The collective spike on the right of the graph marks the end of the load
injection, when blocks become smaller (empty) and impose less strain on the
network. This behavior is reflected in the following graph, which shows the
number of transactions processed per minute.

The following is the transaction processing rate of the baseline, which is
similar to the one above.

#### Memory Resident Set Size

The following graph shows the Resident Set Size of all monitored processes, with
maximum memory usage of 1.6GB, slightly lower than that of the baseline, shown after.

A similar behavior was shown in the baseline, with slightly higher memory
usage.

The memory of all processes went down as the load was removed, showing no signs
of unconstrained growth.

#### CPU utilization

##### Comparison to baseline

The best metric from Prometheus to gauge CPU utilization in a Unix machine is
`load1`, as it usually appears in the [output of
`top`](https://www.digitalocean.com/community/tutorials/load-average-in-linux).

The load is contained below 5 on most nodes, as seen in the following graph.

The baseline had a similar behavior.

##### Impact of vote extension signature verification

It is important to notice that the baseline (`v0.37.x`) does not implement vote extensions,
whereas the version under test (`v0.38.0-alpha.2`) _does_ implement them, and they are
configured to be activated from height 1.
The e2e application used in these tests verifies all received vote extension signatures (up to 175)
twice per height: upon `PrepareProposal` (for sanity) and upon `ProcessProposal` (to demonstrate how
real applications can do it).

The fact that there is no noticeable difference in the CPU utilization plots of
the baseline and `v0.38.0-alpha.2` means that re-verifying up to 175 vote extension signatures twice
(besides the initial verification done by CometBFT when receiving them from the network)
has no performance impact in the current version of the system: the bottlenecks are elsewhere.
Thus, we should focus on optimizing other parts of the system: the ones that cause the current
bottlenecks (mempool gossip duplication, leaner proposal structure, optimized consensus gossip).

### Test Results

The comparison against the baseline results shows that both scenarios had similar
numbers and are therefore equivalent.

A conclusion of these tests is shown in the following table, along with the
commit versions used in the experiments.

| Scenario | Date       | Version                                                    | Result |
| -------- | ---------- | ---------------------------------------------------------- | ------ |
| 200-node | 2023-05-21 | v0.38.0-alpha.2 (1f524d12996204f8fd9d41aa5aca215f80f06f5e) | Pass   |

## Rotating Node Testnet

We use `c=1,r=400` as load, which can be considered a safe workload, as it was close to (but below)
the saturation point in the 200 node testnet. This testnet has fewer nodes (10 validators and 25 full nodes).

Importantly, the baseline considered in this section is `v0.37.0-alpha.2` (Tendermint Core),
which is **different** from the one used in the [previous section](method.md#200-node-testnet).
The reason is that this testnet was not re-tested for `v0.37.0-alpha.3` (CometBFT),
since it was not deemed necessary.

Unlike in the baseline tests, the version of CometBFT used for these tests is _not_ affected by [\#9539],
which was fixed right after the rotating testnet was run for `v0.37`.
As a result, the load introduced in this iteration of the test is higher as transactions do not get rejected.

### Latencies

The plot of all latencies can be seen here.

It is similar to the baseline.

The average increase of about 1 second with respect to the baseline is due to the higher
transaction load produced (remember the baseline was affected by [\#9539], whereby most transactions
produced were rejected by `CheckTx`).

### Prometheus Metrics

The set of metrics shown here roughly matches those shown on the baseline (`v0.37`) for the same experiment.
We also show the baseline results for comparison.

#### Blocks and Transactions per minute

The following plot shows the blocks produced per minute.

This is similar to the baseline, shown below.

The following plot shows only the heights reported by ephemeral nodes, both when they were blocksyncing
and when they were running consensus.
The second plot is the baseline plot for comparison. The baseline lacks the heights when the nodes were
blocksyncing as that metric was implemented afterwards.

We see that heights follow a similar pattern in both plots: they grow in length as the experiment advances.

The following plot shows the transactions processed per minute.

For comparison, this is the baseline plot.

We can see the rate is much lower in the baseline plot.
The reason is that the baseline was affected by [\#9539], whereby `CheckTx` rejected most transactions
produced by the load runner.

#### Peers

The plot below shows the evolution of the number of peers throughout the experiment.

This is the baseline plot, for comparison.

The plotted values and their evolution are comparable in both plots.

For further details on these plots, see [this section](./TMCore-QA-34.md#peers-1).

#### Memory Resident Set Size

The average Resident Set Size (RSS) over all processes is notably bigger on `v0.38.0-alpha.2` than on the baseline.
The reason for this is, again, the fact that `CheckTx` was rejecting most transactions submitted on the baseline
and therefore the overall transaction load was lower on the baseline.
This is consistent with the difference seen in the transaction rate plots
in the [previous section](#blocks-and-transactions-per-minute).

#### CPU utilization

The plots show metric `load1` for all nodes for `v0.38.0-alpha.2` and for the baseline.

In both cases, it is contained under 5 most of the time, which is considered normal load.
The load seems to be higher on average on `v0.38.0-alpha.2` because of the larger
number of transactions processed per minute as compared to the baseline.

### Test Result

| Scenario | Date       | Version                                                    | Result |
| -------- | ---------- | ---------------------------------------------------------- | ------ |
| Rotating | 2023-05-23 | v0.38.0-alpha.2 (e9abb116e29beb830cf111b824c8e2174d538838) | Pass   |

## Vote Extensions Testbed

In this testnet we evaluate the effect of varying the sizes of vote extensions added to pre-commit votes on the performance of CometBFT.
The test uses the Key/Value store in our [end-to-end] test framework, which has the following simplified flow:

1. When validators send their pre-commit votes for a block of height $i$, they first extend the vote as they see fit in `ExtendVote`.
2. When a proposer for height $i+1$ creates a block to propose, in `PrepareProposal`, it prepends the transactions with a special transaction, which modifies a reserved key. The transaction value is derived from the extensions from height $i$; in this example, the value is derived from the vote extensions and includes the set itself, hex-encoded as a string.
3. When a validator sends their pre-vote for the block proposed in $i+1$, they first double check in `ProcessProposal` that the special transaction in the block was properly built by the proposer.
4. When validators send their pre-commit for the block proposed in $i+1$, they first extend the vote, and the steps repeat for heights $i+2$ and so on.

For this test, extensions are random sequences of bytes with a predefined `vote_extension_size`.
Hence, two effects are seen on the network.
First, pre-commit vote message sizes will increase by the specified `vote_extension_size` and, second, block messages will increase by twice `vote_extension_size` (given the hex encoding of extensions) times the number of extensions received, i.e. at least 2/3 of 175.

All tests were performed on commit d5baba237ab3a04c1fd4a7b10927ba2e6a2aab27, which corresponds to v0.38.0-alpha.2 plus commits that add the ability to vary the vote extension sizes to the test application.
Although the same commit is used for the baseline, in this configuration the behavior observed is the same as in the "vanilla" v0.38.0-alpha.2 test application, that is, vote extensions are 8-byte integers, compressed as variable-size integers instead of a random sequence of size `vote_extension_size`.

The following table summarizes the test cases.

| Name     | Extension Size (bytes) | Date       |
| -------- | ---------------------- | ---------- |
| baseline | 8 (varint)             | 2023-05-26 |
| 2k       | 2048                   | 2023-05-29 |
| 4k       | 4094                   | 2023-05-29 |
| 8k       | 8192                   | 2023-05-26 |
| 16k      | 16384                  | 2023-05-26 |
| 32k      | 32768                  | 2023-05-26 |

### Latency

The following figures show the latencies observed on each of the 5 runs of each experiment;
the red line shows the average of each run.
It can be easily seen from these graphs that the larger the vote extension size, the more latency varies and the more common higher latencies become.
Even in the case of extensions of size 2k, the mean latency goes from below 5s to nearly 10s.

**Baseline**

**2k**

**4k**

**8k**

**16k**

**32k**

The following graphs combine all the runs of the same experiment.
They show that latency variation greatly increases as the vote extension size increases.
In particular, for the 16k and 32k cases, the system goes through large gaps without transaction delivery.
As discussed later, this is the result of heights taking multiple rounds to finish and new transactions being held until the next block is agreed upon.

|          |     |
| -------- | --- |
| baseline | 2k  |
| 4k       | 8k  |
| 16k      | 32k |

### Blocks and Transactions per minute

The following plots show the blocks produced per minute and transactions processed per minute.
We have divided the presentation into an overview section, which shows the metrics for the whole experiment (five runs), and a detailed sample, which shows the metrics for the first of the five runs.
We repeat the approach for the other metrics as well.
The dashed red line shows the moving average over a 20s window.

#### Overview

It is clear from the overview plots that as the vote extension sizes increase, the rate of block creation decreases.
Although the rate of transaction processing also decreases, it does not seem to decrease as fast.

| Experiment   | Block creation rate | Transaction rate |
| ------------ | ------------------- | ---------------- |
| **baseline** |                     |                  |
| **2k**       |                     |                  |
| **4k**       |                     |                  |
| **8k**       |                     |                  |
| **16k**      |                     |                  |
| **32k**      |                     |                  |

#### First run

| Experiment   | Block creation rate | Transaction rate |
| ------------ | ------------------- | ---------------- |
| **baseline** |                     |                  |
| **2k**       |                     |                  |
| **4k**       |                     |                  |
| **8k**       |                     |                  |
| **16k**      |                     |                  |
| **32k**      |                     |                  |

### Number of rounds

The effect of vote extensions is also felt on the number of rounds needed to reach consensus.
The following graphs show the highest round number required to reach consensus during the whole experiment.

In the baseline and with small vote extensions, most blocks were agreed upon during round 0.
As the load increases, more and more rounds were required.
In the 32k case, we see round 5 being reached frequently.

| Experiment   | Number of Rounds per block |
| ------------ | -------------------------- |
| **baseline** |                            |
| **2k**       |                            |
| **4k**       |                            |
| **8k**       |                            |
| **16k**      |                            |
| **32k**      |                            |

We conjecture that the reason is that the timeouts used are inadequate for the extra traffic in the network.

### CPU

The CPU usage reached the same peaks on all tests, but the following graphs show that with larger vote extensions, nodes take longer to reduce their CPU usage.
This could mean that a backlog of processing is forming during the execution of the tests with larger extensions.

| Experiment   | CPU |
| ------------ | --- |
| **baseline** |     |
| **2k**       |     |
| **4k**       |     |
| **8k**       |     |
| **16k**      |     |
| **32k**      |     |

### Resident Memory

The same conclusion reached for CPU usage may be drawn for memory.
That is, a backlog of work is formed during the tests and catching up (freeing of memory) happens after the test is done.

A more worrying trend is that the bottom of the memory usage seems to increase in between runs.
We have investigated this in longer runs and confirmed that there is no such trend.

| Experiment   | Resident Set Size |
| ------------ | ----------------- |
| **baseline** |                   |
| **2k**       |                   |
| **4k**       |                   |
| **8k**       |                   |
| **16k**      |                   |
| **32k**      |                   |

### Mempool size

This metric shows how many transactions are outstanding in the nodes' mempools.
Observe that in all runs, the average number of transactions in the mempool quickly drops to near zero between runs.

| Experiment   | Mempool Size |
| ------------ | ------------ |
| **baseline** |              |
| **2k**       |              |
| **4k**       |              |
| **8k**       |              |
| **16k**      |              |
| **32k**      |              |

### Results

| Scenario | Date       | Version                                                                              | Result |
| -------- | ---------- | ------------------------------------------------------------------------------------ | ------ |
| VESize   | 2023-05-23 | v0.38.0-alpha.2 + varying vote extensions (9fc711b6514f99b2dc0864fc703cb81214f01783) | N/A    |

[\#9539]: https://github.com/tendermint/tendermint/issues/9539
[\#9548]: https://github.com/tendermint/tendermint/issues/9548
[\#539]: https://github.com/KYVENetwork/cometbft/v38/issues/539
[\#546]: https://github.com/KYVENetwork/cometbft/v38/issues/546
[\#562]: https://github.com/KYVENetwork/cometbft/v38/issues/562
[end-to-end]: https://github.com/KYVENetwork/cometbft/v38/tree/main/test/e2e