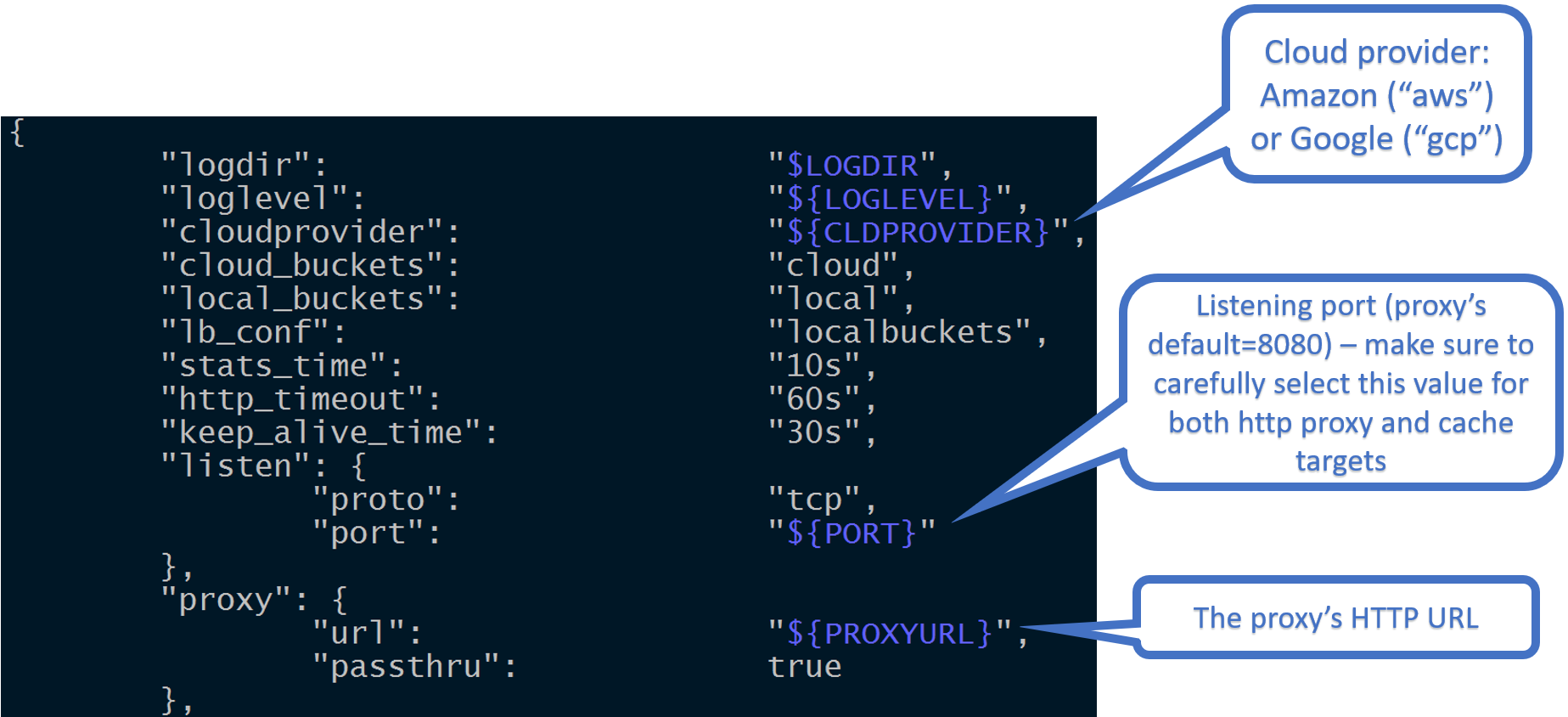

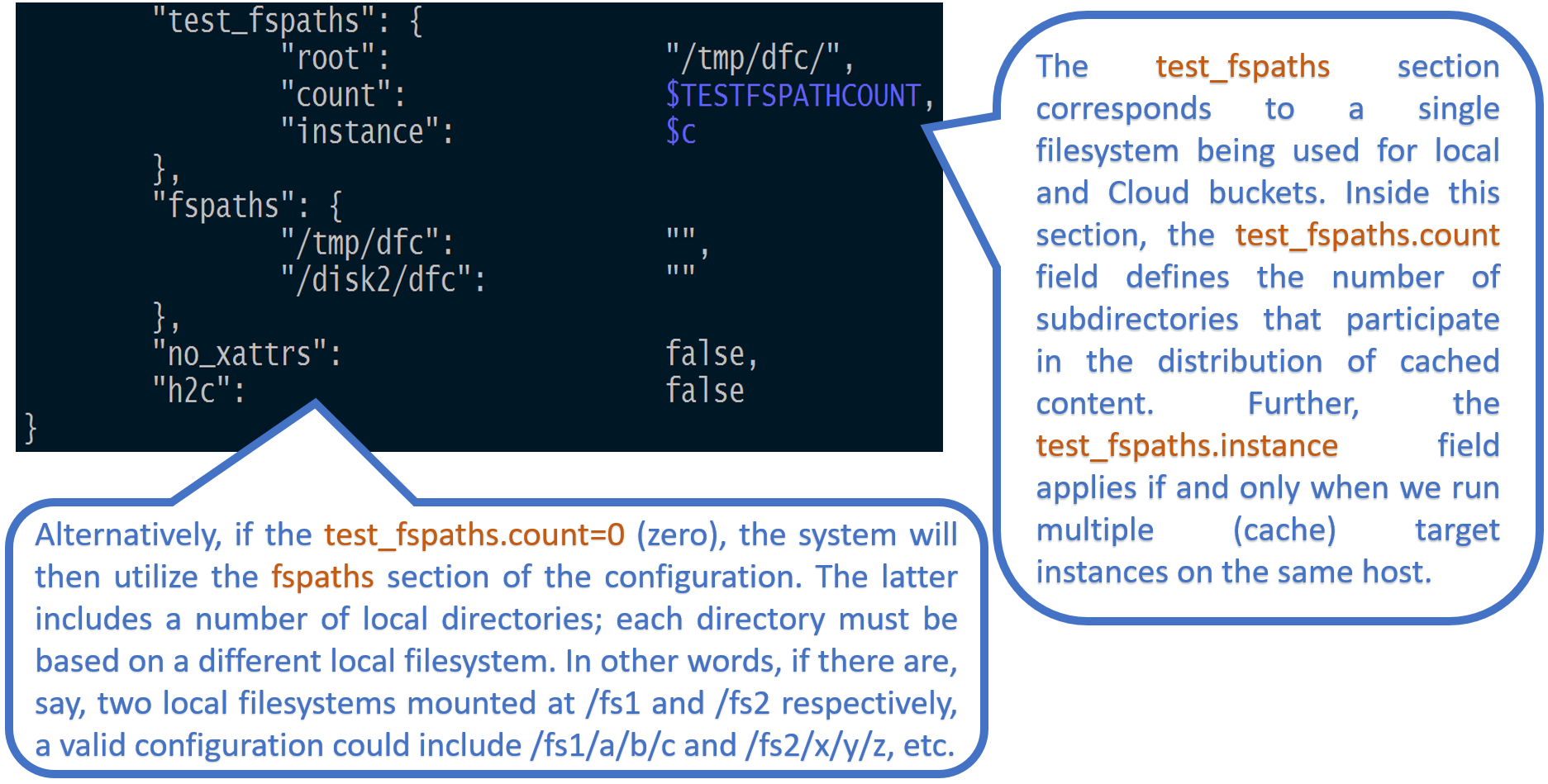

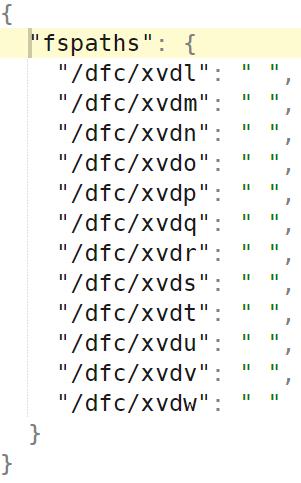

---
layout: post
title: CONFIGURATION
permalink: /docs/configuration
redirect_from:
- /configuration.md/
- /docs/configuration.md/
---

AIS configuration comprises:

| Name | Scope | Comment |
| --- | --- | --- |
| [ClusterConfig](https://github.com/NVIDIA/aistore/blob/main/cmn/config.go#L48) | Global | [Named sections](https://github.com/NVIDIA/aistore/blob/main/deploy/dev/local/aisnode_config.sh#L13) containing name-value knobs |
| [LocalConfig](https://github.com/NVIDIA/aistore/blob/main/cmn/config.go#L49) | Local | Allows overriding global defaults on a per-node basis |

Cluster-wide (global) configuration is protected, namely: checksummed, versioned, and safely replicated. In effect, global config defines cluster-wide defaults inherited by each node joining the cluster.

Local config includes:

1. the node's own hostnames (or IP addresses) and [mountpaths](overview.md#terminology) (data drives);
2. optionally, names and values that were changed for *this* specific node. For each node in the cluster, the corresponding capability (dubbed *config-override*) boils down to:
   * **inheriting** cluster configuration, and
   * optionally, **locally overriding** assorted inherited defaults (see usage examples below).

The majority of the configuration knobs can be changed at runtime (and at any time). A few read-only variables are explicitly [marked](https://github.com/NVIDIA/aistore/blob/main/cmn/config.go) in the source; any attempt to modify those at runtime will return a "read-only" error message.

## CLI

For the most part, commands to view and update (CLI, cluster, node) configuration can be found [here](/docs/cli/config.md).

The [same document](/docs/cli/config.md) also contains a brief theory of operation, command descriptions, numerous usage examples, and more.
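The inherit-and-override model described at the top of this document can be pictured as a section-by-section merge. The following is a minimal sketch, assuming both configurations are plain nested dicts (section, then knob, then value); it is not how `aisnode` implements config-override, and `effective_config`, `flatten`, and `show_with_defaults` are hypothetical helpers. The last one mimics the `DEFAULT` column the CLI prints for a node, where a hyphen means the value is inherited.

```python
from copy import deepcopy


def effective_config(cluster: dict, overrides: dict) -> dict:
    """Apply per-node overrides on top of cluster-wide defaults, section by section."""
    merged = deepcopy(cluster)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # A named section such as "timeout": merge knob by knob.
            merged[key] = effective_config(merged[key], value)
        else:
            # A leaf knob: the local value replaces the inherited one.
            merged[key] = deepcopy(value)
    return merged


def flatten(conf: dict, prefix: str = "") -> dict:
    """{"timeout": {"startup_time": "1m"}} -> {"timeout.startup_time": "1m"}."""
    flat = {}
    for key, value in conf.items():
        name = prefix + key
        if isinstance(value, dict):
            flat.update(flatten(value, name + "."))
        else:
            flat[name] = value
    return flat


def show_with_defaults(cluster: dict, overrides: dict) -> dict:
    """Map knob -> (effective value, default); default is "-" unless an override changed it."""
    inherited = flatten(cluster)
    rows = {}
    for name, value in flatten(effective_config(cluster, overrides)).items():
        default = inherited.get(name)
        rows[name] = (value, default if default is not None and default != value else "-")
    return rows


if __name__ == "__main__":
    cluster = {"timeout": {"startup_time": "1m", "max_keepalive": "4s"}}
    node_overrides = {"timeout": {"startup_time": "90s"}}
    for name, (value, default) in sorted(show_with_defaults(cluster, node_overrides).items()):
        print(f"{name:<24} {value:<6} {default}")
```

Note that the cluster dict is never mutated: each node's view is derived from the shared defaults plus its own, usually small, set of overrides.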
> **Important:** as input, the CLI accepts both plain text and JSON-formatted values. For the latter, make sure to enclose the (JSON value) argument in single quotes, e.g.:

```console
$ ais config cluster backend.conf='{"gcp":{}, "aws":{}}'
```

To show the update in plain text and JSON:

```console
$ ais config cluster backend.conf --json

    "backend": {"aws":{},"gcp":{}}

$ ais config cluster backend.conf
PROPERTY         VALUE
backend.conf     map[aws:map[] gcp:map[]]
```

See also:

* [Backend providers and supported backends](/docs/providers.md)

## Configuring for production

Configuring an AIS cluster for production requires careful consideration. First and foremost, there are assorted [performance](performance.md)-related recommendations.

Optimal performance settings will always depend on your (hardware, network) environment. Speaking of networking, AIS supports 3 (**three**) logical networks and will, therefore, benefit performance-wise if provisioned with up to 3 isolated physical networks or VLANs.
The logical networks are:

* user (aka public)
* intra-cluster control
* intra-cluster data

with the corresponding [JSON names](/deploy/dev/local/aisnode_config.sh), respectively:

* `hostname`
* `hostname_intra_control`
* `hostname_intra_data`

### Example

```console
$ ais config node <TAB-TAB>

p[ctfooJtb]   p[qGfooQSf]   p[KffoosQR]   p[ckfooUEX]   p[DlPmfooU]   t[MgHfooNG]   t[ufooIDPc]   t[tFUfooCO]   t[wSJfoonU]   t[WofooQEW]
p[pbarqYtn]   p[JedbargG]   p[WMbargGF]   p[barwMoEU]   p[OUgbarGf]   t[tfNbarFk]   t[fbarswQP]   t[vAWbarPv]   t[Kopbarra]   t[fXbarenn]

## in aistore, each node has "inherited" and "local" configuration
## choose "local" to show the (selected) target's disks and network

$ ais config node t[fbarswQP] local --json
{
    "confdir": "/etc/ais",
    "log_dir": "/var/log/ais",
    "host_net": {
        "hostname": "10.51.156.130",
        "hostname_intra_control": "ais-target-5.nvmetal.net",
        "hostname_intra_data": "ais-target-5.nvmetal.net",
        "port": "51081",
        "port_intra_control": "51082",
        "port_intra_data": "51083"
    },
    "fspaths": {"/ais/nvme0n1": "","/ais/nvme1n1": "","/ais/nvme2n1": ""},
    "test_fspaths": {
        "root": "",
        "count": 0,
        "instance": 0
    }
}
```

### Multi-homing

All aistore nodes - both ais targets and ais gateways - can be deployed as multi-homed servers. Of course, the capability is most relevant for the targets, which may be required (and expected) to move a lot of traffic as fast as possible.
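With multi-homing, the public `hostname` holds several comma-separated IPv4 addresses rather than one, as the example that follows shows. Scripts that consume `ais config node ... local --json` output should therefore not assume a single address. Here is a minimal sketch of a tolerant parser; `public_addresses` is a hypothetical helper, not part of AIS, and it assumes the comma-plus-optional-space format used in this document's examples.

```python
def public_addresses(host_net: dict) -> list:
    """Split the `hostname` value of a host_net section into individual addresses.

    A single-homed node yields one address; a multi-homed node yields several.
    Whitespace around each comma-separated entry is ignored, as are empty entries.
    """
    raw = host_net.get("hostname", "")
    return [addr.strip() for addr in raw.split(",") if addr.strip()]


if __name__ == "__main__":
    single = {"hostname": "10.51.156.130", "port": "51081"}
    multi = {"hostname": "10.51.156.130, 10.51.156.131, 10.51.156.132", "port": "51081"}
    print(public_addresses(single))
    print(public_addresses(multi))
```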
Building on the previous section's example, here's how it may look:

```console
$ ais config node t[fbarswQP] local host_net --json
{
    "host_net": {
        "hostname": "10.51.156.130, 10.51.156.131, 10.51.156.132",
        "hostname_intra_control": "ais-target-5.nvmetal.net",
        "hostname_intra_data": "ais-target-5.nvmetal.net",
        "port": "51081",
        "port_intra_control": "51082",
        "port_intra_data": "51083"
    }
}
```

**Note**: additional NICs can be added (or removed) transparently for users, i.e., without requiring (or causing) any other changes.

In the example above, `t[fbarswQP]` becomes a multi-homed device equally utilizing all 3 (three) IPv4 interfaces.

## References

* For Kubernetes deployment, please refer to the separate [ais-k8s](https://github.com/NVIDIA/ais-k8s) repository, which also contains the [AIS/K8s Operator](https://github.com/NVIDIA/ais-k8s/blob/master/operator/README.md) and its configuration-defining [resources](https://github.com/NVIDIA/ais-k8s/blob/master/operator/pkg/resources/cmn/config.go).
* To configure an optional AIStore authentication server, run `AIS_AUTHN_ENABLED=true make deploy`. For information on the AuthN server, please see the [AuthN documentation](/docs/authn.md).
* AIS [CLI](/docs/cli.md) is an easy-to-use command-line management and monitoring tool. To get started with the CLI, run `make cli` (which builds the `ais` executable) and follow the prompts.

## Cluster and Node Configuration

The first thing to keep in mind is that there are 3 (three) separate, and separately maintained, pieces:

1. Cluster configuration that comprises global defaults
2. Node (local) configuration
3. Node's local overrides of global defaults

Specifically:

## Cluster Config

To show and/or change global config, simply type one of:

```console
# 1. show cluster config
$ ais show cluster config

# 2. show cluster config in JSON format
$ ais show cluster config --json

# 3. show cluster-wide defaults for all variables prefixed with "time"
$ ais show config cluster time
# or, same:
$ ais show cluster config time
PROPERTY                         VALUE
timeout.cplane_operation         2s
timeout.max_keepalive            4s
timeout.max_host_busy            20s
timeout.startup_time             1m
timeout.send_file_time           5m
timeout.transport_idle_term      4s

# 4. for all nodes in the cluster set startup timeout to 2 minutes
$ ais config cluster timeout.startup_time=2m
config successfully updated
```

Typically, when we deploy a new AIS cluster, we use a configuration template that contains all the defaults (see, for example, the [JSON template](/deploy/dev/local/aisnode_config.sh)). Configuration sections in this template, and the knobs within those sections, should be self-explanatory, and the majority of them, except maybe just a few, have pre-assigned default values.

## Node configuration

As stated above, each node in the cluster inherits global configuration with the capability to override the latter locally.

There are also node-specific settings, such as:

* log directories
* network configuration, including the node's hostname(s) or IP addresses
* the node's [mountpaths](#managing-mountpaths)

> Since AIS supports n-way mirroring and erasure coding, we typically recommend not using LVMs and hardware RAIDs.

### Example: show node's configuration

```console
$ ais show config t[CCDpt8088]
PROPERTY                         VALUE                        DEFAULT
auth.enabled                     false                        -
auth.secret                      aBitLongSecretKey            -
backend.conf                     map[aws:map[] gcp:map[]]     -
checksum.enable_read_range       false                        -
checksum.type                    xxhash                       -
checksum.validate_cold_get       true                         -
checksum.validate_obj_move       false                        -
checksum.validate_warm_get       false                        -
...
...
(Hint: use `--type` to select the node config's type to show: 'cluster', 'local', 'all'.)
...
...
```

### Example: same as above in JSON format

```console
$ ais show config CCDpt8088 --json | tail -20
    "lastupdate_time": "2021-03-20 18:00:20.393881867 -0700 PDT m=+2907.143584987",
    "uuid": "ZzCknLkMi",
    "config_version": "3",
    "confdir": "/ais",
    "log_dir": "/tmp/ais/log",
    "host_net": {
        "hostname": "",
        "hostname_intra_control": "",
        "hostname_intra_data": "",
        "port": "51081",
        "port_intra_control": "51082",
        "port_intra_data": "51083"
    },
    "fspaths": {"/ais/mp1": "","/ais/mp2": "","/ais/mp3": "","/ais/mp4": ""},
    "test_fspaths": {
        "root": "/tmp/ais",
        "count": 0,
        "instance": 0
    }
```

See also:
* [local playground with two data drives](https://github.com/NVIDIA/aistore/blob/main/deploy/dev/local/README.md)

### Example: use the `--type` option to show only local config

```console
$ ais show config koLAt8081 --type local
PROPERTY                         VALUE
confdir                          /ais
log_dir                          /tmp/ais/log
host_net.hostname
host_net.hostname_intra_control
host_net.hostname_intra_data
host_net.port                    51081
host_net.port_intra_control      51082
host_net.port_intra_data         51083
fspaths.paths                    /ais/mp1,/ais/mp2,/ais/mp3,/ais/mp4
test_fspaths.root                /tmp/ais
test_fspaths.count               0
test_fspaths.instance            0
```

### Local override (of global defaults)

Example:

```console
$ ais show config t[CCDpt8088] timeout
# or, same:
$ ais config node t[CCDpt8088] timeout

PROPERTY                     VALUE    DEFAULT
timeout.cplane_operation     2s       -
timeout.join_startup_time    3m       -
timeout.max_host_busy        20s      -
timeout.max_keepalive        4s       -
timeout.send_file_time       5m       -
timeout.startup_time         1m       -

$ ais config node t[CCDpt8088] timeout.startup_time=90s
config for node "CCDpt8088" successfully updated

$ ais config node t[CCDpt8088] timeout

PROPERTY                     VALUE    DEFAULT
timeout.cplane_operation     2s       -
timeout.join_startup_time    3m       -
timeout.max_host_busy        20s      -
timeout.max_keepalive        4s       -
timeout.send_file_time       5m       -
timeout.startup_time         1m30s    1m
```

In the `DEFAULT` column above, a hyphen (`-`) indicates that the corresponding value is inherited and, as far as node `CCDpt8088` is concerned, remains unchanged.

## Rest of this document is structured as follows

- [Basics](#basics)
- [Startup override](#startup-override)
- [Managing mountpaths](#managing-mountpaths)
- [Disabling extended attributes](#disabling-extended-attributes)
- [Enabling HTTPS](#enabling-https)
- [Filesystem Health Checker](#filesystem-health-checker)
- [Networking](#networking)
- [Reverse proxy](#reverse-proxy)
- [Curl examples](#curl-examples)
- [CLI examples](#cli-examples)

The picture illustrates one section of the configuration template that, in part, includes the listening port:

Further, the `test_fspaths` section (see below) corresponds to a **single local filesystem being partitioned** between both *local* and *Cloud* buckets. In other words, the `test_fspaths` configuration option is intended strictly for development.

In production, we use an alternative configuration called `fspaths`: the section of the [config](/deploy/dev/local/aisnode_config.sh) that includes a number of local directories, whereby each directory is based on a different local filesystem.

For `fspath` and `mountpath` terminology and details, please see section [Managing Mountpaths](#managing-mountpaths) in this document.
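Since each production `fspath` is meant to live on its own local filesystem, a pre-deployment sanity check can group the configured directories by device ID (`st_dev` as reported by `os.stat`). Any group with more than one directory means those directories share a filesystem, which is acceptable only for development. This is a minimal sketch with a hypothetical helper name; it assumes the directories already exist on the node, and it will not notice two partitions of the same physical disk (those have different `st_dev` values).

```python
import os
from collections import defaultdict


def shared_filesystems(fspaths):
    """Return groups of fspath directories that reside on the same filesystem.

    Two directories share a filesystem when os.stat() reports the same st_dev.
    An empty result means every directory sits on its own filesystem.
    """
    by_device = defaultdict(list)
    for path in fspaths:
        by_device[os.stat(path).st_dev].append(path)
    return [sorted(paths) for paths in by_device.values() if len(paths) > 1]


if __name__ == "__main__":
    # Hypothetical production layout; substitute the node's actual fspaths.
    fspaths = ["/ais/nvme0n1", "/ais/nvme1n1", "/ais/nvme2n1"]
    existing = [p for p in fspaths if os.path.isdir(p)]
    for group in shared_filesystems(existing):
        print("share a filesystem:", ", ".join(group))
```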
An example of 12 fspaths (and 12 local filesystems) follows below:

### Example: 3 NVMe drives

```console
$ ais config node <TAB-TAB>

p[ctfooJtb]   p[qGfooQSf]   p[KffoosQR]   p[ckfooUEX]   p[DlPmfooU]   t[MgHfooNG]   t[ufooIDPc]   t[tFUfooCO]   t[wSJfoonU]   t[WofooQEW]
p[pbarqYtn]   p[JedbargG]   p[WMbargGF]   p[barwMoEU]   p[OUgbarGf]   t[tfNbarFk]   t[fbarswQP]   t[vAWbarPv]   t[Kopbarra]   t[fXbarenn]

## in aistore, each node has "inherited" and "local" configuration
## choose "local" to show the target's own disks and network

$ ais config node t[fbarswQP] local --json
{
    "confdir": "/etc/ais",
    "log_dir": "/var/log/ais",
    "host_net": {
        "hostname": "10.51.156.130",
        "hostname_intra_control": "ais-target-5.nvmetal.net",
        "hostname_intra_data": "ais-target-5.nvmetal.net",
        "port": "51081",
        "port_intra_control": "51082",
        "port_intra_data": "51083"
    },
    "fspaths": {"/ais/nvme0n1": "","/ais/nvme1n1": "","/ais/nvme2n1": ""},
    "test_fspaths": {
        "root": "",
        "count": 0,
        "instance": 0
    }
}
```

See also:
* [local playground with two data drives](https://github.com/NVIDIA/aistore/blob/main/deploy/dev/local/README.md)

## Basics

First, some basic facts:

* An AIS cluster is a collection of nodes, the members of the cluster.
* A node can be an AIS proxy (aka gateway) or an AIS target.
* In either case, an HTTP request to read (get) or write (set) a specific node's configuration will have `/v1/daemon` in its URL path.
* Cluster-wide configuration updates are also supported. The corresponding HTTP URL will have `/v1/cluster` in its path.

> `daemon` and `cluster` are the two RESTful resource abstractions supported by the API. Please see [AIS API](http_api.md) for naming conventions, RESTful resources, as well as API reference and details.
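The `/v1/daemon` versus `/v1/cluster` distinction maps directly onto request URLs. The sketch below builds the query-string form of a set-config request, matching the curl examples later in this document; `G` and `T` are the same placeholders for a gateway's and a target's `hostname:port`, and `set_config_url` is a hypothetical helper. It only builds the URL; the request itself must be sent with the PUT method.

```python
from urllib.parse import urlencode


def set_config_url(base, name, value, node=False):
    """Build the query-string form of a set-config request (to be sent as PUT).

    base -- "http://<hostname:port>" of a gateway (cluster scope) or of the
            specific node to update (node scope)
    node -- False: /v1/cluster (all nodes); True: /v1/daemon (this node only)
    """
    resource = "daemon" if node else "cluster"
    query = urlencode({name: value})
    return f"{base.rstrip('/')}/v1/{resource}/set-config?{query}"


if __name__ == "__main__":
    print(set_config_url("http://G", "periodic.stats_time", "1s"))
    print(set_config_url("http://T", "log.level", "1", node=True))
```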
* To get the node's up-to-date configuration, execute:
  ```console
  $ ais show config <daemon-ID>
  ```
  This will display all configuration sections and all the named *knobs*, i.e., configuration variables and their current values.

Most configuration options can be updated either on an individual (target or proxy) daemon or on the entire cluster.
Some configurations are "overridable" and can be configured on a per-daemon basis. Some of these are shown in the table below.

For examples and alternative ways to format configuration-updating requests, please see the [curl examples](#curl-examples) and [CLI examples](#cli-examples) below.

Following is a table summary that contains a *subset* of all *settable* knobs:

> **NOTE (May 2022):** this table is somewhat **outdated** and must be revisited.

| Option name | Overridable | Default value | Description |
|---|---|---|---|
| `ec.data_slices` | No | `2` | Represents the number of fragments an object is broken into (in the range [2, 100]) |
| `ec.disk_only` | No | `false` | If true, EC uses local drives for all operations. If false, EC automatically chooses between memory and local drives depending on the current memory load |
| `ec.enabled` | No | `false` | Enables or disables data protection |
| `ec.objsize_limit` | No | `262144` | Indicates the minimum size of an object, in bytes, that is erasure coded. Smaller objects are replicated |
| `ec.parity_slices` | No | `2` | Represents the number of redundant fragments to provide protection from failures (in the range [2, 32]) |
| `ec.compression` | No | `"never"` | LZ4 compression parameters used when EC sends its fragments and replicas over the network. Values: "never" - disables, "always" - compresses all data, or a set of rules for LZ4, e.g. "ratio=1.2" means enable compression from the start but disable it when the average compression ratio drops below 1.2 to save CPU resources |
| `mirror.burst_buffer` | No | `512` | The maximum queue size for the (pending) objects to be mirrored. When exceeded, the target logs a warning |
| `mirror.copies` | No | `1` | The number of local copies of an object |
| `mirror.enabled` | No | `false` | If true, for every object PUT a target creates an object replica on another mountpath. Later, on an object GET request, the load balancer chooses the mountpath with the lowest disk utilization and reads the object from it |
| `rebalance.dest_retry_time` | No | `2m` | If a target does not respond within this interval while rebalance is running, the target is excluded from the rebalance process |
| `rebalance.enabled` | No | `true` | Enables and disables automatic rebalance after a target receives the updated cluster map. If the (automated rebalancing) option is disabled, you can still use the REST API (`PUT {"action": "start", "value": {"kind": "rebalance"}} v1/cluster`) to initiate cluster-wide rebalancing |
| `rebalance.multiplier` | No | `4` | A tunable that can be adjusted to optimize cluster rebalancing time (advanced usage only) |
| `transport.quiescent` | No | `20s` | Rebalance moves to the next stage or starts the next batch of objects when no objects are received during this time interval |
| `versioning.enabled` | No | `true` | Enables and disables versioning. For the supported 3rd party backends, versioning is _on_ only when it is enabled for (and supported by) the specific backend |
| `versioning.validate_warm_get` | No | `false` | If false, a target returns a requested object immediately if it is cached. If true, a target fetches the object's version (via HEAD request) from the Cloud and, if the received version mismatches the locally cached one, redownloads the object and then returns it to the client |
| `checksum.enable_read_range` | Yes | `false` | See [Supported Checksums and Brief Theory of Operations](checksum.md) |
| `checksum.type` | Yes | `xxhash` | Checksum type. Please see [Supported Checksums and Brief Theory of Operations](checksum.md) |
| `checksum.validate_cold_get` | Yes | `true` | Please see [Supported Checksums and Brief Theory of Operations](checksum.md) |
| `checksum.validate_warm_get` | Yes | `false` | See [Supported Checksums and Brief Theory of Operations](checksum.md) |
| `client.client_long_timeout` | Yes | `30m` | Default _long_ client timeout |
| `client.client_timeout` | Yes | `10s` | Default client timeout |
| `client.list_timeout` | Yes | `2m` | Client list objects timeout |
| `transport.block_size` | Yes | `262144` | Maximum data block size used by LZ4; greater values may increase the compression ratio but require more memory. Value is one of 64KB, 256KB (AIS default), 1MB, and 4MB |
| `disk.disk_util_high_wm` | Yes | `80` | Operations that implement a self-throttling mechanism, e.g. LRU, turn on the maximum throttle if disk utilization is higher than `disk_util_high_wm` |
| `disk.disk_util_low_wm` | Yes | `60` | Operations that implement a self-throttling mechanism, e.g. LRU, do not throttle themselves if disk utilization is below `disk_util_low_wm` |
| `disk.iostat_time_long` | Yes | `2s` | The interval at which disk utilization is checked when disk utilization is below `disk_util_low_wm` |
| `disk.iostat_time_short` | Yes | `100ms` | Used instead of `iostat_time_long` when disk utilization reaches `disk_util_high_wm`. If disk utilization is between `disk_util_low_wm` and `disk_util_high_wm`, a proportional value between `iostat_time_short` and `iostat_time_long` is used |
| `distributed_sort.call_timeout` | Yes | `"10m"` | The maximum time a target waits for another target to respond |
| `distributed_sort.compression` | Yes | `"never"` | LZ4 compression parameters used when dSort sends its shards over the network. Values: "never" - disables, "always" - compresses all data, or a set of rules for LZ4, e.g. "ratio=1.2" means enable compression from the start but disable it when the average compression ratio drops below 1.2 to save CPU resources |
| `distributed_sort.default_max_mem_usage` | Yes | `"80%"` | The maximum amount of memory used by running dSort. Can be set as a percentage of total memory (e.g. `80%`) or as a number of bytes (e.g. `12G`) |
| `distributed_sort.dsorter_mem_threshold` | Yes | `"100GB"` | Minimum free memory threshold that activates a specialized dsorter type, which uses memory in the creation phase; benchmarks show that this type of dsorter behaves better than the general type |
| `distributed_sort.duplicated_records` | Yes | `"ignore"` | What to do when duplicated records are found: "ignore" - ignore and continue, "warn" - notify a user and continue, "abort" - abort dSort operation |
| `distributed_sort.ekm_malformed_line` | Yes | `"abort"` | What to do when the extraction key map notices a malformed line: "ignore" - ignore and continue, "warn" - notify a user and continue, "abort" - abort dSort operation |
| `distributed_sort.ekm_missing_key` | Yes | `"abort"` | What to do when the extraction key map has a missing key: "ignore" - ignore and continue, "warn" - notify a user and continue, "abort" - abort dSort operation |
| `distributed_sort.missing_shards` | Yes | `"ignore"` | What to do when missing shards are detected: "ignore" - ignore and continue, "warn" - notify a user and continue, "abort" - abort dSort operation |
| `fshc.enabled` | Yes | `true` | Enables and disables the filesystem health checker (FSHC) |
| `log.level` | Yes | `3` | Sets the global logging level. The greater the number, the more verbose the log output |
| `lru.capacity_upd_time` | Yes | `10m` | Determines how often AIStore updates filesystem usage |
| `lru.dont_evict_time` | Yes | `120m` | LRU does not evict an object that was accessed less than `dont_evict_time` ago |
| `lru.enabled` | Yes | `true` | Enables and disables the LRU |
| `space.highwm` | Yes | `90` | LRU starts immediately if filesystem usage exceeds this value |
| `space.lowwm` | Yes | `75` | If filesystem usage exceeds `highwm`, LRU tries to evict objects so that filesystem usage drops to `lowwm` |
| `periodic.notif_time` | Yes | `30s` | An interval of time to notify subscribers (IC members) of the status and statistics of a given asynchronous operation (such as Download, Copy Bucket, etc.) |
| `periodic.stats_time` | Yes | `10s` | A *housekeeping* time interval to periodically update and log internal statistics, remove/rotate old logs, check available space (and run LRU *xaction* if need be), etc. |
| `resilver.enabled` | Yes | `true` | Enables and disables automatic resilvering after a mountpath has been added or removed. If the (automated resilvering) option is disabled, you can still use the REST API (`PUT {"action": "start", "value": {"kind": "resilver", "node": targetID}} v1/cluster`) to initiate resilvering |
| `timeout.max_host_busy` | Yes | `20s` | Maximum latency of control-plane operations that may involve receiving new bucket metadata and associated processing |
| `timeout.send_file_time` | Yes | `5m` | Timeout for sending/receiving an object from another target in the same cluster |
| `timeout.transport_idle_term` | Yes | `4s` | Maximum idle time before a long-lived intra-cluster connection is temporarily torn down |

## Startup override

The AIS command line allows overriding configuration at an AIS node's startup.
For example:

```console
$ aisnode -config=/etc/ais.json -local_config=/etc/ais_local.json -role=target -config_custom="client.timeout=13s,log.level=4"
```

As shown above, the CLI option in question is `-config_custom`.
Its value is a comma-separated list of `name=value` pairs.
By default, the config provided in `config_custom` will be persisted on disk.
To make it transient, either add the `-transient=true` flag or add an additional `transient=true` entry:

```console
$ aisnode -config=/etc/ais.json -local_config=/etc/ais_local.json -role=target -transient=true -config_custom="client.timeout=13s, transient=true"
```

Another example: to override the locally configured address of the primary proxy, run:

```console
$ aisnode -config=/etc/ais.json -local_config=/etc/ais_local.json -role=target -config_custom="proxy.primary_url=http://G"
# where G denotes the designated primary's hostname and port.
```

To achieve the same on a temporary basis, add `-transient=true` as follows:

```console
$ aisnode -config=/etc/ais.json -local_config=/etc/ais_local.json -role=target -transient=true -config_custom="proxy.primary_url=http://G"
```

> Please see [AIS command-line](command_line.md) for other command-line options and details.

## Managing mountpaths

* A [mountpath](overview.md#terminology) is a single disk **or** a volume (a RAID) formatted with a local filesystem of choice, **and** a local directory that AIS can fully own and utilize (to store user data and system metadata). Note that any given disk (or RAID) can have (at most) one mountpath (meaning **no disk sharing**) and mountpath directories cannot be nested.
  Further:
  - a mountpath can be temporarily disabled and (re)enabled;
  - a mountpath can also be detached and (re)attached, thus effectively supporting growth and "shrinkage" of local capacity;
  - it is safe to execute the 4 listed operations (enable, disable, attach, detach) at any point during runtime;
  - in a typical deployment, the total number of mountpaths is the product of (number of storage targets) x (number of disks in each target).

The configuration option `fspaths` specifies the list of local mountpath directories. Each configured `fspath` is, simply, a local directory that provides the basis for an AIS `mountpath`.

> Regarding **non-sharing of disks** between mountpaths: for development we make an exception, such that multiple mountpaths are actually allowed to share a disk and coexist within a single filesystem. This is done strictly for development convenience, though.

The AIStore [REST API](http_api.md) makes it possible to list, add, remove, enable, and disable an `fspath` (and, therefore, the corresponding local filesystem) at runtime. The filesystem health checker (FSHC) monitors the health of all local filesystems: a filesystem that "accumulates" I/O errors will be disabled and excluded from AIStore's built-in object distribution. For further details about FSHC, please refer to the [FSHC readme](/health/fshc.md).

## Disabling extended attributes

To make sure that AIStore does not utilize xattrs, configure:
* `checksum.type`=`none`
* `versioning.enabled`=`true`, and
* `write_policy.md`=`never`

for all targets in the AIStore cluster.

Or, simply update the global configuration (to have those cluster-wide defaults later inherited by all newly created buckets).

This can be done via the [common configuration "part"](/deploy/dev/local/aisnode_config.sh) that is later used to deploy the cluster.
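The settings above matter because, by default, AIS keeps object metadata in extended attributes on the mountpath's filesystem. Before deciding to turn that off, you might want to know whether a given mountpath's filesystem accepts user-namespace xattrs at all. The probe below is a minimal, Linux-only sketch (Python's `os.setxattr`/`os.getxattr` are available only on Linux); `supports_user_xattrs` is a hypothetical helper, and the attribute name `user.ais-probe` is an arbitrary placeholder unrelated to how AIS names its own metadata. A `True` result says nothing about the AIS configuration itself.

```python
import errno
import os
import tempfile


def supports_user_xattrs(directory: str) -> bool:
    """Probe whether files under `directory` accept a user.* extended attribute.

    Linux-only: os.setxattr/os.getxattr do not exist on other platforms,
    in which case the probe simply reports False.
    """
    if not hasattr(os, "setxattr"):
        return False
    # Create a throwaway file on the filesystem being tested.
    fd, probe = tempfile.mkstemp(dir=directory, prefix=".xattr-probe-")
    os.close(fd)
    try:
        os.setxattr(probe, "user.ais-probe", b"1")  # arbitrary placeholder name
        return os.getxattr(probe, "user.ais-probe") == b"1"
    except OSError as exc:
        # "Operation not supported" (or not permitted): no usable user xattrs here.
        if exc.errno in (errno.ENOTSUP, errno.EOPNOTSUPP, errno.EPERM):
            return False
        raise
    finally:
        os.remove(probe)


if __name__ == "__main__":
    for mountpath in ["/ais/mp1", "/ais/mp2"]:  # hypothetical mountpaths
        if os.path.isdir(mountpath):
            print(mountpath, supports_user_xattrs(mountpath))
```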
Extended attributes can be disabled on a per-bucket basis. To do this, turn off saving metadata to disks (CLI):

```console
$ ais bucket props ais://mybucket write_policy.md=never
Bucket props successfully updated
"write_policy.md" set to: "never" (was: "")
```

Disable extended attributes only if you need fast and **temporary** storage.
Without xattrs, a node loses its objects after the node reboots.
If extended attributes are disabled globally when deploying a cluster, node IDs are not permanent and a node can change its ID after it restarts.

## Enabling HTTPS

To switch from HTTP to encrypted HTTPS, configure `net.http.use_https`=`true` and modify the `net.http.server_crt` and `net.http.server_key` values so that they point to your OpenSSL certificate and key files, respectively (see [AIStore configuration](/deploy/dev/local/aisnode_config.sh)).

See also:

* [HTTPS from scratch](/docs/getting_started.md)
* [Switching an already deployed cluster between HTTP and HTTPS](/docs/switch_https.md)

## Filesystem Health Checker

The default installation enables the filesystem health checker component called FSHC. FSHC can also be disabled via the "fshc" section of the [configuration](/deploy/dev/local/aisnode_config.sh).

When enabled, FSHC gets notified of every I/O error, upon which it performs extensive checks on the corresponding local filesystem. One possible outcome of this health-checking process is that FSHC disables the faulty filesystem, leaving the target with one filesystem less to distribute incoming data.

Please see the [FSHC readme](/health/fshc.md) for further details.

## Networking

In addition to the user-accessible public network, AIStore will optionally make use of two other networks:

* intra-cluster control
* intra-cluster data

Here's how the corresponding config may look in production (e.g.):

```console
$ ais config node t[nKfooBE] local h... <TAB-TAB>
host_net.hostname   host_net.port_intra_control   host_net.hostname_intra_control
host_net.port       host_net.port_intra_data      host_net.hostname_intra_data

$ ais config node t[nKfooBE] local host_net --json

    "host_net": {
        "hostname": "10.50.56.205",
        "hostname_intra_control": "ais-target-27.ais.svc.cluster.local",
        "hostname_intra_data": "ais-target-27.ais.svc.cluster.local",
        "port": "51081",
        "port_intra_control": "51082",
        "port_intra_data": "51083"
    }
```

Having 3 logical networks is not a "limitation", i.e., not a requirement to specifically have 3. Using the example above, here's a small deployment-time change to run a single one:

```console
"host_net": {
    "hostname": "10.50.56.205",
    "hostname_intra_control": "ais-target-27.ais.svc.cluster.local",
    "hostname_intra_data": "ais-target-27.ais.svc.cluster.local",
    "port": "51081",
    "port_intra_control": "51081",   # <<<<<< notice the same port
    "port_intra_data": "51081"       # <<<<<< ditto
}
```

Ideally, though, production clusters are deployed over 3 physically different and isolated networks, whereby intense data traffic, for instance, does not introduce additional latency for the control network, etc.

Separately, there's a **multi-homing** capability motivated by the fact that today's server systems may often have, say, two 50Gbps network adapters. To deliver the entire 100Gbps _without_ LACP trunking and (static) teaming, we could simply have something like:

```console
"host_net": {
    "hostname": "10.50.56.205, 10.50.56.206",
    "hostname_intra_control": "ais-target-27.ais.svc.cluster.local",
    "hostname_intra_data": "ais-target-27.ais.svc.cluster.local",
    "port": "51081",
    "port_intra_control": "51082",
    "port_intra_data": "51083"
}
```

No other changes.
Just add the second NIC (the second IPv4 address, `10.50.56.206`, above), and that's all.

## Reverse proxy

An AIStore gateway can act as a reverse proxy vis-à-vis AIStore storage targets. This functionality is limited to GET requests only and must be used with caution and consideration. The related [configuration variable](/deploy/dev/local/aisnode_config.sh) is called `rproxy`; see sub-section `http` of the section `net`. For further details, please refer to [this readme](rproxy.md).

## Curl examples

The following assumes that `G` and `T` are the (hostname:port) of one of the deployed gateways (in a given AIS cluster) and one of the targets, respectively.

### Cluster-wide operation (all nodes)

* Set the stats logging interval to 1 second

```console
$ curl -i -X PUT -H 'Content-Type: application/json' -d '{"action": "set-config","name": "periodic.stats_time", "value": "1s"}' 'http://G/v1/cluster'
```

or, same:

```console
$ curl -i -X PUT 'http://G/v1/cluster/set-config?periodic.stats_time=1s'
```

> Notice the two alternative ways to form the requests.
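For scripts, the first (JSON-body) form translates directly to Python's standard library. The sketch below builds, but does not send, the same request as the first curl command: a PUT to `/v1/cluster` (or `/v1/daemon` for a single node) with `Content-Type: application/json` and an `action`/`name`/`value` body. `G` and `T` remain placeholders and `set_config_request` is a hypothetical helper; values are passed as strings, as in the curl examples.

```python
import json
from urllib.request import Request


def set_config_request(base, name, value, node=False):
    """Build, without sending, the JSON-body form of a set-config PUT."""
    resource = "daemon" if node else "cluster"
    body = {"action": "set-config", "name": name, "value": value}
    return Request(
        f"{base.rstrip('/')}/v1/{resource}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


if __name__ == "__main__":
    req = set_config_request("http://G", "periodic.stats_time", "1s")
    print(req.get_method(), req.full_url, req.data.decode())
    # Against a live gateway, urllib.request.urlopen(req) would send it.
```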
### Cluster-wide operation (all nodes)

* Set the stats logging interval to 2 minutes

```console
$ curl -i -X PUT -H 'Content-Type: application/json' -d '{"action": "set-config","name": "periodic.stats_time", "value": "2m"}' 'http://G/v1/cluster'
```

### Cluster-wide operation (all nodes)

* Set the default number of n-way copies to 4 (can still be redefined on a per-bucket basis)

```console
$ curl -i -X PUT -H 'Content-Type: application/json' -d '{"action": "set-config","name": "mirror.copies", "value": "4"}' 'http://G/v1/cluster'

# or, same using CLI:
$ ais config cluster mirror.copies 4
```

### Single-node operation (single node)

* Set log level = 1

```console
$ curl -i -X PUT -H 'Content-Type: application/json' -d '{"action": "set-config","name": "log.level", "value": "1"}' 'http://T/v1/daemon'
# or, same:
$ curl -i -X PUT 'http://T/v1/daemon/set-config?log.level=1'

# or, same using CLI (assuming the node in question is t[tZktGpbM]):
$ ais config node t[tZktGpbM] log.level 1
```

## CLI examples

[AIS CLI](/docs/cli.md) is an integrated management-and-monitoring command-line tool. The following CLI command sequence first shows the current value of `client.list_timeout`, then changes it from 2 minutes to 5 minutes, and finally displays the modified value:

```console
$ ais show config p[rZTp8080] --type all --json | jq '.client.list_timeout'
"2m"

$ ais config cluster client.list_timeout=5m
Config has been updated successfully.

$ ais show config p[rZTp8080] --type all --json | jq '.client.list_timeout'
"5m"
```

The example above demonstrates a cluster-wide configuration update; note, however, that single-node updates are also supported.
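The `jq` filter above simply walks the JSON output by section name and then by knob name. Where `jq` isn't available, the same lookup takes a few lines of Python. This is a minimal sketch with hypothetical helper names; it assumes, as the `jq` path implies, that `--type all --json` prints a single JSON object nested by section. Only `read_knob` invokes the CLI, and it needs `ais` on `PATH` and a reachable cluster.

```python
import json
import subprocess


def get_knob(config_json, dotted):
    """Walk parsed JSON by a dotted path, e.g. "timeout.startup_time"."""
    node = json.loads(config_json)
    for part in dotted.split("."):
        node = node[part]  # raises KeyError if the section or knob is absent
    return node


def read_knob(node_id, dotted):
    """Run `ais show config <node> --type all --json` and extract one knob."""
    out = subprocess.run(
        ["ais", "show", "config", node_id, "--type", "all", "--json"],
        capture_output=True, text=True, check=True,
    ).stdout
    return get_knob(out, dotted)
```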
### Cluster-wide operation (all nodes)

* Set `periodic.stats_time` = 1 minute, `disk.iostat_time_long` = 4 seconds

```console
$ ais config cluster periodic.stats_time=1m disk.iostat_time_long=4s
```

### Single-node operation (single node)

AIS configuration includes a section called `disk`. The `disk` section, in turn, contains several knobs: one of those knobs is `disk.iostat_time_long`, another is `disk.disk_util_low_wm`. To update one or both of those named variables on all or one of the clustered nodes, you could:

* Set `disk.iostat_time_long` = 3 seconds, `disk.disk_util_low_wm` = 40 percent on daemon with ID `target1`

```console
$ ais config node target1 disk.iostat_time_long=3s disk.disk_util_low_wm=40
```
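Because each `name=value` pair is a single CLI argument, multi-knob updates like these are easy to generate from a script. The sketch below (`config_argv` is a hypothetical helper, not part of AIS) builds the argument vector for both the cluster-wide and the single-node form, serializing structured values as compact JSON so they match the single-quoted JSON form described in the CLI section. `shlex.join` is used only to display a copy-pasteable command; passing the list to `subprocess.run` directly avoids shell quoting altogether.

```python
import json
import shlex


def config_argv(knobs: dict, node: str = "") -> list:
    """Build argv for `ais config cluster ...` or, if `node` is given, `ais config node <node> ...`.

    Structured (dict/list) values are serialized as compact JSON; others via str().
    """
    argv = ["ais", "config"] + (["node", node] if node else ["cluster"])
    for name, value in knobs.items():
        if isinstance(value, (dict, list)):
            value = json.dumps(value, separators=(",", ":"))
        argv.append(f"{name}={value}")
    return argv


if __name__ == "__main__":
    argv = config_argv({"disk.iostat_time_long": "3s", "disk.disk_util_low_wm": 40}, node="target1")
    # Shell-quoted form for display; subprocess.run(argv, check=True) runs it without a shell.
    print(shlex.join(argv))
```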