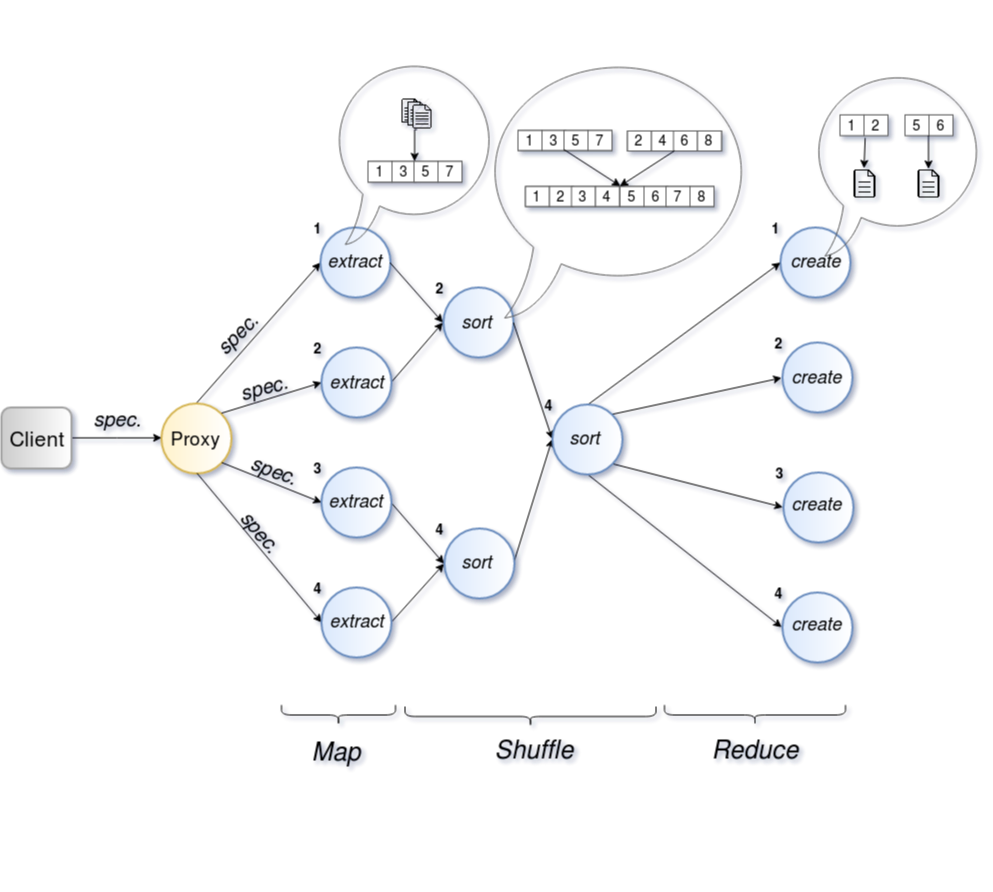

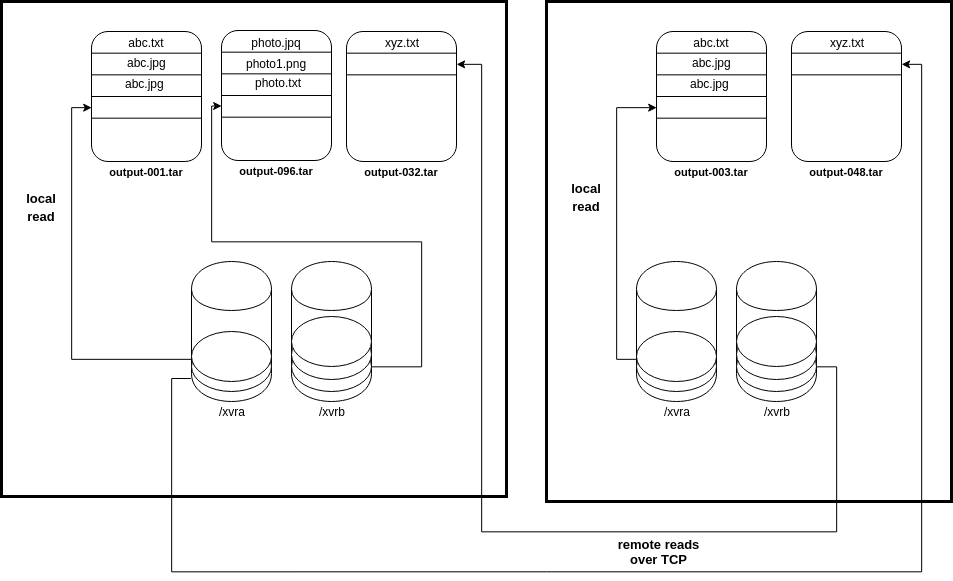

github.com/NVIDIA/aistore@v1.3.23-0.20240517131212-7df6609be51d/docs/dsort.md (about) 1 --- 2 layout: post 3 title: DSORT 4 permalink: /docs/dsort 5 redirect_from: 6 - /dsort.md/ 7 - /docs/dsort.md/ 8 --- 9 10 Dsort is extension for AIStore. It was designed to perform map-reduce like 11 operations on terabytes and petabytes of AI datasets. As a part of the whole 12 system, Dsort is capable of taking advantage of objects stored on AIStore without 13 much overhead. 14 15 AI datasets are usually stored in tarballs, zip objects, msgpacks or tf-records. 16 Focusing only on these types of files and specific workload allows us to tweak 17 performance without too much tradeoffs. 18 19 ## Capabilities 20 21 Example of map-reduce like operation which can be performed on dSort is 22 shuffling (in particular sorting) all objects across all shards by a given 23 algorithm. 24 25  26 27 We allow for output shards to be different size than input shards, thus a user 28 is also able to reshard the objects. This means that output shards can contain 29 more or less objects inside the shard than in the input shard, depending on 30 requested sizes of the shards. 31 32 The result of such an operation would mean that we could get output shards with 33 different sizes with objects that are shuffled across all the shards, which 34 would then be ready to be processed by a machine learning script/model. 35 36 ## Terms 37 38 **Object** - single piece of data. In tarballs and zip files, an *object* is 39 single file contained in this type of archives. In msgpack (assuming that 40 msgpack file is stream of dictionaries) *object* is single dictionary. 41 42 **Shard** - collection of objects. In tarballs and zip files, a *shard* is whole 43 archive. In msgpack is the whole msgpack file. 44 45 We distinguish two kinds of shards: input and output. Input shards, as the name 46 says, it is given as an input for the dSort operation. Output on the other hand 47 is something that is the result of the operation. Output shards can differ from 48 input shards in many ways: size, number of objects, names etc. 49 50 Shards are assumed to be already on AIStore cluster or somewhere in a remote bucket 51 so that AIStore can access them. Output shards will always be placed in the same 52 bucket and directory as the input shards - accessing them after completed dSort, 53 is the same as input shards but of course with different names. 54 55 **Record** - abstracts multiple objects with same key name into single 56 structure. Records are inseparable which means if they come from single shard 57 they will also be in output shard together. 58 59 Eg. if we have a tarball which contains files named: `file1.txt`, `file1.png`, 60 `file2.png`, then we would have 2 *records*: one for `file1` and one for 61 `file2`. 62 63 **Extraction phase** - dSort has multiple phases in which it does the whole 64 operation. The first of them is **extraction**. In this phase, dSort is reading 65 input shards and looks inside them to get to the objects and metadata. Objects 66 and their metadata are then extracted to either disk or memory so that dSort 67 won't need another pass of the whole data set again. This way Dsort can create 68 **Records** which are then used for the whole operation as the main source of 69 information (like location of the objects, sizes, names etc.). Extraction phase 70 is very critical because it does I/O operations. To make the following phases 71 faster, we added support for extraction to memory so that requests for the given 72 objects will be served from RAM instead of disk. The user can specify how much 73 memory can be used for the extraction phase, either in raw numbers like `1GB` or 74 percentages `60%`. 75 76 As mentioned this operation does a lot of I/O operations. To allow the user to 77 have better control over the disk usage, we have provided a concurrency 78 parameter which limits the number of shards that can be read at the same time. 79 80 **Sorting phase** - in this phase, the metadata is processed and aggregated on a 81 single machine. It can be processed in various ways: sorting, shuffling, 82 resizing etc. This is usually the fastest phase but still uses a lot of CPU 83 processing power, to process the metadata. 84 85 The merging of metadata is performed in multiple steps to distribute the load 86 across machines. 87 88 **Creation phase** - it is last phase of dSort where output shards are created. 89 Like the extraction phase, the creation phase is bottlenecked by disk and I/O. 90 Additionally, this phase may use a lot of bandwidth because objects may have 91 been extracted on different machine. 92 93 Similarly to the extraction phase we expose a concurrency parameter for the 94 user, to limit number of shards created simultaneously. 95 96 Shards are created from local records or remote records. Local records are 97 records which were extracted on the machine where the shard is being created, 98 and similarly, remote records are records which were extracted on different 99 machines. This means that a single machine will typically have a lot of 100 read/write operations on the disk coming from either local or remote requests. 101 This is why tweaking the concurrency parameter is really important and can have 102 great impact on performance. We strongly advise to make couple of tests on small 103 load to see what value of this parameter will result in the best performance. 104 Eg. tests shown that on setup: 10x targets, 10x disks on each target, the best 105 concurrency value is 60. 106 107 The other thing that user needs to remember is that when running multiple dSort 108 operations at once, it might be better to set the concurrency parameter to 109 something lower since both of the operation may use disk at the same time. A 110 higher concurrency parameter can result in performance degradation. 111 112  113 114 **Metrics** - user can monitor whole operation thanks to metrics. Metrics 115 provide an overview of what is happening in the cluster, for example: which 116 phase is currently running, how much time has been spent on each phase, etc. 117 There are many metrics (numbers and stats) recorded for each of the phases. 118 119 ## Metrics 120 121 Dsort allows users to fetch the statistics of a given job (either 122 started/running or already finished). Each phase has different, specific metrics 123 which can be monitored. Description of metrics returned for *single node*: 124 125 * `local_extraction` 126 * `started_time` - timestamp when the local extraction has started. 127 * `end_time` - timestamp when the local extraction has finished. 128 * `elapsed` - duration (in seconds) of the local extraction phase. 129 * `running` - informs if the phase is currently running. 130 * `finished` - informs if the phase has finished. 131 * `total_count` - static number of shards which needs to be scanned - informs what is the expected number of input shards. 132 * `extracted_count` - number of shards extracted/processed by given node. This number can differ from node to node since shards may not be equally distributed. 133 * `extracted_size` - size of extracted/processed shards by given node. 134 * `extracted_record_count` - number of records extracted (in total) from all processed shards. 135 * `extracted_to_disk_count` - number of records extracted (in total) and saved to the disk (there was not enough space to save them in memory). 136 * `extracted_to_disk_size` - size of extracted records which were saved to the disk. 137 * `single_shard_stats` - statistics about single shard processing. 138 * `total_ms` - total number of milliseconds spent extracting all shards. 139 * `count` - number of extracted shards. 140 * `min_ms` - shortest duration of extracting a shard (in milliseconds). 141 * `max_ms` - longest duration of extracting a shard (in milliseconds). 142 * `avg_ms` - average duration of extracting a shard (in milliseconds). 143 * `min_throughput` - minimum throughput of extracting a shard (in bytes per second). 144 * `max_throughput` - maximum throughput of extracting a shard (in bytes per second). 145 * `avg_throughput` - average throughput of extracting a shard (in bytes per second). 146 * `meta_sorting` 147 * `started_time` - timestamp when the meta sorting has started. 148 * `end_time` - timestamp when the meta sorting has finished. 149 * `elapsed` - duration (in seconds) of the meta sorting phase. 150 * `running` - informs if the phase is currently running. 151 * `finished` - informs if the phase has finished. 152 * `sent_stats` - statistics about sending records to other nodes. 153 * `total_ms` - total number of milliseconds spent on sending the records. 154 * `count` - number of records sent to other targets. 155 * `min_ms` - shortest duration of sending the records (in milliseconds). 156 * `max_ms` - longest duration of sending the records (in milliseconds). 157 * `avg_ms` - average duration of sending the records (in milliseconds). 158 * `recv_stats` - statistics about receiving records from other nodes. 159 * `total_ms` - total number of milliseconds spent on receiving the records from nodes. 160 * `count` - number of records received from other targets. 161 * `min_ms` - shortest duration of receiving the records (in milliseconds). 162 * `max_ms` - longest duration of receiving the records (in milliseconds). 163 * `avg_ms` - average duration of receiving the records (in milliseconds). 164 * `shard_creation` 165 * `started_time` - timestamp when the shard creation has started. 166 * `end_time` - timestamp when the shard creation has finished. 167 * `elapsed` - duration (in seconds) of the shard creation phase. 168 * `running` - informs if the phase is currently running. 169 * `finished` - informs if the phase has finished. 170 * `to_create` - number of shards which needs to be created on given node. 171 * `created_count` - number of shards already created. 172 * `moved_shard_count` - number of shards moved from the node to another one (it sometimes makes sense to create shards locally and send it via network). 173 * `req_stats` - statistics about sending requests for records. 174 * `total_ms` - total number of milliseconds spent on sending requests for records from other nodes. 175 * `count` - number of requested records. 176 * `min_ms` - shortest duration of sending a request (in milliseconds). 177 * `max_ms` - longest duration of sending a request (in milliseconds). 178 * `avg_ms` - average duration of sending a request (in milliseconds). 179 * `resp_stats` - statistics about waiting for the records. 180 * `total_ms` - total number of milliseconds spent on waiting for the records from other nodes. 181 * `count` - number of records received from other nodes. 182 * `min_ms` - shortest duration of waiting for a record (in milliseconds). 183 * `max_ms` - longest duration of waiting for a record (in milliseconds). 184 * `avg_ms` - average duration of waiting for a record (in milliseconds). 185 * `local_send_stats` - statistics about sending record content to other target. 186 * `total_ms` - total number of milliseconds spent on writing the record content to the wire. 187 * `count` - number of records received from other nodes. 188 * `min_ms` - shortest duration of waiting for a record content to written into the wire (in milliseconds). 189 * `max_ms` - longest duration of waiting for a record content to written into the wire (in milliseconds). 190 * `avg_ms` - average duration of waiting for a record content to written into the wire (in milliseconds). 191 * `min_throughput` - minimum throughput of writing record content into the wire (in bytes per second). 192 * `max_throughput` - maximum throughput of writing record content into the wire (in bytes per second). 193 * `avg_throughput` - average throughput of writing record content into the wire (in bytes per second). 194 * `local_recv_stats` - statistics receiving record content from other target. 195 * `total_ms` - total number of milliseconds spent on receiving the record content from the wire. 196 * `count` - number of records received from other nodes. 197 * `min_ms` - shortest duration of waiting for a record content to be read from the wire (in milliseconds). 198 * `max_ms` - longest duration of waiting for a record content to be read from the wire (in milliseconds). 199 * `avg_ms` - average duration of waiting for a record content to be read from the wire (in milliseconds). 200 * `min_throughput` - minimum throughput of reading record content from the wire (in bytes per second). 201 * `max_throughput` - maximum throughput of reading record content from the wire (in bytes per second). 202 * `avg_throughput` - average throughput of reading record content from the wire (in bytes per second). 203 * `single_shard_stats` - statistics about single shard creation. 204 * `total_ms` - total number of milliseconds spent creating all shards. 205 * `count` - number of created shards. 206 * `min_ms` - shortest duration of creating a shard (in milliseconds). 207 * `max_ms` - longest duration of creating a shard (in milliseconds). 208 * `avg_ms` - average duration of creating a shard (in milliseconds). 209 * `min_throughput` - minimum throughput of creating a shard (in bytes per second). 210 * `max_throughput` - maximum throughput of creating a shard (in bytes per second). 211 * `avg_throughput` - average throughput of creating a shard (in bytes per second). 212 * `aborted` - informs if the job has been aborted. 213 * `archived` - informs if the job has finished and was archived to journal. 214 * `description` - description of the job. 215 216 Example output for single node: 217 ```json 218 { 219 "local_extraction": { 220 "started_time": "2019-06-17T12:27:25.102691781+02:00", 221 "end_time": "2019-06-17T12:28:04.982017787+02:00", 222 "elapsed": 39, 223 "running": false, 224 "finished": true, 225 "total_count": 1000, 226 "extracted_count": 182, 227 "extracted_size": 4771020800, 228 "extracted_record_count": 9100, 229 "extracted_to_disk_count": 4, 230 "extracted_to_disk_size": 104857600, 231 "single_shard_stats": { 232 "total_ms": 251417, 233 "count": 182, 234 "min_ms": 30, 235 "max_ms": 2696, 236 "avg_ms": 1381, 237 "min_throughput": 9721724, 238 "max_throughput": 847903603, 239 "avg_throughput": 50169799 240 } 241 }, 242 "meta_sorting": { 243 "started_time": "2019-06-17T12:28:04.982041542+02:00", 244 "end_time": "2019-06-17T12:28:05.336979995+02:00", 245 "elapsed": 0, 246 "running": false, 247 "finished": true, 248 "sent_stats": { 249 "total_ms": 99, 250 "count": 1, 251 "min_ms": 99, 252 "max_ms": 99, 253 "avg_ms": 99 254 }, 255 "recv_stats": { 256 "total_ms": 246, 257 "count": 1, 258 "min_ms": 246, 259 "max_ms": 246, 260 "avg_ms": 246 261 } 262 }, 263 "shard_creation": { 264 "started_time": "2019-06-17T12:28:05.725630555+02:00", 265 "end_time": "2019-06-17T12:29:19.108651924+02:00", 266 "elapsed": 73, 267 "running": false, 268 "finished": true, 269 "to_create": 9988, 270 "created_count": 9988, 271 "moved_shard_count": 0, 272 "req_stats": { 273 "total_ms": 160, 274 "count": 8190, 275 "min_ms": 0, 276 "max_ms": 20, 277 "avg_ms": 0 278 }, 279 "resp_stats": { 280 "total_ms": 4323665, 281 "count": 8190, 282 "min_ms": 0, 283 "max_ms": 6829, 284 "avg_ms": 527 285 }, 286 "single_shard_stats": { 287 "total_ms": 4487385, 288 "count": 9988, 289 "min_ms": 0, 290 "max_ms": 6829, 291 "avg_ms": 449, 292 "min_throughput": 76989, 293 "max_throughput": 709852568, 294 "avg_throughput": 98584381 295 } 296 }, 297 "aborted": false, 298 "archived": true 299 } 300 ``` 301 302 ## API 303 304 You can use the [AIS's CLI](/docs/cli.md) to start, abort, retrieve metrics or list dSort jobs. 305 It is also possible generate random dataset to test dSort's capabilities. 306 307 ## Config 308 309 | Config value | Default value | Description | 310 |---|---|---| 311 | `duplicated_records` | "ignore" | what to do when duplicated records are found: "ignore" - ignore and continue, "warn" - notify a user and continue, "abort" - abort dSort operation | 312 | `missing_shards` | "ignore" | what to do when missing shards are detected: "ignore" - ignore and continue, "warn" - notify a user and continue, "abort" - abort dSort operation | 313 | `ekm_malformed_line` | "abort" | what to do when extraction key map notices a malformed line: "ignore" - ignore and continue, "warn" - notify a user and continue, "abort" - abort dSort operation | 314 | `ekm_missing_key` | "abort" | what to do when extraction key map have a missing key: "ignore" - ignore and continue, "warn" - notify a user and continue, "abort" - abort dSort operation | 315 | `call_timeout` | "10m" | a maximum time a target waits for another target to respond | 316 | `default_max_mem_usage` | "80%" | a maximum amount of memory used by running dSort. Can be set as a percent of total memory(e.g `80%`) or as the number of bytes(e.g, `12G`) | 317 | `dsorter_mem_threshold` | "100GB" | minimum free memory threshold which will activate specialized dsorter type which uses memory in creation phase - benchmarks shows that this type of dsorter behaves better than general type | 318 | `compression` | "never" | LZ4 compression parameters used when dSort sends its shards over network. Values: "never" - disables, "always" - compress all data, or a set of rules for LZ4, e.g "ratio=1.2" means enable compression from the start but disable when average compression ratio drops below 1.2 to save CPU resources | 319 320 321 To clear what these values means we have couple examples to showcase certain scenarios. 322 323 ### Examples 324 325 #### `default_max_mem_usage` 326 327 Lets assume that we have `N` targets, where each target has `Y`GB of RAM and `default_max_mem_usage` is set to `80%`. 328 So dSort can allocate memory until the number of memory used (total in the system) is below `80% * Y`GB. 329 What this means is that regardless of how much other subsystems or programs working at the same instance use memory, the dSort will never allocate memory if the watermark is reached. 330 For example if some other program already allocated `90% * Y`GB memory (only `10%` is left), then dSort will not allocate any memory since it will notice that the watermark is already exceeded. 331 332 #### `dsorter_mem_threshold` 333 334 Dsort has implemented for now 2 different types of so called "dsorter": `dsorter_mem` and `dsorter_general`. 335 These two implementations use memory, disks and network a little bit differently and are designated to different use cases. 336 337 By default `dsorter_general` is used as it was implemented for all types of workloads. 338 It is allocates memory during the first phase of dSort and uses it in the last phase. 339 340 `dsorter_mem` was implemented in mind to speed up the creation phase which is usually a biggest bottleneck. 341 It has specific way of building shards in memory and then persisting them do the disk. 342 This makes this dsorter memory oriented and it is required for it to have enough memory to build the shards. 343 344 To determine which dsorter to use we have introduced a heuristic which tries to determine when it is best to use `dsorter_mem` instead of `dsorter_general`. 345 Config value `dsorter_mem_threshold` sets the threshold above which the `dsorter_mem` will be used. 346 If **all** targets have max memory usage (see `default_max_mem_usage`) above the `dsorter_mem_threshold` then `dsorter_mem` is chosen for the dSort job. 347 For example if each target has `Y`GB of RAM, `default_max_mem_usage` is set to `80%` and `dsorter_mem_threshold` is set to `100GB` then as long as on all targets `80% * Y > 100GB` then `dsorter_mem` will be used.