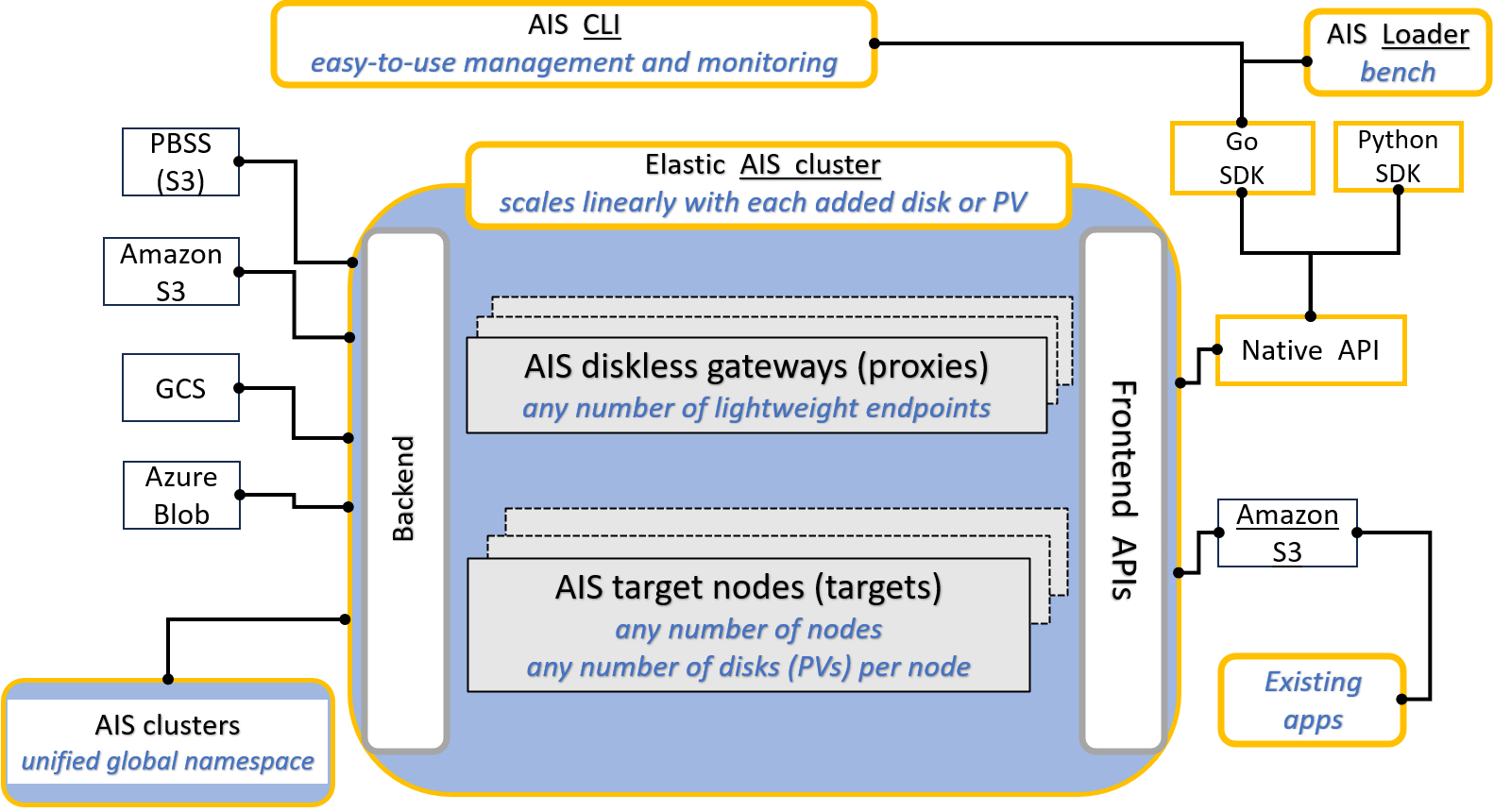

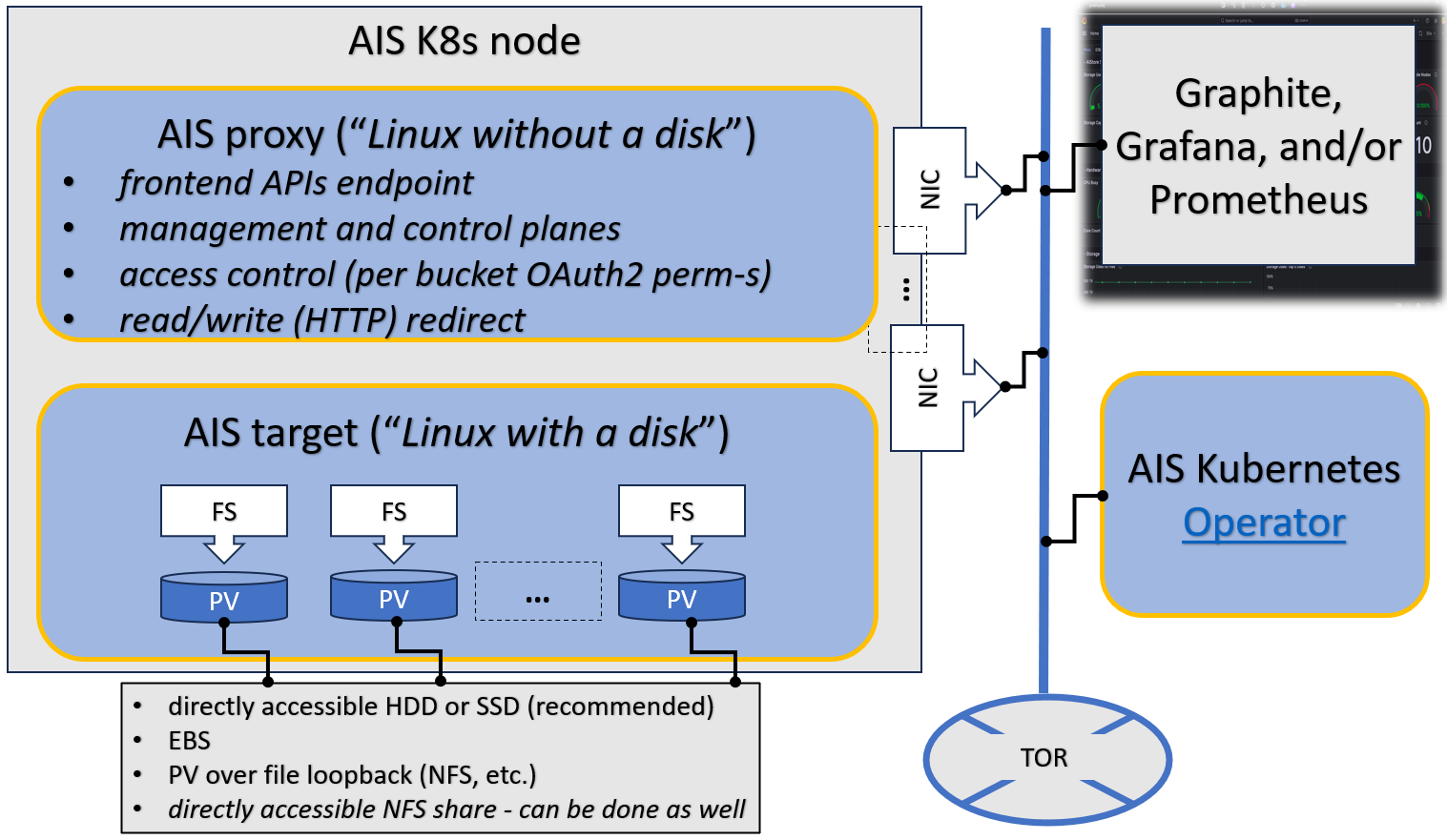

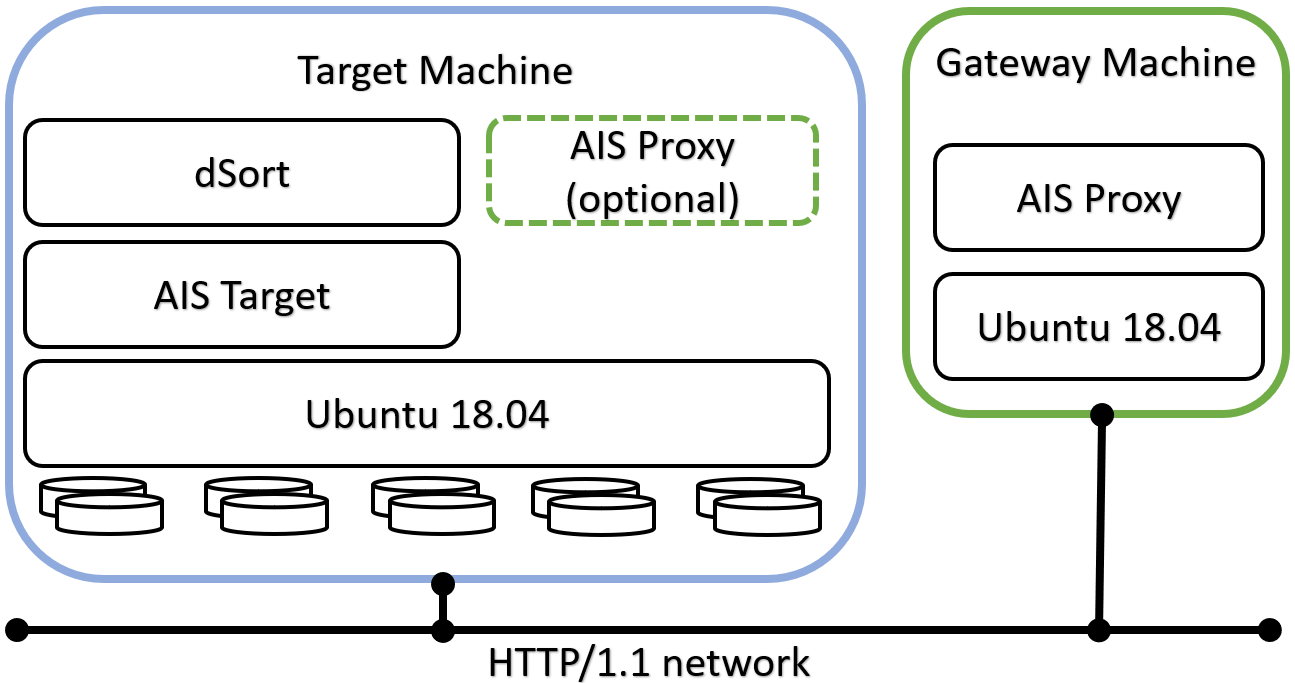

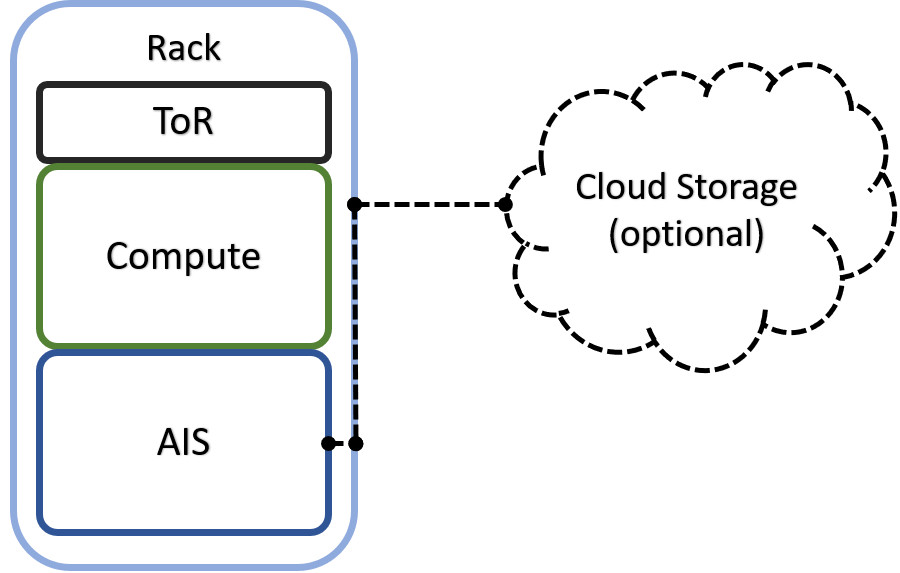

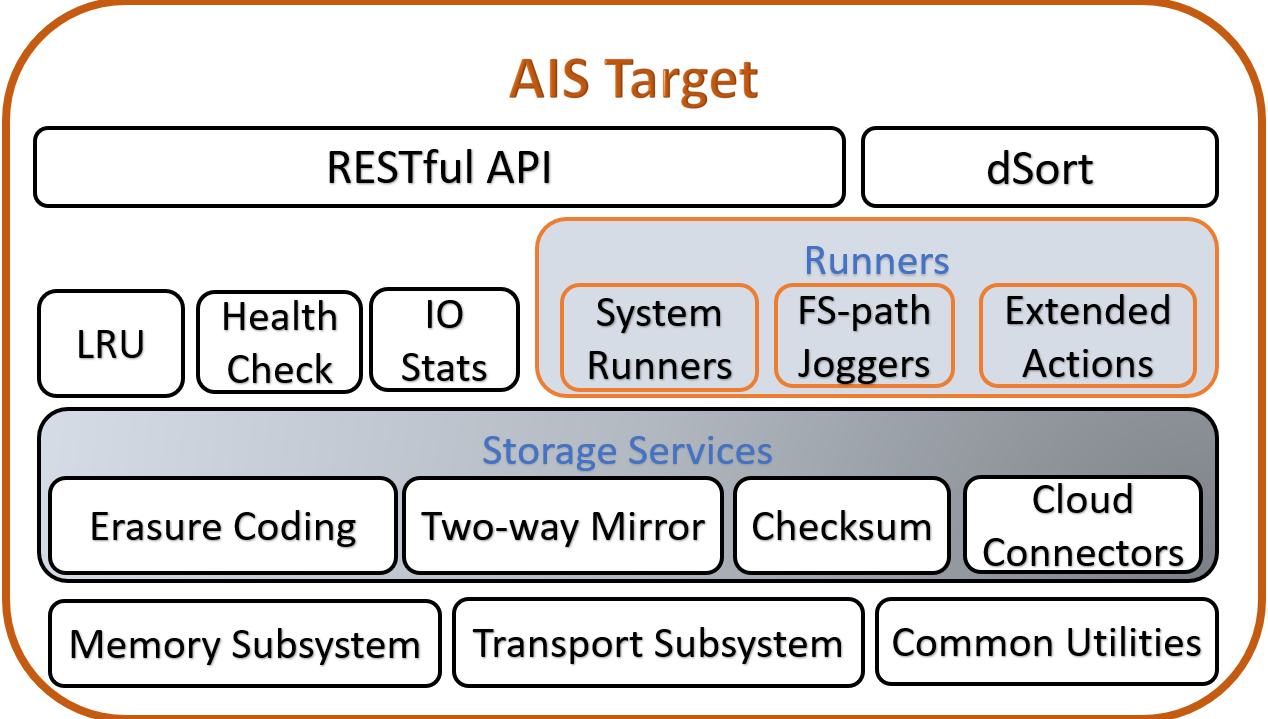

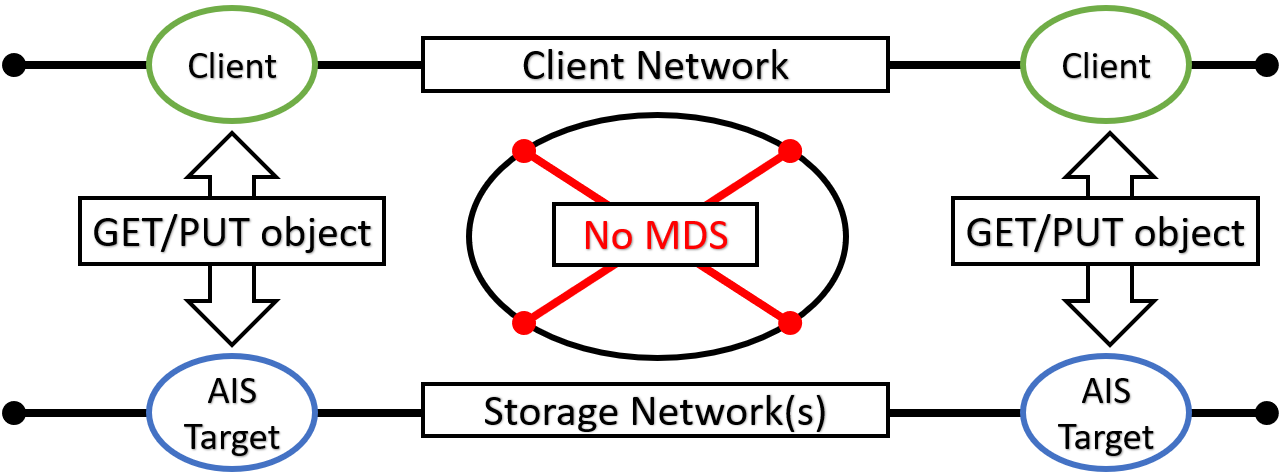

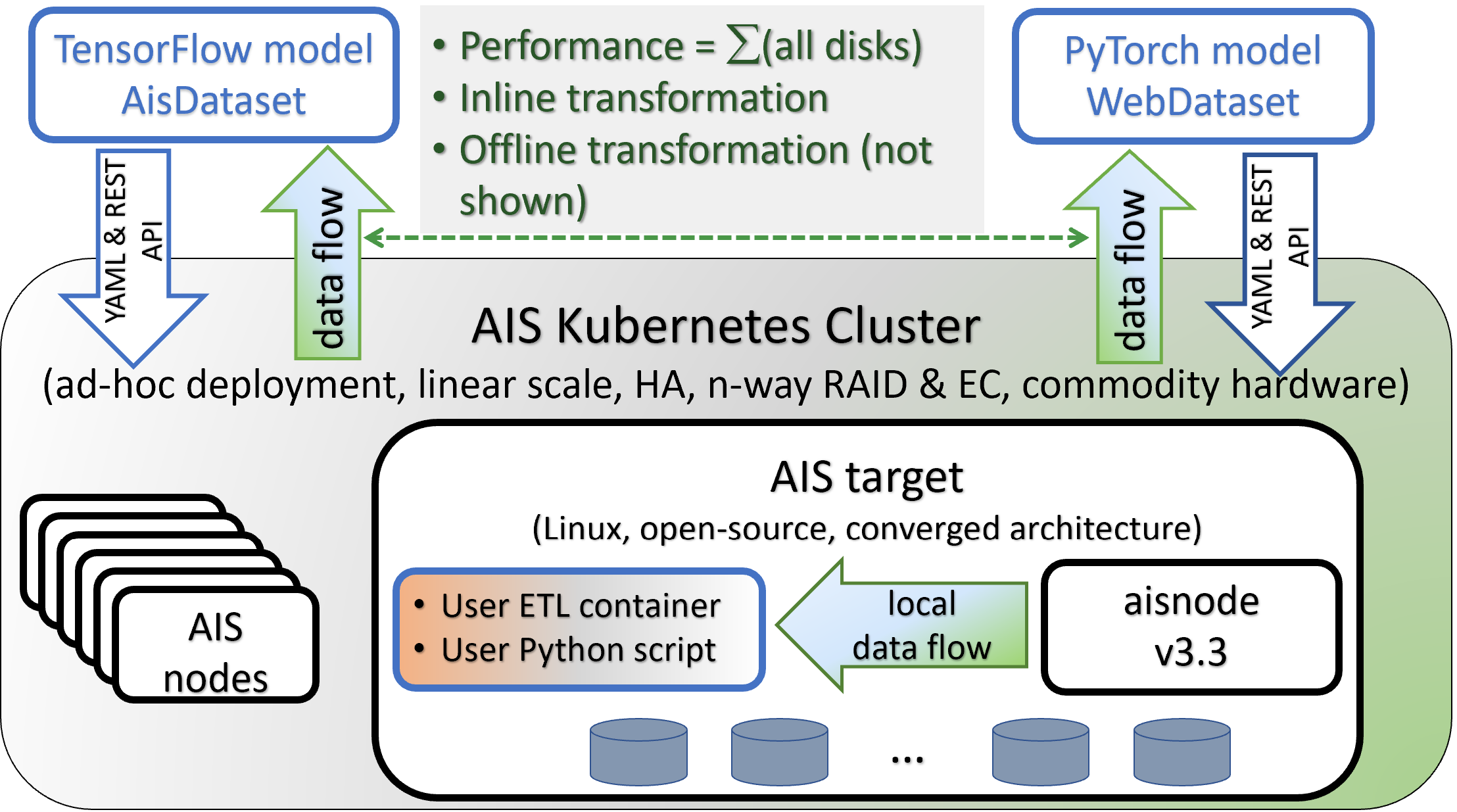

## Introduction

Training deep learning (DL) models on petascale datasets is essential for achieving competitive and state-of-the-art performance in applications such as speech, video analytics, and object recognition. However, existing distributed filesystems were not developed for the access patterns and usability requirements of DL jobs.

In this [white paper](https://arxiv.org/abs/2001.01858) we describe AIStore (AIS) and its components, and then compare system performance experimentally using image classification workloads and storing training data on a variety of backends.

See also:

* [blog](https://aiatscale.org/blog)
* [white paper](https://arxiv.org/abs/2001.01858)
* [at-a-glance poster](https://storagetarget.files.wordpress.com/2019/12/deep-learning-large-scale-phys-poster-1.pdf)

The rest of this document is structured as follows:

- [At a glance](#at-a-glance)
- [Terminology](#terminology)
- [Design Philosophy](#design-philosophy)
- [Key Concepts and Diagrams](#key-concepts-and-diagrams)
- [Traffic Patterns](#traffic-patterns)
- [Read-after-write consistency](#read-after-write-consistency)
- [Open Format](#open-format)
- [Existing Datasets](#existing-datasets)
- [Data Protection](#data-protection)
  - [Erasure Coding vs IO Performance](#erasure-coding-vs-io-performance)
- [Scale-Out](#scale-out)
- [Networking](#networking)
- [HA](#ha)
- [Fast Tier](#fast-tier)
- [Other Services](#other-services)
- [dSort](#dsort)
- [CLI](#cli)
- [ETL](#etl)
- [_No limitations_ principle](#no-limitations-principle)

## At a glance

The following is a high-level **block diagram** with an emphasis on supported frontend and backend APIs, and the capability to scale out horizontally.
The picture also tries to make the point that AIS aggregates an arbitrary number of storage servers ("targets") with local or locally accessible drives, whereby each drive is formatted with a local filesystem of choice (e.g., xfs or zfs).

In any aistore cluster, there are **two kinds of nodes**: proxies (a.k.a. gateways) and storage nodes (targets):

Proxies provide access points ("endpoints") for the frontend API. At any point in time there is a single **primary** proxy that also controls versioning and distribution of the current cluster map. When and if the primary fails, another proxy is majority-elected to perform the (primary) role.

All user data is equally distributed (or [balanced](/docs/rebalance.md)) across all storage nodes ("targets"). This, combined with zero overhead for I/O routing and metadata processing, provides for linear scaling with no limitation on the total number of aggregated storage drives.

## Terminology

* [Backend Provider](providers.md) - an abstraction, and simultaneously an API-supported option, that makes it possible to distinguish between "remote" and "local" buckets with respect to a given AIS cluster.

* [Unified Global Namespace](providers.md) - AIS clusters *attached* to each other effectively form a super-cluster providing a unified global namespace, whereby all buckets and all objects of all included clusters are uniformly accessible via any and all individual access points (of those clusters).

* [Mountpath](configuration.md) - a single disk **or** a volume (a RAID) formatted with a local filesystem of choice, **and** a local directory that AIS can fully own and utilize (to store user data and system metadata). Note that any given disk (or RAID) can have (at most) one mountpath, meaning **no disk sharing**. Secondly, mountpath directories cannot be nested.
  Further:
  - a mountpath can be temporarily disabled and (re)enabled;
  - a mountpath can also be detached and (re)attached, thus effectively supporting growth and "shrinkage" of local capacity;
  - it is safe to execute the 4 listed operations (enable, disable, attach, detach) at any point during runtime;
  - in a typical deployment, the total number of mountpaths is the product of (number of storage targets) x (number of disks in each target).

* [Xaction](/xact/README.md) - asynchronous batch operations that may take many seconds (minutes, hours, etc.) to execute are called *eXtended actions*, or simply *xactions*. The CLI and the [CLI documentation](/docs/cli) refer to such operations as **jobs**, the more familiar term, and the two can be used interchangeably. Examples include erasure coding or n-way mirroring a dataset, resharding and reshuffling a dataset, archiving multiple objects, copying buckets, and many more. All [eXtended actions](/xact/README.md) support a generic [API](/api/xaction.go) and [CLI](/docs/cli/job.md#show-job-statistics) to show both common counters (byte and object numbers) and operation-specific extended statistics.

## Design Philosophy

It is often better to let applications control whether and how stored content is split into chunks. That's the simple truth that holds, in particular, for AI datasets, which are often pre-sharded, with the content and boundaries of those shards based on application-specific optimization criteria. More precisely, the datasets could be pre-sharded, post-sharded, and otherwise transformed to facilitate training, inference, and simulation by the AI apps.
The corollary of this statement is two-fold:

- Breaking objects into pieces (often called chunks but also slices, segments, fragments, and blocks) and the related functionality does not necessarily belong to an AI-optimized storage system per se;
- Instead of chunking the objects and then reassembling them with the help of cluster-wide metadata (that must be maintained with extreme care), the storage system could alternatively focus on providing assistance to simplify and accelerate dataset transformations.

Notice that the same exact approach works for the other end of the spectrum: the proverbial [small-file problem](https://www.quora.com/What-is-the-small-file-problem-in-Hadoop). Here again, instead of optimizing small-size IOPS, we focus on application-specific (re)sharding, whereby each shard has a desirable size and contains a batch of the original (small) files, and the files (aka samples) are sorted to optimize subsequent computation.

## Key Concepts and Diagrams

This section contains high-level diagrams that introduce key concepts and architecture, as well as possible deployment options.

An AIS cluster *comprises* arbitrary (and not necessarily equal) numbers of **gateways** and **storage targets**. Targets utilize local disks, while gateways are HTTP **proxies** that provide most of the control plane and never touch the data.

> The terms *gateway* and *proxy* are used interchangeably throughout this README and other sources in the repository.

Both **gateways** and **targets** are userspace daemons that join (and, by joining, form) a storage cluster at their respective startup times, or upon user request. AIStore can be deployed on any commodity hardware with pretty much any Linux distribution (although we do recommend a 4.x kernel). There are no designed-in size/scale type limitations. There are no dependencies on special hardware capabilities. The code itself is free, open, and MIT-licensed.
The following diagram depicts a clustered AIS node and makes the point that gateways and storage targets can be (but do not have to be) colocated in a single machine (or VM):

AIS can be deployed as a self-contained standalone persistent storage cluster or as a fast tier in front of any of the supported backends, including Amazon S3 and Google Cloud (GCP). The built-in caching mechanism provides an LRU replacement policy on a per-bucket basis while taking into account configurable high and low capacity watermarks (see [LRU](storage_svcs.md#lru) for details). AWS/GCP integration is *turnkey* and boils down to provisioning AIS targets with credentials to access Cloud-based buckets.

If a (compute + storage) rack is the *unit of deployment*, it may look as follows:

Finally, an AIS target provides a number of storage services with an [S3-like RESTful API](http_api.md) on top and a MapReduce layer that we call [dSort](#dsort).

## Traffic Patterns

In AIS, all inter- and intra-cluster networking is based on HTTP/1.1 (with an HTTP/2 option currently under development).
HTTP(S) clients execute RESTful operations vis-à-vis AIS gateways, and data then moves **directly** between the clients and storage targets, with no metadata servers and no extra processing in between:

> MDS in the diagram above stands for the metadata server(s) or service(s).

In the picture, a client on the left side makes an I/O request which is then fully serviced by the *left* target, one of the nodes in the AIS cluster (not shown).
Symmetrically, the *right* client engages with the *right* AIS target for its own GET or PUT object transaction.
In each case, the entire transaction is executed via a single TCP session that connects the requesting client directly to one of the clustered nodes.
As far as the datapath is concerned, there are no extra hops in the line of communications.
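Data can move directly between a client and a target only if a gateway can work out which target owns an object from the object name and the current cluster map alone. The sketch below shows one way to do that: rendezvous (highest-random-weight, HRW) hashing, applied in two steps, first across targets and then across the chosen target's mountpaths. It illustrates the technique and is not AIS's Go implementation. The target IDs and mountpath paths are hypothetical, and SHA-256 is used only because it ships with the Python standard library.

```python
import hashlib


def _weight(node_id: str, name: str) -> int:
    # Deterministic pseudo-random 64-bit weight for a (node, object) pair.
    digest = hashlib.sha256(f"{node_id}/{name}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def hrw_pick(nodes: list[str], name: str) -> str:
    # Rendezvous (highest-random-weight) hashing: the node with the largest
    # weight for this name wins; no lookup table or metadata server is needed.
    return max(nodes, key=lambda node: _weight(node, name))


def locate(cluster: dict[str, list[str]], obj_name: str) -> tuple[str, str]:
    # Two-dimensional placement: first pick a target, then a mountpath on it.
    target = hrw_pick(sorted(cluster), obj_name)
    mountpath = hrw_pick(cluster[target], obj_name)
    return target, mountpath


# Hypothetical cluster map: target ID -> local mountpaths.
cluster = {
    "t1": ["/ais/mp1", "/ais/mp2"],
    "t2": ["/ais/mp1", "/ais/mp2", "/ais/mp3"],
    "t3": ["/ais/mp1"],
}
print(locate(cluster, "ais://images/cat-0001.jpg"))
```

Every gateway evaluates the same function over the same cluster map, so each one can compute the redirect independently. Adding a target relocates only the objects for which the new target now has the highest weight. That property makes rendezvous hashing a natural fit for incremental background rebalancing.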
> For detailed traffic-pattern diagrams, please refer to [this readme](traffic_patterns.md).

Objects are distributed across the AIS cluster via a (lightning-fast) two-dimensional consistent hash. Objects get spread across all storage targets and, within each target, across all local disks.

## Read-after-write consistency

`PUT(object)` is a transaction. A new object (or a new version of the object) becomes visible/accessible only when aistore finishes writing the first replica and its metadata.

For S3 or any other remote [backend](/docs/providers.md), the latter includes:

* remote PUT via the vendor's SDK library;
* local write under a temporary name;
* receiving a successful remote response that carries remote metadata;
* simultaneously, computing the checksum (per bucket config);
* optionally, checksum validation, if configured;
* finally, writing the combined object metadata, at which point the object becomes visible and accessible.

But _not_ prior to that point!

If configured, additional copies and EC slices are added asynchronously. E.g., given a bucket with 3-way replication, you may already read the first replica while the other two (copies) are still pending.

It is worth emphasizing that the same rules of data protection and consistency are universally enforced across the board for all _data writing_ scenarios, including (but not limited to):

* RESTful PUT (above);
* cold GET (as in: `ais get s3://abc/xyz /dev/null` when S3 has `abc/xyz` while aistore doesn't);
* copy bucket; transform bucket;
* multi-object copy; multi-object transform; multi-object archive;
* prefetch remote bucket;
* download very large remote objects (blobs);
* rename bucket;
* promote NFS share;

and more.

## Open Format

AIS targets utilize local Linux filesystems, including (but not limited to) xfs, ext4, and openzfs.
User data is checksummed and stored *as is*, without any alteration (which also allows us to support a direct client <=> disk datapath). The AIS on-disk format is, therefore, largely defined by the local filesystem(s) chosen at deployment time.

Notwithstanding, AIS stores and then maintains object replicas, erasure-coded slices, and bucket metadata; in short, a variety of local and global-scope (persistent) structures. For details, please refer to:

- [On-Disk Layout](on_disk_layout.md)

> **You can access your data with and without AIS, and without any need to *convert* or *export/import*, etc. - at any time! Your data is stored in its original native format using user-given object names. Your data can be migrated out of AIS at any time as well, and, again, without any dependency whatsoever on the AIS itself.**

> Your own data is [unlocked](https://en.wikipedia.org/wiki/Vendor_lock-in) and immediately available at all times.

## Existing Datasets

A common way to use AIStore includes the most fundamental and, often, the very first step: populating the AIS cluster with an existing dataset, or datasets. Those (datasets) can come from remote buckets (AWS, Google Cloud, Azure), HDFS directories, NFS shares, local files, or any vanilla HTTP(S) locations.

To this end, AIS provides a number of easy ways, ranging from conventional on-demand caching to *promoting* colocated files and directories, and more.

> Related references and examples include this [technical blog](https://aiatscale.org/blog/2021/12/07/cp-files-to-ais) that shows how to copy a file-based dataset in two easy steps.

1. [Cold GET](#existing-datasets-cold-get)
2. [Prefetch](#existing-datasets-batch-prefetch)
3. [Internet Downloader](#existing-datasets-integrated-downloader)
4. [HTTP(S) Datasets](#existing-datasets-https-datasets)
5. [Promote local or shared files](#promote-local-or-shared-files)
6. [Backend Bucket](bucket.md#backend-bucket)
7. [Download very large objects (BLOBs)](/docs/cli/blob-downloader.md)
8. [Copy remote bucket](/docs/cli/bucket.md#copy-bucket)
9. [Copy multiple remote objects](/docs/cli/bucket.md#copy-multiple-objects)

In particular:

### Existing Datasets: Cold GET

If the dataset in question is accessible via an S3-like object API, start working with it via the GET primitive of the [AIS API](http_api.md). Just make sure to provision AIS with the corresponding credentials to access the dataset's bucket in the Cloud.

> As far as S3-like backends are concerned, AIS currently supports Amazon S3, Google Cloud, and Azure.

> AIS executes a *cold GET* from the Cloud if and only if the object is not stored (by AIS), **or** the object has a bad checksum, **or** the object's version is outdated.

In all other cases, AIS will service the GET request without going to the Cloud.

### Existing Datasets: Batch Prefetch

Alternatively or in parallel, you can also *prefetch* a flexibly-defined *list* or *range* of objects from any given remote bucket, as described in [this readme](batch.md).

For CLI usage, see:

* [CLI: prefetch](/docs/cli/object.md#prefetch-objects)

### Existing Datasets: Integrated Downloader

But what if the dataset in question exists in the form of (vanilla) HTTP/HTTPS URL(s)? What if there's a popular bucket in, say, Google Cloud that contains images that you'd like to bring over into your Data Center and make available locally for AI researchers?

For these and similar use cases we have [AIS Downloader](/docs/downloader.md), an integrated tool that can execute massive download requests, track their progress, and populate AIStore directly from the Internet.

### Existing Datasets: HTTP(S) Datasets

AIS can also be designated as an HTTP proxy vis-à-vis third-party object storage. This mode of operation requires:

1. HTTP(S) client side: set the `http_proxy` (`https_proxy` - for HTTPS) environment variable;
2. disable the proxy for AIS cluster IP addresses/hostnames (for `curl`, use the `--noproxy` option).

Note that `http_proxy` is supported by most UNIX systems and is recognized by most (but not all) HTTP clients:

```console
$ export http_proxy=<AIS proxy IPv4 or hostname>
```

WARNING: Currently, HTTP(S)-based datasets can only be used with clients that support overriding the proxy for certain hosts (e.g., `curl ... --noproxy=$(curl -s G/v1/cluster?what=target_ips)`).
Otherwise, the client gets stuck in a redirect loop, as the request to the target gets redirected via the proxy.

In combination, these two settings redirect all **unmodified** client-issued HTTP(S) requests to the AIS proxy/gateway, and AIS then executes them transparently from the client's perspective. AIStore will create a bucket on the fly to store and cache HTTP(S)-reachable files, all the while supporting the entire gamut of functionality, including ETL. Examples of HTTP(S) datasets can be found in [this readme](bucket.md#public-https-dataset).

### Promote local or shared files

AIS can also `promote` files and directories to objects. The operation entails synchronous or asynchronous, massively-parallel ingestion from any accessible file source, including:

- a local directory (or directories) of any target node (or nodes);
- a file share mounted on one or several (or all) target nodes in the cluster.

You can now use `promote` ([CLI](/docs/cli/object.md#promote-files-and-directories), API) to populate AIS datasets with **any external file source**.

Originally (experimentally) introduced in v3.0 to handle "files and directories colocated within AIS storage target machines", `promote` has been redefined, extended (in terms of supported options and permutations), and completely reworked in v3.9.
## Data Protection

AIS supports end-to-end checksumming and two distinct [storage services](storage_svcs.md), N-way mirroring and erasure coding, providing for data redundancy.

The functionality that we denote as end-to-end checksumming further entails:

- self-healing upon detecting corruption,
- optimizing out redundant writes upon detecting that the destination object already exists,
- utilizing the client-provided checksum (iff provided) to perform end-to-end checksum validation,
- utilizing the Cloud checksum of an object that originated in a Cloud bucket, and
- utilizing the object's version to perform a so-called "cold" GET when the object exists both in AIS and in the Cloud,

and more.

Needless to say, each of these sub-topics may require additional discussion of:

* [configurable options](configuration.md),
* [default settings](bucket.md), and
* the corresponding performance tradeoffs.

### Erasure Coding vs IO Performance

When an AIS bucket is EC-configured as (D, P), where D is the number of data slices and P is the number of parity slices, the corresponding space utilization ratio is not `(D + P)/D`, as one would assume.

It is, actually, `1 + (D + P)/D`.

This is because AIS was created, first and foremost, to perform and scale: AIS always keeps one full replica at its [HRW location](traffic_patterns.md).

AIS will utilize EC to automatically self-heal upon detecting corruption (of the full replica). When a client performs a read on a non-existing (or not found) name, AIS will check with EC, assuming, obviously, that the bucket is erasure coded.

The EC-related philosophy can be summarized in one word: **recovery**. EC plays no part in the fast path.
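The small helper below works through the space-utilization formula from this section. Each of the D data slices and P parity slices holds 1/D of the object, and AIS adds one full replica on top. The (D, P) pairs in the loop are arbitrary examples, not recommended settings.

```python
from fractions import Fraction


def ec_space_ratio(d: int, p: int, full_replica: bool = True) -> Fraction:
    """Stored bytes per byte of user data for an EC (D, P) bucket.

    Slices alone cost (D + P)/D, because each slice is 1/D of the object;
    keeping one full replica as well adds 1.
    """
    if d < 1 or p < 0:
        raise ValueError("need D >= 1 and P >= 0")
    slices = Fraction(d + p, d)
    return slices + 1 if full_replica else slices


for d, p in [(4, 2), (8, 2), (10, 4)]:
    total = float(ec_space_ratio(d, p))
    slices_only = float(ec_space_ratio(d, p, full_replica=False))
    print(f"EC({d}, {p}): {total:.2f}x (slices only: {slices_only:.2f}x)")
```

For example, EC(4, 2) costs 2.5x rather than 1.5x. The extra 1x pays for serving reads from a full replica without ever touching the slices on the fast path.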
## Scale-Out

The scale-out category includes balanced and fair distribution of objects, whereby each storage target stores (via a variant of consistent hashing) roughly 1/N of the entire namespace, where N is the number of targets. Neither N nor the number of objects is limited by design.

> AIS cluster capability to **scale out is truly unlimited**. The real-life limitations can only be imposed by the environment, such as the capacity of a given Data Center.

Similar to the AIS gateways, AIS storage targets can join and leave at any moment, causing the cluster to rebalance itself in the background and without downtime.

## Networking

Architecture-wise, aistore is built to support 3 (three) logical networks:

* user-facing public (and, possibly, **multi-home**) network interface;
* intra-cluster control; and
* intra-cluster data.

For example, the corresponding config in production may look as follows:

```console
$ ais config node t[nKfooBE] local h... <TAB-TAB>
host_net.hostname                 host_net.port_intra_control       host_net.hostname_intra_control
host_net.port                     host_net.port_intra_data          host_net.hostname_intra_data

$ ais config node t[nKfooBE] local host_net --json

    "host_net": {
        "hostname": "10.50.56.205",
        "hostname_intra_control": "ais-target-27.ais.svc.cluster.local",
        "hostname_intra_data": "ais-target-27.ais.svc.cluster.local",
        "port": "51081",
        "port_intra_control": "51082",
        "port_intra_data": "51083"
    }
```

The fact that there are 3 logical networks is not a limitation, i.e., there is no requirement to have exactly 3 (networks).
Using the example above, here's a small deployment-time change to run a single network:

```console
    "host_net": {
        "hostname": "10.50.56.205",
        "hostname_intra_control": "ais-target-27.ais.svc.cluster.local",
        "hostname_intra_data": "ais-target-27.ais.svc.cluster.local",
        "port": "51081",
        "port_intra_control": "51081",    # <<<<<< notice the same port
        "port_intra_data": "51081"        # <<<<<< ditto
    }
```

Ideally, though, production clusters are deployed over 3 physically different and isolated networks, so that, for instance, intense data traffic does not add latency to control traffic.

Separately, there's a **multi-homing** capability motivated by the fact that today's server systems may often have, say, two 50Gbps network adapters. To deliver the entire 100Gbps _without_ LACP trunking and (static) teaming, we could simply have something like:

```console
    "host_net": {
        "hostname": "10.50.56.205, 10.50.56.206",
        "hostname_intra_control": "ais-target-27.ais.svc.cluster.local",
        "hostname_intra_data": "ais-target-27.ais.svc.cluster.local",
        "port": "51081",
        "port_intra_control": "51082",
        "port_intra_data": "51083"
    }
```

And that's all: add the second NIC (the second IPv4 address, `10.50.56.206`, above) with **no** other changes.

See also:

* [aistore configuration](configuration.md)

## HA

AIS features a [highly-available control plane](ha.md) where all gateways are absolutely identical in terms of their (client-accessible) data and control plane [APIs](http_api.md).

Gateways can be added and removed ad hoc, and deployed remotely and/or locally to the compute clients (the latter option eliminates one network roundtrip to resolve object locations).

## Fast Tier

AIS can be deployed as a fast tier in front of any of the multiple supported [backends](providers.md).
As a fast tier, AIS populates itself on demand (via *cold* GETs) and/or via its own *prefetch* API (see [List/Range Operations](batch.md#listrange-operations)), which runs in the background to download batches of objects.

## Other Services

The (quickly growing) list of services includes (but is not limited to):

* [health monitoring and recovery](/health/fshc.md)
* [range read](http_api.md)
* [dry-run (to measure raw network and disk performance)](performance.md#performance-testing)
* performance and capacity monitoring with full observability via StatsD/Grafana
* load balancing

> Load balancing consists of optimal selection of a local object replica and, therefore, requires buckets configured for [local mirroring](storage_svcs.md#read-load-balancing).

Most notably, AIStore provides **[dSort](/docs/dsort.md)**, a MapReduce layer that performs a wide variety of user-defined merge/sort *transformations* on large datasets used for/by deep learning applications.

## dSort

dSort “views” AIS objects as named shards that comprise archived key/value data. In its 1.0 realization, dSort supports tar, zip, and tar-gzip formats and a variety of built-in sorting algorithms; it is designed, though, to incorporate other popular archival formats, including `tf.Record` and `tf.Example` ([TensorFlow](https://www.tensorflow.org/tutorials/load_data/tfrecord)) and [MessagePack](https://msgpack.org/index.html). The user runs dSort by specifying an input dataset, a by-key or by-value (i.e., by content) sorting algorithm, and a desired size of the resulting shards. The rest is done automatically and in parallel by the AIS storage targets. No part of the processing involves single-host centralization, and both the current dSort stage and the progress within that stage can be monitored via user-friendly statistics.
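To illustrate the kind of transformation described above, here is a single-process Python sketch: sort records by key, then repack them into tar shards of a desired size, using the standard `tarfile` module. It is neither dSort's implementation nor its job-specification format. The record names, the by-name sort key, and the hypothetical `max_shard_size` parameter are illustrative assumptions, and dSort itself spreads this work across all targets in parallel.

```python
import io
import tarfile


def reshard(records: dict[str, bytes], max_shard_size: int) -> list[bytes]:
    """Sort records by name (the key) and pack them into tar shards.

    A new shard starts when the next record would push the current shard's
    payload past max_shard_size; an oversized record gets a shard of its own.
    """
    groups: list[list[str]] = [[]]
    current = 0
    for name in sorted(records):  # by-key sort
        size = len(records[name])
        if groups[-1] and current + size > max_shard_size:
            groups.append([])
            current = 0
        groups[-1].append(name)
        current += size

    shards = []
    for group in groups:
        buf = io.BytesIO()
        with tarfile.open(fileobj=buf, mode="w") as tar:
            for name in group:
                info = tarfile.TarInfo(name=name)
                info.size = len(records[name])
                tar.addfile(info, io.BytesIO(records[name]))
        shards.append(buf.getvalue())
    return shards


def shard_members(shard: bytes) -> list[str]:
    # List member names of an in-memory tar shard, in archive order.
    with tarfile.open(fileobj=io.BytesIO(shard), mode="r") as tar:
        return tar.getnames()


samples = {f"sample-{i:03d}.jpg": b"x" * 400 for i in (7, 2, 9, 1, 5)}
for i, shard in enumerate(reshard(samples, max_shard_size=1000)):
    print(i, shard_members(shard))
```

The size limit here counts only record payload, not tar headers and padding. A production resharder would need to decide which of the two a "desired shard size" refers to.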
By design, dSort integrates tightly with AIS storage to take full advantage of the combined clustered CPU and IOPS. Each dSort job (note that multiple jobs can execute in parallel) generates a massively-parallel intra-cluster workload in which each AIS target communicates with all other targets and executes a proportional "piece" of the job. This ultimately results in a *transformed* dataset optimized for subsequent training and inference by deep learning apps.

## CLI

AIStore includes an easy-to-use management-and-monitoring facility called [AIS CLI](/docs/cli.md). Once it is [installed](/docs/cli.md#getting-started), to start using it, simply execute:

```console
$ export AIS_ENDPOINT=http://G
$ ais --help
```

where `G` (above) denotes a `hostname:port` address of any AIS gateway (for developers it'll often be `localhost:8080`). Needless to say, the export needs to be done only once per shell session.

One salient feature of AIS CLI is its Bash-style [auto-completions](/docs/cli.md#ais-cli-shell-auto-complete) that allow users to easily navigate supported operations and options by simply pressing the TAB key:

AIS CLI is under active development. For more information, please see the project's own [README](/docs/cli.md).
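Scripts that talk to the cluster directly can reuse the same `AIS_ENDPOINT` variable. The following minimal Python sketch falls back to the developer address `localhost:8080` mentioned above when the variable is unset (an assumption of this sketch, not documented CLI behavior). It then builds the `/v1/cluster?what=target_ips` query that this document pipes into `curl --noproxy`. The helper names are hypothetical. Only the URL construction is exercised here; `fetch` issues a plain standard-library GET and needs a running cluster.

```python
import os
import urllib.request
from typing import Optional

DEFAULT_ENDPOINT = "http://localhost:8080"  # typical developer setup


def ais_endpoint() -> str:
    # Same environment variable the CLI uses; drop any trailing slash.
    return os.environ.get("AIS_ENDPOINT", DEFAULT_ENDPOINT).rstrip("/")


def target_ips_url(endpoint: Optional[str] = None) -> str:
    # The query this document passes to `curl --noproxy=...`.
    base = (endpoint or ais_endpoint()).rstrip("/")
    return f"{base}/v1/cluster?what=target_ips"


def fetch(url: str, timeout: float = 5.0) -> str:
    # Plain HTTP GET via the standard library; needs a reachable gateway.
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode()


print(target_ips_url())
```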
## ETL

AIStore is a hyper-converged architecture tailored specifically to run [extract-transform-load](/ext/etl/README.md) workloads close to the data, on (and by) all storage nodes in parallel:

For background and further references, see:

* [Extract, Transform, Load with AIStore](etl.md)
* [AIS-ETL introduction and a Jupyter notebook walk-through](https://www.youtube.com/watch?v=4PHkqTSE0ls)

## _No limitations_ principle

There are **no** designed-in limitations on:

* object sizes;
* the total number of objects and buckets in an AIS cluster;
* the number of objects in a single AIS bucket;
* the numbers of gateways (proxies) and storage targets in an AIS cluster.

Ultimately, the limit on object size may be imposed by the local filesystem of choice and physical disk capacity, while the limit on cluster size may be imposed by the capacity of the hosting AIStore Data Center. As far as AIS itself is concerned, it does not impose any limitations whatsoever.