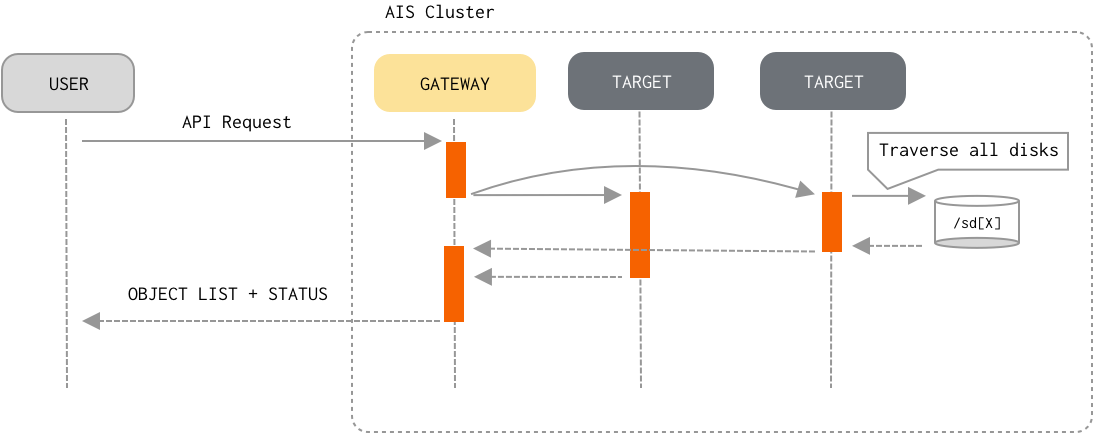

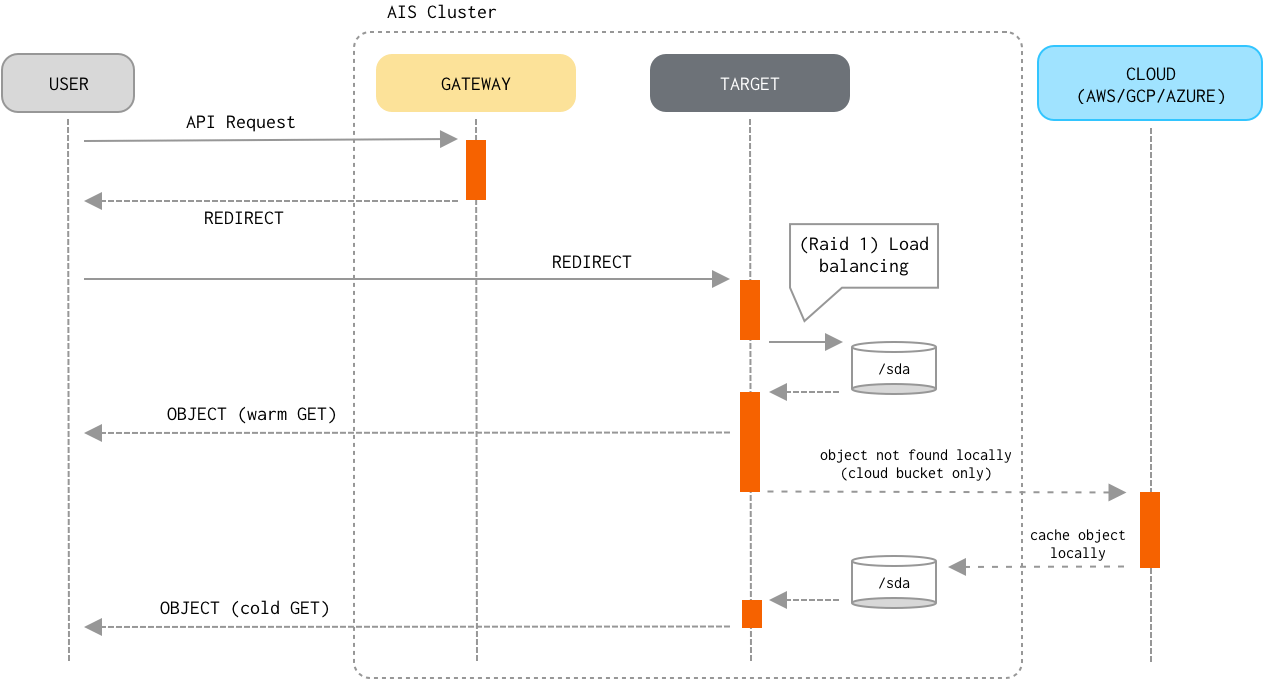

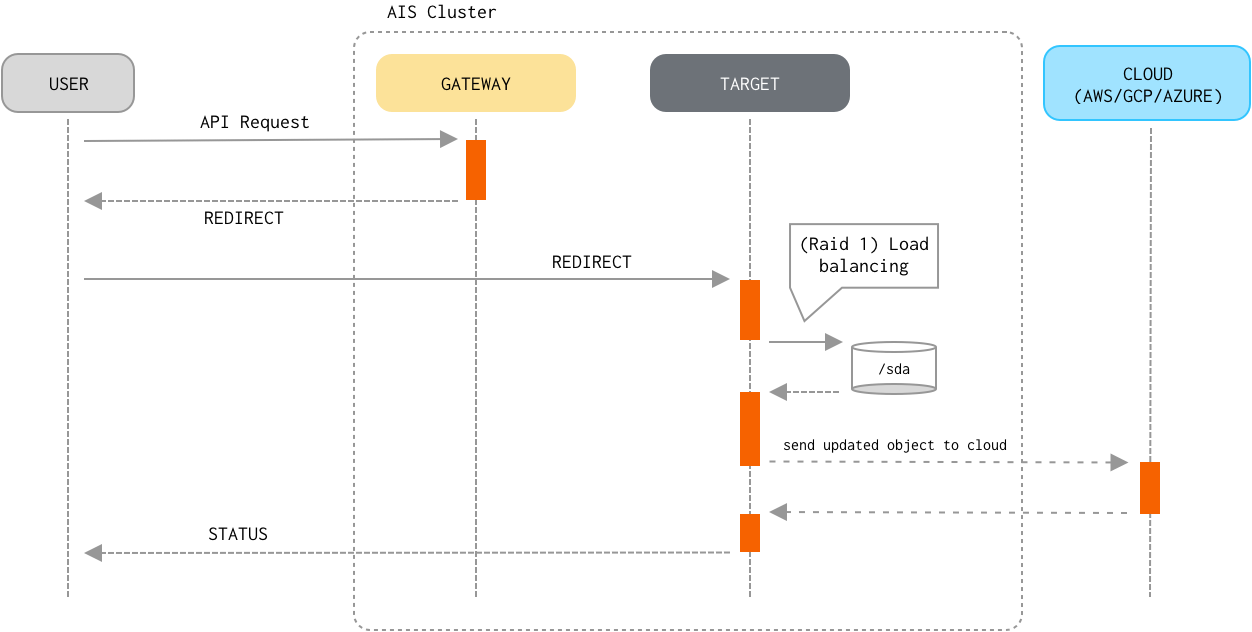

---
layout: post
title: TRAFFIC PATTERNS
permalink: /docs/traffic-patterns
redirect_from:
 - /traffic_patterns.md/
 - /docs/traffic_patterns.md/
---

## Table of Contents

- [Read and Write Traffic Patterns](#read-and-write-traffic-patterns)
  - [Read](#read)
  - [Write](#write)
- [List Objects](#list-objects)

## Read and Write Traffic Patterns

`GET object` and `PUT object` are by far the most common operations, often "responsible" for more than 90% (and sometimes more than 99%) of the entire traffic load. As far as the I/O processing pipeline is concerned, the first few steps of `GET` and `PUT` processing are very similar:

1. A client sends a `GET` or `PUT` request to any of the AIStore proxies/gateways.
2. The proxy determines the storage target to which it must redirect the request:
   1. Extract the bucket and object names from the request.
   2. Select a storage target as an HRW function of the `(cluster map, bucket, object)` triplet, where HRW stands for [Highest Random Weight](https://en.wikipedia.org/wiki/Rendezvous_hashing).
      Since HRW is a consistent hashing mechanism, the computation always yields the same target for the same `(bucket, object)` pair and cluster configuration.
   3. Redirect the request to the selected target.
3. The target parses the bucket and object names from the (redirected) request and determines whether the bucket is an AIS bucket or is provided by one of the supported 3rd party backends.
4. The target then determines the `mountpath` (and, therefore, the local filesystem) that will be used to perform the I/O operation.
   This time, the target computes HRW over `(configured mountpaths, bucket, object)`: in addition to the same `(bucket, object)` pair, the input includes all currently active (enabled) mountpaths.
5. Once the highest-randomly-weighted `mountpath` is selected, the target forms the fully-qualified name used to perform the local read or write.
   For instance, given `mountpath = /a/b/c`, the fully-qualified name may look like `/a/b/c/@<provider>/<bucket_name>/%<content_type>/<object_name>`.

Beyond these 5 (five) common steps, the similarity between `GET` and `PUT` request handling ends. The remaining steps are:

### Read

6. If the object already exists locally (i.e., it belongs to an AIS bucket, or the most recent version of a Cloud-based object is cached on a local disk), the target optionally validates the object's checksum and version.
   This type of `GET` is often referred to as a "warm `GET`".
7. Otherwise, the target performs a "cold `GET`" by downloading the latest version of the object from the Cloud.
   The target caches the downloaded object on a local disk, so that subsequent requests can retrieve it without contacting the Cloud.
8. Finally, the target delivers the object to the client.

> Consistency of the Read operation (vs Write/Delete/Append) is properly enforced.

### Write

6. If the object already exists locally and its checksum matches the checksum provided in the request, processing stops because the object has not changed.
7. The target streams the object contents from the HTTP request into a temporary work file.
8. Upon receiving the last byte of the object, the target computes the checksum of the received bytes and, if the request included a checksum, compares the two to ensure data integrity.
   If the checksums don't match, the target discards the object and returns an error to the client.
9. Once the object has been fully received, and if the bucket is backed by a Cloud provider, the target sends the new version of the object to the Cloud.
10. The target then renames the temporary work file to the fully-qualified name, writes extended attributes (which include the version and checksum), and commits the `PUT` transaction.

> Consistency of the Write operation (vs Read/Delete/Append) is properly enforced.

## List Objects

In contrast with the Read and Write datapath, the `list-objects` flow "engages" all targets in the cluster (and, effectively, all clustered disks). To reduce memory and network usage and to speed up processing, AIS employs a number of (designed-in) caching and buffering techniques:

1. A client sends an API request to any AIStore proxy/gateway to receive a new *page* of listed objects (a typical page size is 1,000 entries for a remote bucket and 10K for an AIS bucket).
2. The proxy then checks whether the requested page can be served from:
   1. A *cache* that may have been populated by previous `list-objects` requests.
   2. A *buffer*: when targets send more `list-objects` entries than requested, the proxy *buffers* the extra entries and then uses this *buffer* to serve subsequent requests.
   3. Otherwise, the proxy broadcasts the request to all targets and waits for their responses.
3. Upon request, each target starts traversing its local mountpaths.
   To avoid restarting the traversal from scratch on every *next page* request, each target resumes its traversal from the last object returned in response to the *previous page* request.
   The target does this by keeping an active thread that pauses once it has produced enough entries and resumes when more entries are requested.
4. Finally, the proxy combines all the responses and returns the list to the client.
   The proxy also *buffers* the `list-objects` entries that do not fit into one page (which is limited in size; see above).
   The proxy may also *cache* the entries for reuse across multiple clients and requests.
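The proxy-side merge in the `list-objects` flow can be illustrated with a small Go sketch. This is a hypothetical illustration, not AIStore's code: it assumes that each target returns at most one page of object names in ascending order together with a flag saying whether it has more, and `targetPage` and `mergePage` are illustrative names.

```go
package main

import (
	"fmt"
	"sort"
)

// targetPage is one target's (hypothetical) response: object names in
// ascending order, plus whether the target has more names after the last one.
type targetPage struct {
	Names []string
	More  bool
}

// mergePage combines per-target responses into one sorted page of at most
// pageSize names. It also returns the leftover names that are safe to buffer
// for the next page.
func mergePage(resps []targetPage, pageSize int) (page, buffered []string) {
	var (
		all      []string
		limit    string // smallest last name among targets with More == true
		hasLimit bool
	)
	for _, r := range resps {
		all = append(all, r.Names...)
		if r.More && len(r.Names) > 0 {
			if last := r.Names[len(r.Names)-1]; !hasLimit || last < limit {
				limit, hasLimit = last, true
			}
		}
	}
	sort.Strings(all)
	if hasLimit {
		// Drop names after limit: the target that stopped at limit may still
		// hold unreturned names that sort before them.
		n := sort.Search(len(all), func(i int) bool { return all[i] > limit })
		all = all[:n]
	}
	if len(all) <= pageSize {
		return all, nil
	}
	return all[:pageSize], all[pageSize:]
}

func main() {
	resps := []targetPage{
		{Names: []string{"a", "c", "e"}, More: true},
		{Names: []string{"b", "d"}, More: false},
		{Names: []string{"f", "g", "h"}, More: true},
	}
	page, buffered := mergePage(resps, 3)
	fmt.Println("page:", page, "buffered:", buffered) // page: [a b c] buffered: [d e]
}
```

In this sketch, names beyond the smallest "last name" of any target that still has more entries (`f`, `g`, `h` above) are not buffered, because that target's next batch could contain names that sort before them; they would simply be returned again on a later request.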
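The target and mountpath selection shared by the `GET` and `PUT` flows can be sketched with rendezvous (HRW) hashing. This is a minimal Go sketch, not AIStore's implementation: it assumes 64-bit FNV-1a from the Go standard library as the hash, and the helpers `weight`, `hrwSelect`, and `fqn`, as well as the target IDs, mountpaths, and the `ob` content type, are hypothetical values chosen for illustration.

```go
package main

import (
	"fmt"
	"hash/fnv"
	"path/filepath"
)

// weight returns a pseudo-random weight for the (node, bucket, object) triplet.
// Assumption: 64-bit FNV-1a stands in for the hash AIStore actually uses.
func weight(node, bucket, object string) uint64 {
	h := fnv.New64a()
	for _, s := range []string{node, bucket, object} {
		h.Write([]byte(s))
		h.Write([]byte{0}) // separator, so ("ab", "c") and ("a", "bc") differ
	}
	return h.Sum64()
}

// hrwSelect returns the node (a target ID or a mountpath) with the highest
// random weight for the given bucket and object; "" if nodes is empty.
func hrwSelect(nodes []string, bucket, object string) string {
	var best string
	var bestW uint64
	for i, n := range nodes {
		if w := weight(n, bucket, object); i == 0 || w > bestW {
			best, bestW = n, w
		}
	}
	return best
}

// fqn builds a fully-qualified local name following the layout
// <mountpath>/@<provider>/<bucket>/%<content_type>/<object>.
func fqn(mountpath, provider, bucket, contentType, object string) string {
	return filepath.Join(mountpath, "@"+provider, bucket, "%"+contentType, object)
}

func main() {
	// Hypothetical cluster map and mountpaths.
	targets := []string{"t1", "t2", "t3", "t4"}
	mountpaths := []string{"/ais/mp1", "/ais/mp2", "/ais/mp3"}
	bucket, object := "my-bucket", "images/cat.jpg"

	t := hrwSelect(targets, bucket, object)     // proxy: pick the target
	mp := hrwSelect(mountpaths, bucket, object) // target: pick the mountpath
	fmt.Println(t, fqn(mp, "ais", bucket, "ob", object))
}
```

Because each node's weight depends only on the node itself and the `(bucket, object)` pair, removing a node that did not win never moves the object; only objects whose winner is removed (or whose new winner is a newly added node) change location.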