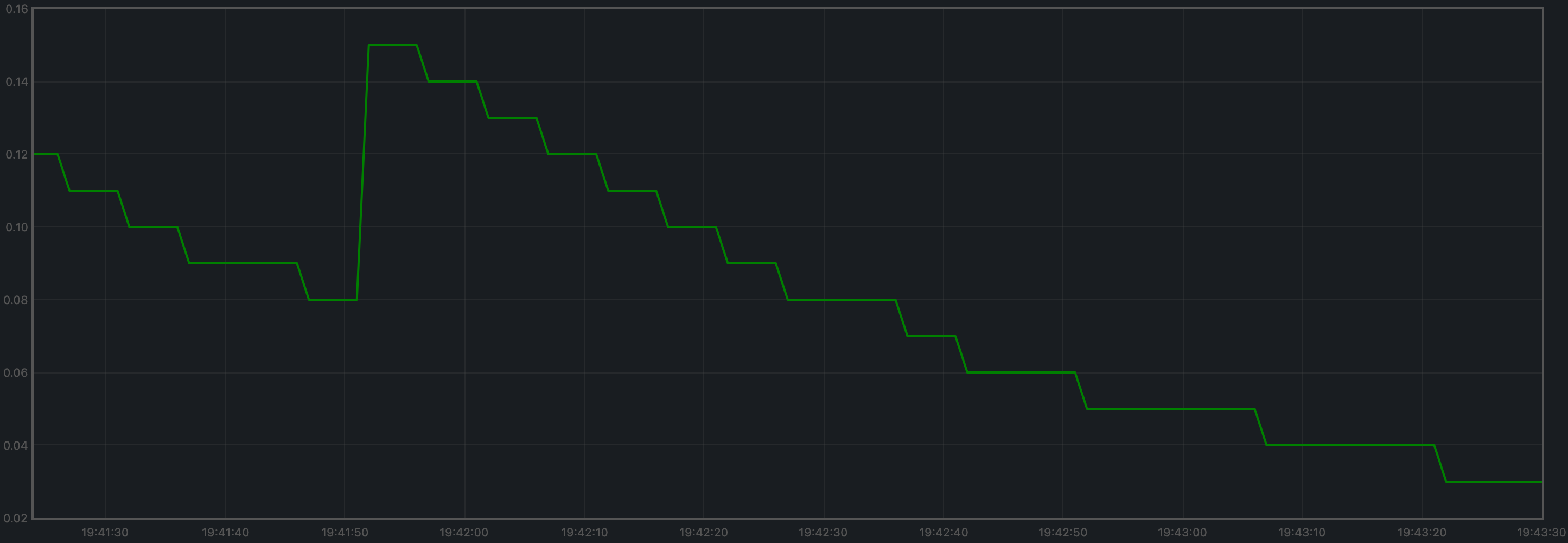

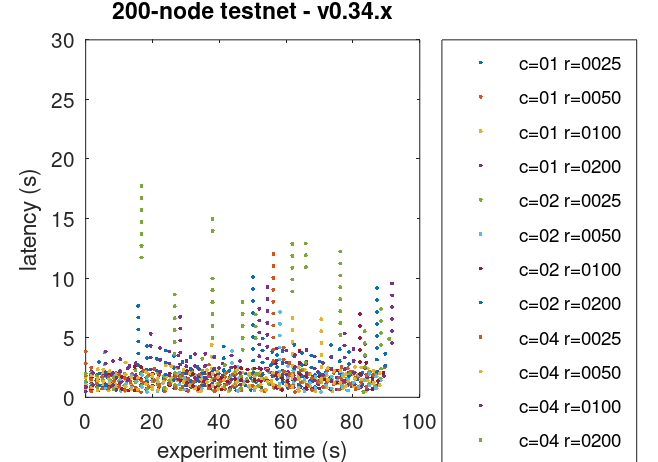

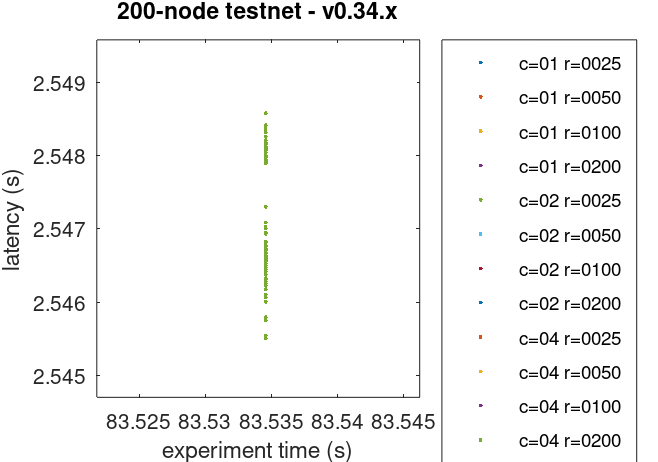

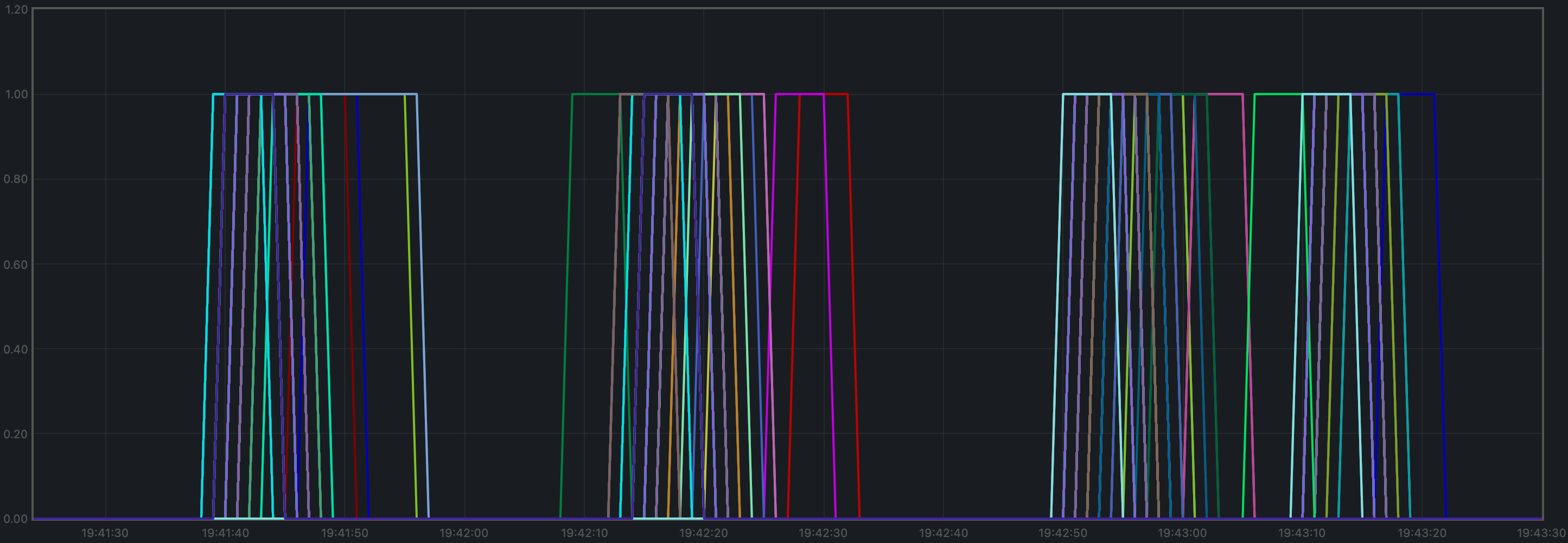

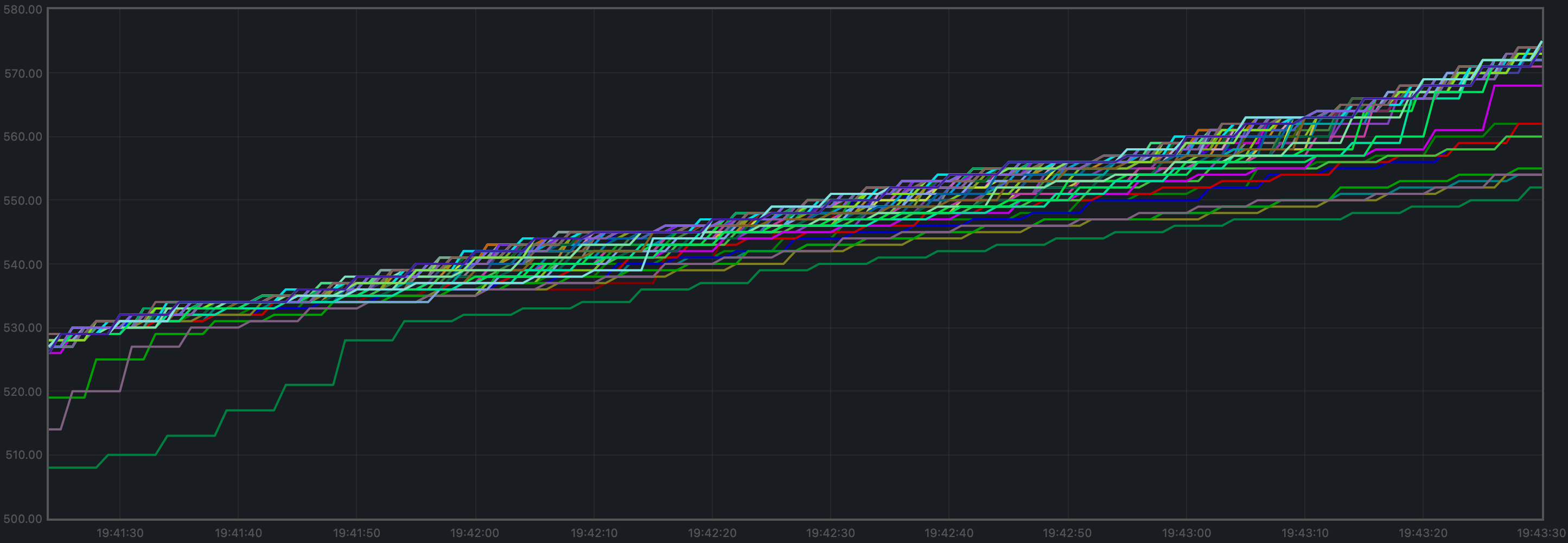

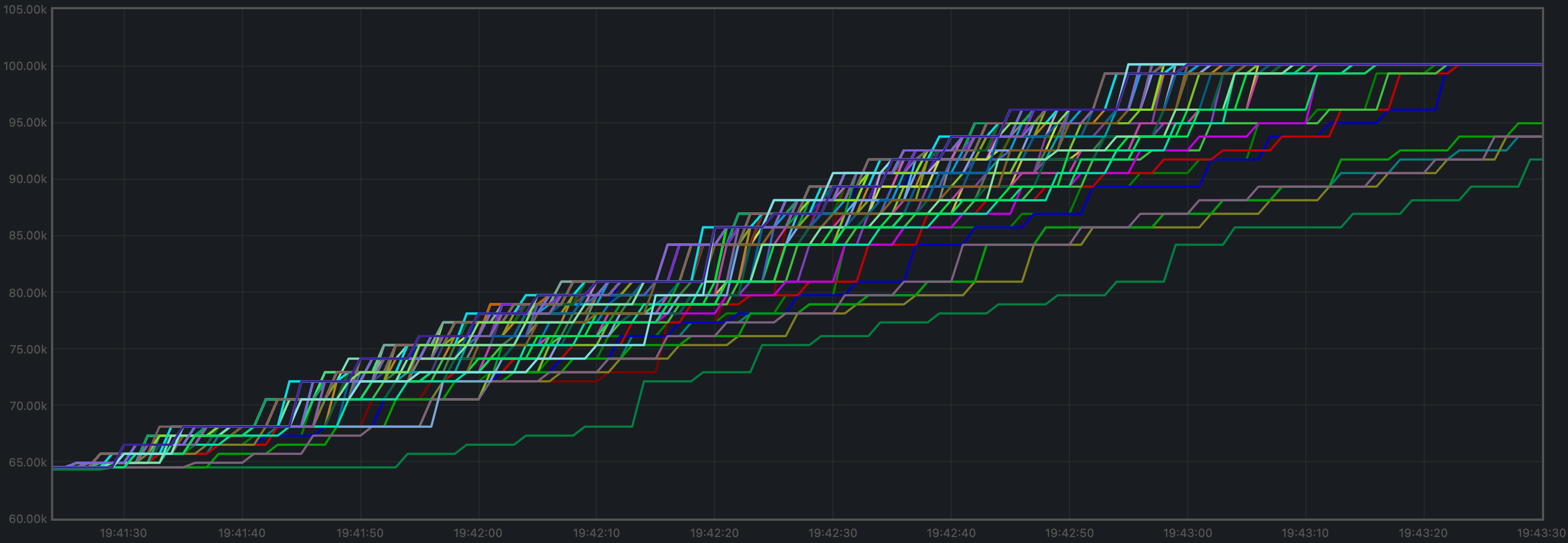

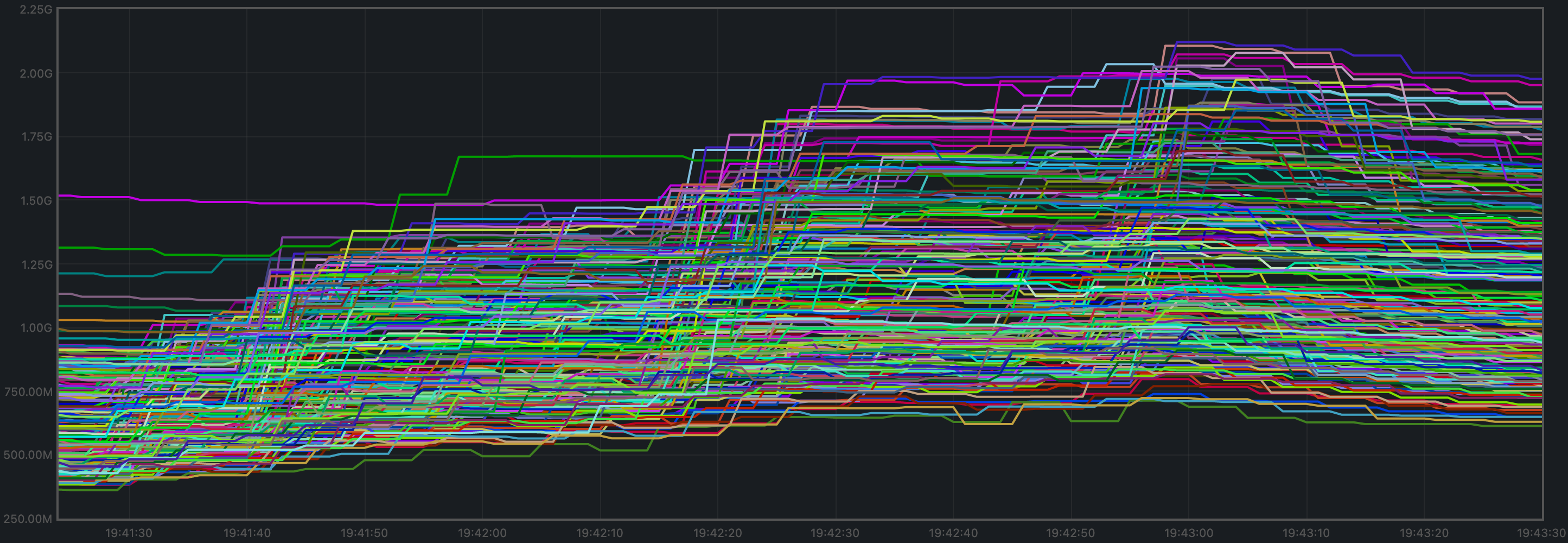

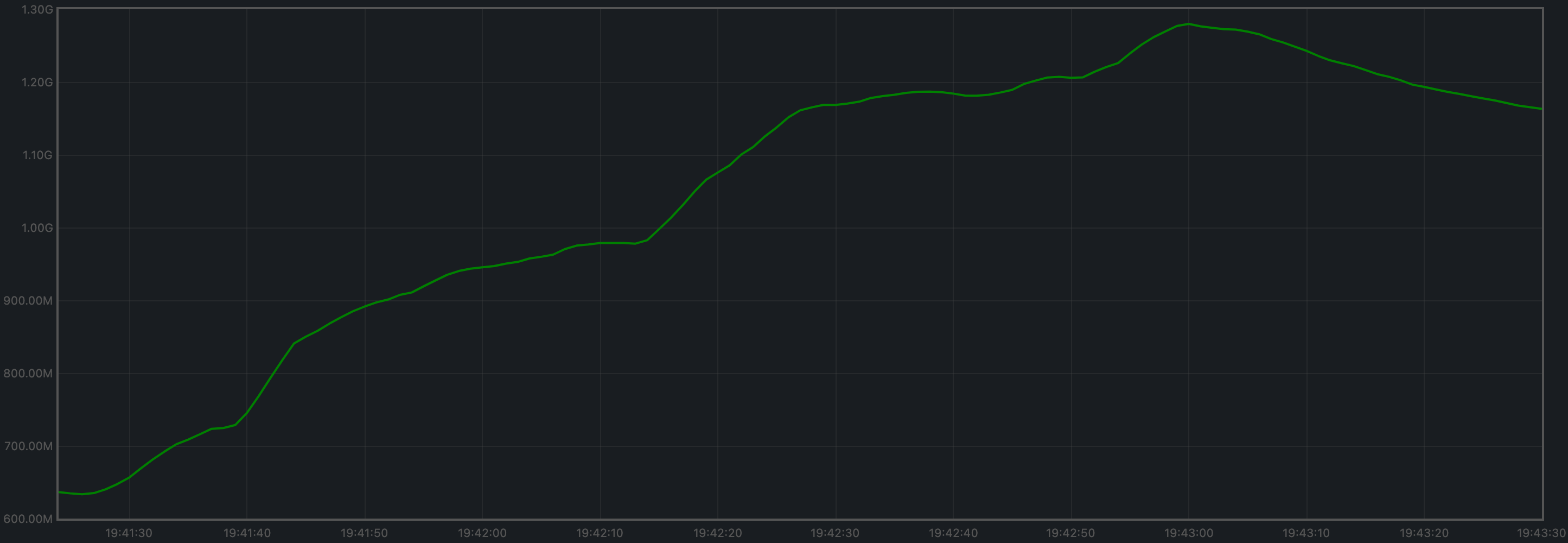

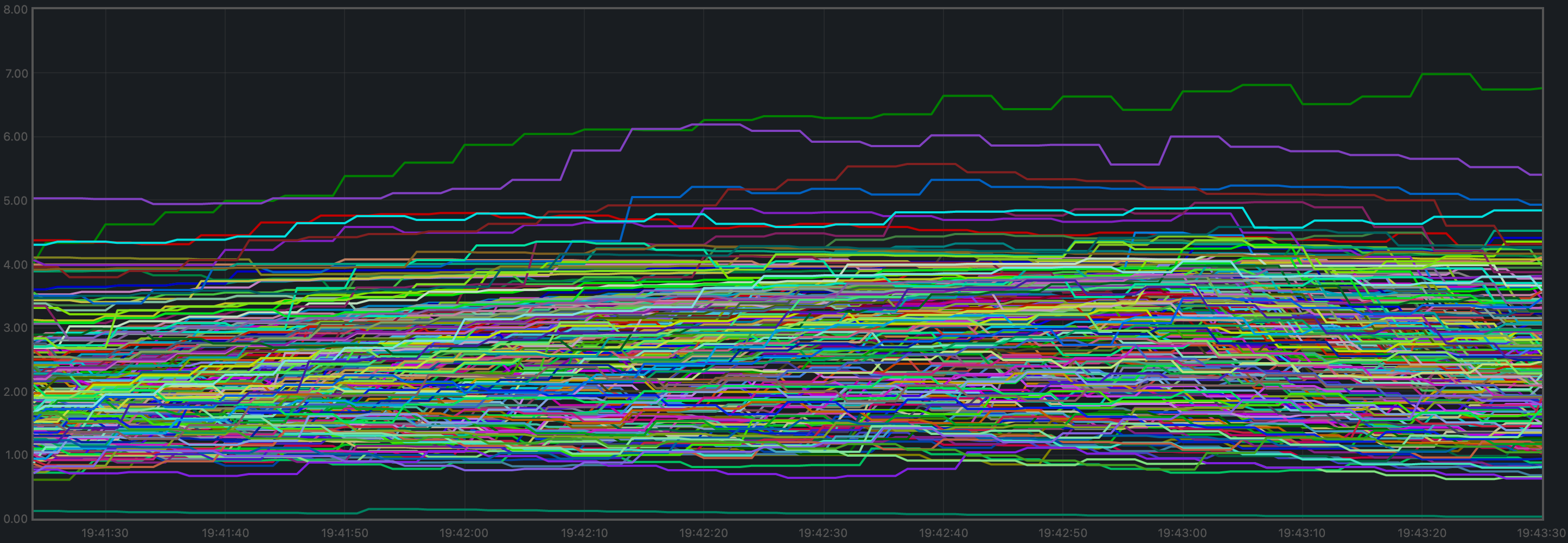

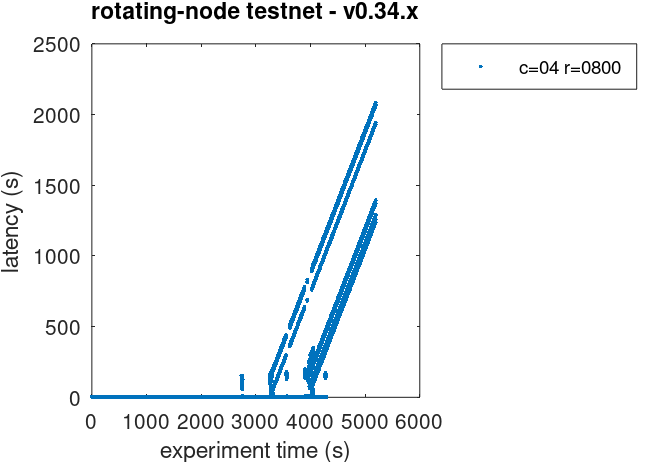

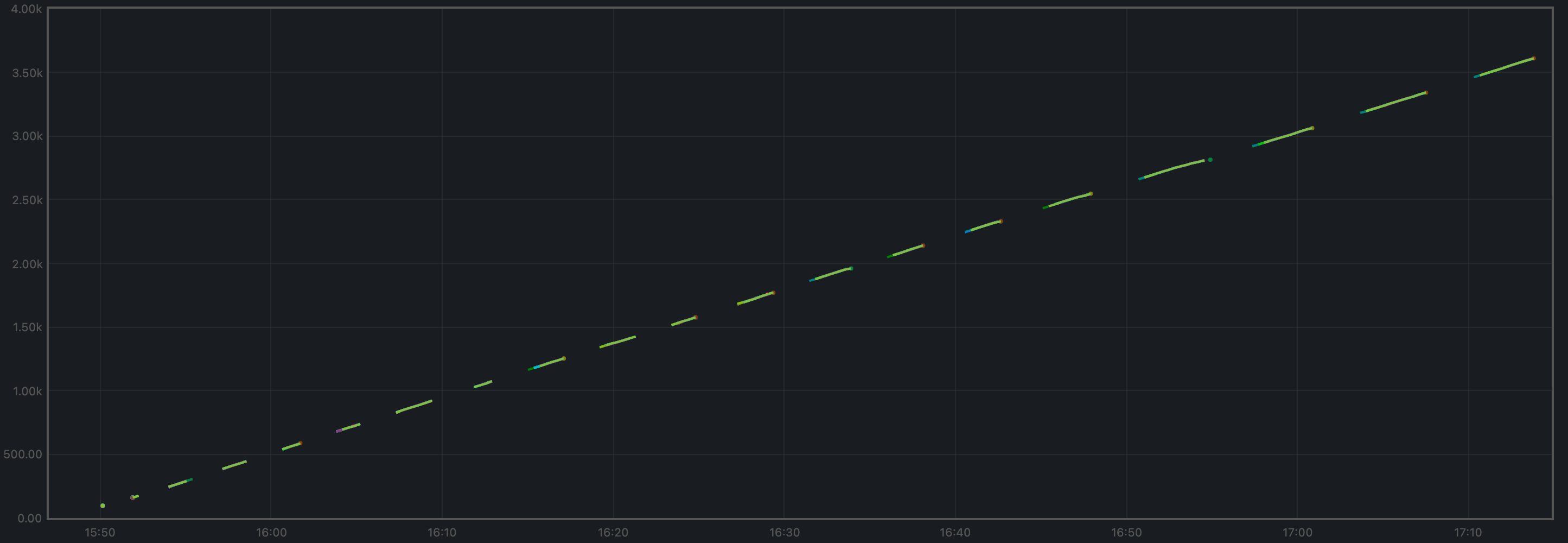

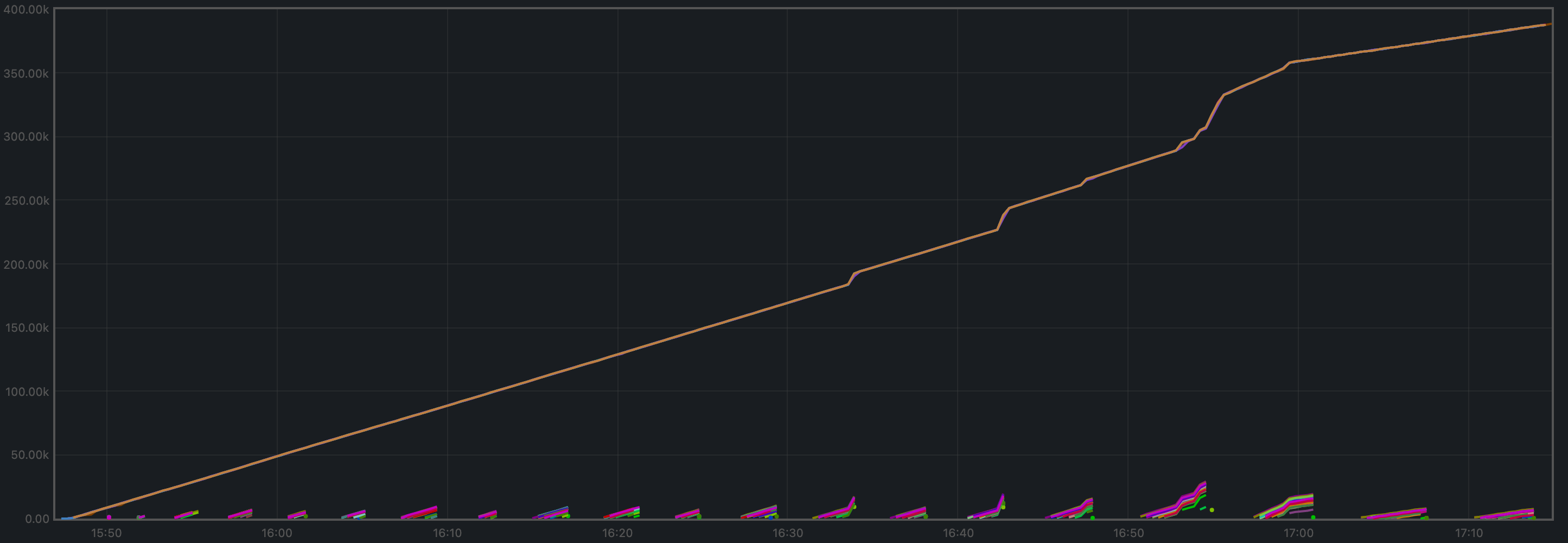

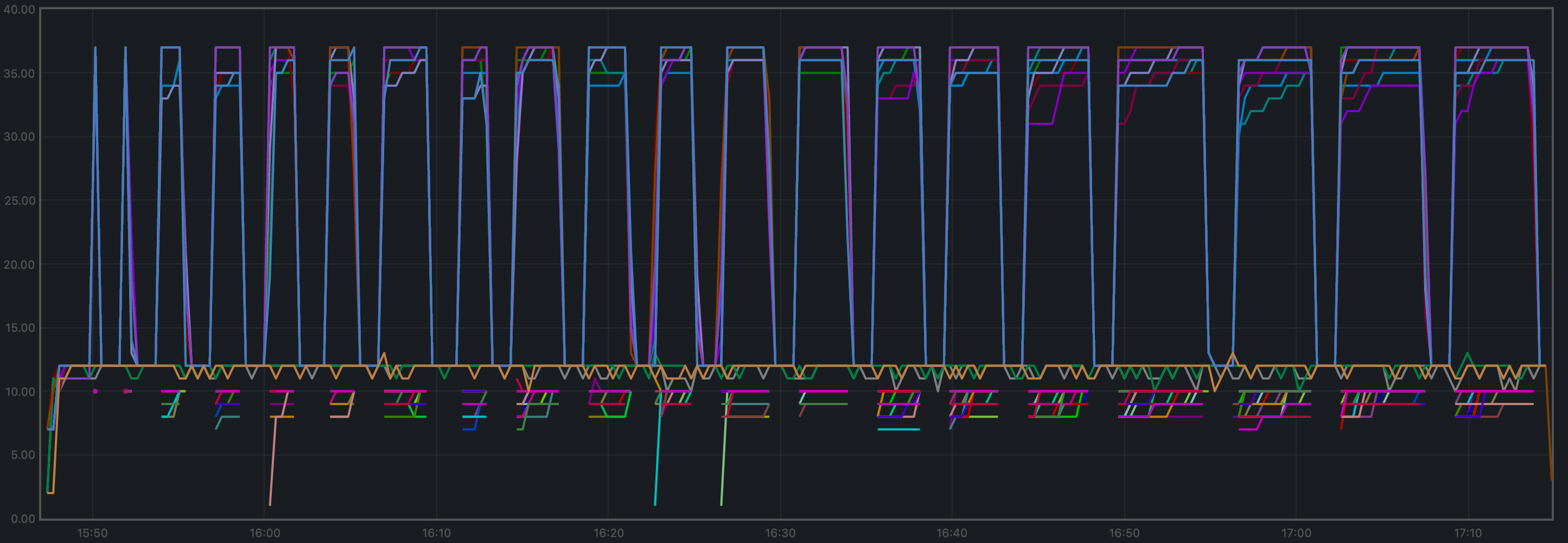

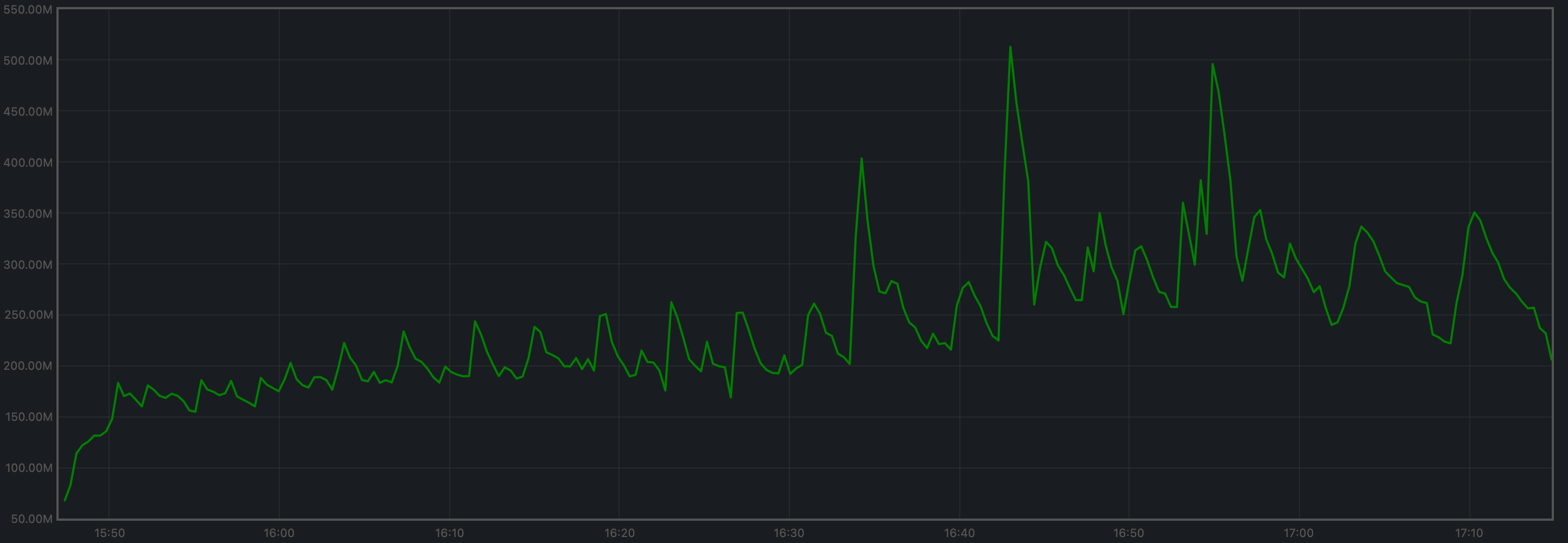

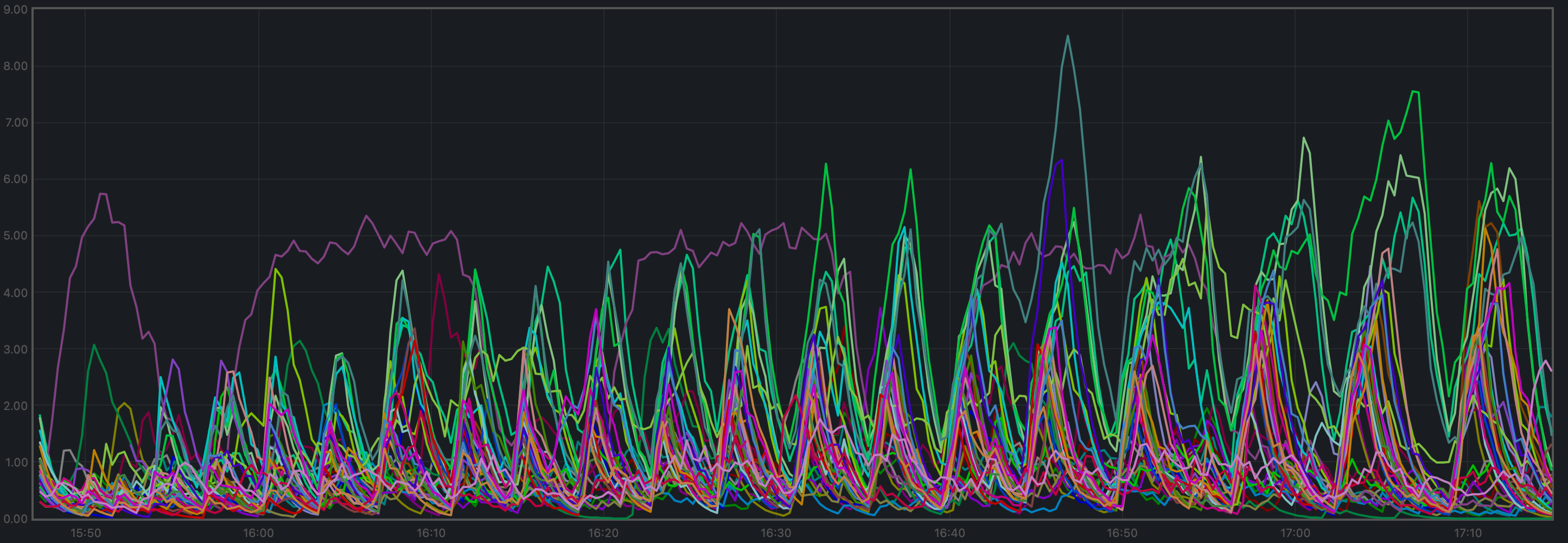

---
order: 1
parent:
  title: Tendermint Quality Assurance Results for v0.34.x
  description: This is a report on the results obtained when running v0.34.x on testnets
  order: 2
---

# v0.34.x

## 200 Node Testnet

### Finding the Saturation Point

The first goal when examining the results of the tests is identifying the saturation point.
The saturation point is a setup with a transaction load big enough to prevent the testnet
from being stable: the load runner tries to produce slightly more transactions than can
be processed by the testnet.

The following table summarizes the results for v0.34.x, for the different experiments
(extracted from file [`v034_report_tabbed.txt`](./img/v034_report_tabbed.txt)).

The X axis of this table is `c`, the number of connections created by the load runner process to the target node.
The Y axis of this table is `r`, the rate or number of transactions issued per second.

|       |  c=1  |  c=2  |  c=4  |
| :---  | ----: | ----: | ----: |
| r=25  |  2225 |  4450 |  8900 |
| r=50  |  4450 |  8900 | 17800 |
| r=100 |  8900 | 17800 | 35600 |
| r=200 | 17800 | 35600 | 38660 |

The table shows the number of 1024-byte-long transactions that were produced by the load runner,
and processed by Tendermint, during the 90 seconds of the experiment's duration.
Each cell in the table refers to an experiment with a particular number of websocket connections (`c`)
to a chosen validator, and the number of transactions per second that the load runner
tries to produce (`r`). Note that the overall load that the tool attempts to generate is $c \cdot r$.
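
As a rough cross-check of the table, the expected transaction count for a cell is the attempted load $c \cdot r$ multiplied by the effective experiment duration. The report uses 89 effective seconds rather than 90, because the last batch never gets sent. The minimal Go sketch below applies that formula to a few cells; `expectedTxs` and `cell` are hypothetical names for illustration, not part of any Tendermint tooling.

```go
package main

import "fmt"

// effectiveSeconds is the number of seconds whose batches are actually sent:
// a 90-second run minus the final batch, which never gets sent.
const effectiveSeconds = 89

// expectedTxs returns the number of transactions the load runner would
// produce if the testnet kept up with c connections, each sending r tx/s.
func expectedTxs(c, r int) int {
	return c * r * effectiveSeconds
}

// cell holds one measurement copied from the results table.
type cell struct {
	r, c     int
	observed int
}

func main() {
	cells := []cell{
		{r: 25, c: 1, observed: 2225},
		{r: 200, c: 2, observed: 35600},
		{r: 100, c: 4, observed: 35600},
		{r: 200, c: 4, observed: 38660},
	}
	for _, x := range cells {
		exp := expectedTxs(x.c, x.r)
		// Ratio of processed to expected transactions, as a percentage.
		fmt.Printf("r=%d,c=%d: observed %d, expected %d (%.1f%%)\n",
			x.r, x.c, x.observed, exp, 100*float64(x.observed)/float64(exp))
	}
}
```

Cells whose ratio stays near 100% kept up with the load; the `r=200,c=4` cell reaches only about 54% of its expected count.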

We can see that the saturation point is beyond the diagonal that spans cells

* `r=200,c=2`
* `r=100,c=4`

given that the total number of transactions should be close to the product of the rate, the number of connections,
and the experiment time (89 seconds, since the last batch never gets sent).

The experiment below the saturation diagonal (`r=200,c=4`) processed noticeably fewer
transactions than the product $c \cdot r \cdot 89$,
which is the expected number of transactions when the system is able to deal well with the
load.
With `r=200,c=4`, we obtained 38660, whereas the theoretical number of transactions should
have been $200 \cdot 4 \cdot 89 = 71200$.

At this point, we chose an experiment at the limit of the saturation diagonal
in order to further study the performance of this release.
**The chosen experiment is `r=200,c=2`**.

This is a plot of the CPU load (average over 1 minute, as output by `top`) of the load runner for `r=200,c=2`,
where we can see that the load stays close to 0 most of the time.

### Examining latencies

The method described [here](../method.md) allows us to plot the latencies of transactions
for all experiments.

As we can see, even the experiments beyond the saturation diagonal managed to keep
transaction latency stable (i.e., not constantly increasing).
Our interpretation is that contention within Tendermint was propagated,
via the websockets, to the load runner;
hence the load runner could only produce a fraction of the target load.

Further examination of the Prometheus data (see below) showed that the mempool contained many transactions
at steady state, and that any growth was quickly followed by a return to this steady state.
This demonstrates
that the Tendermint network processed transactions at least as quickly as they
were submitted to the mempool. In addition, the test script made sure that, at the end of an experiment, the
mempool was empty, so that all transactions submitted to the chain were processed.

Finally, the number of points present in the plot appears to be much smaller than expected given the
number of transactions in each experiment, particularly close to or above the saturation diagonal.
This is a visual effect of the plot; what appear to be points in the plot are actually potentially huge
clusters of points. To corroborate this, we have zoomed in on the plot above by setting (carefully chosen)
tiny axis intervals. The cluster shown below looks like a single point in the plot above.

The plot of latencies can be used as a baseline to compare with other releases.

The following plot summarizes average latencies versus overall throughputs
across different numbers of WebSocket connections to the node into which
transactions are being loaded.

### Prometheus Metrics on the Chosen Experiment

As mentioned [above](#finding-the-saturation-point), the chosen experiment is `r=200,c=2`.
This section further examines key metrics for this experiment extracted from Prometheus data.

#### Mempool Size

The mempool size, a count of the number of transactions in the mempool, was shown to be stable and homogeneous
at all full nodes. It did not exhibit any unconstrained growth.
The plot below shows the evolution over time of the cumulative number of transactions inside all full nodes' mempools
at a given time.
The two spikes that can be observed correspond to a period where consensus instances proceeded beyond the initial round
at some nodes.
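
The cumulative and average mempool sizes discussed in this subsection are two aggregations of the same per-node samples taken at one instant. The Go sketch below assumes those samples have already been scraped into a map keyed by node name; `mempoolSnapshot`, `cumulativeAndAverage`, and the node names are hypothetical, and the values are illustrative, not measurements from this report.

```go
package main

import "fmt"

// mempoolSnapshot maps a (hypothetical) node name to the number of
// transactions in that node's mempool at a single sampling instant.
type mempoolSnapshot map[string]int

// cumulativeAndAverage returns the total number of outstanding transactions
// across all full nodes and the per-node average for one snapshot.
func cumulativeAndAverage(s mempoolSnapshot) (total int, avg float64) {
	if len(s) == 0 {
		return 0, 0
	}
	for _, n := range s {
		total += n
	}
	return total, float64(total) / float64(len(s))
}

func main() {
	// Illustrative values only.
	snap := mempoolSnapshot{"validator001": 1800, "validator002": 1650, "full003": 1700}
	total, avg := cumulativeAndAverage(snap)
	fmt.Printf("cumulative=%d average=%.1f\n", total, avg)
}
```

Plotting `total` over time gives the cumulative view, while plotting `avg` gives the per-node average view.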

The plot below shows the evolution of the average over all full nodes, which oscillates between 1500 and 2000
outstanding transactions.

The peaks observed coincide with the moments when some nodes proceeded beyond the initial round of consensus (see below).

#### Peers

The number of peers was stable at all nodes.
It was higher for the seed nodes (around 140) than for the rest (between 21 and 74).
The fact that non-seed nodes reach more than 50 peers is due to #9548.

#### Consensus Rounds per Height

Most heights took just one round, but some nodes needed to advance to round 1 at some point.

#### Blocks Produced per Minute, Transactions Processed per Minute

The blocks produced per minute are the slope of this plot.

Over a period of 2 minutes, the height goes from 530 to 569, i.e., 39 blocks.
This results in an average of 19.5 blocks produced per minute.

The transactions processed per minute are the slope of this plot.

Over a period of 2 minutes, the total goes from 64525 to 100125 transactions (35600 transactions),
resulting in 17800 transactions per minute. However, we can see in the plot that
all transactions in the load are processed well before the two minutes elapse.
If we restrict the time window to the period when transactions are actually processed (approx. 105 seconds),
we obtain 20343 transactions per minute.

#### Memory Resident Set Size

The Resident Set Size of all monitored processes is plotted below.

The average over all processes oscillates around 1.2 GiB and does not demonstrate unconstrained growth.

#### CPU utilization

The best metric from Prometheus to gauge CPU utilization on a Unix machine is `load1`,
as it usually appears in the
[output of `top`](https://www.digitalocean.com/community/tutorials/load-average-in-linux).
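
On Linux, the load averages that `top` displays are also exposed in `/proc/loadavg`, with the 1-minute value as the first field. The Go sketch below reads that field to show what `load1` measures; it assumes a Linux host, `parseLoad1` is a hypothetical helper, and this is not a description of how the Prometheus setup used in these tests collects the metric.

```go
package main

import (
	"fmt"
	"os"
	"strconv"
	"strings"
)

// parseLoad1 extracts the 1-minute load average from the contents of
// /proc/loadavg, e.g. "0.42 0.35 0.30 1/123 4567".
func parseLoad1(contents string) (float64, error) {
	fields := strings.Fields(contents)
	if len(fields) == 0 {
		return 0, fmt.Errorf("empty loadavg contents")
	}
	return strconv.ParseFloat(fields[0], 64)
}

func main() {
	data, err := os.ReadFile("/proc/loadavg") // only present on Linux
	if err != nil {
		fmt.Fprintln(os.Stderr, "cannot read /proc/loadavg:", err)
		return
	}
	load1, err := parseLoad1(string(data))
	if err != nil {
		fmt.Fprintln(os.Stderr, "cannot parse load1:", err)
		return
	}
	fmt.Printf("load1=%.2f\n", load1)
}
```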

In most cases it stays below 5, which is generally considered an acceptable load.

### Test Result

**Result: N/A** (v0.34.x is the baseline)

Date: 2022-10-14

Version: 3ec6e424d6ae4c96867c2dcf8310572156068bb6

## Rotating Node Testnet

For this testnet, we will use a load that can safely be considered below the saturation
point for the size of this testnet (between 13 and 38 full nodes): `c=4,r=800`.

N.B.: The version of Tendermint used for these tests is affected by #9539.
However, the reduced load that reaches the mempools is orthogonal to the functionality
we are focusing on here.

### Latencies

All latencies are shown in the following plot.

We can observe some very high latencies towards the end of the test.
Suspecting that they corresponded to duplicate transactions, we examined the raw latencies
file and discovered more than 100K duplicate transactions.

The following plot shows the latencies file with all duplicate transactions
removed, i.e., only the first occurrence of a duplicate transaction is kept.

This problem, existing in `v0.34.x`, will need to be addressed, perhaps in the same way
we addressed it when running the 200 node test with high loads: by increasing the `cache_size`
configuration parameter.

### Prometheus Metrics

Fewer metrics are shown here than for the 200 node experiment.
We are only interested in those on which the catch-up process (blocksync) may have an impact.

#### Blocks and Transactions per minute

Just as shown for the 200 node test, the blocks produced per minute are the gradient of this plot.

Over a period of 5229 seconds, the height goes from 2 to 3638.
This results in an average of 41 blocks produced per minute.
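
Every per-minute figure in this report is the change in a monotonically increasing counter (block height or total transactions) divided by the elapsed time, scaled to one minute. The minimal Go sketch below reproduces the block and transaction rates quoted for both testnets; `perMinute` is a hypothetical helper, and the inputs are the sample values stated in the text.

```go
package main

import "fmt"

// perMinute converts the change in a monotonically increasing counter
// between two samples taken `seconds` apart into an average rate per minute.
func perMinute(start, end, seconds float64) float64 {
	return (end - start) / seconds * 60
}

func main() {
	// 200 node testnet: height 530 -> 569 over 2 minutes.
	fmt.Printf("200 nodes: %.1f blocks/min\n", perMinute(530, 569, 120))
	// 200 node testnet: 64525 -> 100125 txs, over 2 minutes and over ~105 s.
	fmt.Printf("200 nodes: %.0f txs/min (120 s window)\n", perMinute(64525, 100125, 120))
	fmt.Printf("200 nodes: %.0f txs/min (105 s window)\n", perMinute(64525, 100125, 105))
	// Rotating node testnet: height 2 -> 3638 over 5229 seconds.
	fmt.Printf("rotating: %.1f blocks/min\n", perMinute(2, 3638, 5229))
}
```

Choosing the window matters: the same transaction delta yields a higher rate when the window is restricted to the period during which transactions are actually processed.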

The following plot shows only the heights reported by ephemeral nodes
(which are also included in the plot above). Note that the _height_ metric
is only shown _once the node has switched to consensus_, hence the gaps
when nodes are killed, wiped out, started from scratch, and catching up.

The transactions processed per minute are the gradient of this plot.

The small lines we see periodically close to `y=0` are the transactions that
ephemeral nodes start processing once they have caught up.

Over a period of 5229 seconds, the total goes from 0 to 387697 transactions,
resulting in 4449 transactions per minute. We can see some abrupt changes in
the plot's gradient. This will need to be investigated.

#### Peers

The plot below shows the evolution of the number of peers throughout the experiment.
The periodic changes observed are due to the ephemeral nodes being stopped,
wiped out, and recreated.

The validators' plots are concentrated in the upper part of the graph, whereas the ephemeral nodes
are mostly in the lower part.

#### Memory Resident Set Size

The average Resident Set Size (RSS) over all processes seems stable, growing slightly toward the end.
This might be related to the increase in transaction load observed above.

The memory taken by the validators and the ephemeral nodes (when they are up) is comparable.

#### CPU utilization

The plot shows the `load1` metric for all nodes.

It stays under 5 most of the time, which is considered a normal load.
The purple line, which follows a different pattern, is the validator receiving all
transactions, via RPC, from the load runner process.

### Test Result

**Result: N/A**

Date: 2022-10-10

Version: a28c987f5a604ff66b515dd415270063e6fb069d