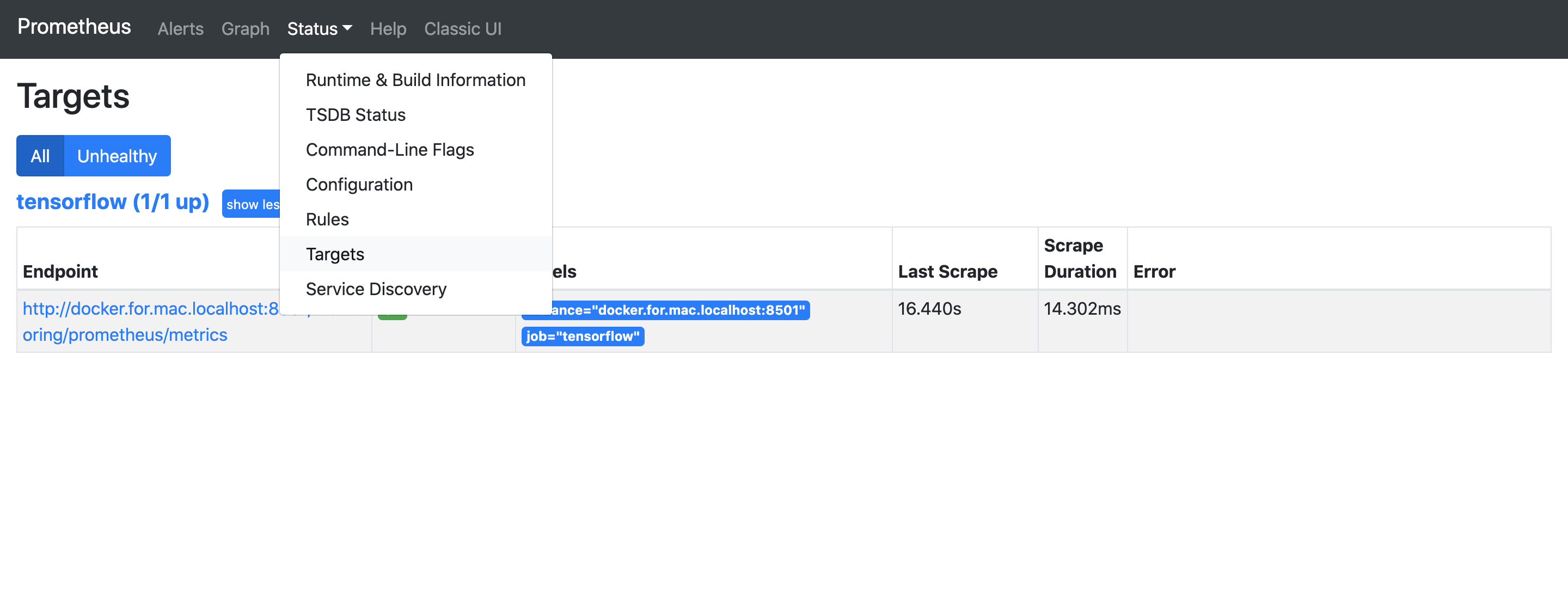

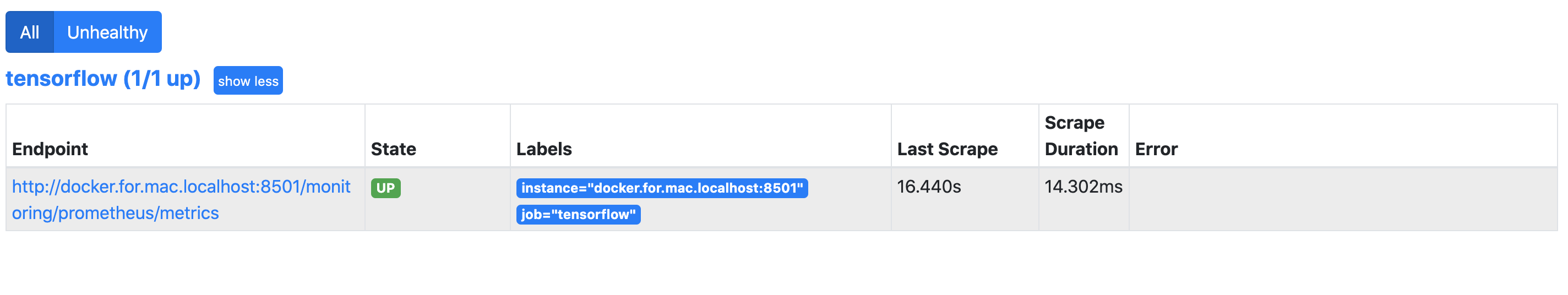

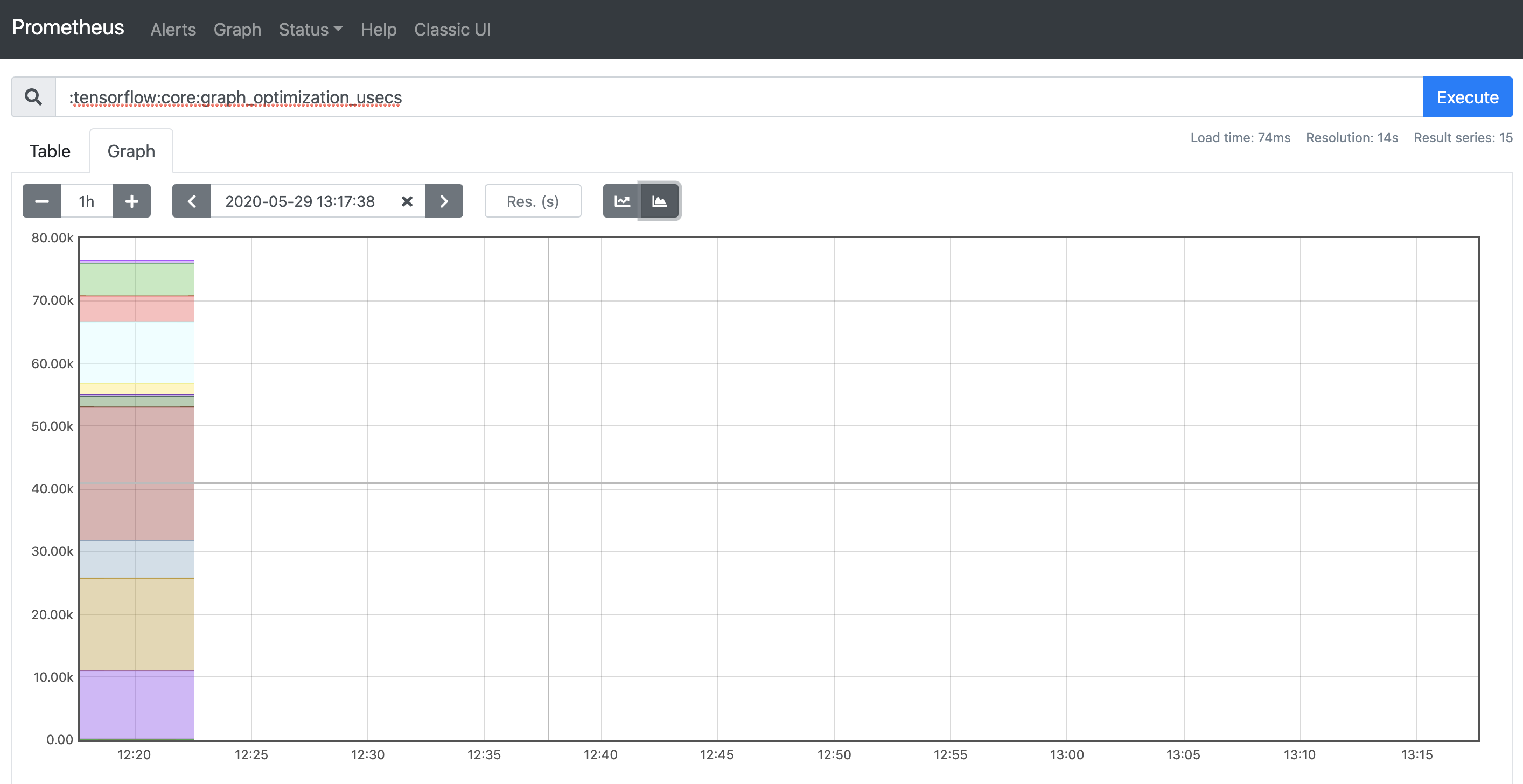

github.com/alwaysproblem/mlserving-tutorial@v0.0.0-20221124033215-121cfddbfbf4/TFserving/README.md (about) 1 # TFServing 2 3 ## Basic tutorial for Tensorflow Serving 4 5 ## **Install Docker** 6 7 - **Window/MacOS**: install Docker from [DockerHub](https://hub.docker.com/?overlay=onboarding). (*need to register new account if you are newbie*) 8 9 - **linux**: install [Docker](https://runnable.com/docker/install-docker-on-linux) 10 11 ## **Tutorial for starting** 12 13 - clone this repo 14 15 ```bash 16 $ git clone https://github.com/Alwaysproblem/MLserving-tutorial 17 $ cd MLserving-tutorial/TFserving/ClientAPI 18 ``` 19 20 - clone tensorflow from source (optional) 21 22 ```bash 23 $ git clone -b <version_you_need> https://github.com/tensorflow/tensorflow 24 ``` 25 26 27 - clone serving from source (optional) 28 29 ```bash 30 $ git clone -b <version_you_need> https://github.com/tensorflow/serving 31 ``` 32 33 ## **Easy TFServer** 34 35 - try simple example from tensorflow document. 36 37 ```bash 38 # Download the TensorFlow Serving Docker image and repo 39 $ docker pull tensorflow/serving 40 41 $ git clone https://github.com/tensorflow/serving 42 # Location of demo models 43 TESTDATA="$(pwd)/serving/tensorflow_serving/servables/tensorflow/testdata" 44 45 # Start TensorFlow Serving container and open the REST API port 46 $ docker run -it --rm -p 8501:8501 \ 47 -v "$TESTDATA/saved_model_half_plus_two_cpu:/models/half_plus_two" \ 48 -e MODEL_NAME=half_plus_two \ 49 tensorflow/serving & 50 51 # Query the model using the predict API 52 # need to create a new terminal. 53 $ curl -d '{"instances": [1.0, 2.0, 5.0]}' \ 54 -X POST http://localhost:8501/v1/models/half_plus_two:predict 55 56 # Returns => { "predictions": [2.5, 3.0, 4.5] } 57 ``` 58 59 - Docker common command. 60 61 ```bash 62 #kill all the alive image. 63 $ docker kill $(docker ps -q) 64 65 #stop all the alinve image 66 $ docker stop $(docker ps -q) 67 68 # remove all non-running image 69 $ docker rm $$(docker ps -aq) 70 71 # check all images 72 $ dokcker ps -a 73 74 #check the all alive image. 75 $ docker ps 76 77 #run a serving image as a daemon with a readable name. 78 $ docker run -d --name serving_base tensorflow/serving 79 80 #execute a command in the docker, you should substitute $(docker image name) for you own image name. 81 $ docker exec -it ${docker image name} sh -c "cd /tmp" 82 83 # enter docker ubuntu bash 84 $ docker exec -it ${docker image name} bash -l 85 ``` 86 87 ## **Run Server with your own saved pretrain models** 88 89 - make sure your model directory like this: 90 91 ```text 92 ---save 93 | 94 ---Model Name 95 | 96 ---1 97 | 98 ---asset 99 | 100 ---variables 101 | 102 ---model.pb 103 ``` 104 105 - substitute **user_define_model_name** for you own model name and **path_to_your_own_models** for directory path of your own model 106 107 ```bash 108 # run the server. 109 $ docker run -it --rm -p 8501:8501 -v "$(pwd)/${path_to_your_own_models}/1:/models/${user_define_model_name}" -e MODEL_NAME=${user_define_model_name} tensorflow/serving & 110 111 #run the client. 112 $ curl -d '{"instances": [[1.0, 2.0]]}' -X POST http://localhost:8501/v1/models/${user_define_model_name}:predict 113 ``` 114 115 - you also can use tensorflow_model_server command after entering docker bash 116 117 ```bash 118 $ docker exec -it ${docker image name} bash -l 119 120 $ tensorflow_model_server --port=8500 --rest_api_port=8501 --model_name=${MODEL_NAME} --model_base_path=${MODEL_BASE_PATH}/${MODEL_NAME} 121 ``` 122 123 - example 124 - Save the model after running LinearKeras.py 125 126 ```bash 127 $ docker run -it --rm -p 8501:8501 -v "$(pwd)/save/Toy:/models/Toy" -e MODEL_NAME=Toy tensorflow/serving & 128 129 $ curl -d '{"instances": [[1.0, 2.0]]}' -X POST http://localhost:8501/v1/models/Toy:predict 130 131 # { 132 # "predictions": [[0.999035] 133 # ] 134 ``` 135 136 - bind your own model to the server 137 - bind bash path to the model. 138 139 ```bash 140 $ docker run -p 8501:8501 --mount type=bind,source=/path/to/my_model/,target=/models/my_model -e MODEL_NAME=my_model -it tensorflow/serving 141 ``` 142 143 - example 144 145 ```bash 146 $ docker run -p 8501:8501 --mount type=bind,source=$(pwd)/save/Toy,target=/models/Toy -e MODEL_NAME=Toy -it tensorflow/serving 147 148 $ curl -d '{"instances": [[1.0, 2.0]]}' -X POST http://localhost:8501/v1/models/Toy:predict 149 150 # { 151 # "predictions": [[0.999035] 152 # ] 153 ``` 154 155 ## RESTful API 156 157 - data is like 158 159 | a | b | c | d | e | f | 160 | :---: | :---: | :---: | :---: | :---: | :---: | 161 | 390 | 25 | 1 | 1 | 1 | 2 | 162 | 345 | 34 | 45 | 2 | 34 | 3456 | 163 164 - `instances` means a row of data 165 166 ```json 167 {"instances": [ 168 { 169 "a": [390], 170 "b": [25], 171 "c": [1], 172 "d": [1], 173 "e": [1], 174 "f": [2] 175 }, 176 { 177 "a": [345], 178 "b": [34], 179 "c": [45], 180 "d": [2], 181 "e": [34], 182 "f": [3456] 183 } 184 ] 185 } 186 ``` 187 188 - `inputs` means a column of data 189 190 ```json 191 {"inputs": 192 { 193 "a": [[390], [345]], 194 "b": [[25], [34]], 195 "c": [[1], [45]], 196 "d": [[1], [2]], 197 "e": [[1], [34]], 198 "f": [[2], [3456]] 199 }, 200 } 201 ``` 202 203 - [REST API](https://www.tensorflow.org/tfx/serving/api_rest) 204 205 ## **Run multiple model in TFServer** 206 207 - set up the configuration file named Toy.config 208 209 ```protobuf 210 model_config_list: { 211 config: { 212 name: "Toy", 213 base_path: "/models/save/Toy/", 214 model_platform: "tensorflow" 215 }, 216 config: { 217 name: "Toy_double", 218 base_path: "/models/save/Toy_double/", 219 model_platform: "tensorflow" 220 } 221 } 222 ``` 223 224 - substitute **Config Path** for you own configeratin file. 225 226 ```bash 227 docker run -it --rm -p 8501:8501 -v "$(pwd):/models/" tensorflow/serving --model_config_file=/models/${Config Path} --model_config_file_poll_wait_seconds=60 228 ``` 229 230 - example 231 232 ```bash 233 $ docker run -it --rm -p 8501:8501 -v "$(pwd):/models/" tensorflow/serving --model_config_file=/models/config/Toy.config 234 235 $ curl -d '{"instances": [[1.0, 2.0]]}' -X POST http://localhost:8501/v1/models/Toy_double:predict 236 # { 237 # "predictions": [[6.80301666] 238 # ] 239 # } 240 241 $ curl -d '{"instances": [[1.0, 2.0]]}' -X POST http://localhost:8501/v1/models/Toy:predict 242 # { 243 # "predictions": [[0.999035] 244 # ]` 245 # } 246 ``` 247 248 - bind your own path to TFserver. The model target path is related to the configuration file. 249 250 ```bash 251 $ docker run --rm -p 8500:8500 -p 8501:8501 \ 252 --mount type=bind,source=${/path/to/my_model/},target=/models/${my_model} \ 253 --mount type=bind,source=${/path/to/my/models.config},target=/models/${models.config} -it tensorflow/serving --model_config_file=/models/{models.config} 254 ``` 255 256 - example 257 258 ```bash 259 $ docker run --rm -p 8500:8500 -p 8501:8501 --mount type=bind,source=$(pwd)/save/,target=/models/save --mount type=bind,source=$(pwd)/config/Toy.config,target=/models/Toy.config -it tensorflow/serving --model_config_file=/models/Toy.config 260 261 $ curl -d '{"instances": [[1.0, 2.0]]}' -X POST http://localhost:8501/v1/models/Toy_double:predict 262 # { 263 # "predictions": [[6.80301666] 264 # ] 265 # } 266 267 $ curl -d '{"instances": [[1.0, 2.0]]}' -X POST http://localhost:8501/v1/models/Toy:predict 268 # { 269 # "predictions": [[0.999035] 270 # ] 271 # } 272 ``` 273 274 ## **Version control for TFServer** 275 276 - set up single version control configuration file. 277 278 ```protobuf 279 model_config_list: { 280 config: { 281 name: "Toy", 282 base_path: "/models/save/Toy/", 283 model_platform: "tensorflow", 284 model_version_policy: { 285 specific { 286 versions: 1 287 } 288 } 289 }, 290 config: { 291 name: "Toy_double", 292 base_path: "/models/save/Toy_double/", 293 model_platform: "tensorflow" 294 } 295 } 296 ``` 297 298 - set up multiple version control configuration file. 299 300 ```protobuf 301 model_config_list: { 302 config: { 303 name: "Toy", 304 base_path: "/models/save/Toy/", 305 model_platform: "tensorflow", 306 model_version_policy: { 307 specific { 308 versions: 1, 309 versions: 2 310 } 311 } 312 }, 313 config: { 314 name: "Toy_double", 315 base_path: "/models/save/Toy_double/", 316 model_platform: "tensorflow" 317 } 318 } 319 ``` 320 321 - example 322 323 ```bash 324 $ docker run --rm -p 8500:8500 -p 8501:8501 --mount type=bind,source=$(pwd)/save/,target=/models/save --mount type=bind,source=$(pwd)/config/versionctrl.config,target=/models/versionctrl.config -it tensorflow/serving --model_config_file=/models/versionctrl.config --model_config_file_poll_wait_seconds=60 325 ``` 326 327 - for POST 328 329 ```bash 330 $ curl -d '{"instances": [[1.0, 2.0]]}' -X POST http://localhost:8501/v1/models/Toy/versions/1:predict 331 # { 332 # "predictions": [[10.8054295] 333 # ] 334 # } 335 336 $ curl -d '{"instances": [[1.0, 2.0]]}' -X POST http://localhost:8501/v1/models/Toy/versions/2:predict 337 # { 338 # "predictions": [[0.999035] 339 # ] 340 # } 341 ``` 342 343 - for gRPC 344 345 ```bash 346 $ python3 ClientAPI/python/grpc_request.py -m Toy -v 1 347 # outputs { 348 # key: "output_1" 349 # value { 350 # dtype: DT_FLOAT 351 # tensor_shape { 352 # dim { 353 # size: 2 354 # } 355 # dim { 356 # size: 1 357 # } 358 # } 359 # float_val: 10.805429458618164 360 # float_val: 14.010123252868652 361 # } 362 # } 363 # model_spec { 364 # name: "Toy" 365 # version { 366 # value: 1 367 # } 368 # signature_name: "serving_default" 369 # } 370 $ python3 ClientAPI/python/grpc_request.py -m Toy -v 2 371 # outputs { 372 # key: "output_1" 373 # value { 374 # dtype: DT_FLOAT 375 # tensor_shape { 376 # dim { 377 # size: 2 378 # } 379 # dim { 380 # size: 1 381 # } 382 # } 383 # float_val: 0.9990350008010864 384 # float_val: 0.9997349381446838 385 # } 386 # } 387 # model_spec { 388 # name: "Toy" 389 # version { 390 # value: 2 391 # } 392 # signature_name: "serving_default" 393 # } 394 ``` 395 396 - set an alias label for each version. Only avaliable for gRPC. 397 398 ```protobuf 399 model_config_list: { 400 config: { 401 name: "Toy", 402 base_path: "/models/save/Toy/", 403 model_platform: "tensorflow", 404 model_version_policy: { 405 specific { 406 versions: 1, 407 versions: 2 408 } 409 }, 410 version_labels { 411 key: 'stable', 412 value: 1 413 }, 414 version_labels { 415 key: 'canary', 416 value: 2 417 } 418 }, 419 config: { 420 name: "Toy_double", 421 base_path: "/models/save/Toy_double/", 422 model_platform: "tensorflow" 423 } 424 } 425 ``` 426 427 - refer to [https://www.tensorflow.org/tfx/serving/serving_config](https://www.tensorflow.org/tfx/serving/serving_config) 428 429 Please **note that** labels can only be assigned to model versions that are loaded and available for serving. Once a model version is available, one may reload the model config on the fly, to assign a label to it (can be achieved using HandleReloadConfigRequest RPC endpoint). 430 431 Maybe you should delete the label related part first, then start the tensorflow serving, and finally add the label related part to the config file on the fly. 432 433 - set flag `--allow_version_labels_for_unavailable_models` true will be able to add version lables at the first runing. 434 435 ``` bash 436 $ docker run --rm -p 8500:8500 -p 8501:8501 --mount type=bind,source=$(pwd)/save/,target=/models/save --mount type=bind,source=$(pwd)/config/versionlabels.config,target=/models/versionctrl.config -it tensorflow/serving --model_config_file=/models/versionctrl.config --model_config_file_poll_wait_seconds=60 --allow_version_labels_for_unavailable_models 437 ``` 438 439 ```bash 440 $ python3 ClientAPI/python/grpc_request.py -m Toy -l stable 441 # outputs { 442 # key: "output_1" 443 # value { 444 # dtype: DT_FLOAT 445 # tensor_shape { 446 # dim { 447 # size: 2 448 # } 449 # dim { 450 # size: 1 451 # } 452 # } 453 # float_val: 10.805429458618164 454 # float_val: 14.010123252868652 455 # } 456 # } 457 # model_spec { 458 # name: "Toy" 459 # version { 460 # value: 1 461 # } 462 # signature_name: "serving_default" 463 # } 464 $ python3 ClientAPI/python/grpc_request.py -m Toy -l canary 465 # outputs { 466 # key: "output_1" 467 # value { 468 # dtype: DT_FLOAT 469 # tensor_shape { 470 # dim { 471 # size: 2 472 # } 473 # dim { 474 # size: 1 475 # } 476 # } 477 # float_val: 0.9990350008010864 478 # float_val: 0.9997349381446838 479 # } 480 # } 481 # model_spec { 482 # name: "Toy" 483 # version { 484 # value: 2 485 # } 486 # signature_name: "serving_default" 487 # } 488 ``` 489 490 ## **Other Configuration parameter** 491 492 - [Configuration](https://github.com/tensorflow/serving/tree/master/tensorflow_serving/config) 493 494 - Batch Configuration: need to set `--enable_batching=true` and pass the config to `--batching_parameters_file`, [more](https://github.com/tensorflow/serving/blob/master/tensorflow_serving/batching/README.md#batch-scheduling-parameters-and-tuning) 495 496 - CPU-only: One Approach 497 498 If your system is CPU-only (no GPU), then consider starting with the following values: `num_batch_threads` equal to the number of CPU cores; `max_batch_size` to infinity; `batch_timeout_micros` to 0. Then experiment with `batch_timeout_micros` values in the 1-10 millisecond (1000-10000 microsecond) range, while keeping in mind that 0 may be the optimal value. 499 500 - GPU: One Approach 501 502 If your model uses a GPU device for part or all of your its inference work, consider the following approach: 503 504 Set `num_batch_threads` to the number of CPU cores. 505 506 Temporarily set `batch_timeout_micros` to infinity while you tune `max_batch_size` to achieve the desired balance between throughput and average latency. Consider values in the hundreds or thousands. 507 508 For online serving, tune `batch_timeout_micros` to rein in tail latency. The idea is that batches normally get filled to `max_batch_size`, but occasionally when there is a lapse in incoming requests, to avoid introducing a latency spike it makes sense to process whatever's in the queue even if it represents an underfull batch. The best value for `batch_timeout_micros` is typically a few milliseconds, and depends on your context and goals. Zero is a value to consider; it works well for some workloads. (For bulk processing jobs, choose a large value, perhaps a few seconds, to ensure good throughput but not wait too long for the final (and likely underfull) batch.) 509 510 `batch.config` 511 512 ```protobuf 513 max_batch_size { value: 1 } 514 batch_timeout_micros { value: 0 } 515 max_enqueued_batches { value: 1000000 } 516 num_batch_threads { value: 8 } 517 ``` 518 519 - example 520 - server 521 522 ```bash 523 docker run --rm -p 8500:8500 -p 8501:8501 --mount type=bind,source=$(pwd),target=/models --mount type=bind,source=$(pwd)/config/versionctrl.config,target=/models/versionctrl.config -it tensorflow/serving --model_config_file=/models/versionctrl.config --model_config_file_poll_wait_seconds=60 --enable_batching=true --batching_parameters_file=/models/batch/batchpara.config 524 ``` 525 526 - client 527 - return error `"Task size 2 is larger than maximum batch size 1"` 528 529 ```bash 530 $ python3 ClientAPI/python/grpc_request.py -m Toy -v 1 531 # Traceback (most recent call last): 532 # File "grpcRequest.py", line 58, in <module> 533 # resp = stub.Predict(request, timeout_req) 534 # File "/Users/yongxiyang/opt/anaconda3/envs/tf2cpu/lib/python3.7/site-packages/grpc/_channel.py", line 824, in __call__ 535 # return _end_unary_response_blocking(state, call, False, None) 536 # File "/Users/yongxiyang/opt/anaconda3/envs/tf2cpu/lib/python3.7/site-packages/grpc/_channel.py", line 726, in _end_unary_response_blocking 537 # raise _InactiveRpcError(state) 538 # grpc._channel._InactiveRpcError: <_InactiveRpcError of RPC that terminated with: 539 # status = StatusCode.INVALID_ARGUMENT 540 # details = "Task size 2 is larger than maximum batch size 1" 541 # debug_error_string = "{"created":"@1591246233.042335000","description":"Error received from peer ipv4:0.0.0.0:8500","file":"src# /core/lib/surface/call.cc","file_line":1056,"grpc_message":"Task size 2 is larger than maximum batch size 1","grpc_status":3}" 542 ``` 543 544 - monitor: pass file path to `--monitoring_config_file` 545 546 `monitor.config` 547 548 ```protobuf 549 prometheus_config { 550 enable: true, 551 path: "/models/metrics" 552 } 553 ``` 554 555 - request through RESTful API 556 - example 557 - server 558 559 ```bash 560 $ docker run --rm -p 8500:8500 -p 8501:8501 -v "$(pwd):/models" -it tensorflow/serving --model_config_file=/models/config/versionlabels.config --model_config_file_poll_wait_seconds=60 --allow_version_labels_for_unavailable_models --monitoring_config_file=/models/monitor/monitor.config 561 ``` 562 563 - client 564 565 ```bash 566 $ curl -X GET http://localhost:8501/monitoring/prometheus/metrics 567 # # TYPE :tensorflow:api:op:using_fake_quantization gauge 568 # # TYPE :tensorflow:cc:saved_model:load_attempt_count counter 569 # :tensorflow:cc:saved_model:load_attempt_count{model_path="/models/save/Toy/1",status="success"} 1 570 # :tensorflow:cc:saved_model:load_attempt_count{model_path="/models/save/Toy/2",status="success"} 1 571 # ... 572 # # TYPE :tensorflow:cc:saved_model:load_latency counter 573 # :tensorflow:cc:saved_model:load_latency{model_path="/models/save/Toy/1"} 54436 574 # :tensorflow:cc:saved_model:load_latency{model_path="/models/save/Toy/2"} 45230 575 # ... 576 # # TYPE :tensorflow:mlir:import_failure_count counter 577 # # TYPE :tensorflow:serving:model_warmup_latency histogram 578 # # TYPE :tensorflow:serving:request_example_count_total counter 579 # # TYPE :tensorflow:serving:request_example_counts histogram 580 # # TYPE :tensorflow:serving:request_log_count counter 581 ``` 582 583 - show monitor data in the prometheus docker 584 - modified your own prometheus configuration file 585 586 ```yaml 587 # my global config 588 global: 589 scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute. 590 evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute. 591 # scrape_timeout is set to the global default (10s). 592 593 # Alertmanager configuration 594 alerting: 595 alertmanagers: 596 - static_configs: 597 - targets: 598 # - alertmanager:9093 599 600 # Load rules once and periodically evaluate them according to the global 'evaluation_interval'. 601 rule_files: 602 # - "first_rules.yml" 603 # - "second_rules.yml" 604 605 # A scrape configuration containing exactly one endpoint to scrape: 606 # Here it's Prometheus itself. 607 scrape_configs: 608 - job_name: 'tensorflow' 609 scrape_interval: 5s 610 metrics_path: '/monitoring/prometheus/metrics' 611 static_configs: 612 - targets: ['docker.for.mac.localhost:8501'] # for `Mac users` 613 # - targets: ['127.0.0.1:8501'] 614 ``` 615 616 - start prometheus docker server 617 618 ```bash 619 $ docker run --rm -ti --name prometheus -p 127.0.0.1:9090:9090 -v "$(pwd)/monitor:/tmp" prom/prometheus --config.file=/tmp/prometheus/prome.yaml 620 ``` 621 622 - access prometheus on the webUI 623 - check target and status 624  625  626 - webUI on [localhost:9090](http://localhost:9090/) 627  628 629 <!-- - request with gRPC TODO: --> 630 631 ## **Obtain the information** 632 633 - get the information data structure. 634 635 ```bash 636 curl -d '{"instances": [[1.0, 2.0]]}' -X GET http://localhost:8501/v1/models/Toy/metadata 637 ``` 638 639 - get the information data structure with gRPC 640 641 ```bash 642 $ python ClientAPI/python/grpc_metadata.py -m Toy -v 2 643 # model_spec { 644 # name: "Toy" 645 # version { 646 # value: 2 647 # } 648 # } 649 # metadata { 650 # key: "signature_def" 651 # value { 652 # type_url: "type.googleapis.com/tensorflow.serving.SignatureDefMap" 653 # value: "\n\253\001\n\017serving_default\022\227\001\n;\n\007input_1\0220\n\031serving_default_input_1:0\020\001\032\021\022\013\010\377\377\377\377\377\377\377\377\377\001\022\002\010\002\022<\n\010output_1\0220\n\031StatefulPartitionedCall:0\020\001\032\021\022\013\010\377\377\377\377\377\377\377\377\377\001\022\002\010\001\032\032tensorflow/serving/predict\n>\n\025__saved_model_init_op\022%\022#\n\025__saved_model_init_op\022\n\n\004NoOp\032\002\030\001" 654 # } 655 # } 656 ``` 657 658 ## **Accerleration by GPU** 659 660 - pull tensorflow server GPU version from DockerHub. 661 662 ```bash 663 docker pull tensorflow/serving:latest-gpu 664 ``` 665 666 - clone the server.git if you haven't done it. 667 668 ```bash 669 git clone https://github.com/tensorflow/serving 670 ``` 671 672 - set `--runtime==nvidia` and use the `tensorflow/serving:latest-gpu` 673 674 ```bash 675 docker run --runtime=nvidia -p 8501:8501 -v "$(pwd)/${path_to_your_own_models}/1:/models/${user_define_model_name}" -e MODEL_NAME=${user_define_model_name} tensorflow/serving & 676 ``` 677 678 - example 679 680 ```bash 681 docker run --runtime=nvidia -p 8501:8501 -v "$(pwd)/save/Toy:/models/Toy" -e MODEL_NAME=Toy tensorflow/serving:latest-gpu & 682 or 683 nvidia-docker run -p 8501:8501 -v "$(pwd)/save/Toy:/models/Toy" -e MODEL_NAME=Toy tensorflow/serving:latest-gpu & 684 or 685 docker run --gpu ${all/1} -p 8501:8501 -v "$(pwd)/save/Toy:/models/Toy" -e MODEL_NAME=Toy tensorflow/serving:latest-gpu & 686 ``` 687 688 ## Setup client API 689 690 691 - [GO](ClientAPI/go/README.md) 692 - [Python](ClientAPI/python/README.md) 693 - [Cpp-cmake](ClientAPI/cpp/cmake/README.md) 694 - [Cpp-cmake-static-lib](ClientAPI/cpp/cmake-static-lib/README.md) 695 - [Cpp-make](ClientAPI/cpp/make/README.md) 696 - [Cpp-make-static-lib](ClientAPI/cpp/make-static-lib/README.md) 697 698 ## Feature Column and vocabulary file for serving 699 <!-- TODO: --> 700 701 ## For production 702 703 - [SavedModel Warmup](https://www.tensorflow.org/tfx/serving/saved_model_warmup) 704 - please see ClientAPI/wramup/warmup.py 705 - `--enable_model_warmup`: Enables model warmup using user-provided PredictionLogs in assets.extra/ directory 706 707 ```bash 708 $ python ClientAPI/wramup/warmup.py # it will generate tf_serving_warmup_requests tfrecords (2.9.2) 709 $ cp tf_serving_warmup_requests <model_dir>/<version>/assets.extra/tf_serving_warmup_requests 710 ``` 711 712 The server log: 713 ```log 714 2022-11-04 06:34:39.419417: I external/org_tensorflow/tensorflow/cc/saved_model/loader.cc:213] Running initialization op on SavedModel bundle at path: /models/save/Toy/2 715 2022-11-04 06:34:39.426058: I external/org_tensorflow/tensorflow/cc/saved_model/loader.cc:305] SavedModel load for tags { serve }; Status: success: OK. Took 32252 microseconds. 716 2022-11-04 06:34:39.426708: I tensorflow_serving/servables/tensorflow/saved_model_warmup_util.cc:73] Starting to read warmup data for model at /models/save/Toy/2/assets.extra/tf_serving_warmup_requests with model-warmup-options 717 2022-11-04 06:34:39.441661: I tensorflow_serving/servables/tensorflow/saved_model_warmup_util.cc:210] Finished reading warmup data for model at /models/save/Toy/2/assets.extra/tf_serving_warmup_requests. Number of warmup records read: 1. Elapsed time (microseconds): 15304. 718 ``` 719 720 ## Advanced Tutorial 721 722 ### Custom Operation 723 724 For custom operation of tensorflow, please refer to the [Create an Op](https://www.tensorflow.org/guide/create_op) and [Serving TensorFlow models with custom ops](https://www.tensorflow.org/tfx/serving/custom_op). However, we only provide a example for custom op and the way to modify the tensorflow server. you can click this link for detail: 725 726 [Custom operation examples](CustomOp/README.md)