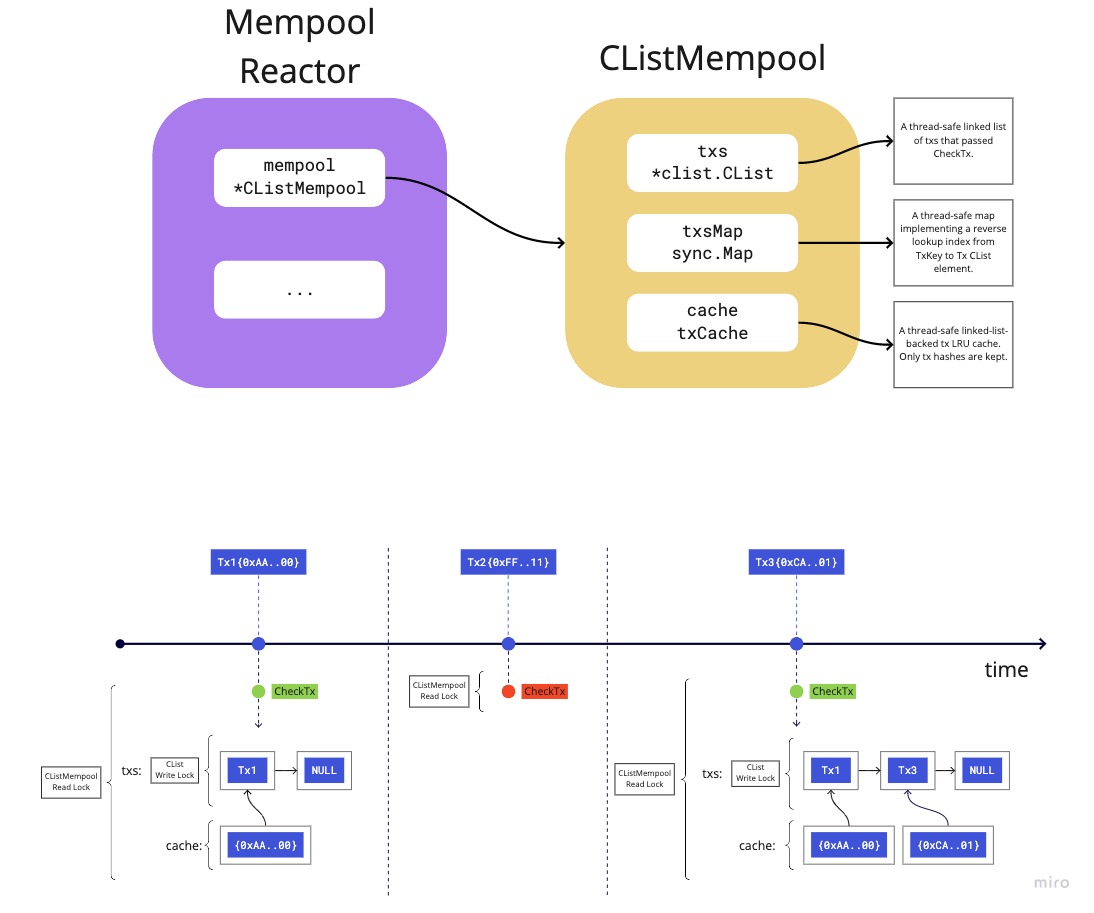

# ADR 067: Mempool Refactor

- [ADR 067: Mempool Refactor](#adr-067-mempool-refactor)
  - [Changelog](#changelog)
  - [Status](#status)
  - [Context](#context)
    - [Current Design](#current-design)
  - [Alternative Approaches](#alternative-approaches)
  - [Prior Art](#prior-art)
    - [Ethereum](#ethereum)
    - [Diem](#diem)
  - [Decision](#decision)
  - [Detailed Design](#detailed-design)
    - [CheckTx](#checktx)
    - [Mempool](#mempool)
    - [Eviction](#eviction)
    - [Gossiping](#gossiping)
    - [Performance](#performance)
  - [Future Improvements](#future-improvements)
  - [Consequences](#consequences)
    - [Positive](#positive)
    - [Negative](#negative)
    - [Neutral](#neutral)
  - [References](#references)

## Changelog

- April 19, 2021: Initial Draft (@alexanderbez)

## Status

Accepted

## Context

Tendermint Core has a reactor and data structure, mempool, that facilitates the
ephemeral storage of uncommitted transactions. Honest nodes participating in a
Tendermint network gossip these uncommitted transactions to each other if they
pass the application's `CheckTx`. In addition, block proposers select from the
mempool a subset of uncommitted transactions to include in the next block.

Currently, the mempool in Tendermint Core is designed as a FIFO queue. In other
words, transactions are included in blocks as they are received by a node. There
currently is no explicit and prioritized ordering of these uncommitted transactions.
This presents a few technical and UX challenges for operators and applications.

Namely, validators are not able to prioritize transactions by their fees or any
incentive aligned mechanism. In addition, the lack of prioritization also leads
to cascading effects in terms of DoS and various attack vectors on networks,
e.g.
[cosmos/cosmos-sdk#8224](https://github.com/cosmos/cosmos-sdk/discussions/8224).

Thus, Tendermint Core needs the ability for an application and its users to
prioritize transactions in a flexible and performant manner. Specifically, we're
aiming to either improve, maintain or add the following properties in the
Tendermint mempool:

- Allow application-determined transaction priority.
- Allow efficient concurrent reads and writes.
- Allow block proposers to reap transactions efficiently by priority.
- Maintain a fixed mempool capacity by transaction size and evict lower priority
  transactions to make room for higher priority transactions.
- Allow transactions to be gossiped by priority efficiently.
- Allow operators to specify a maximum TTL for transactions in the mempool before
  they're automatically evicted if not selected for a block proposal in time.
- Ensure the design allows for future extensions, such as replace-by-priority and
  allowing multiple pending transactions per sender, to be incorporated easily.

Note, not all of these properties will be addressed by the proposed changes in
this ADR. However, this proposal will ensure that any unaddressed properties
can be addressed in an easy and extensible manner in the future.

### Current Design

At the core of the `v0` mempool reactor is a concurrent linked-list. This is the
primary data structure that contains `Tx` objects that have passed `CheckTx`.
When a node receives a transaction from another peer, it executes `CheckTx`, which
obtains a read-lock on the `*CListMempool`. If the transaction passes `CheckTx`
locally on the node, it is added to the `*CList` by obtaining a write-lock. It
is also added to the `cache` and `txsMap`, both of which obtain their own respective
write-locks and map a reference from the transaction hash to the `Tx` itself.

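
The flow described above can be sketched roughly as follows. This is a simplified model for illustration, not the actual `*CListMempool` code: `fifoMempool`, `newFIFOMempool`, `ReapFront`, and the `appCheck` callback are hypothetical names, a single `sync.RWMutex` stands in for the separate locks, the standard library's `container/list` stands in for the concurrent `*CList`, and the `cache` is folded into `txsMap`.

```go
package main

import (
	"container/list"
	"crypto/sha256"
	"sync"
)

// txKey is the transaction hash used to look up a transaction.
type txKey [sha256.Size]byte

// fifoMempool is a hypothetical, simplified stand-in for the v0 mempool:
// a list that preserves arrival order plus a hash-indexed map into it.
type fifoMempool struct {
	mtx    sync.RWMutex
	txs    *list.List              // stands in for the concurrent *CList
	txsMap map[txKey]*list.Element // tx hash -> element in txs
}

func newFIFOMempool() *fifoMempool {
	return &fifoMempool{txs: list.New(), txsMap: make(map[txKey]*list.Element)}
}

// CheckTx runs the application check (modeled as a callback) and, if it
// passes, appends tx to the back of the list. It reports whether tx was added.
func (m *fifoMempool) CheckTx(tx []byte, appCheck func([]byte) bool) bool {
	key := txKey(sha256.Sum256(tx))

	// Read-lock: reject transactions that are already known.
	m.mtx.RLock()
	_, seen := m.txsMap[key]
	m.mtx.RUnlock()
	if seen || !appCheck(tx) {
		return false
	}

	// Write-lock: record the transaction in arrival order.
	m.mtx.Lock()
	defer m.mtx.Unlock()
	if _, seen := m.txsMap[key]; seen { // another writer may have added it
		return false
	}
	m.txsMap[key] = m.txs.PushBack(tx)
	return true
}

// ReapFront returns up to limit transactions in arrival (FIFO) order.
func (m *fifoMempool) ReapFront(limit int) [][]byte {
	m.mtx.RLock()
	defer m.mtx.RUnlock()
	var out [][]byte
	for e := m.txs.Front(); e != nil && len(out) < limit; e = e.Next() {
		out = append(out, e.Value.([]byte))
	}
	return out
}
```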

Transactions are continuously gossiped to peers whenever a new transaction is added
to a local node's `*CList`, where the node at the front of the `*CList` is selected.
Another transaction will not be gossiped until the `*CList` notifies the reader
that there are more transactions to gossip.

When a proposer attempts to propose a block, they will execute `ReapMaxBytesMaxGas`
on the reactor's `*CListMempool`. This call obtains a read-lock on the `*CListMempool`
and selects as many transactions as possible starting from the front of the `*CList`
moving to the back of the list.

When a block is finally committed, a caller invokes `Update` on the reactor's
`*CListMempool` with all the selected transactions. Note, the caller must also
explicitly obtain a write-lock on the reactor's `*CListMempool`. This call
will remove all the supplied transactions from the `txsMap` and the `*CList`, both
of which obtain their own respective write-locks. In addition, the transaction
may also be removed from the `cache`, which obtains its own write-lock.

## Alternative Approaches

When considering which approach to take for a priority-based flexible and
performant mempool, there are two core candidates. The first candidate is less
invasive in the required set of protocol and implementation changes, which
simply extends the existing `CheckTx` ABCI method. The second candidate essentially
involves the introduction of new ABCI method(s) and would require a higher degree
of complexity in protocol and implementation changes, some of which may either
overlap or conflict with the upcoming introduction of [ABCI++](https://github.com/tendermint/tendermint/blob/v0.37.x/docs/rfc/rfc-013-abci%2B%2B.md).

For more information on the various approaches and proposals, please see the
[mempool discussion](https://github.com/tendermint/tendermint/discussions/6295).

## Prior Art

### Ethereum

Ethereum, specifically [Geth](https://github.com/ethereum/go-ethereum),
contains a mempool, `*TxPool`, that holds various mappings indexed by account,
such as `pending`, which contains all processable transactions for accounts
prioritized by nonce. It also contains a `queue`, which is the exact same mapping
except that it holds transactions that are not currently processable. The mempool
also contains a `priced` index of type `*txPricedList` that is a priority queue
based on transaction price.

### Diem

The [Diem mempool](https://github.com/diem/diem/blob/master/mempool/README.md#implementation-details)
takes a similar approach to the one we propose. Specifically, the Diem mempool
contains a mapping from `Account:[]Tx`. On top of this primary mapping from account
to a list of transactions are various indexes used to perform certain actions.

The main index, `PriorityIndex`, is an ordered queue of transactions that are
“consensus-ready” (i.e., they have a sequence number which is sequential to the
current sequence number for the account). This queue is ordered by gas price so
that if a client is willing to pay more (than other clients) per unit of
execution, then they can enter consensus earlier.

## Decision

To incorporate a priority-based flexible and performant mempool in Tendermint Core,
we will introduce new fields, `priority` and `sender`, into the `ResponseCheckTx`
type.

We will introduce a new versioned mempool reactor, `v1`, and assume an implicit
version of the current mempool reactor as `v0`. In the new `v1` mempool reactor,
we largely keep the functionality the same as `v0` except we augment the underlying
data structures. Specifically, we keep a mapping of senders to transaction objects.
On top of this mapping, we index transactions to provide the ability to efficiently
gossip and reap transactions by priority.

## Detailed Design

### CheckTx

We introduce the following new fields into the `ResponseCheckTx` type:

```diff
message ResponseCheckTx {
  uint32         code       = 1;
  bytes          data       = 2;
  string         log        = 3;  // nondeterministic
  string         info       = 4;  // nondeterministic
  int64          gas_wanted = 5 [json_name = "gas_wanted"];
  int64          gas_used   = 6 [json_name = "gas_used"];
  repeated Event events     = 7 [(gogoproto.nullable) = false, (gogoproto.jsontag) = "events,omitempty"];
  string         codespace  = 8;
+ int64          priority   = 9;
+ string         sender     = 10;
}
```

It is entirely up to the application to determine how these fields are populated
and with what values, e.g. the `sender` could be the signer and fee payer
of the transaction, and the `priority` could be the cumulative sum of the fee(s).

Only `sender` is required, while `priority` can be omitted, which would result in
using the default value of zero.

### Mempool

The existing concurrent-safe linked-list will be replaced by a thread-safe map
of `<sender:*Tx>`, i.e. a mapping from `sender` to a single `*Tx` object, where
each `*Tx` is the next valid and processable transaction from the given `sender`.

On top of this mapping, we index all transactions by priority using a thread-safe
priority queue, i.e. a [max heap](https://en.wikipedia.org/wiki/Min-max_heap).
When a proposer is ready to select transactions for the next block proposal,
transactions are selected from this priority index by highest priority order.
When a transaction is selected and reaped, it is removed from this index and
from the `<sender:*Tx>` mapping.

We define `Tx` as the following data structure:

```go
type Tx struct {
	// Tx represents the raw binary transaction data.
	Tx []byte

	// Priority defines the transaction's priority as specified by the application
	// in the ResponseCheckTx response.
	Priority int64

	// Sender defines the transaction's sender as specified by the application in
	// the ResponseCheckTx response.
	Sender string

	// Index defines the current index in the priority queue index. Note, if
	// multiple Tx indexes are needed, this field will be removed and each Tx
	// index will have its own wrapped Tx type.
	Index int
}
```

### Eviction

When `CheckTx` succeeds for a new `Tx` and the mempool is currently full, we
must check if there exists a `Tx` of lower priority that can be evicted to make
room for the new `Tx` with higher priority and with sufficient size capacity
left.

If such a `Tx` exists, we find it by obtaining a read lock and sorting the
priority queue index. Once sorted, we find the first `Tx` with lower priority and
size such that the new `Tx` would fit within the mempool's size limit. We then
remove this `Tx` from the priority queue index as well as the `<sender:*Tx>`
mapping.

This will require additional `O(n)` space and `O(n*log(n))` runtime complexity.
Note that the space complexity does not depend on the size of the tx.

### Gossiping

We keep the existing thread-safe linked list as an additional index. Using this
index, we can efficiently gossip transactions in the same manner as they are
gossiped now (FIFO).

Gossiping transactions will not require locking any other indexes.

### Performance

Performance should largely remain unaffected apart from the space overhead of
keeping an additional priority queue index and the case where we need to evict
transactions from the priority queue index.
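
The priority index from the Mempool section and the eviction rule from the Eviction section can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the `v1` implementation: `priorityMempool`, `newPriorityMempool`, `Insert`, `findVictim`, `remove`, and `Reap` are hypothetical names, locking and the FIFO gossip index are omitted, and rejecting a second transaction from the same `sender` is an assumption that this ADR does not specify. The standard library's `container/heap` maintains the max heap, and the `Index` field lets a transaction be removed from the middle of the heap.

```go
package main

import (
	"container/heap"
	"sort"
)

// Tx mirrors the structure defined in this ADR.
type Tx struct {
	Tx       []byte
	Priority int64
	Sender   string
	Index    int // position in the priority index, maintained by txHeap
}

// txHeap is a max-heap of transactions ordered by Priority.
type txHeap []*Tx

func (h txHeap) Len() int           { return len(h) }
func (h txHeap) Less(i, j int) bool { return h[i].Priority > h[j].Priority }
func (h txHeap) Swap(i, j int) {
	h[i], h[j] = h[j], h[i]
	h[i].Index, h[j].Index = i, j
}
func (h *txHeap) Push(x interface{}) {
	tx := x.(*Tx)
	tx.Index = len(*h)
	*h = append(*h, tx)
}
func (h *txHeap) Pop() interface{} {
	old := *h
	tx := old[len(old)-1]
	*h = old[:len(old)-1]
	tx.Index = -1
	return tx
}

// priorityMempool is a hypothetical, single-goroutine model of the v1 design.
type priorityMempool struct {
	maxBytes, curBytes int
	bySender           map[string]*Tx // the <sender:*Tx> mapping
	index              txHeap         // priority index
}

func newPriorityMempool(maxBytes int) *priorityMempool {
	return &priorityMempool{maxBytes: maxBytes, bySender: make(map[string]*Tx)}
}

// Insert adds tx, evicting one lower-priority transaction if the pool is full.
func (m *priorityMempool) Insert(tx *Tx) bool {
	if _, dup := m.bySender[tx.Sender]; dup {
		return false // assumption: at most one pending tx per sender
	}
	if m.curBytes+len(tx.Tx) > m.maxBytes {
		victim := m.findVictim(tx)
		if victim == nil {
			return false
		}
		m.remove(victim)
	}
	heap.Push(&m.index, tx)
	m.bySender[tx.Sender] = tx
	m.curBytes += len(tx.Tx)
	return true
}

// findVictim sorts a copy of the index (O(n) space, O(n*log(n)) time) and
// returns the first lower-priority tx whose removal lets tx fit, or nil.
func (m *priorityMempool) findVictim(tx *Tx) *Tx {
	sorted := append([]*Tx(nil), m.index...)
	sort.Slice(sorted, func(i, j int) bool { return sorted[i].Priority < sorted[j].Priority })
	for _, c := range sorted {
		if c.Priority >= tx.Priority {
			break
		}
		if m.curBytes-len(c.Tx)+len(tx.Tx) <= m.maxBytes {
			return c
		}
	}
	return nil
}

// remove deletes tx from both the priority index and the sender mapping.
func (m *priorityMempool) remove(tx *Tx) {
	heap.Remove(&m.index, tx.Index)
	delete(m.bySender, tx.Sender)
	m.curBytes -= len(tx.Tx)
}

// Reap removes and returns the highest-priority transaction, or nil if empty.
func (m *priorityMempool) Reap() *Tx {
	if len(m.index) == 0 {
		return nil
	}
	tx := m.index[0]
	m.remove(tx)
	return tx
}
```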
There should be no reads which
block writes on any index.

## Future Improvements

There are a few notable ways in which the proposed design can be improved or
expanded upon. Namely, transaction gossiping and the ability to support
multiple transactions from the same `sender`.

With regards to transaction gossiping, we need to empirically validate whether we
need to gossip by priority. In addition, the current method of gossiping may not
be the most efficient. Specifically, a node broadcasts all the transactions it
has in its mempool to its peers. Instead, we should explore the ability to
gossip transactions on a request/response basis similar to Ethereum and other
protocols. Not only does this reduce bandwidth and complexity, it also allows
us to explore gossiping by priority or other dimensions more efficiently.

Allowing for multiple transactions from the same `sender` is important and will
most likely be a needed feature in the future development of the mempool, but for
now it suffices to have the preliminary design agreed upon. Having the ability
to support multiple transactions per `sender` will require careful thought with
regards to the interplay of the corresponding ABCI application. Regardless, the
proposed design should allow for adaptations to support this feature in a
non-contentious and backwards compatible manner.

## Consequences

### Positive

- Transactions are allowed to be prioritized by the application.

### Negative

- Increased size of the `ResponseCheckTx` Protocol Buffer type.
- Causal ordering is NOT maintained.
  - It is possible that certain transactions broadcast in a particular order may
    pass `CheckTx` but not end up being committed in a block because they fail
    `CheckTx` later. e.g.
    Consider Tx<sub>1</sub> that sends funds from existing
    account Alice to a _new_ account Bob with priority P<sub>1</sub> and then later
    Bob's _new_ account sends funds back to Alice in Tx<sub>2</sub> with P<sub>2</sub>,
    such that P<sub>2</sub> > P<sub>1</sub>. If executed in this order, both
    transactions will pass `CheckTx`. However, when a proposer is ready to select
    transactions for the next block proposal, they will select Tx<sub>2</sub> before
    Tx<sub>1</sub> and thus Tx<sub>2</sub> will _fail_ because Tx<sub>1</sub> must
    be executed first. This is because there is a _causal ordering_,
    Tx<sub>1</sub> ➝ Tx<sub>2</sub>. These types of situations should be rare as
    most transactions are not causally ordered and can be circumvented by simply
    trying again at a later point in time or by ensuring the "child" priority is
    lower than the "parent" priority. In other words, if parents always have
    priorities that are higher than their children, then the new mempool design will
    maintain causal ordering.

### Neutral

- A transaction that passed `CheckTx` and entered the mempool can later be evicted
  at a future point in time if a higher priority transaction entered while the
  mempool was full.

## References

- [ABCI++](https://github.com/tendermint/tendermint/blob/v0.37.x/docs/rfc/rfc-013-abci%2B%2B.md)
- [Mempool Discussion](https://github.com/tendermint/tendermint/discussions/6295)