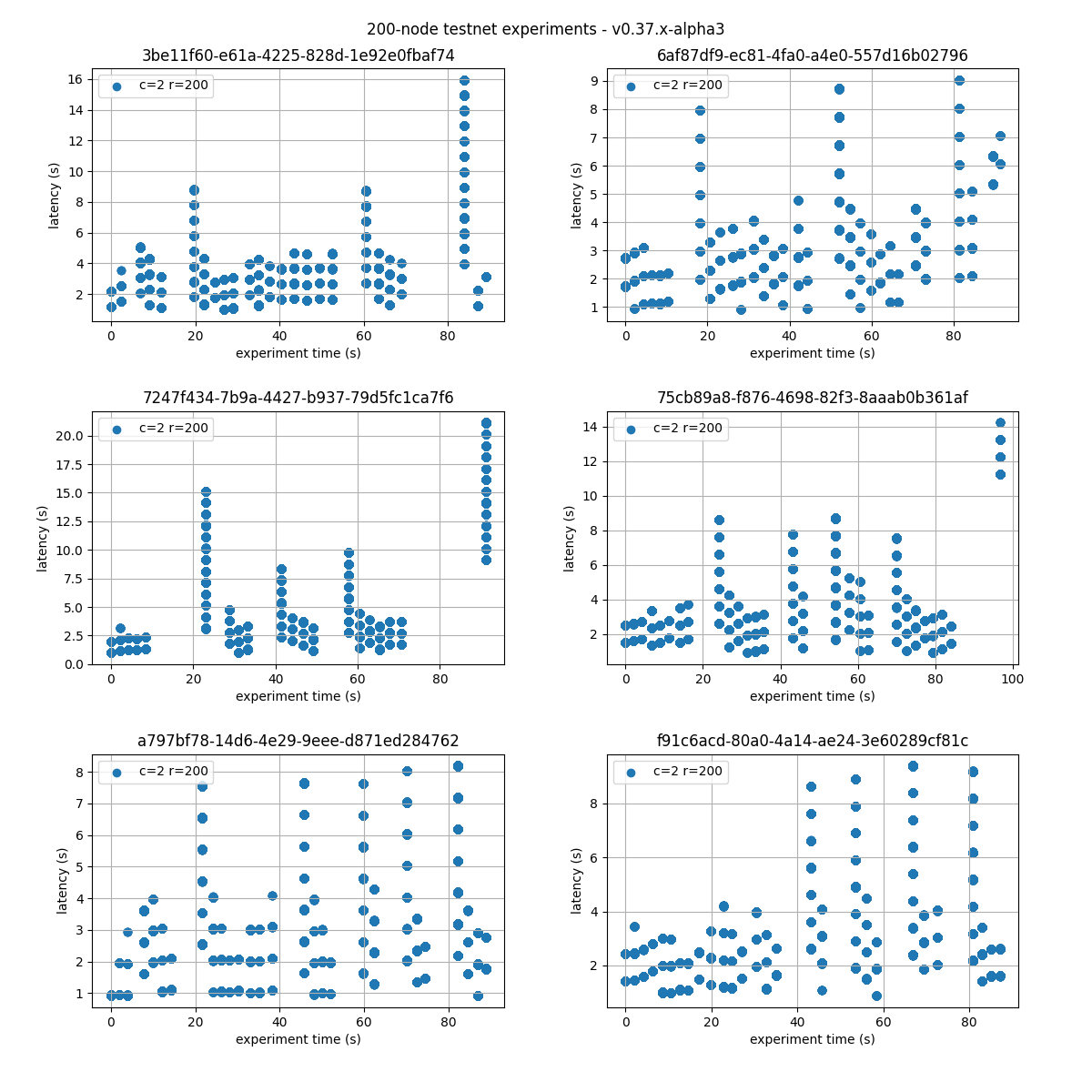

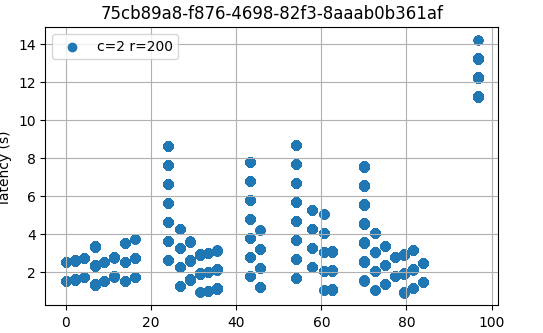

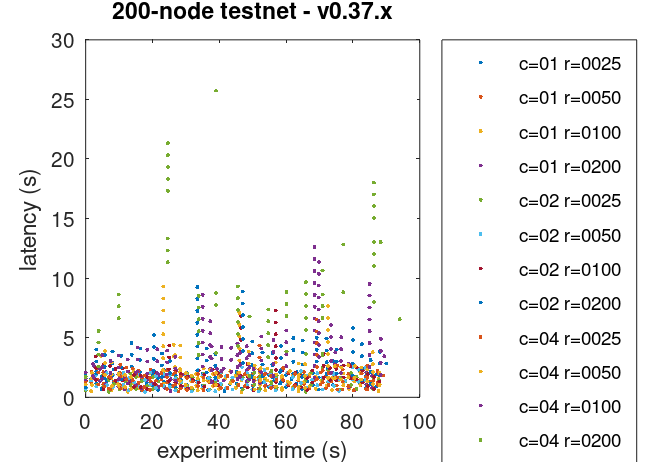

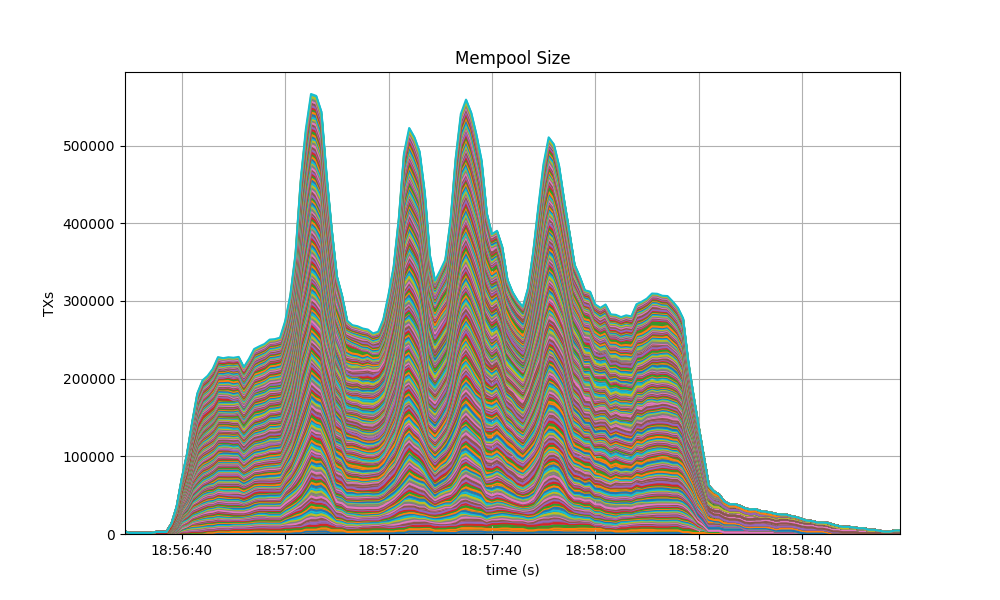

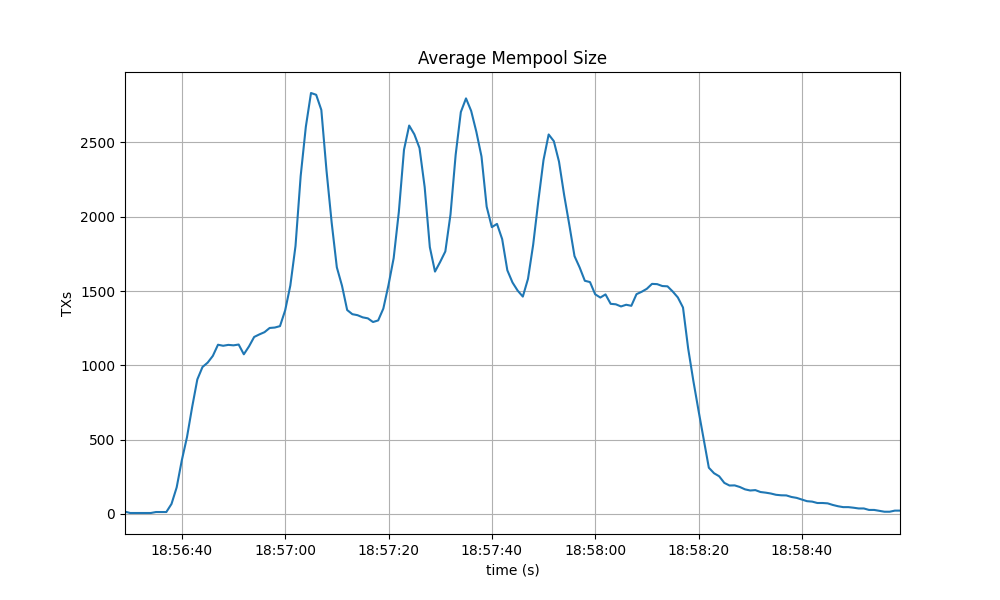

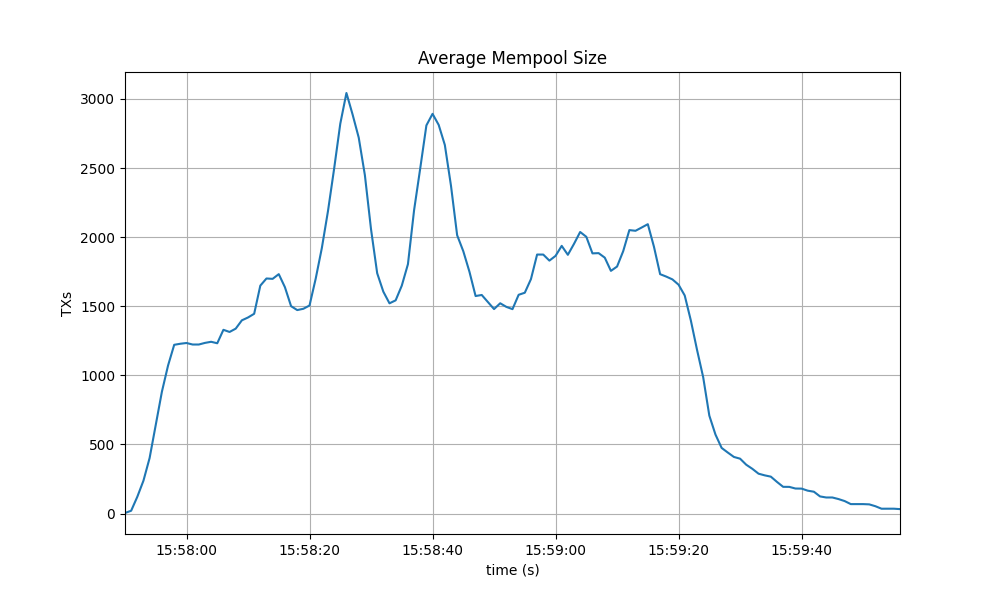

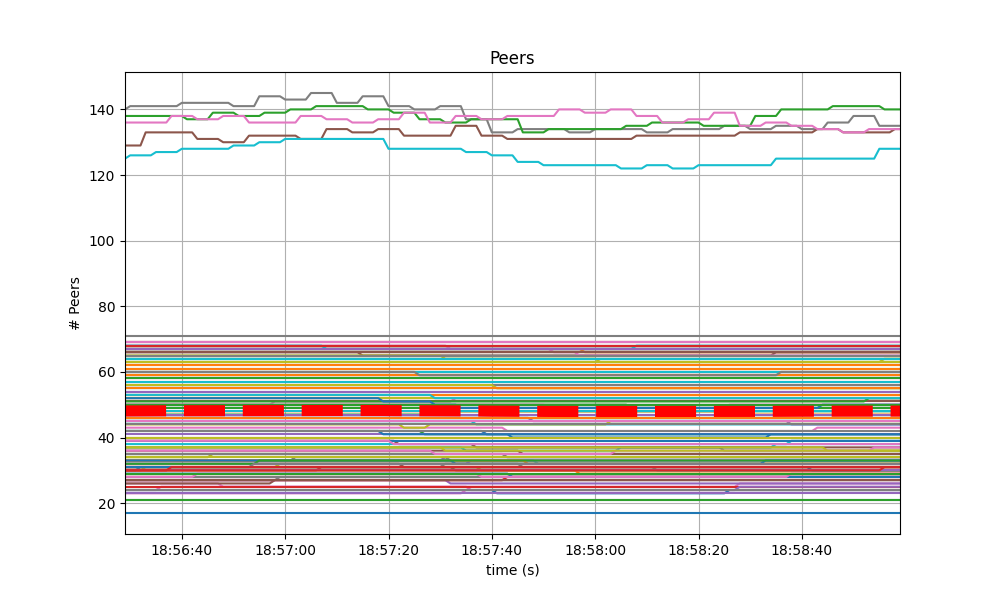

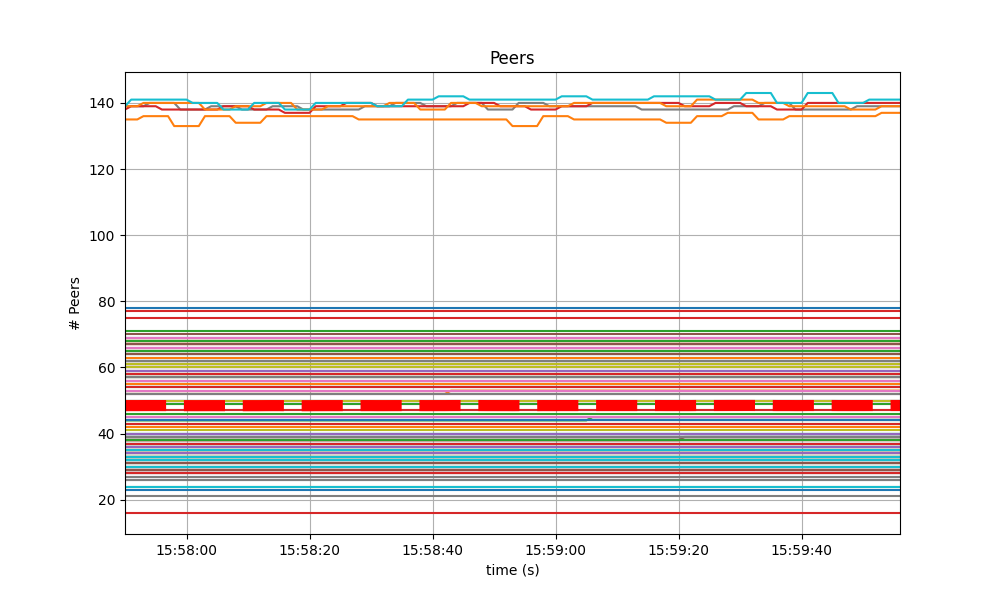

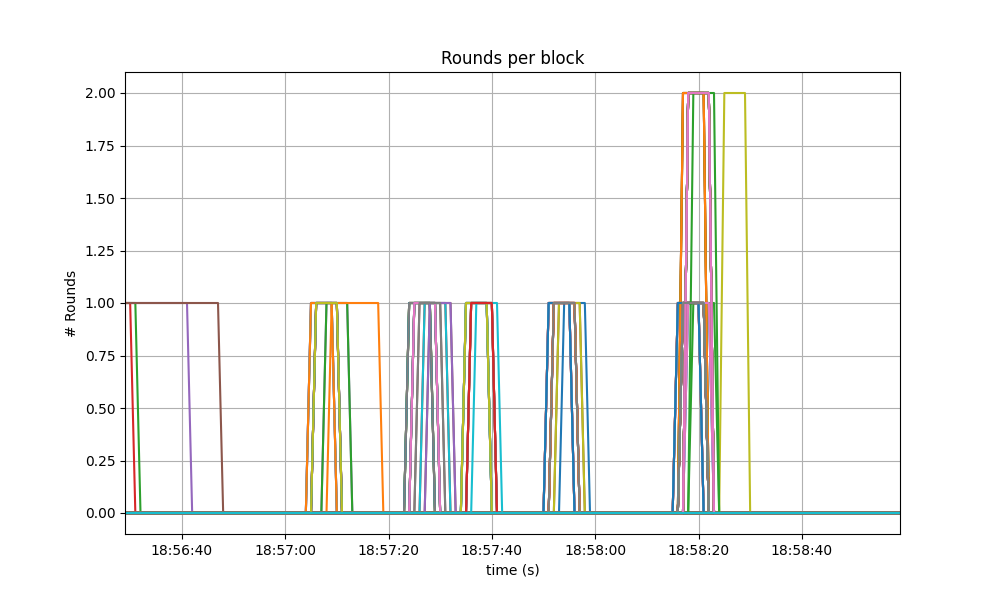

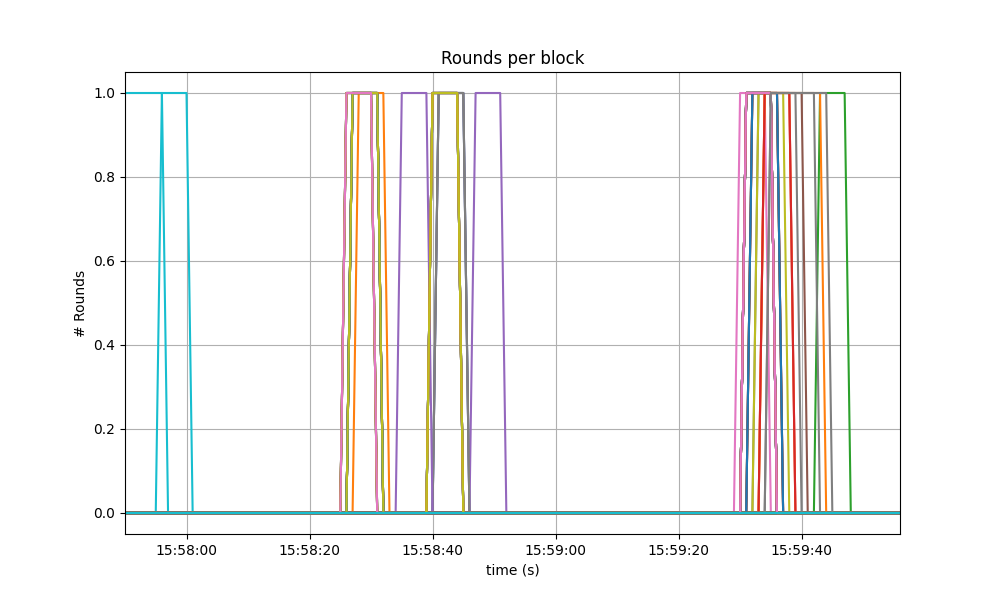

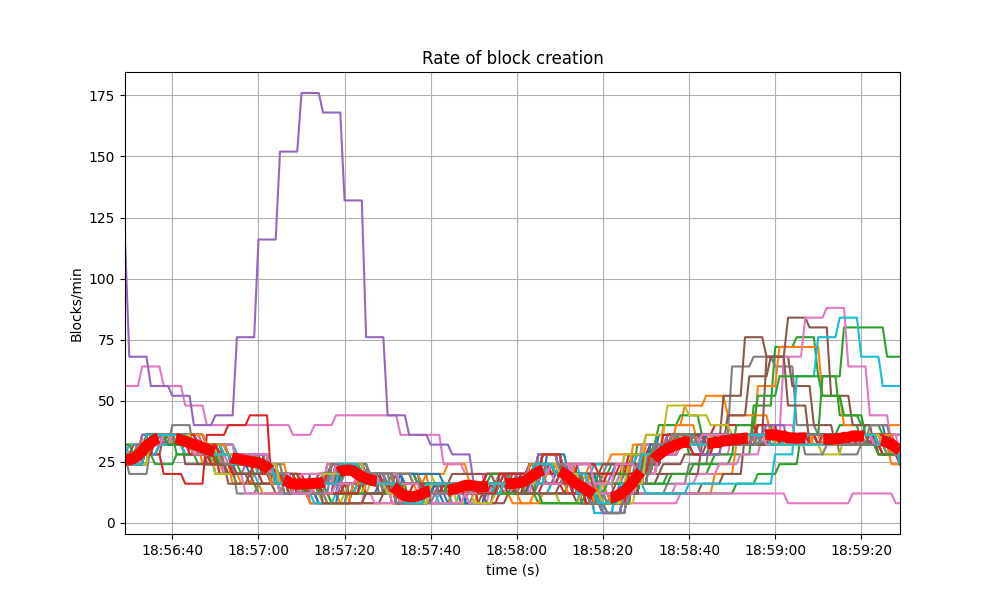

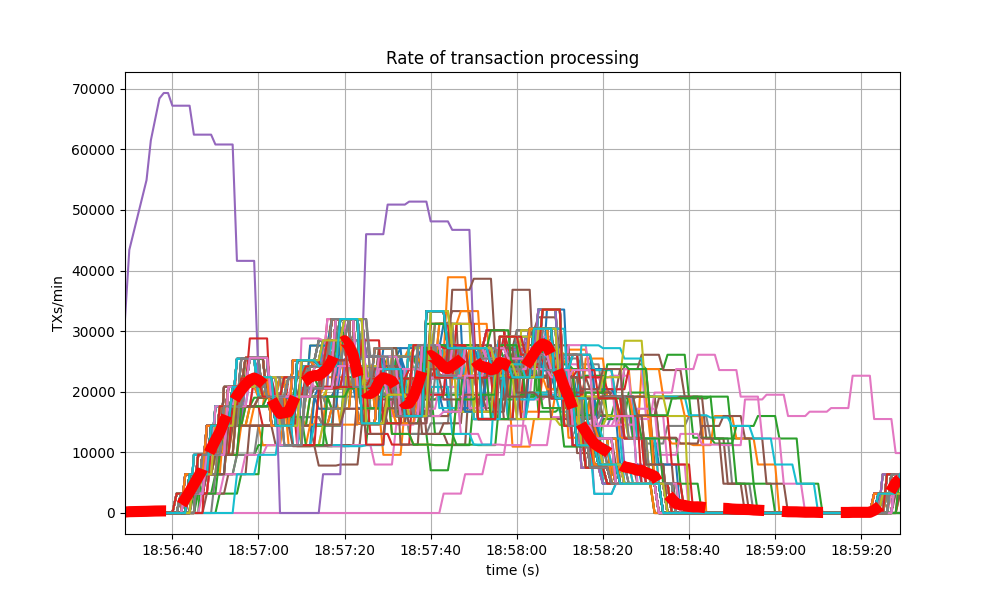

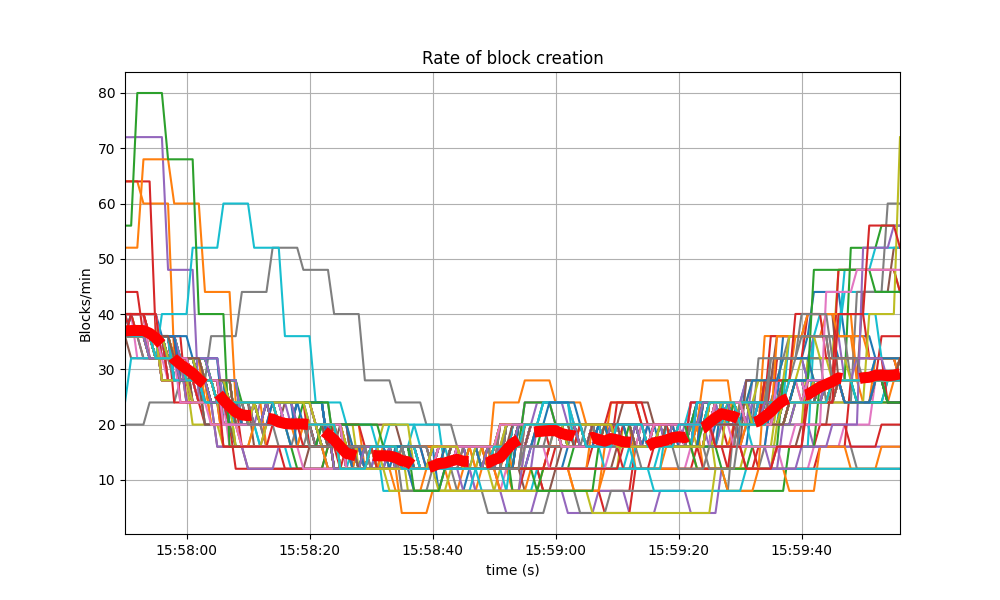

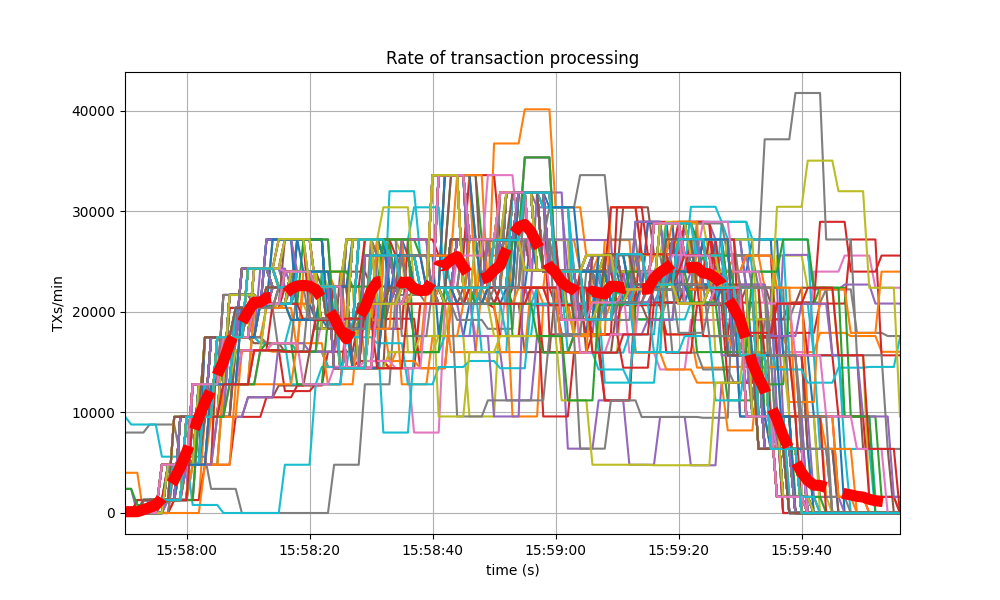

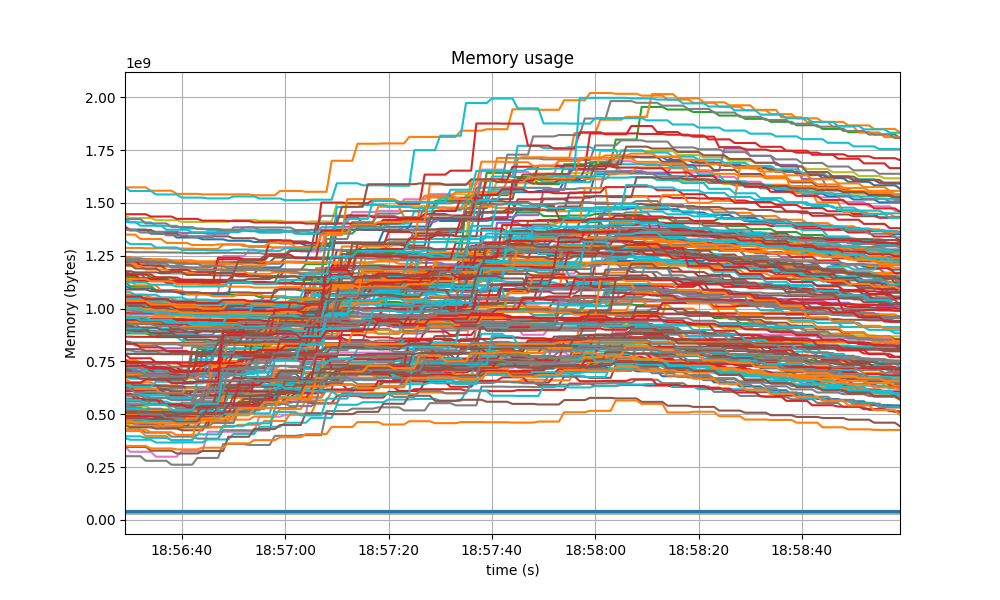

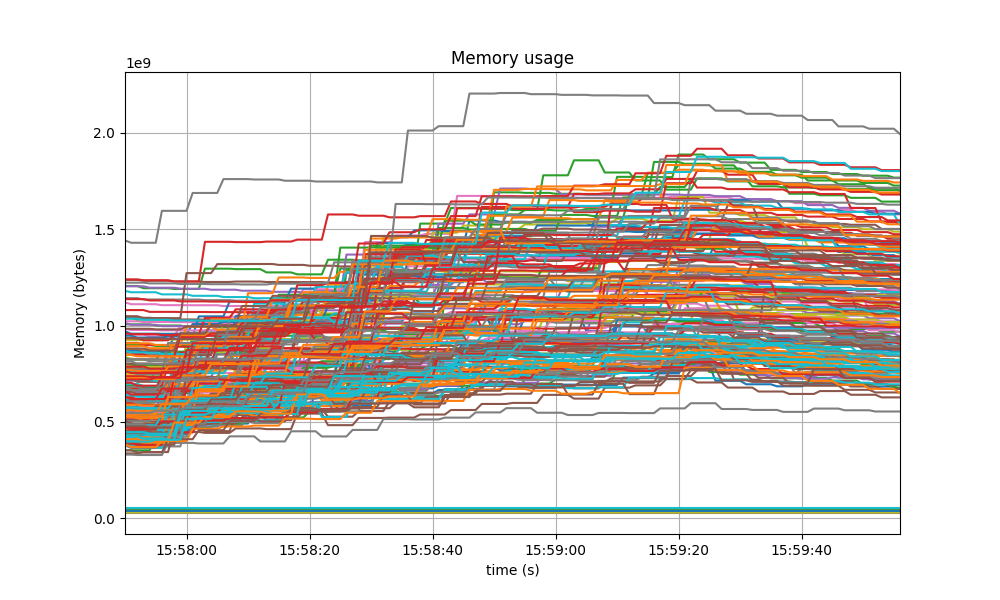

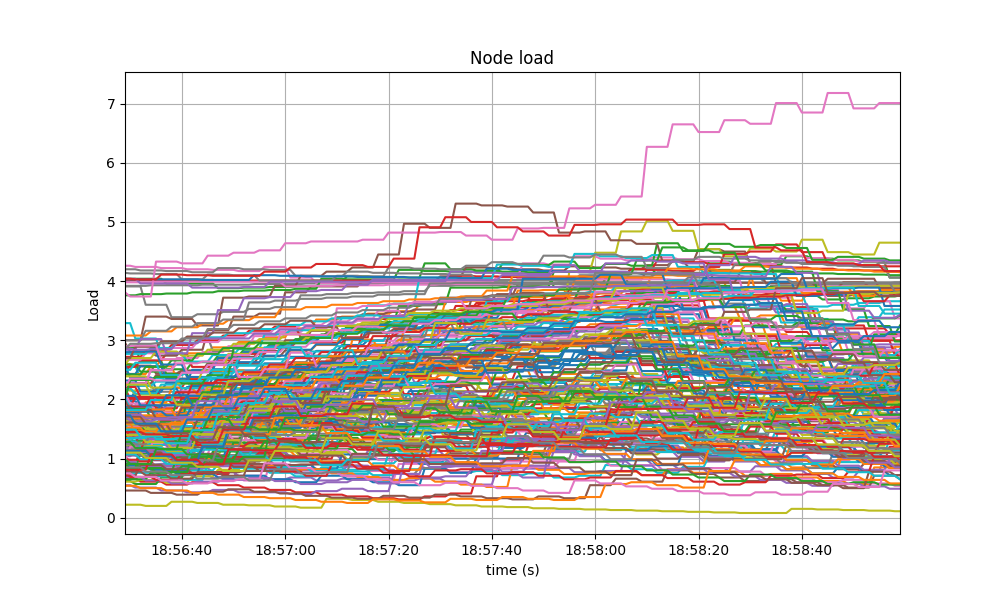

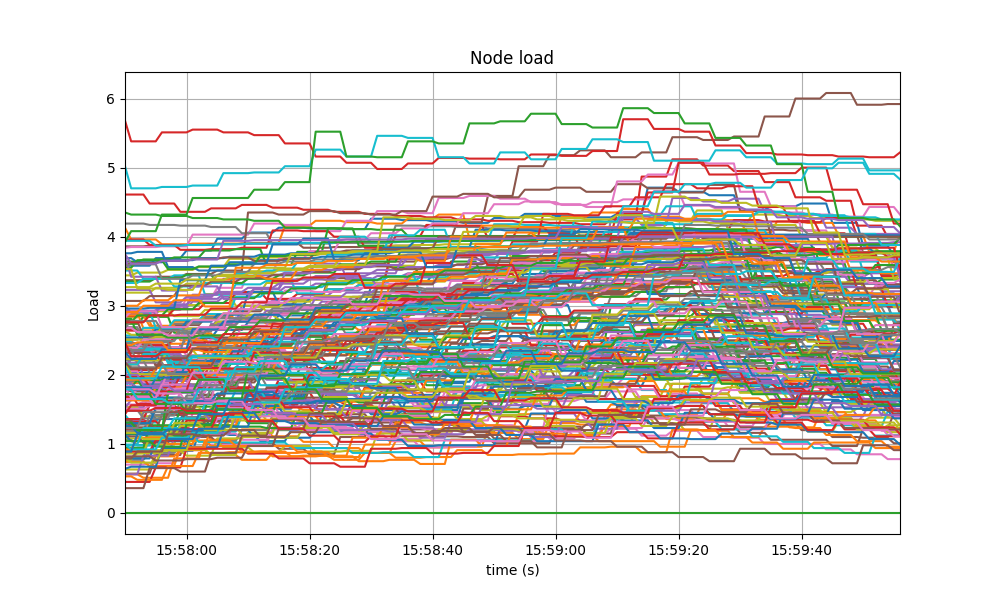

---
order: 1
parent:
  title: CometBFT QA Results v0.37.x
  description: This is a report on the results obtained when running CometBFT v0.37.x on testnets
  order: 5
---

# CometBFT QA Results v0.37.x

This iteration of the QA was run on CometBFT `v0.37.0-alpha3`, the first `v0.37.x` version from the CometBFT repository.

The changes with respect to the baseline, `TM v0.37.x` as of Oct 12, 2022 (Commit: 1cf9d8e276afe8595cba960b51cd056514965fd1), include the rebranding of our fork of Tendermint Core to CometBFT and several improvements, described in the CometBFT [CHANGELOG](https://github.com/cometbft/cometbft/blob/v0.37.0-alpha.3/CHANGELOG.md).

## Testbed

As in other iterations of our QA process, we used a 200-node network as the testbed, plus additional nodes to inject load and collect metrics.

### Saturation point

As in previous iterations of our QA experiments, the system is subjected to a load slightly under the saturation point.
The method to identify the saturation point is explained [here](CometBFT-QA-34.md#finding-the-saturation-point) and its application to the baseline is described [here](TMCore-QA-37.md#finding-the-saturation-point).
We use the same saturation point: `c`, the number of connections created by the load runner process to the target node, is 2, and `r`, the rate or number of transactions issued per second, is 200.

## Examining latencies

The following figure plots six experiments carried out with the network.
The unique identifier (UUID) of each execution is shown on top of its graph.

We can see that the latencies follow comparable patterns across all experiments.
Therefore, in the following sections we only present the results for one representative run, chosen randomly, whose UUID starts with `75cb89a8`.

For reference, the following figure shows the latencies of different configurations of the baseline.
`c=02 r=200` corresponds to the same configuration as in this experiment.

As can be seen, latencies are similar.

## Prometheus Metrics on the Chosen Experiment

This section examines key metrics for the chosen experiment, extracted from Prometheus data.

### Mempool Size

The mempool size, a count of the number of transactions in the mempool, remained stable and homogeneous across all full nodes.
It did not exhibit any unconstrained growth.
The plot below shows the evolution over time of the cumulative number of transactions in all full nodes' mempools, that is, the sum of their mempool sizes at each point in time.

The following picture shows the evolution of the average mempool size over all full nodes, which mostly oscillates between 1500 and 2000 outstanding transactions.

The peaks observed coincide with the moments when some nodes reached round 1 of consensus (see below).

The behavior is similar to that observed in the baseline, presented next.

### Peers

The number of peers was stable at all nodes.
It was higher for the seed nodes (around 140) than for the rest (between 16 and 78).
The red dashed line denotes the average value.

Just as in the baseline, shown next, the fact that non-seed nodes reach more than 50 peers is due to [\#9548].

### Consensus Rounds per Height

Most heights took just one round, that is, round 0, but some nodes needed to advance to round 1, and some even to round 2.

The baseline run shown next presented better results, requiring at most round 1, but reaching higher rounds is not uncommon in the corresponding software version.
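A rounds-per-height distribution like the one discussed above can be tallied with a simple histogram. The Go sketch below assumes a hypothetical input map from block height to the highest consensus round any node reached at that height (for example, derived from the Prometheus data); the function name and sample values are illustrative only.

```go
package main

import (
	"fmt"
	"sort"
)

// roundHistogram counts how many heights ended at each maximum round.
// maxRound maps a block height to the highest round any node reached at
// that height (hypothetical input, for illustration only).
func roundHistogram(maxRound map[int64]int32) map[int32]int {
	hist := make(map[int32]int)
	for _, r := range maxRound {
		hist[r]++
	}
	return hist
}

func main() {
	// Illustrative values only: most heights decide in round 0.
	maxRound := map[int64]int32{1: 0, 2: 0, 3: 1, 4: 0, 5: 2, 6: 0}
	hist := roundHistogram(maxRound)

	// Print rounds in ascending order, since map iteration order is random.
	rounds := make([]int32, 0, len(hist))
	for r := range hist {
		rounds = append(rounds, r)
	}
	sort.Slice(rounds, func(i, j int) bool { return rounds[i] < rounds[j] })
	for _, r := range rounds {
		fmt.Printf("round %d: %d heights\n", r, hist[r])
	}
}
```

A height counted under round 0 is one that completed in a single round; entries under round 1 or 2 correspond to the heights where nodes had to advance further.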

### Blocks Produced per Minute, Transactions Processed per Minute

The following plot shows the rate at which blocks were created, from the point of view of each node.
That is, it shows when each node learned that a new block had been agreed upon.

For most of the time when load was being applied to the system, most of the nodes stayed at around 20 to 25 blocks/minute.

The spike to more than 175 blocks/minute is due to a slow node catching up.

The collective spike on the right of the graph marks the end of the load injection, when blocks become smaller (empty) and impose less strain on the network.
This behavior is reflected in the following graph, which shows the number of transactions processed per minute.

The baseline experienced similar behavior, shown in the following two graphs.
The first depicts the block rate.

The second plots the transaction rate.

### Memory Resident Set Size

The Resident Set Size of all monitored processes is plotted below, with a maximum memory usage of 2 GB.

Similar behavior was observed in the baseline, presented next.

The memory of all processes went down as the load was removed, showing no signs of unconstrained growth.

### CPU Utilization

The best metric from Prometheus to gauge CPU utilization on a Unix machine is `load1`, as it usually appears in the [output of `top`](https://www.digitalocean.com/community/tutorials/load-average-in-linux).

It stays below 5 on most nodes, as seen in the following graph.

Similar behavior was seen in the baseline.

## Test Results

The comparison against the baseline results shows that both scenarios had similar numbers and are therefore equivalent.
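The report does not state a numeric criterion for "similar numbers". Purely as an illustration of how such a comparison could be automated, the Go sketch below checks summary values of the version under test against the baseline within a relative tolerance. The 10% threshold, the metric names, and the values are hypothetical assumptions, not figures from this report.

```go
package main

import (
	"fmt"
	"math"
)

// withinTolerance reports whether candidate is within relTol (e.g. 0.10 for
// 10%) of baseline, relative to the baseline's magnitude. The tolerance is a
// hypothetical choice, not one used in this report.
func withinTolerance(baseline, candidate, relTol float64) bool {
	if baseline == 0 {
		return candidate == 0
	}
	return math.Abs(candidate-baseline)/math.Abs(baseline) <= relTol
}

// metric pairs a hypothetical summary value for the baseline and for the
// version under test.
type metric struct {
	name                string
	baseline, candidate float64
}

func main() {
	// Illustrative numbers only, not measurements from the experiments.
	metrics := []metric{
		{"metric A", 100, 104}, // 4% difference
		{"metric B", 20, 25},   // 25% difference
	}
	for _, m := range metrics {
		fmt.Printf("%-10s similar=%v\n", m.name, withinTolerance(m.baseline, m.candidate, 0.10))
	}
}
```

A fixed relative tolerance is only one possible design; for noisy metrics such as latency, comparing distributions across several runs would likely be more robust than comparing single values.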

The conclusion of these tests is shown in the following table, along with the commit version used in the experiments.

| Scenario | Date       | Version                                                   | Result |
|----------|------------|-----------------------------------------------------------|--------|
| CometBFT | 2023-02-14 | v0.37.0-alpha3 (bef9a830e7ea7da30fa48f2cc236b1f465cc5833) | Pass   |

[\#9548]: https://github.com/tendermint/tendermint/issues/9548