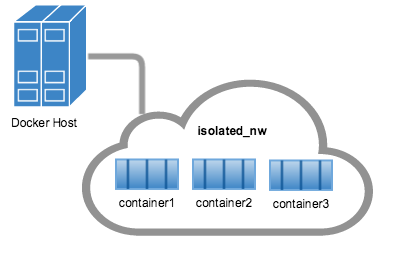

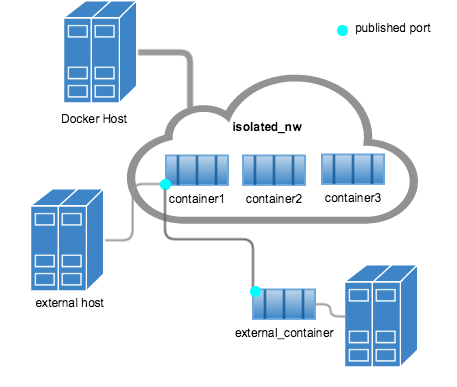

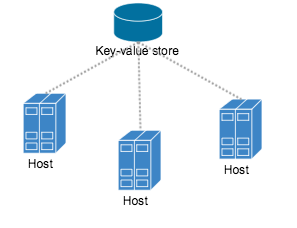

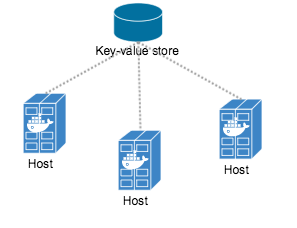

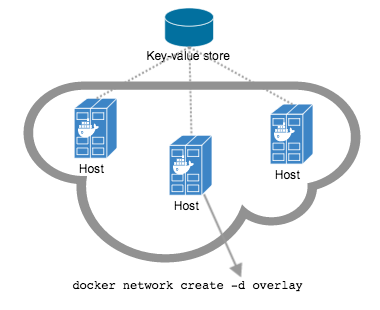

<!--[metadata]>
+++
title = "Docker container networking"
description = "How do we connect docker containers within and across hosts ?"
keywords = ["Examples, Usage, network, docker, documentation, user guide, multihost, cluster"]
[menu.main]
parent = "smn_networking"
weight = -5
+++
<![end-metadata]-->

# Understand Docker container networks

To build web applications that act in concert but do so securely, use the Docker
networks feature. Networks, by definition, provide complete isolation for
containers. So, it is important to have control over the networks your
applications run on. Docker container networks give you that control.

This section provides an overview of the default networking behavior that Docker
Engine delivers natively. It describes the type of networks created by default
and how to create your own, user-defined networks. It also describes the
resources required to create networks on a single host or across a cluster of
hosts.

## Default Networks

When you install Docker, it creates three networks automatically. You can list
these networks using the `docker network ls` command:

```
$ docker network ls

NETWORK ID          NAME                DRIVER
7fca4eb8c647        bridge              bridge
9f904ee27bf5        none                null
cf03ee007fb4        host                host
```

Historically, these three networks are part of Docker's implementation. When
you run a container you can use the `--network` flag to specify which network you
want to run a container on. These three networks are still available to you.

The `bridge` network represents the `docker0` network present in all Docker
installations. Unless you specify otherwise with the `docker run
--network=<NETWORK>` option, the Docker daemon connects containers to this network
by default.
You can see this bridge as part of a host's network stack by using
the `ifconfig` command on the host.

```
$ ifconfig

docker0   Link encap:Ethernet  HWaddr 02:42:47:bc:3a:eb
          inet addr:172.17.0.1  Bcast:0.0.0.0  Mask:255.255.0.0
          inet6 addr: fe80::42:47ff:febc:3aeb/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:9001  Metric:1
          RX packets:17 errors:0 dropped:0 overruns:0 frame:0
          TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:1100 (1.1 KB)  TX bytes:648 (648.0 B)
```

The `none` network adds a container to a container-specific network stack. That container has no network interface other than loopback. Attaching to such a container and looking at its stack, you see this:

```
$ docker attach nonenetcontainer

root@0cb243cd1293:/# cat /etc/hosts
127.0.0.1	localhost
::1	localhost ip6-localhost ip6-loopback
fe00::0	ip6-localnet
ff00::0	ip6-mcastprefix
ff02::1	ip6-allnodes
ff02::2	ip6-allrouters
root@0cb243cd1293:/# ifconfig
lo        Link encap:Local Loopback
          inet addr:127.0.0.1  Mask:255.0.0.0
          inet6 addr: ::1/128 Scope:Host
          UP LOOPBACK RUNNING  MTU:65536  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)

root@0cb243cd1293:/#
```

>**Note**: You can detach from the container and leave it running with `CTRL-p CTRL-q`.

The `host` network adds a container on the host's network stack. You'll find the
network configuration inside the container is identical to the host.

With the exception of the `bridge` network, you really don't need to
interact with these default networks. While you can list and inspect them, you
cannot remove them. They are required by your Docker installation. However, you
can add your own user-defined networks and these you can remove when you no
longer need them.
Before you learn more about creating your own networks, it is
worth looking at the default `bridge` network a bit.


### The default bridge network in detail

The default `bridge` network is present on all Docker hosts. The `docker network inspect`
command returns information about a network:

```
$ docker network inspect bridge

[
   {
       "Name": "bridge",
       "Id": "f7ab26d71dbd6f557852c7156ae0574bbf62c42f539b50c8ebde0f728a253b6f",
       "Scope": "local",
       "Driver": "bridge",
       "IPAM": {
           "Driver": "default",
           "Config": [
               {
                   "Subnet": "172.17.0.1/16",
                   "Gateway": "172.17.0.1"
               }
           ]
       },
       "Containers": {},
       "Options": {
           "com.docker.network.bridge.default_bridge": "true",
           "com.docker.network.bridge.enable_icc": "true",
           "com.docker.network.bridge.enable_ip_masquerade": "true",
           "com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",
           "com.docker.network.bridge.name": "docker0",
           "com.docker.network.driver.mtu": "9001"
       }
   }
]
```

The Engine automatically creates a `Subnet` and `Gateway` for the network.
The `docker run` command automatically adds new containers to this network.

```
$ docker run -itd --name=container1 busybox

3386a527aa08b37ea9232cbcace2d2458d49f44bb05a6b775fba7ddd40d8f92c

$ docker run -itd --name=container2 busybox

94447ca479852d29aeddca75c28f7104df3c3196d7b6d83061879e339946805c
```

Inspecting the `bridge` network again after starting two containers shows both newly launched containers in the network.
Their IDs show up in the "Containers" section of `docker network inspect`:

```
$ docker network inspect bridge

[
    {
        "Name": "bridge",
        "Id": "f7ab26d71dbd6f557852c7156ae0574bbf62c42f539b50c8ebde0f728a253b6f",
        "Scope": "local",
        "Driver": "bridge",
        "IPAM": {
            "Driver": "default",
            "Config": [
                {
                    "Subnet": "172.17.0.1/16",
                    "Gateway": "172.17.0.1"
                }
            ]
        },
        "Containers": {
            "3386a527aa08b37ea9232cbcace2d2458d49f44bb05a6b775fba7ddd40d8f92c": {
                "EndpointID": "647c12443e91faf0fd508b6edfe59c30b642abb60dfab890b4bdccee38750bc1",
                "MacAddress": "02:42:ac:11:00:02",
                "IPv4Address": "172.17.0.2/16",
                "IPv6Address": ""
            },
            "94447ca479852d29aeddca75c28f7104df3c3196d7b6d83061879e339946805c": {
                "EndpointID": "b047d090f446ac49747d3c37d63e4307be745876db7f0ceef7b311cbba615f48",
                "MacAddress": "02:42:ac:11:00:03",
                "IPv4Address": "172.17.0.3/16",
                "IPv6Address": ""
            }
        },
        "Options": {
            "com.docker.network.bridge.default_bridge": "true",
            "com.docker.network.bridge.enable_icc": "true",
            "com.docker.network.bridge.enable_ip_masquerade": "true",
            "com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",
            "com.docker.network.bridge.name": "docker0",
            "com.docker.network.driver.mtu": "9001"
        }
    }
]
```

The `docker network inspect` command above shows all the connected containers and their network resources on a given network. Containers in this default network are able to communicate with each other using IP addresses. Docker does not support automatic service discovery on the default bridge network. If you want containers in this default bridge network to reach each other by container name, you must connect them via the legacy `docker run --link` option.
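
As a rough illustration of reading that output programmatically, the sketch below parses a trimmed copy of the `docker network inspect bridge` JSON shown above and maps each container's short ID to its IPv4 address. The helper name `container_addresses` and the `SAMPLE` constant are made up for this example; in practice the JSON would come from running the command yourself.

```python
import ipaddress
import json

# Trimmed copy of the `docker network inspect bridge` output shown above.
SAMPLE = """
[
  {
    "Name": "bridge",
    "Containers": {
      "3386a527aa08b37ea9232cbcace2d2458d49f44bb05a6b775fba7ddd40d8f92c": {
        "MacAddress": "02:42:ac:11:00:02",
        "IPv4Address": "172.17.0.2/16"
      },
      "94447ca479852d29aeddca75c28f7104df3c3196d7b6d83061879e339946805c": {
        "MacAddress": "02:42:ac:11:00:03",
        "IPv4Address": "172.17.0.3/16"
      }
    }
  }
]
"""


def container_addresses(inspect_json):
    """Map each container's 12-character short ID to its IPv4 address."""
    result = {}
    for network in json.loads(inspect_json):
        for container_id, endpoint in network.get("Containers", {}).items():
            # "172.17.0.2/16" -> "172.17.0.2" (drop the prefix length).
            iface = ipaddress.ip_interface(endpoint["IPv4Address"])
            result[container_id[:12]] = str(iface.ip)
    return result


if __name__ == "__main__":
    for short_id, address in container_addresses(SAMPLE).items():
        print(short_id, address)
```

The addresses it extracts are the ones you would pass to `ping` from inside another container on the same network.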

You can `attach` to a running `container` and investigate its configuration:

```
$ docker attach container1

root@0cb243cd1293:/# ifconfig
eth0      Link encap:Ethernet  HWaddr 02:42:AC:11:00:02
          inet addr:172.17.0.2  Bcast:0.0.0.0  Mask:255.255.0.0
          inet6 addr: fe80::42:acff:fe11:2/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:9001  Metric:1
          RX packets:16 errors:0 dropped:0 overruns:0 frame:0
          TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:1296 (1.2 KiB)  TX bytes:648 (648.0 B)

lo        Link encap:Local Loopback
          inet addr:127.0.0.1  Mask:255.0.0.0
          inet6 addr: ::1/128 Scope:Host
          UP LOOPBACK RUNNING  MTU:65536  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)
```

Then use `ping` for about 3 seconds to test the connectivity of the containers on this `bridge` network.

```
root@0cb243cd1293:/# ping -w3 172.17.0.3

PING 172.17.0.3 (172.17.0.3): 56 data bytes
64 bytes from 172.17.0.3: seq=0 ttl=64 time=0.096 ms
64 bytes from 172.17.0.3: seq=1 ttl=64 time=0.080 ms
64 bytes from 172.17.0.3: seq=2 ttl=64 time=0.074 ms

--- 172.17.0.3 ping statistics ---
3 packets transmitted, 3 packets received, 0% packet loss
round-trip min/avg/max = 0.074/0.083/0.096 ms
```

Finally, use the `cat` command to check the `container1` network configuration:

```
root@0cb243cd1293:/# cat /etc/hosts

172.17.0.2	3386a527aa08
127.0.0.1	localhost
::1	localhost ip6-localhost ip6-loopback
fe00::0	ip6-localnet
ff00::0	ip6-mcastprefix
ff02::1	ip6-allnodes
ff02::2	ip6-allrouters
```

To detach from `container1` and leave it running, use `CTRL-p CTRL-q`. Then attach to `container2` and repeat these three commands.

```
$ docker attach container2

root@0cb243cd1293:/# ifconfig

eth0      Link encap:Ethernet  HWaddr 02:42:AC:11:00:03
          inet addr:172.17.0.3  Bcast:0.0.0.0  Mask:255.255.0.0
          inet6 addr: fe80::42:acff:fe11:3/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:9001  Metric:1
          RX packets:15 errors:0 dropped:0 overruns:0 frame:0
          TX packets:13 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:1166 (1.1 KiB)  TX bytes:1026 (1.0 KiB)

lo        Link encap:Local Loopback
          inet addr:127.0.0.1  Mask:255.0.0.0
          inet6 addr: ::1/128 Scope:Host
          UP LOOPBACK RUNNING  MTU:65536  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)

root@0cb243cd1293:/# ping -w3 172.17.0.2

PING 172.17.0.2 (172.17.0.2): 56 data bytes
64 bytes from 172.17.0.2: seq=0 ttl=64 time=0.067 ms
64 bytes from 172.17.0.2: seq=1 ttl=64 time=0.075 ms
64 bytes from 172.17.0.2: seq=2 ttl=64 time=0.072 ms

--- 172.17.0.2 ping statistics ---
3 packets transmitted, 3 packets received, 0% packet loss
round-trip min/avg/max = 0.067/0.071/0.075 ms
/ # cat /etc/hosts
172.17.0.3	94447ca47985
127.0.0.1	localhost
::1	localhost ip6-localhost ip6-loopback
fe00::0	ip6-localnet
ff00::0	ip6-mcastprefix
ff02::1	ip6-allnodes
ff02::2	ip6-allrouters
```

The default `docker0` bridge network supports the use of port mapping and `docker run --link` to allow communications between containers in the `docker0` network. These techniques are cumbersome to set up and prone to error. While they are still available to you as techniques, it is better to avoid them and define your own bridge networks instead.

## User-defined networks

You can create your own user-defined networks that better isolate containers.
Docker provides some default **network drivers** for creating these
networks. You can create a new **bridge network** or **overlay network**. You
can also create a **network plugin** or **remote network** written to your own
specifications.

You can create multiple networks. You can add containers to more than one
network. Containers can only communicate within networks but not across
networks. A container attached to two networks can communicate with member
containers in either network. When a container is connected to multiple
networks, its external connectivity is provided via the first non-internal
network, in lexical order.

The next few sections describe each of Docker's built-in network drivers in
greater detail.

### A bridge network

The easiest user-defined network to create is a `bridge` network. This network
is similar to the historical, default `docker0` network. There are some added
features and some old features that aren't available.

```
$ docker network create --driver bridge isolated_nw
1196a4c5af43a21ae38ef34515b6af19236a3fc48122cf585e3f3054d509679b

$ docker network inspect isolated_nw

[
    {
        "Name": "isolated_nw",
        "Id": "1196a4c5af43a21ae38ef34515b6af19236a3fc48122cf585e3f3054d509679b",
        "Scope": "local",
        "Driver": "bridge",
        "IPAM": {
            "Driver": "default",
            "Config": [
                {
                    "Subnet": "172.21.0.0/16",
                    "Gateway": "172.21.0.1/16"
                }
            ]
        },
        "Containers": {},
        "Options": {}
    }
]

$ docker network ls

NETWORK ID          NAME                DRIVER
9f904ee27bf5        none                null
cf03ee007fb4        host                host
7fca4eb8c647        bridge              bridge
c5ee82f76de3        isolated_nw         bridge
```

After you create the network, you can launch containers on it using the `docker run --network=<NETWORK>` option.

```
$ docker run --network=isolated_nw -itd --name=container3 busybox

8c1a0a5be480921d669a073393ade66a3fc49933f08bcc5515b37b8144f6d47c

$ docker network inspect isolated_nw
[
    {
        "Name": "isolated_nw",
        "Id": "1196a4c5af43a21ae38ef34515b6af19236a3fc48122cf585e3f3054d509679b",
        "Scope": "local",
        "Driver": "bridge",
        "IPAM": {
            "Driver": "default",
            "Config": [
                {}
            ]
        },
        "Containers": {
            "8c1a0a5be480921d669a073393ade66a3fc49933f08bcc5515b37b8144f6d47c": {
                "EndpointID": "93b2db4a9b9a997beb912d28bcfc117f7b0eb924ff91d48cfa251d473e6a9b08",
                "MacAddress": "02:42:ac:15:00:02",
                "IPv4Address": "172.21.0.2/16",
                "IPv6Address": ""
            }
        },
        "Options": {}
    }
]
```

The containers you launch into this network must reside on the same Docker host.
Each container in the network can immediately communicate with other containers
in the network. However, the network itself isolates the containers from external
networks.

Within a user-defined bridge network, linking is not supported. You can
expose and publish container ports on containers in this network. This is useful
if you want to make a portion of the `bridge` network available to an outside
network.

A bridge network is useful in cases where you want to run a relatively small
network on a single host. You can, however, create significantly larger networks
by creating an `overlay` network.


### An overlay network

Docker's `overlay` network driver supports multi-host networking natively
out-of-the-box. This support is accomplished with the help of `libnetwork`, a
built-in VXLAN-based overlay network driver, and Docker's `libkv` library.

The `overlay` network requires a valid key-value store service. Currently,
Docker's `libkv` supports Consul, Etcd, and ZooKeeper (Distributed store).
Before creating a network you must install and configure your chosen key-value store
service. The Docker hosts that you intend to network and the service must be
able to communicate.

Each host in the network must run a Docker Engine instance. The easiest way to
provision the hosts is with Docker Machine.

You should open the following ports between each of your hosts.

| Protocol | Port | Description           |
|----------|------|-----------------------|
| udp      | 4789 | Data plane (VXLAN)    |
| tcp/udp  | 7946 | Control plane         |

Your key-value store service may require additional ports.
Check your vendor's documentation and open any required ports.

Once you have several machines provisioned, you can use Docker Swarm to quickly
form them into a swarm which includes a discovery service as well.

To create an overlay network, you configure options on the `daemon` on each
Docker Engine for use with the `overlay` network. There are three options to set:

<table>
    <thead>
    <tr>
        <th>Option</th>
        <th>Description</th>
    </tr>
    </thead>
    <tbody>
    <tr>
        <td><pre>--cluster-store=PROVIDER://URL</pre></td>
        <td>Describes the location of the KV service.</td>
    </tr>
    <tr>
        <td><pre>--cluster-advertise=HOST_IP|HOST_IFACE:PORT</pre></td>
        <td>The IP address or interface of the HOST used for clustering.</td>
    </tr>
    <tr>
        <td><pre>--cluster-store-opt=KEY-VALUE OPTIONS</pre></td>
        <td>Options such as TLS certificates or tuning discovery timers.</td>
    </tr>
    </tbody>
</table>

Create an `overlay` network on one of the machines in the swarm.

    $ docker network create --driver overlay my-multi-host-network

This results in a single network spanning multiple hosts. An `overlay` network
provides complete isolation for the containers.
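
The network creation above only works once every Engine has been started with the daemon options from the table. As a minimal sketch, the helper below assembles those flags for one host. The function name `cluster_daemon_flags`, the Consul address `192.0.2.10:8500`, and the `eth0:2376` advertise value are all hypothetical, and the sketch assumes each store option is passed as a `key=value` pair; check the daemon reference for your Docker version before relying on it.

```python
def cluster_daemon_flags(store_url, advertise, store_opts=None):
    """Return the overlay-related daemon flags as a list of strings."""
    flags = [
        "--cluster-store=" + store_url,      # PROVIDER://URL of the KV service
        "--cluster-advertise=" + advertise,  # HOST_IP:PORT or HOST_IFACE:PORT
    ]
    # One --cluster-store-opt flag per option, in a stable (sorted) order.
    # Assumption: options are written as key=value.
    for key, value in sorted((store_opts or {}).items()):
        flags.append("--cluster-store-opt={}={}".format(key, value))
    return flags


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    print(" ".join(cluster_daemon_flags("consul://192.0.2.10:8500", "eth0:2376")))
```

Generating the flags from one place makes it easier to keep the `--cluster-store` value identical on every host, which the hosts need in order to see the same network.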

Then, on each host, launch containers making sure to specify the network name.

    $ docker run -itd --network=my-multi-host-network busybox

Once connected, each container has access to all the containers in the network
regardless of which Docker host the container was launched on.

If you would like to try this for yourself, see the [Getting started for
overlay](get-started-overlay.md).

### Custom network plugin

If you like, you can write your own network driver plugin. A network
driver plugin makes use of Docker's plugin infrastructure. In this
infrastructure, a plugin is a process running on the same Docker host as the
Docker `daemon`.

Network plugins follow the same restrictions and installation rules as other
plugins. All plugins make use of the plugin API. They have a lifecycle that
encompasses installation, starting, stopping and activation.

Once you have created and installed a custom network driver, you use it like the
built-in network drivers. For example:

    $ docker network create --driver weave mynet

You can inspect it, add containers to it and remove them from it, and so forth.
Of course, different plugins may make use of different technologies or frameworks. Custom
networks can include features not present in Docker's default networks. For more
information on writing plugins, see [Extending Docker](../../extend/index.md) and
[Writing a network driver plugin](../../extend/plugins_network.md).

### Docker embedded DNS server

The Docker daemon runs an embedded DNS server to provide automatic service discovery
for containers connected to user-defined networks. Name resolution requests from
the containers are handled first by the embedded DNS server. If the embedded DNS
server is unable to resolve the request, it is forwarded to any external DNS
servers configured for the container.
To facilitate this, when the container is
created, only the embedded DNS server reachable at `127.0.0.11` is listed
in the container's `resolv.conf` file. For more information on the embedded DNS server on
user-defined networks, see [embedded DNS server in user-defined networks](configure-dns.md).

## Links

Before the Docker network feature, you could use the Docker link feature to
allow containers to discover each other. With the introduction of Docker networks,
containers can be discovered by name automatically. You can still create
links, but they behave differently when used in the default `docker0` bridge network
compared to user-defined networks. For more information, refer to
[Legacy Links](default_network/dockerlinks.md) for the link feature in the default `bridge` network
and to [linking containers in user-defined networks](work-with-networks.md#linking-containers-in-user-defined-networks) for links
functionality in user-defined networks.

## Related information

- [Work with network commands](work-with-networks.md)
- [Get started with multi-host networking](get-started-overlay.md)
- [Managing Data in Containers](../../tutorials/dockervolumes.md)
- [Docker Machine overview](https://docs.docker.com/machine)
- [Docker Swarm overview](https://docs.docker.com/swarm)
- [Investigate the LibNetwork project](https://github.com/docker/libnetwork)