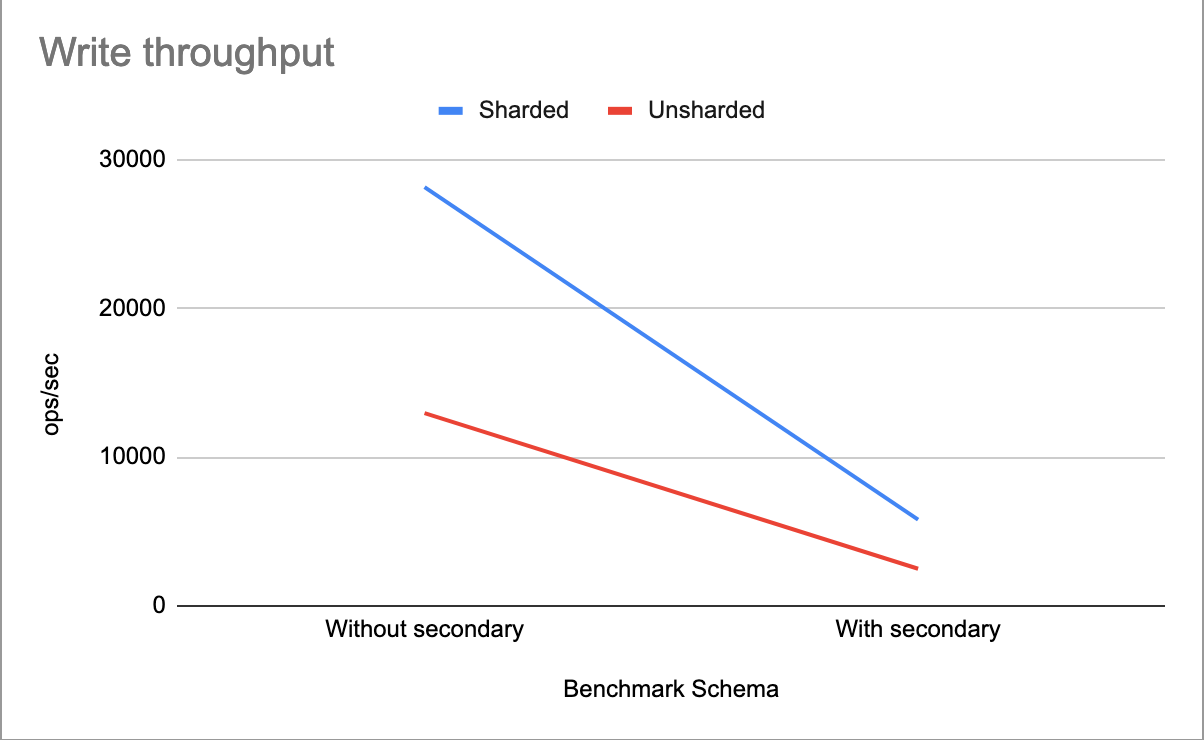

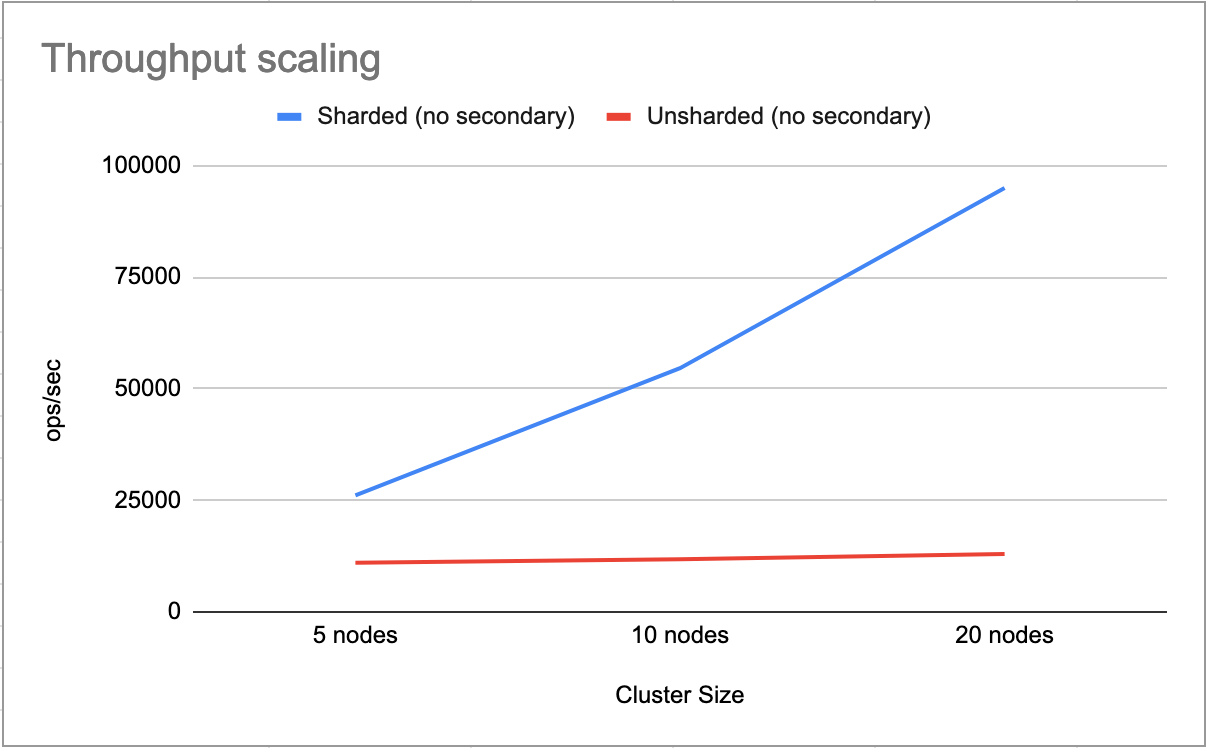

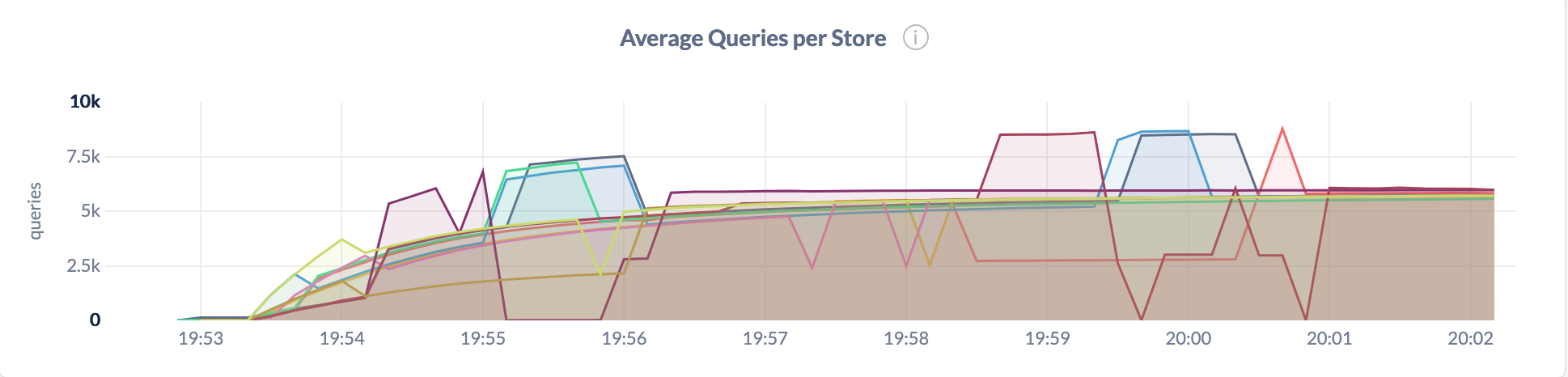

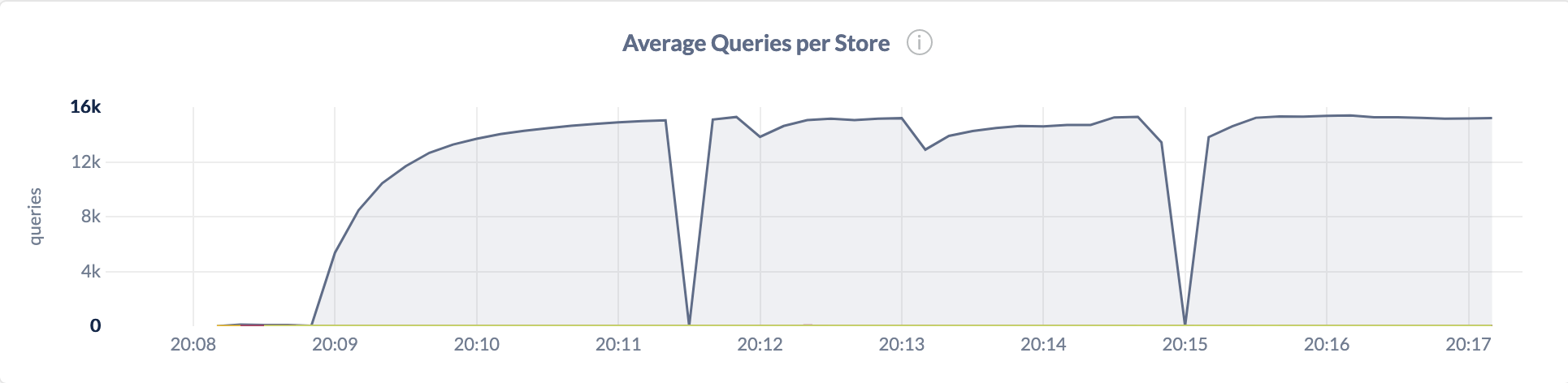

github.com/cockroachdb/cockroach@v20.2.0-alpha.1+incompatible/docs/RFCS/20191028_easier_hash_sharded_indexes.md (about) 1 - Feature Name: Easier Hash Sharded Indexes 2 - Status: draft 3 - Start Date: 2019-10-28 4 - Authors: Aayush Shah, Andrew Werner 5 - RFC PR: PR # after acceptance of initial draft 6 - Cockroach Issue: [#39340] (https://github.com/cockroachdb/cockroach/issues/39340) 7 8 # Summary 9 10 This is a proposal to provide better UX for creating hash sharded indexes through easier 11 syntax. This allows a useful mechanism to alleviate single range hot spots due to 12 sequential workloads. 13 14 # Motivation 15 16 Currently, in CockroachDB, write workloads that are sequential on a particular key will 17 cause a hotspot on a single range if there's any sort of index with the said key as a 18 prefix. Note that load-based splitting ([#31413]) doesn't help us here since our reservoir 19 sampling approach cannot find a valid split point that divides the incoming workload 20 evenly (since almost all queries are incident on _one_ of the boundaries of the concerned 21 range). 22 23 In 19.2, we added optimizer support to automatically add filters based on check 24 constraints. This can allow users to alleviate aforementioned single range hotspots by 25 creating an index on a computed shard column. However, this feature still requires some 26 relatively unattractive syntax to manually add a computed column which will act as the 27 shard key. This is illustrated in the following example from issue [#39340] 28 (https://github.com/cockroachdb/cockroach/issues/39340). 29 30 Imagine we have an IOT application where we are tracking a bunch of devices and each 31 device creates events. Sometimes we want to know which devices published events in some 32 time period. We might start with the following schema. 33 34 ```sql 35 CREATE TABLE events ( 36 device_id 37 UUID, 38 event_id 39 UUID, 40 ts 41 TIMESTAMP, 42 data 43 JSONB, 44 PRIMARY KEY (device_id, ts, event_id), 45 INDEX (ts) 46 ); 47 ``` 48 49 This schema would have a hot spot on that `INDEX (ts)` which would be rather unfortunate. 50 We can alleviate this hot spot by sharding this time ordered index. 51 52 ```sql 53 CREATE TABLE events ( 54 device_id 55 UUID, 56 shard 57 INT8 58 AS (fnv32(device_id) % 8) STORED 59 CHECK (shard IN (0, 1, 2, 3, 4, 5, 6, 7)), 60 event_id 61 UUID, 62 ts 63 TIMESTAMP, 64 data 65 JSONB, 66 PRIMARY KEY (device_id, ts, event_id), 67 INDEX (shard, ts) 68 ); 69 ``` 70 71 This isn't too big of a lift here because the device ID is easy to hash in sql. Imagine 72 instead we had a primary key based on some other features: 73 74 ```sql 75 CREATE TABLE events ( 76 product_id 77 INT8, 78 owner 79 UUID, 80 serial_number 81 VARCHAR, 82 event_id 83 UUID, 84 ts 85 TIMESTAMP, 86 data 87 JSONB, 88 PRIMARY KEY (product_id, owner, serial_number, ts, event_id), 89 INDEX (ts) 90 ); 91 ``` 92 93 In order to shard this we'll need something like: 94 95 ```sql 96 CREATE TABLE events ( 97 product_id 98 INT8, 99 owner 100 UUID, 101 serial_number 102 VARCHAR, 103 shard 104 INT8 105 AS ( 106 fnv32( 107 concat(hex(product_id)), 108 owner::STRING, 109 serial_number 110 ) 111 % 8 112 ) STORED 113 CHECK (shard IN (0, 1, 2, 3, 4, 5, 6, 7)), 114 event_id 115 UUID, 116 ts 117 TIMESTAMP, 118 data 119 JSONB, 120 PRIMARY KEY ( 121 product_id, 122 owner, 123 serial_number, 124 ts, 125 event_id 126 ), 127 INDEX (shard, ts) 128 ); 129 ``` 130 131 We can see that this is starting to get heavy. The proposal is that we shoulder the burden 132 of hashing and installing a check constraint behind a new syntax. 133 134 Borrowing from [Postgres](https://www.postgresql.org/docs/9.1/indexes-types.html) and [SQL 135 Server](https://docs.microsoft.com/en-us/sql/database-engine/determining-the-correct-bucket-count-for-hash-indexes?view=sql-server-2014), 136 we propose the following syntax: 137 138 Primary index: 139 140 ```sql 141 CREATE TABLE events ( 142 product_id 143 INT8, 144 owner 145 UUID, 146 serial_number 147 VARCHAR, 148 event_id 149 UUID, 150 ts 151 TIMESTAMP, 152 data 153 JSONB, 154 -- Creates a primary index on (shard, product_id, owner, serial_number) 155 -- with a check constraint for `shard in (0...7)` 156 PRIMARY KEY (product_id, owner, serial_number) USING HASH WITH BUCKET_COUNT=8 157 ) 158 ``` 159 160 ```sql 161 CREATE TABLE events ( 162 ts 163 DECIMAL PRIMARY KEY USING HASH WITH BUCKET_COUNT=8, 164 product_id 165 INT8, 166 ... 167 ... 168 ) 169 ``` 170 171 Secondary index: 172 173 ```sql 174 CREATE TABLE events ( 175 product_id 176 INT8, 177 owner 178 UUID, 179 serial_number 180 VARCHAR, 181 event_id 182 UUID, 183 ts 184 TIMESTAMP, 185 data 186 JSONB, 187 PRIMARY KEY (product_id, owner, serial_number, ts, event_id), 188 -- Creates a secondary index on (shard, ts) 189 -- with a check constraint for `shard in (0...7)` 190 INDEX (ts) USING HASH WITH BUCKET_COUNT=8 191 ); 192 ``` 193 ```sql 194 CREATE [UNIQUE] INDEX foo on events (ts) USING HASH WITH BUCKET_COUNT=8 195 ``` 196 197 Here, the new `USING HASH WITH BUCKET_COUNT...` syntax will create a new computed shard 198 column based on the set of columns _in the index_. 199 200 ## Benchmarks 201 202 We consider the following 4 schemas: 203 204 (1) Using hash sharded primary index on `k`. 205 ```sql 206 CREATE TABLE kv ( 207 k INT8 NOT NULL, 208 v bytes NOT NULL, 209 shard 210 INT8 AS (k % 10) STORED 211 CHECK (shard IN (0, 1, 2, 3, 4, 5, 6, 7, 8, 9)), 212 PRIMARY KEY (shard, k) 213 ); 214 ``` 215 216 (2) Using unsharded primary index on `k`. 217 ```sql 218 CREATE TABLE kv ( 219 k INT8 NOT NULL, 220 v BYTES NOT NULL, 221 PRIMARY KEY (k) 222 ); 223 ``` 224 225 (3) Hash sharded primary index on `k` and a secondary index on `v` 226 ```sql 227 CREATE TABLE kv ( 228 k INT8 NOT NULL, 229 v BYTES NOT NULL, 230 shard 231 INT4 AS (k % 10) STORED 232 CHECK (shard IN (0, 1, 2, 3, 4, 5, 6, 7, 8, 9)), 233 PRIMARY KEY (shard, k), 234 INDEX (v) 235 ) 236 ``` 237 238 (4) Primary (unsharded) index on `k` and a secondary index on `v`. 239 ```sql 240 CREATE TABLE kv 241 k INT8 NOT NULL, 242 v BYTES NOT NULL, 243 PRIMARY KEY (k), 244 INDEX (v) 245 ); 246 ``` 247 248 ### Sequential throughput on 5-node cluster 249 250 251 First, we demonstrate sequential write throughput on 252 [kv0bench](https://github.com/cockroachdb/cockroach/pull/42203), which is a `tpcc-bench` 253 style benchmark that searches for the maximum throughput that can be sustained while 254 maintaining an average latency less than a provided threshold, on a sequential `kv0` 255 workload. We ran `kv0bench` with the aforementioned threshold value configured to 256 `10.0ms`. We compare the max sustained throughput under this benchmark on all 4 of the 257 schemas described above. 258 259 The following benchmark was run on a 5 node GCE cluster with `n1-standard-8` machines. 260 261  262 263 ### Throughput scaling 264 265 Now we demonstrate sequential write throughput comparing schemas (1) and (2) from above on 266 increasingly larger cluster sizes. Here we see that we essentially unlock linear scaling 267 on such workloads by preventing a single range hotspot. 268 269  270 271 The following metrics from the CockroachDB Admin UI explain the results seen above by 272 showing an even distribution of queries across nodes in the sharded case, as opposed to 273 the unsharded case where all queries are being serviced by only one of the nodes. 274 275 Sharded: 276 277  278 279 Unsharded: 280 281  282 283 # Guide level explanation 284 285 Refer to [motivations](#Motivations) for a quick overview on how to shard your indexes. 286 287 # Future work 288 289 ## Deriving values of "purely" computed columns when all referenced columns are available 290 291 The optimizer currently doesn't derive the value of a stored computed column even when all 292 the columns that it references are available. This means we have to search all the shards. 293 294 For example, on schema (1) described in [benchmarks](#Benchmarks): 295 296 ``` 297 root@localhost:26257/kv> explain select * from kv where k = 10; 298 tree | field | description 299 +------+-------------+-----------------------------------------------------------------------------------------------------------------+ 300 | distributed | false 301 | vectorized | false 302 scan | | 303 | table | kv@primary 304 | spans | /0/10-/0/10/# /1/10-/1/10/# /2/10-/2/10/# /3/10-/3/10/# /4/10-/4/10/# /5/10-/5/10/# /6/10-/6/10/# /7/10-/7/10/# 305 | parallel | 306 (6 rows) 307 ``` 308 This means that if the shard value isn't plumbed down from the client side, performance 309 suffers in sequential workloads that aren't dominated by writes. 310 311 We ran `kv --sequential --concurrency=256 --read-percent=90` on both those schemas, with 312 the following results: 313 314 On schema (1) 315 316 ``` 317 _elapsed___errors_____ops(total)___ops/sec(cum)__avg(ms)__p50(ms)__p95(ms)__p99(ms)_pMax(ms)__total 318 300.0s 0 6218614 20728.6 11.9 11.0 19.9 26.2 192.9 read 319 _elapsed___errors_____ops(total)___ops/sec(cum)__avg(ms)__p50(ms)__p95(ms)__p99(ms)_pMax(ms)__total 320 300.0s 0 691363 2304.5 4.0 3.0 10.0 14.7 142.6 write 321 ``` 322 323 On schema (2) 324 325 ``` 326 _elapsed___errors_____ops(total)___ops/sec(cum)__avg(ms)__p50(ms)__p95(ms)__p99(ms)_pMax(ms)__total 327 300.0s 0 16702732 55675.5 3.7 2.9 10.5 21.0 385.9 read 328 _elapsed___errors_____ops(total)___ops/sec(cum)__avg(ms)__p50(ms)__p95(ms)__p99(ms)_pMax(ms)__total 329 300.0s 0 1855330 6184.4 8.5 6.6 18.9 56.6 121.6 write 330 ``` 331 332 In our current state, in order to see good performance for reads, the user would have to 333 plumb the shard value down in their queries. In the context of the prototype ([#42922]), 334 this is hard since we choose to keep the shard column hidden and the user doesn't really 335 have a way of knowing _how_ to compute the shard column. However, the idea is that we 336 won't have this limitation in 20.1. 337 338 ## Constraining scans to only search "relevant" shards (avoid full table scan when we can) 339 340 For example, for the following query: 341 342 ```sql 343 SELECT * FROM kv ORDER BY k LIMIT 5; 344 ``` 345 346 We get the following plan: 347 ``` 348 tree | field | description 349 +----------------+-------+-------------+ 350 limit | | 351 │ | count | 5 352 └── sort | | 353 │ | order | +k 354 └── scan | | 355 | table | kv@primary 356 | spans | ALL 357 ``` 358 359 This kind of query could be made faster by pushing the limit down to each partition and 360 then merging them. 361 362 TODO(aayush): add benchmark that demonstrates range scan performance under both of the 363 plans described above. 364 365 # Open Questions 366 367 - **Changing the number of shards in primary key** 368 369 This is not a problem with respect to secondary indexes since the user could simply create 370 a new index with the desired number of shards and then remove the old index. However, 371 there would be no way to change the number of shards in a primary key. How does this tie 372 in with Solon and Rohan's work with regards to being able to change primary keys? 373 374 - **Universal hash function** 375 376 The attached prototype ([#42922]) simply casts every data type to be used in the shard 377 computation to `STRING` in sql and calls `fnv32` on them. We might want to consider a 378 better approach to hash any arbitrary set of SQL data types that is faster. What are the 379 considerations when deciding whether to write a new hash function for what we're trying to 380 do? How would it be better than what we're currently doing (casting to string and then 381 `fnv32/64`)? 382 383 - **SHOW CREATE TABLE** 384 385 A `SHOW CREATE...` statement is supposed to produce syntactically valid SQL and it's 386 output must create the exact same table that it was called on (ie. it must be 387 _roundtripable_). Given this, how much do we want the user to know about what this new 388 syntax does? Broadly speaking, we have two options: 389 390 1. ***Be very explicit about the existence of a computed shard column*** 391 392 For example: 393 394 ```sql 395 CREATE TABLE abc (a INT PRIMARY KEY USING HASH WITH BUCKET_COUNT=4); 396 ``` 397 398 would simply be an alias for 399 400 ```sql 401 CREATE TABLE abc (a INT, a_shard INT AS MOD(hash(a), 4) 402 STORED 403 CHECK (a_shard IN (0,1,2,3))) 404 ``` 405 406 This means that the `SHOW CREATE TABLE` syntax doesn't hide any of what's happening from 407 the user. 408 409 2. ***Keep things transparent from the user*** 410 411 This is the approach that the prototype attached ([#42922]) with this RFC takes. If we 412 choose to go this route, we keep the computed shard column hidden and `SHOW CREATE TABLE` 413 output (roughly) returns the syntax that was used to create it. This also means that the 414 check constraint that is placed on the shard column will also be hidden. 415 416 - **Add ability to specify the column set to be used to compute the shard** 417 418 The proposed syntax `USING HASH WITH BUCKET_COUNT` simply computes the shard value based 419 on the set of columns in the index. As proposed, it doesn't allow the user to specify a 420 different set of columns to compute the shard column with. We could add something like the 421 following syntax for this: 422 423 ```sql 424 CREATE TABLE kv ( 425 k INT PRIMARY KEY USING HASH (ts, k) WITH BUCKET_COUNT=10, 426 v BYTES, 427 ts DECIMAL 428 ); 429 ``` 430 431 This would force the shard column to be computed with `(ts, k)` instead of just `k`. It 432 is, however, hard to think of cases where this kind of functionality would be useful 433 enough to justify the bloated syntax. At a high level, the only reason one would need to 434 specify a different set of columns for computing the shard is if the set of index columns 435 was not sequential. In this case, the user shouldn't be sharding the index in the first 436 place, since load-based splitting should take care of finding viable split points in most 437 other common workload distributions. However, If we can think of a good use case for this 438 kind of thing, we should easily be able to support it. 439 440 [#31413]: https://github.com/cockroachdb/cockroach/pull/31413 441 [#42922]: https://github.com/cockroachdb/cockroach/pull/42922