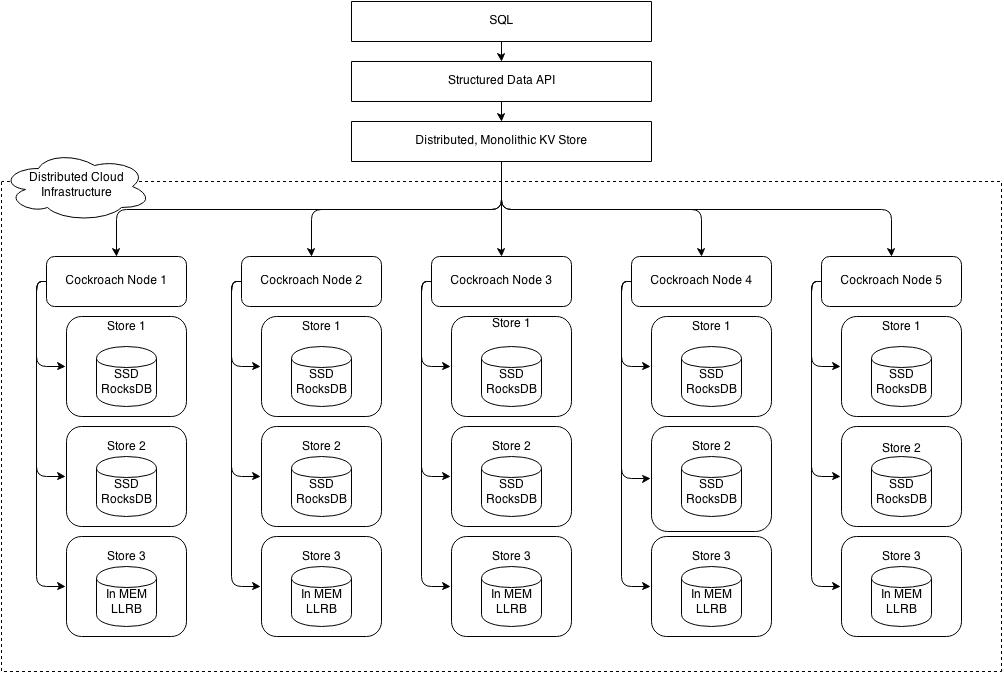

github.com/cockroachdb/cockroach@v20.2.0-alpha.1+incompatible/docs/design.md (about) 1 # About 2 3 This document is an updated version of the original design documents 4 by Spencer Kimball from early 2014. It may not always be completely up to date. 5 For a more approachable explanation of how CockroachDB works, consider reading 6 the [Architecture docs](https://www.cockroachlabs.com/docs/stable/architecture/overview.html). 7 8 # Overview 9 10 CockroachDB is a distributed SQL database. The primary design goals 11 are **scalability**, **strong consistency** and **survivability** 12 (hence the name). CockroachDB aims to tolerate disk, machine, rack, and 13 even **datacenter failures** with minimal latency disruption and **no 14 manual intervention**. CockroachDB nodes are symmetric; a design goal is 15 **homogeneous deployment** (one binary) with minimal configuration and 16 no required external dependencies. 17 18 The entry point for database clients is the SQL interface. Every node 19 in a CockroachDB cluster can act as a client SQL gateway. A SQL 20 gateway transforms and executes client SQL statements to key-value 21 (KV) operations, which the gateway distributes across the cluster as 22 necessary and returns results to the client. CockroachDB implements a 23 **single, monolithic sorted map** from key to value where both keys 24 and values are byte strings. 25 26 The KV map is logically composed of smaller segments of the keyspace called 27 ranges. Each range is backed by data stored in a local KV storage engine (we 28 use [RocksDB](http://rocksdb.org/), a variant of 29 [LevelDB](https://github.com/google/leveldb)). Range data is replicated to a 30 configurable number of additional CockroachDB nodes. Ranges are merged and 31 split to maintain a target size, by default `64M`. The relatively small size 32 facilitates quick repair and rebalancing to address node failures, new capacity 33 and even read/write load. However, the size must be balanced against the 34 pressure on the system from having more ranges to manage. 35 36 CockroachDB achieves horizontally scalability: 37 - adding more nodes increases the capacity of the cluster by the 38 amount of storage on each node (divided by a configurable 39 replication factor), theoretically up to 4 exabytes (4E) of logical 40 data; 41 - client queries can be sent to any node in the cluster, and queries 42 can operate independently (w/o conflicts), meaning that overall 43 throughput is a linear factor of the number of nodes in the cluster. 44 - queries are distributed (ref: distributed SQL) so that the overall 45 throughput of single queries can be increased by adding more nodes. 46 47 CockroachDB achieves strong consistency: 48 - uses a distributed consensus protocol for synchronous replication of 49 data in each key value range. We’ve chosen to use the [Raft 50 consensus algorithm](https://raftconsensus.github.io); all consensus 51 state is stored in RocksDB. 52 - single or batched mutations to a single range are mediated via the 53 range's Raft instance. Raft guarantees ACID semantics. 54 - logical mutations which affect multiple ranges employ distributed 55 transactions for ACID semantics. CockroachDB uses an efficient 56 **non-locking distributed commit** protocol. 57 58 CockroachDB achieves survivability: 59 - range replicas can be co-located within a single datacenter for low 60 latency replication and survive disk or machine failures. They can 61 be distributed across racks to survive some network switch failures. 62 - range replicas can be located in datacenters spanning increasingly 63 disparate geographies to survive ever-greater failure scenarios from 64 datacenter power or networking loss to regional power failures 65 (e.g. `{ US-East-1a, US-East-1b, US-East-1c }`, `{ US-East, US-West, 66 Japan }`, `{ Ireland, US-East, US-West}`, `{ Ireland, US-East, 67 US-West, Japan, Australia }`). 68 69 CockroachDB provides [snapshot 70 isolation](http://en.wikipedia.org/wiki/Snapshot_isolation) (SI) and 71 serializable snapshot isolation (SSI) semantics, allowing **externally 72 consistent, lock-free reads and writes**--both from a historical snapshot 73 timestamp and from the current wall clock time. SI provides lock-free reads 74 and writes but still allows write skew. SSI eliminates write skew, but 75 introduces a performance hit in the case of a contentious system. SSI is the 76 default isolation; clients must consciously decide to trade correctness for 77 performance. CockroachDB implements [a limited form of linearizability 78 ](#strict-serializability-linearizability), providing ordering for any 79 observer or chain of observers. 80 81 Similar to 82 [Spanner](http://static.googleusercontent.com/media/research.google.com/en/us/archive/spanner-osdi2012.pdf) 83 directories, CockroachDB allows configuration of arbitrary zones of data. 84 This allows replication factor, storage device type, and/or datacenter 85 location to be chosen to optimize performance and/or availability. 86 Unlike Spanner, zones are monolithic and don’t allow movement of fine 87 grained data on the level of entity groups. 88 89 # Architecture 90 91 CockroachDB implements a layered architecture. The highest level of 92 abstraction is the SQL layer (currently unspecified in this document). 93 It depends directly on the [*SQL layer*](#sql), 94 which provides familiar relational concepts 95 such as schemas, tables, columns, and indexes. The SQL layer 96 in turn depends on the [distributed key value store](#key-value-api), 97 which handles the details of range addressing to provide the abstraction 98 of a single, monolithic key value store. The distributed KV store 99 communicates with any number of physical cockroach nodes. Each node 100 contains one or more stores, one per physical device. 101 102  103 104 Each store contains potentially many ranges, the lowest-level unit of 105 key-value data. Ranges are replicated using the Raft consensus protocol. 106 The diagram below is a blown up version of stores from four of the five 107 nodes in the previous diagram. Each range is replicated three ways using 108 raft. The color coding shows associated range replicas. 109 110  111 112 Each physical node exports two RPC-based key value APIs: one for 113 external clients and one for internal clients (exposing sensitive 114 operational features). Both services accept batches of requests and 115 return batches of responses. Nodes are symmetric in capabilities and 116 exported interfaces; each has the same binary and may assume any 117 role. 118 119 Nodes and the ranges they provide access to can be arranged with various 120 physical network topologies to make trade offs between reliability and 121 performance. For example, a triplicated (3-way replica) range could have 122 each replica located on different: 123 124 - disks within a server to tolerate disk failures. 125 - servers within a rack to tolerate server failures. 126 - servers on different racks within a datacenter to tolerate rack power/network failures. 127 - servers in different datacenters to tolerate large scale network or power outages. 128 129 Up to `F` failures can be tolerated, where the total number of replicas `N = 2F + 1` (e.g. with 3x replication, one failure can be tolerated; with 5x replication, two failures, and so on). 130 131 # Keys 132 133 Cockroach keys are arbitrary byte arrays. Keys come in two flavors: 134 system keys and table data keys. System keys are used by Cockroach for 135 internal data structures and metadata. Table data keys contain SQL 136 table data (as well as index data). System and table data keys are 137 prefixed in such a way that all system keys sort before any table data 138 keys. 139 140 System keys come in several subtypes: 141 142 - **Global** keys store cluster-wide data such as the "meta1" and 143 "meta2" keys as well as various other system-wide keys such as the 144 node and store ID allocators. 145 - **Store local** keys are used for unreplicated store metadata 146 (e.g. the `StoreIdent` structure). "Unreplicated" indicates that 147 these values are not replicated across multiple stores because the 148 data they hold is tied to the lifetime of the store they are 149 present on. 150 - **Range local** keys store range metadata that is associated with a 151 global key. Range local keys have a special prefix followed by a 152 global key and a special suffix. For example, transaction records 153 are range local keys which look like: 154 `\x01k<global-key>txn-<txnID>`. 155 - **Replicated Range ID local** keys store range metadata that is 156 present on all of the replicas for a range. These keys are updated 157 via Raft operations. Examples include the range lease state and 158 abort span entries. 159 - **Unreplicated Range ID local** keys store range metadata that is 160 local to a replica. The primary examples of such keys are the Raft 161 state and Raft log. 162 163 Table data keys are used to store all SQL data. Table data keys 164 contain internal structure as described in the section on [mapping 165 data between the SQL model and 166 KV](#data-mapping-between-the-sql-model-and-kv). 167 168 # Versioned Values 169 170 Cockroach maintains historical versions of values by storing them with 171 associated commit timestamps. Reads and scans can specify a snapshot 172 time to return the most recent writes prior to the snapshot timestamp. 173 Older versions of values are garbage collected by the system during 174 compaction according to a user-specified expiration interval. In order 175 to support long-running scans (e.g. for MapReduce), all versions have a 176 minimum expiration. 177 178 Versioned values are supported via modifications to RocksDB to record 179 commit timestamps and GC expirations per key. 180 181 # Lock-Free Distributed Transactions 182 183 Cockroach provides distributed transactions without locks. Cockroach 184 transactions support two isolation levels: 185 186 - snapshot isolation (SI) and 187 - *serializable* snapshot isolation (SSI). 188 189 *SI* is simple to implement, highly performant, and correct for all but a 190 handful of anomalous conditions (e.g. write skew). *SSI* requires just a touch 191 more complexity, is still highly performant (less so with contention), and has 192 no anomalous conditions. Cockroach’s SSI implementation is based on ideas from 193 the literature and some possibly novel insights. 194 195 SSI is the default level, with SI provided for application developers 196 who are certain enough of their need for performance and the absence of 197 write skew conditions to consciously elect to use it. In a lightly 198 contended system, our implementation of SSI is just as performant as SI, 199 requiring no locking or additional writes. With contention, our 200 implementation of SSI still requires no locking, but will end up 201 aborting more transactions. Cockroach’s SI and SSI implementations 202 prevent starvation scenarios even for arbitrarily long transactions. 203 204 See the [Cahill paper](https://drive.google.com/file/d/0B9GCVTp_FHJIcEVyZVdDWEpYYXVVbFVDWElrYUV0NHFhU2Fv/edit?usp=sharing) 205 for one possible implementation of SSI. This is another [great paper](http://cs.yale.edu/homes/thomson/publications/calvin-sigmod12.pdf). 206 For a discussion of SSI implemented by preventing read-write conflicts 207 (in contrast to detecting them, called write-snapshot isolation), see 208 the [Yabandeh paper](https://drive.google.com/file/d/0B9GCVTp_FHJIMjJ2U2t6aGpHLTFUVHFnMTRUbnBwc2pLa1RN/edit?usp=sharing), 209 which is the source of much inspiration for Cockroach’s SSI. 210 211 Both SI and SSI require that the outcome of reads must be preserved, i.e. 212 a write of a key at a lower timestamp than a previous read must not succeed. To 213 this end, each range maintains a bounded *in-memory* cache from key range to 214 the latest timestamp at which it was read. 215 216 Most updates to this *timestamp cache* correspond to keys being read, though 217 the timestamp cache also protects the outcome of some writes (notably range 218 deletions) which consequently must also populate the cache. The cache’s entries 219 are evicted oldest timestamp first, updating the low water mark of the cache 220 appropriately. 221 222 Each Cockroach transaction is assigned a random priority and a 223 "candidate timestamp" at start. The candidate timestamp is the 224 provisional timestamp at which the transaction will commit, and is 225 chosen as the current clock time of the node coordinating the 226 transaction. This means that a transaction without conflicts will 227 usually commit with a timestamp that, in absolute time, precedes the 228 actual work done by that transaction. 229 230 In the course of coordinating a transaction between one or more 231 distributed nodes, the candidate timestamp may be increased, but will 232 never be decreased. The core difference between the two isolation levels 233 SI and SSI is that the former allows the transaction's candidate 234 timestamp to increase and the latter does not. 235 236 **Hybrid Logical Clock** 237 238 Each cockroach node maintains a hybrid logical clock (HLC) as discussed 239 in the [Hybrid Logical Clock paper](http://www.cse.buffalo.edu/tech-reports/2014-04.pdf). 240 HLC time uses timestamps which are composed of a physical component (thought of 241 as and always close to local wall time) and a logical component (used to 242 distinguish between events with the same physical component). It allows us to 243 track causality for related events similar to vector clocks, but with less 244 overhead. In practice, it works much like other logical clocks: When events 245 are received by a node, it informs the local HLC about the timestamp supplied 246 with the event by the sender, and when events are sent a timestamp generated by 247 the local HLC is attached. 248 249 For a more in depth description of HLC please read the paper. Our 250 implementation is [here](https://github.com/cockroachdb/cockroach/blob/master/pkg/util/hlc/hlc.go). 251 252 Cockroach picks a Timestamp for a transaction using HLC time. Throughout this 253 document, *timestamp* always refers to the HLC time which is a singleton 254 on each node. The HLC is updated by every read/write event on the node, and 255 the HLC time >= wall time. A read/write timestamp received in a cockroach request 256 from another node is not only used to version the operation, but also updates 257 the HLC on the node. This is useful in guaranteeing that all data read/written 258 on a node is at a timestamp < next HLC time. 259 260 **Transaction execution flow** 261 262 Transactions are executed in two phases: 263 264 1. Start the transaction by selecting a range which is likely to be 265 heavily involved in the transaction and writing a new transaction 266 record to a reserved area of that range with state "PENDING". In 267 parallel write an "intent" value for each datum being written as part 268 of the transaction. These are normal MVCC values, with the addition of 269 a special flag (i.e. “intent”) indicating that the value may be 270 committed after the transaction itself commits. In addition, 271 the transaction id (unique and chosen at txn start time by client) 272 is stored with intent values. The txn id is used to refer to the 273 transaction record when there are conflicts and to make 274 tie-breaking decisions on ordering between identical timestamps. 275 Each node returns the timestamp used for the write (which is the 276 original candidate timestamp in the absence of read/write conflicts); 277 the client selects the maximum from amongst all write timestamps as the 278 final commit timestamp. 279 280 2. Commit the transaction by updating its transaction record. The value 281 of the commit entry contains the candidate timestamp (increased as 282 necessary to accommodate any latest read timestamps). Note that the 283 transaction is considered fully committed at this point and control 284 may be returned to the client. 285 286 In the case of an SI transaction, a commit timestamp which was 287 increased to accommodate concurrent readers is perfectly 288 acceptable and the commit may continue. For SSI transactions, 289 however, a gap between candidate and commit timestamps 290 necessitates transaction restart (note: restart is different than 291 abort--see below). 292 293 After the transaction is committed, all written intents are upgraded 294 in parallel by removing the “intent” flag. The transaction is 295 considered fully committed before this step and does not wait for 296 it to return control to the transaction coordinator. 297 298 In the absence of conflicts, this is the end. Nothing else is necessary 299 to ensure the correctness of the system. 300 301 **Conflict Resolution** 302 303 Things get more interesting when a reader or writer encounters an intent 304 record or newly-committed value in a location that it needs to read or 305 write. This is a conflict, usually causing either of the transactions to 306 abort or restart depending on the type of conflict. 307 308 ***Transaction restart:*** 309 310 This is the usual (and more efficient) type of behaviour and is used 311 except when the transaction was aborted (for instance by another 312 transaction). 313 In effect, that reduces to two cases; the first being the one outlined 314 above: An SSI transaction that finds upon attempting to commit that 315 its commit timestamp has been pushed. The second case involves a transaction 316 actively encountering a conflict, that is, one of its readers or writers 317 encounter data that necessitate conflict resolution 318 (see transaction interactions below). 319 320 When a transaction restarts, it changes its priority and/or moves its 321 timestamp forward depending on data tied to the conflict, and 322 begins anew reusing the same txn id. The prior run of the transaction might 323 have written some write intents, which need to be deleted before the 324 transaction commits, so as to not be included as part of the transaction. 325 These stale write intent deletions are done during the reexecution of the 326 transaction, either implicitly, through writing new intents to 327 the same keys as part of the reexecution of the transaction, or explicitly, 328 by cleaning up stale intents that are not part of the reexecution of the 329 transaction. Since most transactions will end up writing to the same keys, 330 the explicit cleanup run just before committing the transaction is usually 331 a NOOP. 332 333 ***Transaction abort:*** 334 335 This is the case in which a transaction, upon reading its transaction 336 record, finds that it has been aborted. In this case, the transaction 337 can not reuse its intents; it returns control to the client before 338 cleaning them up (other readers and writers would clean up dangling 339 intents as they encounter them) but will make an effort to clean up 340 after itself. The next attempt (if applicable) then runs as a new 341 transaction with **a new txn id**. 342 343 ***Transaction interactions:*** 344 345 There are several scenarios in which transactions interact: 346 347 - **Reader encounters write intent or value with newer timestamp far 348 enough in the future**: This is not a conflict. The reader is free 349 to proceed; after all, it will be reading an older version of the 350 value and so does not conflict. Recall that the write intent may 351 be committed with a later timestamp than its candidate; it will 352 never commit with an earlier one. 353 354 - **Reader encounters write intent or value with newer timestamp in the 355 near future:** In this case, we have to be careful. The newer 356 intent may, in absolute terms, have happened in our read's past if 357 the clock of the writer is ahead of the node serving the values. 358 In that case, we would need to take this value into account, but 359 we just don't know. Hence the transaction restarts, using instead 360 a future timestamp (but remembering a maximum timestamp used to 361 limit the uncertainty window to the maximum clock offset). In fact, 362 this is optimized further; see the details under "choosing a time 363 stamp" below. 364 365 - **Reader encounters write intent with older timestamp**: the reader 366 must follow the intent’s transaction id to the transaction record. 367 If the transaction has already been committed, then the reader can 368 just read the value. If the write transaction has not yet been 369 committed, then the reader has two options. If the write conflict 370 is from an SI transaction, the reader can *push that transaction's 371 commit timestamp into the future* (and consequently not have to 372 read it). This is simple to do: the reader just updates the 373 transaction’s commit timestamp to indicate that when/if the 374 transaction does commit, it should use a timestamp *at least* as 375 high. However, if the write conflict is from an SSI transaction, 376 the reader must compare priorities. If the reader has the higher priority, 377 it pushes the transaction’s commit timestamp (that 378 transaction will then notice its timestamp has been pushed, and 379 restart). If it has the lower or same priority, it retries itself using as 380 a new priority `max(new random priority, conflicting txn’s 381 priority - 1)`. 382 383 - **Writer encounters uncommitted write intent**: 384 If the other write intent has been written by a transaction with a lower 385 priority, the writer aborts the conflicting transaction. If the write 386 intent has a higher or equal priority the transaction retries, using as a new 387 priority *max(new random priority, conflicting txn’s priority - 1)*; 388 the retry occurs after a short, randomized backoff interval. 389 390 - **Writer encounters newer committed value**: 391 The committed value could also be an unresolved write intent made by a 392 transaction that has already committed. The transaction restarts. On restart, 393 the same priority is reused, but the candidate timestamp is moved forward 394 to the encountered value's timestamp. 395 396 - **Writer encounters more recently read key**: 397 The *read timestamp cache* is consulted on each write at a node. If the write’s 398 candidate timestamp is earlier than the low water mark on the cache itself 399 (i.e. its last evicted timestamp) or if the key being written has a read 400 timestamp later than the write’s candidate timestamp, this later timestamp 401 value is returned with the write. A new timestamp forces a transaction 402 restart only if it is serializable. 403 404 **Transaction management** 405 406 Transactions are managed by the client proxy (or gateway in SQL Azure 407 parlance). Unlike in Spanner, writes are not buffered but are sent 408 directly to all implicated ranges. This allows the transaction to abort 409 quickly if it encounters a write conflict. The client proxy keeps track 410 of all written keys in order to resolve write intents asynchronously upon 411 transaction completion. If a transaction commits successfully, all intents 412 are upgraded to committed. In the event a transaction is aborted, all written 413 intents are deleted. The client proxy doesn’t guarantee it will resolve intents. 414 415 In the event the client proxy restarts before the pending transaction is 416 committed, the dangling transaction would continue to "live" until 417 aborted by another transaction. Transactions periodically heartbeat 418 their transaction record to maintain liveness. 419 Transactions encountered by readers or writers with dangling intents 420 which haven’t been heartbeat within the required interval are aborted. 421 In the event the proxy restarts after a transaction commits but before 422 the asynchronous resolution is complete, the dangling intents are upgraded 423 when encountered by future readers and writers and the system does 424 not depend on their timely resolution for correctness. 425 426 An exploration of retries with contention and abort times with abandoned 427 transaction is 428 [here](https://docs.google.com/document/d/1kBCu4sdGAnvLqpT-_2vaTbomNmX3_saayWEGYu1j7mQ/edit?usp=sharing). 429 430 **Transaction Records** 431 432 Please see [pkg/roachpb/data.proto](https://github.com/cockroachdb/cockroach/blob/master/pkg/roachpb/data.proto) for the up-to-date structures, the best entry point being `message Transaction`. 433 434 **Pros** 435 436 - No requirement for reliable code execution to prevent stalled 2PC 437 protocol. 438 - Readers never block with SI semantics; with SSI semantics, they may 439 abort. 440 - Lower latency than traditional 2PC commit protocol (w/o contention) 441 because second phase requires only a single write to the 442 transaction record instead of a synchronous round to all 443 transaction participants. 444 - Priorities avoid starvation for arbitrarily long transactions and 445 always pick a winner from between contending transactions (no 446 mutual aborts). 447 - Writes not buffered at client; writes fail fast. 448 - No read-locking overhead required for *serializable* SI (in contrast 449 to other SSI implementations). 450 - Well-chosen (i.e. less random) priorities can flexibly give 451 probabilistic guarantees on latency for arbitrary transactions 452 (for example: make OLTP transactions 10x less likely to abort than 453 low priority transactions, such as asynchronously scheduled jobs). 454 455 **Cons** 456 457 - Reads from non-lease holder replicas still require a ping to the lease holder 458 to update the *read timestamp cache*. 459 - Abandoned transactions may block contending writers for up to the 460 heartbeat interval, though average wait is likely to be 461 considerably shorter (see [graph in link](https://docs.google.com/document/d/1kBCu4sdGAnvLqpT-_2vaTbomNmX3_saayWEGYu1j7mQ/edit?usp=sharing)). 462 This is likely considerably more performant than detecting and 463 restarting 2PC in order to release read and write locks. 464 - Behavior different than other SI implementations: no first writer 465 wins, and shorter transactions do not always finish quickly. 466 Element of surprise for OLTP systems may be a problematic factor. 467 - Aborts can decrease throughput in a contended system compared with 468 two phase locking. Aborts and retries increase read and write 469 traffic, increase latency and decrease throughput. 470 471 **Choosing a Timestamp** 472 473 A key challenge of reading data in a distributed system with clock offset 474 is choosing a timestamp guaranteed to be greater than the latest 475 timestamp of any committed transaction (in absolute time). No system can 476 claim consistency and fail to read already-committed data. 477 478 Accomplishing consistency for transactions (or just single operations) 479 accessing a single node is easy. The timestamp is assigned by the node 480 itself, so it is guaranteed to be at a greater timestamp than all the 481 existing timestamped data on the node. 482 483 For multiple nodes, the timestamp of the node coordinating the 484 transaction `t` is used. In addition, a maximum timestamp `t+ε` is 485 supplied to provide an upper bound on timestamps for already-committed 486 data (`ε` is the maximum clock offset). As the transaction progresses, any 487 data read which have timestamps greater than `t` but less than `t+ε` 488 cause the transaction to abort and retry with the conflicting timestamp 489 t<sub>c</sub>, where t<sub>c</sub> \> t. The maximum timestamp `t+ε` remains 490 the same. This implies that transaction restarts due to clock uncertainty 491 can only happen on a time interval of length `ε`. 492 493 We apply another optimization to reduce the restarts caused 494 by uncertainty. Upon restarting, the transaction not only takes 495 into account t<sub>c</sub>, but the timestamp of the node at the time 496 of the uncertain read t<sub>node</sub>. The larger of those two timestamps 497 t<sub>c</sub> and t<sub>node</sub> (likely equal to the latter) is used 498 to increase the read timestamp. Additionally, the conflicting node is 499 marked as “certain”. Then, for future reads to that node within the 500 transaction, we set `MaxTimestamp = Read Timestamp`, preventing further 501 uncertainty restarts. 502 503 Correctness follows from the fact that we know that at the time of the read, 504 there exists no version of any key on that node with a higher timestamp than 505 t<sub>node</sub>. Upon a restart caused by the node, if the transaction 506 encounters a key with a higher timestamp, it knows that in absolute time, 507 the value was written after t<sub>node</sub> was obtained, i.e. after the 508 uncertain read. Hence the transaction can move forward reading an older version 509 of the data (at the transaction's timestamp). This limits the time uncertainty 510 restarts attributed to a node to at most one. The tradeoff is that we might 511 pick a timestamp larger than the optimal one (> highest conflicting timestamp), 512 resulting in the possibility of a few more conflicts. 513 514 We expect retries will be rare, but this assumption may need to be 515 revisited if retries become problematic. Note that this problem does not 516 apply to historical reads. An alternate approach which does not require 517 retries makes a round to all node participants in advance and 518 chooses the highest reported node wall time as the timestamp. However, 519 knowing which nodes will be accessed in advance is difficult and 520 potentially limiting. Cockroach could also potentially use a global 521 clock (Google did this with [Percolator](https://www.usenix.org/legacy/event/osdi10/tech/full_papers/Peng.pdf)), 522 which would be feasible for smaller, geographically-proximate clusters. 523 524 # Strict Serializability (Linearizability) 525 526 Roughly speaking, the gap between <i>strict serializability</i> (which we use 527 interchangeably with <i>linearizability</i>) and CockroachDB's default 528 isolation level (<i>serializable</i>) is that with linearizable transactions, 529 causality is preserved. That is, if one transaction (say, creating a posting 530 for a user) waits for its predecessor (creating the user in the first place) 531 to complete, one would hope that the logical timestamp assigned to the former 532 is larger than that of the latter. 533 In practice, in distributed databases this may not hold, the reason typically 534 being that clocks across a distributed system are not perfectly synchronized 535 and the "later" transaction touches a part disjoint from that on which the 536 first transaction ran, resulting in clocks with disjoint information to decide 537 on the commit timestamps. 538 539 In practice, in CockroachDB many transactional workloads are actually 540 linearizable, though the precise conditions are too involved to outline them 541 here. 542 543 Causality is typically not required for many transactions, and so it is 544 advantageous to pay for it only when it *is* needed. CockroachDB implements 545 this via <i>causality tokens</i>: When committing a transaction, a causality 546 token can be retrieved and passed to the next transaction, ensuring that these 547 two transactions get assigned increasing logical timestamps. 548 549 Additionally, as better synchronized clocks become a standard commodity offered 550 by cloud providers, CockroachDB can provide global linearizability by doing 551 much the same that [Google's 552 Spanner](http://research.google.com/archive/spanner.html) does: wait out the 553 maximum clock offset after committing, but before returning to the client. 554 555 See the blog post below for much more in-depth information. 556 557 https://www.cockroachlabs.com/blog/living-without-atomic-clocks/ 558 559 # Logical Map Content 560 561 Logically, the map contains a series of reserved system key/value 562 pairs preceding the actual user data (which is managed by the SQL 563 subsystem). 564 565 - `\x02<key1>`: Range metadata for range ending `\x03<key1>`. This a "meta1" key. 566 - ... 567 - `\x02<keyN>`: Range metadata for range ending `\x03<keyN>`. This a "meta1" key. 568 - `\x03<key1>`: Range metadata for range ending `<key1>`. This a "meta2" key. 569 - ... 570 - `\x03<keyN>`: Range metadata for range ending `<keyN>`. This a "meta2" key. 571 - `\x04{desc,node,range,store}-idegen`: ID generation oracles for various component types. 572 - `\x04status-node-<varint encoded Store ID>`: Store runtime metadata. 573 - `\x04tsd<key>`: Time-series data key. 574 - `<key>`: A user key. In practice, these keys are managed by the SQL 575 subsystem, which employs its own key anatomy. 576 577 # Stores and Storage 578 579 Nodes contain one or more stores. Each store should be placed on a unique disk. 580 Internally, each store contains a single instance of RocksDB with a block cache 581 shared amongst all of the stores in a node. And these stores in turn have 582 a collection of range replicas. More than one replica for a range will never 583 be placed on the same store or even the same node. 584 585 Early on, when a cluster is first initialized, the few default starting ranges 586 will only have a single replica, but as soon as other nodes are available they 587 will replicate to them until they've reached their desired replication factor, 588 the default being 3. 589 590 Zone configs can be used to control a range's replication factor and add 591 constraints as to where the range's replicas can be located. When there is a 592 change in a range's zone config, the range will up or down replicate to the 593 appropriate number of replicas and move its replicas to the appropriate stores 594 based on zone config's constraints. 595 596 # Self Repair 597 598 If a store has not been heard from (gossiped their descriptors) in some time, 599 the default setting being 5 minutes, the cluster will consider this store to be 600 dead. When this happens, all ranges that have replicas on that store are 601 determined to be unavailable and removed. These ranges will then upreplicate 602 themselves to other available stores until their desired replication factor is 603 again met. If 50% or more of the replicas are unavailable at the same time, 604 there is no quorum and the whole range will be considered unavailable until at 605 least greater than 50% of the replicas are again available. 606 607 # Rebalancing 608 609 As more data are added to the system, some stores may grow faster than others. 610 To combat this and to spread the overall load across the full cluster, replicas 611 will be moved between stores maintaining the desired replication factor. The 612 heuristics used to perform this rebalancing include: 613 614 - the number of replicas per store 615 - the total size of the data used per store 616 - free space available per store 617 618 In the future, some other factors that might be considered include: 619 620 - cpu/network load per store 621 - ranges that are used together often in queries 622 - number of active ranges per store 623 - number of range leases held per store 624 625 # Range Metadata 626 627 The default approximate size of a range is 64M (2\^26 B). In order to 628 support 1P (2\^50 B) of logical data, metadata is needed for roughly 629 2\^(50 - 26) = 2\^24 ranges. A reasonable upper bound on range metadata 630 size is roughly 256 bytes (3\*12 bytes for the triplicated node 631 locations and 220 bytes for the range key itself). 2\^24 ranges \* 2\^8 632 B would require roughly 4G (2\^32 B) to store--too much to duplicate 633 between machines. Our conclusion is that range metadata must be 634 distributed for large installations. 635 636 To keep key lookups relatively fast in the presence of distributed metadata, 637 we store all the top-level metadata in a single range (the first range). These 638 top-level metadata keys are known as *meta1* keys, and are prefixed such that 639 they sort to the beginning of the key space. Given the metadata size of 256 640 bytes given above, a single 64M range would support 64M/256B = 2\^18 ranges, 641 which gives a total storage of 64M \* 2\^18 = 16T. To support the 1P quoted 642 above, we need two levels of indirection, where the first level addresses the 643 second, and the second addresses user data. With two levels of indirection, we 644 can address 2\^(18 + 18) = 2\^36 ranges; each range addresses 2\^26 B, and 645 altogether we address 2\^(36+26) B = 2\^62 B = 4E of user data. 646 647 For a given user-addressable `key1`, the associated *meta1* record is found 648 at the successor key to `key1` in the *meta1* space. Since the *meta1* space 649 is sparse, the successor key is defined as the next key which is present. The 650 *meta1* record identifies the range containing the *meta2* record, which is 651 found using the same process. The *meta2* record identifies the range 652 containing `key1`, which is again found the same way (see examples below). 653 654 Concretely, metadata keys are prefixed by `\x02` (meta1) and `\x03` 655 (meta2); the prefixes `\x02` and `\x03` provide for the desired 656 sorting behaviour. Thus, `key1`'s *meta1* record will reside at the 657 successor key to `\x02<key1>`. 658 659 Note: we append the end key of each range to meta{1,2} records because 660 the RocksDB iterator only supports a Seek() interface which acts as a 661 Ceil(). Using the start key of the range would cause Seek() to find the 662 key *after* the meta indexing record we’re looking for, which would 663 result in having to back the iterator up, an option which is both less 664 efficient and not available in all cases. 665 666 The following example shows the directory structure for a map with 667 three ranges worth of data. Ellipses indicate additional key/value 668 pairs to fill an entire range of data. For clarity, the examples use 669 `meta1` and `meta2` to refer to the prefixes `\x02` and `\x03`. Except 670 for the fact that splitting ranges requires updates to the range 671 metadata with knowledge of the metadata layout, the range metadata 672 itself requires no special treatment or bootstrapping. 673 674 **Range 0** (located on servers `dcrama1:8000`, `dcrama2:8000`, 675 `dcrama3:8000`) 676 677 - `meta1\xff`: `dcrama1:8000`, `dcrama2:8000`, `dcrama3:8000` 678 - `meta2<lastkey0>`: `dcrama1:8000`, `dcrama2:8000`, `dcrama3:8000` 679 - `meta2<lastkey1>`: `dcrama4:8000`, `dcrama5:8000`, `dcrama6:8000` 680 - `meta2\xff`: `dcrama7:8000`, `dcrama8:8000`, `dcrama9:8000` 681 - ... 682 - `<lastkey0>`: `<lastvalue0>` 683 684 **Range 1** (located on servers `dcrama4:8000`, `dcrama5:8000`, 685 `dcrama6:8000`) 686 687 - ... 688 - `<lastkey1>`: `<lastvalue1>` 689 690 **Range 2** (located on servers `dcrama7:8000`, `dcrama8:8000`, 691 `dcrama9:8000`) 692 693 - ... 694 - `<lastkey2>`: `<lastvalue2>` 695 696 Consider a simpler example of a map containing less than a single 697 range of data. In this case, all range metadata and all data are 698 located in the same range: 699 700 **Range 0** (located on servers `dcrama1:8000`, `dcrama2:8000`, 701 `dcrama3:8000`)* 702 703 - `meta1\xff`: `dcrama1:8000`, `dcrama2:8000`, `dcrama3:8000` 704 - `meta2\xff`: `dcrama1:8000`, `dcrama2:8000`, `dcrama3:8000` 705 - `<key0>`: `<value0>` 706 - `...` 707 708 Finally, a map large enough to need both levels of indirection would 709 look like (note that instead of showing range replicas, this 710 example is simplified to just show range indexes): 711 712 **Range 0** 713 714 - `meta1<lastkeyN-1>`: Range 0 715 - `meta1\xff`: Range 1 716 - `meta2<lastkey1>`: Range 1 717 - `meta2<lastkey2>`: Range 2 718 - `meta2<lastkey3>`: Range 3 719 - ... 720 - `meta2<lastkeyN-1>`: Range 262143 721 722 **Range 1** 723 724 - `meta2<lastkeyN>`: Range 262144 725 - `meta2<lastkeyN+1>`: Range 262145 726 - ... 727 - `meta2\xff`: Range 500,000 728 - ... 729 - `<lastkey1>`: `<lastvalue1>` 730 731 **Range 2** 732 733 - ... 734 - `<lastkey2>`: `<lastvalue2>` 735 736 **Range 3** 737 738 - ... 739 - `<lastkey3>`: `<lastvalue3>` 740 741 **Range 262144** 742 743 - ... 744 - `<lastkeyN>`: `<lastvalueN>` 745 746 **Range 262145** 747 748 - ... 749 - `<lastkeyN+1>`: `<lastvalueN+1>` 750 751 Note that the choice of range `262144` is just an approximation. The 752 actual number of ranges addressable via a single metadata range is 753 dependent on the size of the keys. If efforts are made to keep key sizes 754 small, the total number of addressable ranges would increase and vice 755 versa. 756 757 From the examples above it’s clear that key location lookups require at 758 most three reads to get the value for `<key>`: 759 760 1. lower bound of `meta1<key>` 761 2. lower bound of `meta2<key>`, 762 3. `<key>`. 763 764 For small maps, the entire lookup is satisfied in a single RPC to Range 0. Maps 765 containing less than 16T of data would require two lookups. Clients cache both 766 levels of range metadata, and we expect that data locality for individual 767 clients will be high. Clients may end up with stale cache entries. If on a 768 lookup, the range consulted does not match the client’s expectations, the 769 client evicts the stale entries and possibly does a new lookup. 770 771 # Raft - Consistency of Range Replicas 772 773 Each range is configured to consist of three or more replicas, as specified by 774 their ZoneConfig. The replicas in a range maintain their own instance of a 775 distributed consensus algorithm. We use the [*Raft consensus algorithm*](https://raftconsensus.github.io) 776 as it is simpler to reason about and includes a reference implementation 777 covering important details. 778 [ePaxos](https://www.cs.cmu.edu/~dga/papers/epaxos-sosp2013.pdf) has 779 promising performance characteristics for WAN-distributed replicas, but 780 it does not guarantee a consistent ordering between replicas. 781 782 Raft elects a relatively long-lived leader which must be involved to 783 propose commands. It heartbeats followers periodically and keeps their logs 784 replicated. In the absence of heartbeats, followers become candidates 785 after randomized election timeouts and proceed to hold new leader 786 elections. Cockroach weights random timeouts such that the replicas with 787 shorter round trip times to peers are more likely to hold elections 788 first (not implemented yet). Only the Raft leader may propose commands; 789 followers will simply relay commands to the last known leader. 790 791 Our Raft implementation was developed together with CoreOS, but adds an extra 792 layer of optimization to account for the fact that a single Node may have 793 millions of consensus groups (one for each Range). Areas of optimization 794 are chiefly coalesced heartbeats (so that the number of nodes dictates the 795 number of heartbeats as opposed to the much larger number of ranges) and 796 batch processing of requests. 797 Future optimizations may include two-phase elections and quiescent ranges 798 (i.e. stopping traffic completely for inactive ranges). 799 800 # Range Leases 801 802 As outlined in the Raft section, the replicas of a Range are organized as a 803 Raft group and execute commands from their shared commit log. Going through 804 Raft is an expensive operation though, and there are tasks which should only be 805 carried out by a single replica at a time (as opposed to all of them). 806 In particular, it is desirable to serve authoritative reads from a single 807 Replica (ideally from more than one, but that is far more difficult). 808 809 For these reasons, Cockroach introduces the concept of **Range Leases**: 810 This is a lease held for a slice of (database, i.e. hybrid logical) time. 811 A replica establishes itself as owning the lease on a range by committing 812 a special lease acquisition log entry through raft. The log entry contains 813 the replica node's epoch from the node liveness table--a system 814 table containing an epoch and an expiration time for each node. A node is 815 responsible for continuously updating the expiration time for its entry 816 in the liveness table. Once the lease has been committed through raft 817 the replica becomes the lease holder as soon as it applies the lease 818 acquisition command, guaranteeing that when it uses the lease it has 819 already applied all prior writes on the replica and can see them locally. 820 821 To prevent two nodes from acquiring the lease, the requestor includes a copy 822 of the lease that it believes to be valid at the time it requests the lease. 823 If that lease is still valid when the new lease is applied, it is granted, 824 or another lease is granted in the interim and the requested lease is 825 ignored. A lease can move from node A to node B only after node A's 826 liveness record has expired and its epoch has been incremented. 827 828 Note: range leases for ranges within the node liveness table keyspace and 829 all ranges that precede it, including meta1 and meta2, are not managed using 830 the above mechanism to prevent circular dependencies. 831 832 A replica holding a lease at a specific epoch can use the lease as long as 833 the node epoch hasn't changed and the expiration time hasn't passed. 834 The replica holding the lease may satisfy reads locally, without incurring the 835 overhead of going through Raft, and is in charge or involved in handling 836 Range-specific maintenance tasks such as splitting, merging and rebalancing 837 838 All Reads and writes are generally addressed to the replica holding 839 the lease; if none does, any replica may be addressed, causing it to try 840 to obtain the lease synchronously. Requests received by a non-lease holder 841 (for the HLC timestamp specified in the request's header) fail with an 842 error pointing at the replica's last known lease holder. These requests 843 are retried transparently with the updated lease by the gateway node and 844 never reach the client. 845 846 ## Colocation with Raft leadership 847 848 The range lease is completely separate from Raft leadership, and so without 849 further efforts, Raft leadership and the Range lease might not be held by the 850 same Replica. Since it's expensive to not have these two roles colocated (the 851 lease holder has to forward each proposal to the leader, adding costly RPC 852 round-trips), each lease renewal or transfer also attempts to colocate them. 853 In practice, that means that the mismatch is rare and self-corrects quickly. 854 855 ## Command Execution Flow 856 857 This subsection describes how a lease holder replica processes a 858 read/write command in more details. Each command specifies (1) a key 859 (or a range of keys) that the command accesses and (2) the ID of a 860 range which the key(s) belongs to. When receiving a command, a node 861 looks up a range by the specified Range ID and checks if the range is 862 still responsible for the supplied keys. If any of the keys do not 863 belong to the range, the node returns an error so that the client will 864 retry and send a request to a correct range. 865 866 When all the keys belong to the range, the node attempts to 867 process the command. If the command is an inconsistent read-only 868 command, it is processed immediately. If the command is a consistent 869 read or a write, the command is executed when both of the following 870 conditions hold: 871 872 - The range replica has a range lease. 873 - There are no other running commands whose keys overlap with 874 the submitted command and cause read/write conflict. 875 876 When the first condition is not met, the replica attempts to acquire 877 a lease or returns an error so that the client will redirect the 878 command to the current lease holder. The second condition guarantees that 879 consistent read/write commands for a given key are sequentially 880 executed. 881 882 When the above two conditions are met, the lease holder replica processes the 883 command. Consistent reads are processed on the lease holder immediately. 884 Write commands are committed into the Raft log so that every replica 885 will execute the same commands. All commands produce deterministic 886 results so that the range replicas keep consistent states among them. 887 888 When a write command completes, all the replica updates their response 889 cache to ensure idempotency. When a read command completes, the lease holder 890 replica updates its timestamp cache to keep track of the latest read 891 for a given key. 892 893 There is a chance that a range lease gets expired while a command is 894 executed. Before executing a command, each replica checks if a replica 895 proposing the command has a still lease. When the lease has been 896 expired, the command will be rejected by the replica. 897 898 899 # Splitting / Merging Ranges 900 901 Nodes split or merge ranges based on whether they exceed maximum or 902 minimum thresholds for capacity or load. Ranges exceeding maximums for 903 either capacity or load are split; ranges below minimums for *both* 904 capacity and load are merged. 905 906 Ranges maintain the same accounting statistics as accounting key 907 prefixes. These boil down to a time series of data points with minute 908 granularity. Everything from number of bytes to read/write queue sizes. 909 Arbitrary distillations of the accounting stats can be determined as the 910 basis for splitting / merging. Two sensible metrics for use with 911 split/merge are range size in bytes and IOps. A good metric for 912 rebalancing a replica from one node to another would be total read/write 913 queue wait times. These metrics are gossipped, with each range / node 914 passing along relevant metrics if they’re in the bottom or top of the 915 range it’s aware of. 916 917 A range finding itself exceeding either capacity or load threshold 918 splits. To this end, the range lease holder computes an appropriate split key 919 candidate and issues the split through Raft. In contrast to splitting, 920 merging requires a range to be below the minimum threshold for both 921 capacity *and* load. A range being merged chooses the smaller of the 922 ranges immediately preceding and succeeding it. 923 924 Splitting, merging, rebalancing and recovering all follow the same basic 925 algorithm for moving data between roach nodes. New target replicas are 926 created and added to the replica set of source range. Then each new 927 replica is brought up to date by either replaying the log in full or 928 copying a snapshot of the source replica data and then replaying the log 929 from the timestamp of the snapshot to catch up fully. Once the new 930 replicas are fully up to date, the range metadata is updated and old, 931 source replica(s) deleted if applicable. 932 933 **Coordinator** (lease holder replica) 934 935 ``` 936 if splitting 937 SplitRange(split_key): splits happen locally on range replicas and 938 only after being completed locally, are moved to new target replicas. 939 else if merging 940 Choose new replicas on same servers as target range replicas; 941 add to replica set. 942 else if rebalancing || recovering 943 Choose new replica(s) on least loaded servers; add to replica set. 944 ``` 945 946 **New Replica** 947 948 *Bring replica up to date:* 949 950 ``` 951 if all info can be read from replicated log 952 copy replicated log 953 else 954 snapshot source replica 955 send successive ReadRange requests to source replica 956 referencing snapshot 957 958 if merging 959 combine ranges on all replicas 960 else if rebalancing || recovering 961 remove old range replica(s) 962 ``` 963 964 Nodes split ranges when the total data in a range exceeds a 965 configurable maximum threshold. Similarly, ranges are merged when the 966 total data falls below a configurable minimum threshold. 967 968 **TBD: flesh this out**: Especially for merges (but also rebalancing) we have a 969 range disappearing from the local node; that range needs to disappear 970 gracefully, with a smooth handoff of operation to the new owner of its data. 971 972 Ranges are rebalanced if a node determines its load or capacity is one 973 of the worst in the cluster based on gossipped load stats. A node with 974 spare capacity is chosen in the same datacenter and a special-case split 975 is done which simply duplicates the data 1:1 and resets the range 976 configuration metadata. 977 978 # Node Allocation (via Gossip) 979 980 New nodes must be allocated when a range is split. Instead of requiring 981 every node to know about the status of all or even a large number 982 of peer nodes --or-- alternatively requiring a specialized curator or 983 master with sufficiently global knowledge, we use a gossip protocol to 984 efficiently communicate only interesting information between all of the 985 nodes in the cluster. What’s interesting information? One example would 986 be whether a particular node has a lot of spare capacity. Each node, 987 when gossiping, compares each topic of gossip to its own state. If its 988 own state is somehow “more interesting” than the least interesting item 989 in the topic it’s seen recently, it includes its own state as part of 990 the next gossip session with a peer node. In this way, a node with 991 capacity sufficiently in excess of the mean quickly becomes discovered 992 by the entire cluster. To avoid piling onto outliers, nodes from the 993 high capacity set are selected at random for allocation. 994 995 The gossip protocol itself contains two primary components: 996 997 - **Peer Selection**: each node maintains up to N peers with which it 998 regularly communicates. It selects peers with an eye towards 999 maximizing fanout. A peer node which itself communicates with an 1000 array of otherwise unknown nodes will be selected over one which 1001 communicates with a set containing significant overlap. Each time 1002 gossip is initiated, each nodes’ set of peers is exchanged. Each 1003 node is then free to incorporate the other’s peers as it sees fit. 1004 To avoid any node suffering from excess incoming requests, a node 1005 may refuse to answer a gossip exchange. Each node is biased 1006 towards answering requests from nodes without significant overlap 1007 and refusing requests otherwise. 1008 1009 Peers are efficiently selected using a heuristic as described in 1010 [Agarwal & Trachtenberg (2006)](https://drive.google.com/file/d/0B9GCVTp_FHJISmFRTThkOEZSM1U/edit?usp=sharing). 1011 1012 **TBD**: how to avoid partitions? Need to work out a simulation of 1013 the protocol to tune the behavior and see empirically how well it 1014 works. 1015 1016 - **Gossip Selection**: what to communicate. Gossip is divided into 1017 topics. Load characteristics (capacity per disk, cpu load, and 1018 state [e.g. draining, ok, failure]) are used to drive node 1019 allocation. Range statistics (range read/write load, missing 1020 replicas, unavailable ranges) and network topology (inter-rack 1021 bandwidth/latency, inter-datacenter bandwidth/latency, subnet 1022 outages) are used for determining when to split ranges, when to 1023 recover replicas vs. wait for network connectivity, and for 1024 debugging / sysops. In all cases, a set of minimums and a set of 1025 maximums is propagated; each node applies its own view of the 1026 world to augment the values. Each minimum and maximum value is 1027 tagged with the reporting node and other accompanying contextual 1028 information. Each topic of gossip has its own protobuf to hold the 1029 structured data. The number of items of gossip in each topic is 1030 limited by a configurable bound. 1031 1032 For efficiency, nodes assign each new item of gossip a sequence 1033 number and keep track of the highest sequence number each peer 1034 node has seen. Each round of gossip communicates only the delta 1035 containing new items. 1036 1037 # Node and Cluster Metrics 1038 1039 Every component of the system is responsible for exporting interesting 1040 metrics about itself. These could be histograms, throughput counters, or 1041 gauges. 1042 1043 These metrics are exported for external monitoring systems (such as Prometheus) 1044 via a HTTP endpoint, but CockroachDB also implements an internal timeseries 1045 database which is stored in the replicated key-value map. 1046 1047 Time series are stored at Store granularity and allow the admin dashboard 1048 to efficiently gain visibility into a universe of information at the Cluster, 1049 Node or Store level. A [periodic background process](RFCS/20160901_time_series_culling.md) 1050 culls older timeseries data, downsampling and eventually discarding it. 1051 1052 # Zones 1053 1054 Zones provide a method for configuring the replication of portions of the 1055 keyspace. Zone values specify a protobuf containing 1056 the datacenters from which replicas for ranges which fall under 1057 the zone must be chosen. 1058 1059 Please see 1060 [pkg/config/zone.proto](https://github.com/cockroachdb/cockroach/blob/master/pkg/config/zone.proto) 1061 for up-to-date data structures used, the best entry point being 1062 `message ZoneConfig`. 1063 1064 If zones are modified in situ, each node verifies the 1065 existing zones for its ranges against the zone configuration. If 1066 it discovers differences, it reconfigures ranges in the same way 1067 that it rebalances away from busy nodes, via special-case 1:1 1068 split to a duplicate range comprising the new configuration. 1069 1070 # SQL 1071 1072 Each node in a cluster can accept SQL client connections. CockroachDB 1073 supports the PostgreSQL wire protocol, to enable reuse of native 1074 PostgreSQL client drivers. Connections using SSL and authenticated 1075 using client certificates are supported and even encouraged over 1076 unencrypted (insecure) and password-based connections. 1077 1078 Each connection is associated with a SQL session which holds the 1079 server-side state of the connection. Over the lifespan of a session 1080 the client can send SQL to open/close transactions, issue statements 1081 or queries or configure session parameters, much like with any other 1082 SQL database. 1083 1084 ## Language support 1085 1086 CockroachDB also attempts to emulate the flavor of SQL supported by 1087 PostgreSQL, although it also diverges in significant ways: 1088 1089 - CockroachDB exclusively implements MVCC-based consistency for 1090 transactions, and thus only supports SQL's isolation levels SNAPSHOT 1091 and SERIALIZABLE. The other traditional SQL isolation levels are 1092 internally mapped to either SNAPSHOT or SERIALIZABLE. 1093 1094 - CockroachDB implements its own [SQL type system](RFCS/20160203_typing.md) 1095 which only supports a limited form of implicit coercions between 1096 types compared to PostgreSQL. The rationale is to keep the 1097 implementation simple and efficient, capitalizing on the observation 1098 that 1) most SQL code in clients is automatically generated with 1099 coherent typing already and 2) existing SQL code for other databases 1100 will need to be massaged for CockroachDB anyways. 1101 1102 ## SQL architecture 1103 1104 Client connections over the network are handled in each node by a 1105 pgwire server process (goroutine). This handles the stream of incoming 1106 commands and sends back responses including query/statement results. 1107 The pgwire server also handles pgwire-level prepared statements, 1108 binding prepared statements to arguments and looking up prepared 1109 statements for execution. 1110 1111 Meanwhile the state of a SQL connection is maintained by a Session 1112 object and a monolithic `planner` object (one per connection) which 1113 coordinates execution between the session, the current SQL transaction 1114 state and the underlying KV store. 1115 1116 Upon receiving a query/statement (either directly or via an execute 1117 command for a previously prepared statement) the pgwire server forwards 1118 the SQL text to the `planner` associated with the connection. The SQL 1119 code is then transformed into a SQL query plan. 1120 The query plan is implemented as a tree of objects which describe the 1121 high-level data operations needed to resolve the query, for example 1122 "join", "index join", "scan", "group", etc. 1123 1124 The query plan objects currently also embed the run-time state needed 1125 for the execution of the query plan. Once the SQL query plan is ready, 1126 methods on these objects then carry the execution out in the fashion 1127 of "generators" in other programming languages: each node *starts* its 1128 children nodes and from that point forward each child node serves as a 1129 *generator* for a stream of result rows, which the parent node can 1130 consume and transform incrementally and present to its own parent node 1131 also as a generator. 1132 1133 The top-level planner consumes the data produced by the top node of 1134 the query plan and returns it to the client via pgwire. 1135 1136 ## Data mapping between the SQL model and KV 1137 1138 Every SQL table has a primary key in CockroachDB. (If a table is created 1139 without one, an implicit primary key is provided automatically.) 1140 The table identifier, followed by the value of the primary key for 1141 each row, are encoded as the *prefix* of a key in the underlying KV 1142 store. 1143 1144 Each remaining column or *column family* in the table is then encoded 1145 as a value in the underlying KV store, and the column/family identifier 1146 is appended as *suffix* to the KV key. 1147 1148 For example: 1149 1150 - after table `customers` is created in a database `mydb` with a 1151 primary key column `name` and normal columns `address` and `URL`, the KV pairs 1152 to store the schema would be: 1153 1154 | Key | Values | 1155 | ---------------------------- | ------ | 1156 | `/system/databases/mydb/id` | 51 | 1157 | `/system/tables/customer/id` | 42 | 1158 | `/system/desc/51/42/address` | 69 | 1159 | `/system/desc/51/42/url` | 66 | 1160 1161 (The numeric values on the right are chosen arbitrarily for the 1162 example; the structure of the schema keys on the left is simplified 1163 for the example and subject to change.) Each database/table/column 1164 name is mapped to a spontaneously generated identifier, so as to 1165 simplify renames. 1166 1167 Then for a single row in this table: 1168 1169 | Key | Values | 1170 | ----------------- | -------------------------------- | 1171 | `/51/42/Apple/69` | `1 Infinite Loop, Cupertino, CA` | 1172 | `/51/42/Apple/66` | `http://apple.com/` | 1173 1174 Each key has the table prefix `/51/42` followed by the primary key 1175 prefix `/Apple` followed by the column/family suffix (`/66`, 1176 `/69`). The KV value is directly encoded from the SQL value. 1177 1178 Efficient storage for the keys is guaranteed by the underlying RocksDB engine 1179 by means of prefix compression. 1180 1181 Finally, for SQL indexes, the KV key is formed using the SQL value of the 1182 indexed columns, and the KV value is the KV key prefix of the rest of 1183 the indexed row. 1184 1185 ## Distributed SQL 1186 1187 Dist-SQL is a new execution framework being developed as of Q3 2016 with the 1188 goal of distributing the processing of SQL queries. 1189 See the [Distributed SQL 1190 RFC](RFCS/20160421_distributed_sql.md) 1191 for a detailed design of the subsystem; this section will serve as a summary. 1192 1193 Distributing the processing is desirable for multiple reasons: 1194 - Remote-side filtering: when querying for a set of rows that match a filtering 1195 expression, instead of querying all the keys in certain ranges and processing 1196 the filters after receiving the data on the gateway node over the network, 1197 we'd like the filtering expression to be processed by the lease holder or 1198 remote node, saving on network traffic and related processing. 1199 - For statements like `UPDATE .. WHERE` and `DELETE .. WHERE` we want to 1200 perform the query and the updates on the node which has the data (as opposed 1201 to receiving results at the gateway over the network, and then performing the 1202 update or deletion there, which involves additional round-trips). 1203 - Parallelize SQL computation: when significant computation is required, we 1204 want to distribute it to multiple node, so that it scales with the amount of 1205 data involved. This applies to `JOIN`s, aggregation, sorting. 1206 1207 The approach we took was originally inspired by 1208 [Sawzall](https://cloud.google.com/dataflow/model/programming-model) - a 1209 project by Rob Pike et al. at Google that proposes a "shell" (high-level 1210 language interpreter) to ease the exploitation of MapReduce. It provides a 1211 clear separation between "local" processes which process a limited amount of 1212 data and distributed computations, which are abstracted away behind a 1213 restricted set of conceptual constructs. 1214 1215 To run SQL statements in a distributed fashion, we introduce a couple of concepts: 1216 - _logical plan_ - similar on the surface to the `planNode` tree described in 1217 the [SQL](#sql) section, it represents the abstract (non-distributed) data flow 1218 through computation stages. 1219 - _physical plan_ - a physical plan is conceptually a mapping of the _logical 1220 plan_ nodes to CockroachDB nodes. Logical plan nodes are replicated and 1221 specialized depending on the cluster topology. The components of the physical 1222 plan are scheduled and run on the cluster. 1223 1224 ## Logical planning 1225 1226 The logical plan is made up of _aggregators_. Each _aggregator_ consumes an 1227 _input stream_ of rows (or multiple streams for joins) and produces an _output 1228 stream_ of rows. Both the input and the output streams have a set schema. The 1229 streams are a logical concept and might not map to a single data stream in the 1230 actual computation. Aggregators will be potentially distributed when converting 1231 the *logical plan* to a *physical plan*; to express what distribution and 1232 parallelization is allowed, an aggregator defines a _grouping_ on the data that 1233 flows through it, expressing which rows need to be processed on the same node 1234 (this mechanism constraints rows matching in a subset of columns to be 1235 processed on the same node). This concept is useful for aggregators that need 1236 to see some set of rows for producing output - e.g. the SQL aggregation 1237 functions. An aggregator with no grouping is a special but important case in 1238 which we are not aggregating multiple pieces of data, but we may be filtering, 1239 transforming, or reordering individual pieces of data. 1240 1241 Special **table reader** aggregators with no inputs are used as data sources; a 1242 table reader can be configured to output only certain columns, as needed. 1243 A special **final** aggregator with no outputs is used for the results of the 1244 query/statement. 1245 1246 To reflect the result ordering that a query has to produce, some aggregators 1247 (`final`, `limit`) are configured with an **ordering requirement** on the input 1248 stream (a list of columns with corresponding ascending/descending 1249 requirements). Some aggregators (like `table readers`) can guarantee a certain 1250 ordering on their output stream, called an **ordering guarantee**. All 1251 aggregators have an associated **ordering characterization** function 1252 `ord(input_order) -> output_order` that maps `input_order` (an ordering 1253 guarantee on the input stream) into `output_order` (an ordering guarantee for 1254 the output stream) - meaning that if the rows in the input stream are ordered 1255 according to `input_order`, then the rows in the output stream will be ordered 1256 according to `output_order`. 1257 1258 The ordering guarantee of the table readers along with the characterization 1259 functions can be used to propagate ordering information across the logical plan. 1260 When there is a mismatch (an aggregator has an ordering requirement that is not 1261 matched by a guarantee), we insert a **sorting aggregator**. 1262 1263 ### Types of aggregators 1264 1265 - `TABLE READER` is a special aggregator, with no input stream. It's configured 1266 with spans of a table or index and the schema that it needs to read. 1267 Like every other aggregator, it can be configured with a programmable output 1268 filter. 1269 - `JOIN` performs a join on two streams, with equality constraints between 1270 certain columns. The aggregator is grouped on the columns that are 1271 constrained to be equal. 1272 - `JOIN READER` performs point-lookups for rows with the keys indicated by the 1273 input stream. It can do so by performing (potentially remote) KV reads, or by 1274 setting up remote flows. 1275 - `SET OPERATION` takes several inputs and performs set arithmetic on them 1276 (union, difference). 1277 - `AGGREGATOR` is the one that does "aggregation" in the SQL sense. It groups 1278 rows and computes an aggregate for each group. The group is configured using 1279 the group key. `AGGREGATOR` can be configured with one or more aggregation 1280 functions: 1281 - `SUM` 1282 - `COUNT` 1283 - `COUNT DISTINCT` 1284 - `DISTINCT` 1285 1286 An optional output filter has access to the group key and all the 1287 aggregated values (i.e. it can use even values that are not ultimately 1288 outputted). 1289 - `SORT` sorts the input according to a configurable set of columns. 1290 This is a no-grouping aggregator, hence it can be distributed arbitrarily to 1291 the data producers. This means that it doesn't produce a global ordering, 1292 instead it just guarantees an intra-stream ordering on each physical output 1293 streams). The global ordering, when needed, is achieved by an input 1294 synchronizer of a grouped processor (such as `LIMIT` or `FINAL`). 1295 - `LIMIT` is a single-group aggregator that stops after reading so many input 1296 rows. 1297 - `FINAL` is a single-group aggregator, scheduled on the gateway, that collects 1298 the results of the query. This aggregator will be hooked up to the pgwire 1299 connection to the client. 1300 1301 ## Physical planning 1302 1303 Logical plans are transformed into physical plans in a *physical planning 1304 phase*. See the [corresponding 1305 section](RFCS/20160421_distributed_sql.md#from-logical-to-physical) of the Distributed SQL RFC 1306 for details. To summarize, each aggregator is planned as one or more 1307 *processors*, which we distribute starting from the data layout - `TABLE 1308 READER`s have multiple instances, split according to the ranges - each instance 1309 is planned on the lease holder of the relevant range. From that point on, 1310 subsequent processors are generally either colocated with their inputs, or 1311 planned as singletons, usually on the final destination node. 1312 1313 ### Processors 1314 1315 When turning a _logical plan_ into a _physical plan_, its nodes are turned into 1316 _processors_. Processors are generally made up of three components: 1317 1318  1319 1320 1. The *input synchronizer* merges the input streams into a single stream of 1321 data. Types: 1322 * single-input (pass-through) 1323 * unsynchronized: passes rows from all input streams, arbitrarily 1324 interleaved. 1325 * ordered: the input physical streams have an ordering guarantee (namely the 1326 guarantee of the corresponding logical stream); the synchronizer is careful 1327 to interleave the streams so that the merged stream has the same guarantee. 1328 1329 2. The *data processor* core implements the data transformation or aggregation 1330 logic (and in some cases performs KV operations). 1331 1332 3. The *output router* splits the data processor's output to multiple streams; 1333 types: 1334 * single-output (pass-through) 1335 * mirror: every row is sent to all output streams 1336 * hashing: each row goes to a single output stream, chosen according 1337 to a hash function applied on certain elements of the data tuples. 1338 * by range: the router is configured with range information (relating to a 1339 certain table) and is able to send rows to the nodes that are lease holders for 1340 the respective ranges (useful for `JoinReader` nodes (taking index values 1341 to the node responsible for the PK) and `INSERT` (taking new rows to their 1342 lease holder-to-be)). 1343 1344 To illustrate with an example from the Distributed SQL RFC, the query: 1345 ``` 1346 TABLE Orders (OId INT PRIMARY KEY, CId INT, Value DECIMAL, Date DATE) 1347 1348 SELECT CID, SUM(VALUE) FROM Orders 1349 WHERE DATE > 2015 1350 GROUP BY CID 1351 ORDER BY 1 - SUM(Value) 1352 ``` 1353 1354 produces the following logical plan: 1355 1356  1357 1358 This logical plan above could be transformed into either one of the following 1359 physical plans: 1360 1361  1362 1363 or 1364 1365  1366 1367 1368 ## Execution infrastructure 1369 1370 Once a physical plan has been generated, the system needs to divvy it up 1371 between the nodes and send it around for execution. Each node is responsible 1372 for locally scheduling data processors and input synchronizers. Nodes also 1373 communicate with each other for connecting output routers to input 1374 synchronizers through a streaming interface. 1375 1376 ### Creating a local plan: the `ScheduleFlows` RPC 1377 1378 Distributed execution starts with the gateway making a request to every node 1379 that's supposed to execute part of the plan asking the node to schedule the 1380 sub-plan(s) it's responsible for (except for "on-the-fly" flows, see design 1381 doc). A node might be responsible for multiple disparate pieces of the overall 1382 DAG - let's call each of them a *flow*. A flow is described by the sequence of 1383 physical plan nodes in it, the connections between them (input synchronizers, 1384 output routers) plus identifiers for the input streams of the top node in the 1385 plan and the output streams of the (possibly multiple) bottom nodes. A node 1386 might be responsible for multiple heterogeneous flows. More commonly, when a 1387 node is the lease holder for multiple ranges from the same table involved in 1388 the query, it will run a `TableReader` configured with all the spans to be 1389 read across all the ranges local to the node. 1390 1391 A node therefore implements a `ScheduleFlows` RPC which takes a set of flows, 1392 sets up the input and output [mailboxes](#mailboxes), creates the local 1393 processors and starts their execution. 1394 1395 ### Local scheduling of flows 1396 1397 The simplest way to schedule the different processors locally on a node is 1398 concurrently: each data processor, synchronizer and router runs as a goroutine, 1399 with channels between them. The channels are buffered to synchronize producers 1400 and consumers to a controllable degree. 1401 1402 ### Mailboxes 1403 1404 Flows on different nodes communicate with each other over gRPC streams. To 1405 allow the producer and the consumer to start at different times, 1406 `ScheduleFlows` creates named mailboxes for all the input and output streams. 1407 These message boxes will hold some number of tuples in an internal queue until 1408 a gRPC stream is established for transporting them. From that moment on, gRPC 1409 flow control is used to synchronize the producer and consumer. A gRPC stream is 1410 established by the consumer using the `StreamMailbox` RPC, taking a mailbox id 1411 (the same one that's been already used in the flows passed to `ScheduleFlows`). 1412 1413 A diagram of a simple query using mailboxes for its execution: 1414  1415 1416 ## A complex example: Daily Promotion 1417 1418 To give a visual intuition of all the concepts presented, we draw the physical plan of a relatively involved query. The 1419 point of the query is to help with a promotion that goes out daily, targeting 1420 customers that have spent over $1000 in the last year. We'll insert into the 1421 `DailyPromotion` table rows representing each such customer and the sum of her 1422 recent orders. 1423 1424 ```SQL 1425 TABLE DailyPromotion ( 1426 Email TEXT, 1427 Name TEXT, 1428 OrderCount INT 1429 ) 1430 1431 TABLE Customers ( 1432 CustomerID INT PRIMARY KEY, 1433 Email TEXT, 1434 Name TEXT 1435 ) 1436 1437 TABLE Orders ( 1438 CustomerID INT, 1439 Date DATETIME, 1440 Value INT, 1441 1442 PRIMARY KEY (CustomerID, Date), 1443 INDEX date (Date) 1444 ) 1445 1446 INSERT INTO DailyPromotion 1447 (SELECT c.Email, c.Name, os.OrderCount FROM 1448 Customers AS c 1449 INNER JOIN 1450 (SELECT CustomerID, COUNT(*) as OrderCount FROM Orders 1451 WHERE Date >= '2015-01-01' 1452 GROUP BY CustomerID HAVING SUM(Value) >= 1000) AS os 1453 ON c.CustomerID = os.CustomerID) 1454 ``` 1455 1456 A possible physical plan: 1457