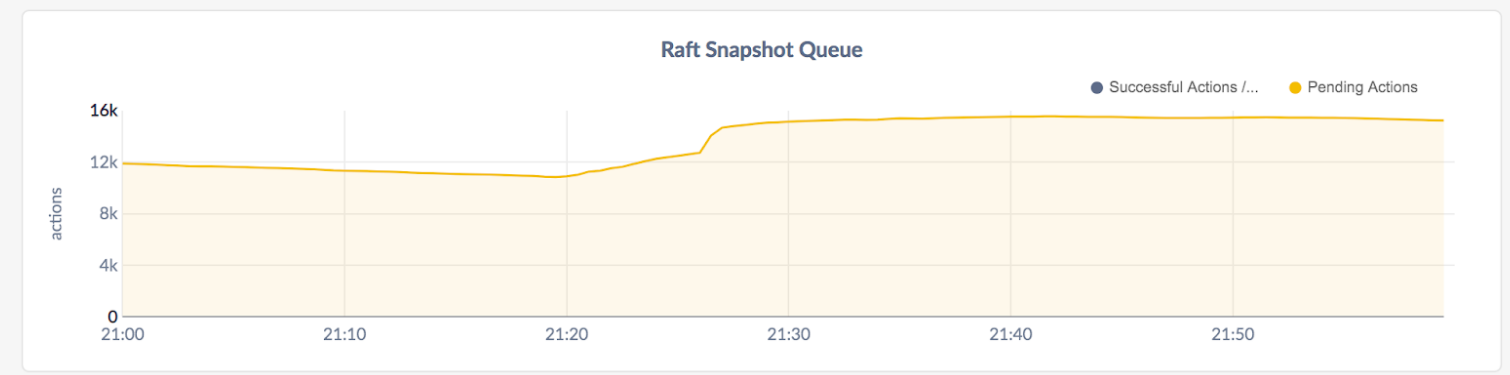

github.com/cockroachdb/cockroach@v20.2.0-alpha.1+incompatible/docs/tech-notes/raft-snapshots.md (about) 1 # Raft snapshots and why you see them when you oughtn't 2 3 Original author: Tobias Grieger 4 5 # Introduction 6 7 Each and every Range in CockroachDB is fundamentally a *Raft* group 8 (with a lot of garnish around it). Raft is a consensus protocol that 9 provides a distributed state machine being fed an ordered log of 10 commands, the *Raft log*. Raft is a leader-based protocol that keeps 11 leadership stable, which means that typically one of the members of 12 the group is the designated leader and is in charge of distributing 13 the log to followers and marking log entries as committed. 14 15 In typical operation (read: “practically always”) the process that 16 plays out with each write to a Range is that each of the members of 17 the Raft group receives new entries (corresponding to some digested 18 form of the write request) from the leader, which it acknowledges. A 19 little later, the leader sends an updated *Commit index* instructing the 20 followers to apply the current (and any preceding) writes to their 21 state, in the order given by the log. 22 23 A *Raft snapshot* is necessary when the leader does not have the log 24 entries a follower needs and thus needs to send a full initial 25 state. This happens in two scenarios: 26 27 1. Log truncation. It’s not economical to store all of the Raft log in 28 perpetuity. For example, imagine a workload that updates a single 29 key-value pair over and over (no MVCC). The Raft log’s growth is 30 unbounded, yet the size of the state is constant. 31 32 When all followers have applied a command, a prefix of the log up 33 to that command can be discarded. But followers can be unavailable 34 for extended periods of time; it may not always be wise to wait for 35 *all* followers to have caught up, especially if the Raft log has 36 already grown very large. If we don’t wait and an affected follower 37 wants to catch up, it has to do so through a snapshot. 38 39 2. A configuration change adds a new follower to the Raft group. For 40 example, a three member group gets turned into a four member 41 group. Naively, the new member starts with a clean slate, and would 42 need all of the Raft log. The details are a bit in the weeds, but 43 even if the log had never been truncated (see 1.) we’d still need a 44 snapshot, for our initial state is not equal to the empty state 45 (i.e. if a replica applies all of the log on top of an empty state, 46 it wouldn’t end up in the correct state). 47 48 # Terminology 49 50 We have already introduced *Raft* and take it that you know what a *Range* 51 is. Here is some more terminology and notation which is also helpful 52 to decipher the logs should you ever have to. 53 54 **Replica:** a member of a Range, represented at runtime by a 55 `*storage.Replica` object (housed by a `*storage.Store`). A Range 56 typically has an odd number of Replicas, and practically always three 57 or five. 58 59 **Replica ID:** A Range is identified by a Range ID, but Raft doesn’t even 60 know which Range it is handling (we do all of that routing in our 61 transport layer). All Raft knows is the members of the group, which 62 are identified by *Replica IDs*. For each range, the initial Replica IDs 63 are 1, 2, 3, and each newly added Replica is assigned the next higher 64 (and never previously used) one [1]. For example, if a Range has Replicas 65 with IDs 1, 2, 4, then you can deduce that member 3 was at some point 66 removed from the range and a new replica 4 added (in some unknown 67 order). 68 69 [1] The replica ID counter is stored in the range descriptor. 70 71 The notation you would find in the logs for a member with Replica ID 3 72 of a Range with ID 17 is r17/3. Raft snapshot: a snapshot that is 73 requested internally by Raft. This is different from a snapshot that 74 CockroachDB sends preemptively, see below. 75 76 **Raft snapshot queue:** a Store-level queue that sends the snapshots 77 requested by Raft. Prior to the introduction of the queue, Raft 78 snapshots would often bring a cluster to its knees by overloading the 79 system. The queue makes sure there aren’t ever too many Raft snapshots 80 in flight at the same time. 81 82 **Snapshot reservation:** A Store-level mechanism that sits on the receive 83 path of a snapshot, to make sure we’re not accepting too many 84 (preemptive or Raft) snapshots at once (which could overload the 85 node). 86 87 **Replica Change (or Replication Change):** the process of adding or 88 removing a Replica from/to a Range. An addition usually involves a: 89 90 **Preemptive snapshot:** a snapshot we send before adding a replica to the 91 group. The idea is that we’ll opportunistically place state on the 92 store that will house the replica once it is added, so that it can 93 catch up from the Raft log instead of needing a Raft snapshot. This 94 minimizes the time during which the follower is part of the commit 95 quorum but unable to participate in said quorum. 96 97 A preemptive snapshot is rate limited (at time of writing to 2mb/s) 98 and creates a “placeholder” Replica that doesn’t have an associated 99 Raft group (and has Replica ID zero) that is typically short-lived (as 100 it either turns into a full Replica, or gets deleted). See: 101 102 **Replica GC:** the process of deleting Replicas that are not members 103 of their Raft group any more. Determining whether a Replica is GC’able 104 is surprisingly tricky. 105 106 **Quota pool:** A flow control mechanism that prevents “faster” replicas 107 from leaving a slower (but “functional”, for example on a slower 108 network connection) Replica behind. Without a quota pool, the Raft 109 group would end up in a weakened state where losing one of the 110 up-to-date replicas incurs an unavailability until the straggler has 111 caught up. It can also trigger periodic Raft log truncations that cut 112 off the slow follower, which requires regular Raft snapshots. 113 114 **Quorum:** strictly more than half of the number of Replicas in a 115 Range. For example, with three Replicas, quorum is two. With four 116 Replicas, quorum is three. With five Replicas, quorum is three. 117 118 **Log truncation:** the process of truncating the logs. The Raft 119 leader computes this index periodically and sends out a Raft command 120 that instructs replicas to delete a given prefix of their Raft 121 logs. These operations are triggered by the Raft log queue. 122 123 **Range Split (or just Split):** an operation that splits a Range into 124 two Ranges by shrinking a Range (from the right) and creating a new 125 one (with members on the same stores) from the remainder. For example, 126 r17 with original bounds [a,z) might split into r17 with bounds [a,c) 127 and r18 with bounds [c,z). The right hand side is initialized with the 128 data from the (original) left hand side Replica. 129 130 **Range Merge (or just Merge):** the reverse of a Split, but more 131 complex to implement than a Split for a variety of reasons, the most 132 relevant to this document being that it needs the Replicas to be 133 colocated (i.e. with replicas on the same stores). 134 135 We’ll discuss the interplay between the various mechanisms above in 136 more detail, but this is a good moment to reflect on the title of this 137 document. Raft snapshots are expensive in that they transfer loads of 138 data around; it’s typically the right choice to avoid them. When could 139 they pop up outside of failure situations? 140 141 1. We control replication changes and have preemptive snapshots (which 142 still move the same amount of data, but can happen at a leisurely 143 pace without running a vulnerable Raft group); no Raft snapshots 144 are involved from the looks of it 145 146 2. We control Raft log truncation; if followers are online, the quota 147 pool makes sure that the followers are always at “comparable” log 148 positions, so a (significant) prefix of the log that can be 149 truncated without causing a Raft snapshot should always be 150 available. 151 152 3. A split shouldn’t require a Raft snapshot: Before the split, all 153 the data is on whichever stores the replicas are on. After the 154 split, the data is in the same place, just kept across two ranges - 155 a bookkeeping change. 156 157 4. Merges also shouldn’t need Raft snapshots. They’re doing replica 158 changes first (see above) followed by a bookkeeping change that 159 doesn’t move data any more. 160 161 It seems that the only “reasonable” situation in which you’d expect 162 Raft snapshots is if a node goes offline for some time and the Raft 163 log grows so much that we’d prefer a snapshot for catching up the node 164 once it comes back. 165 166 Pop quiz: how many Raft snapshots would you expect if you started a 167 fresh 15 node cluster on a 2.1.2-alpha SHA from November 2018, and 168 imported a significant amount (TBs) of data into it? 169 170  171 172 I bet ~15,000 wasn’t exactly what you were thinking of, and yet this 173 was a problem that was long overlooked and just recently addressed by 174 means of mitigation rather than resolution of root causes. It’s not 175 just expensive to have that many snapshots. You can see that thousands 176 of snapshots are requested, and the graph isn’t exactly in a hurry to 177 dip back towards zero. This is in part because the Raft snapshot queue 178 had (and has) efficiency concerns, but fundamentally consider that 179 each queued snapshot might correspond to transferring ~64mb of data 180 over the network (closer to ~32mb in practice). That’s on the order of 181 hundreds of gigabytes, i.e. it’ll take time no matter how hard we try. 182 183 At the end of this document, you will have an understanding of how 184 this graph came to be, how it will look today (spoiler: mostly a zero 185 flatline), and what the root causes are. 186 187 # Fantastic Raft snapshots and where to find them 188 189 We will now go into the details and discover how we might see Raft 190 snapshots and how they might proliferate as seen in the above 191 graph. In doing so, we’ll discover a “meta root cause” which is “poor 192 interplay between mechanisms that have been mentioned above”. To 193 motivate this, consider the following conflicts of interest: 194 195 - Log truncation wants to keep the log very short (this is also a 196 performance concern). Snapshots want their starting index to not be 197 truncated away (or they’re useless). 198 - ReplicaGC wants to remove old data as fast as possible. Replication 199 changes want their preemptive snapshots kept around until they have 200 completed. 201 202 It’s a good warm-up exercise to go through why these conflicts would cause a snapshot. 203 204 ## Problem 1: Truncation cuts off an in-flight snapshot 205 206 Say a replication change sends a preemptive snapshot at log 207 index 123. Some writes arrive to the range; the Raft log queue checks 208 the state of the group, sees three up-to-date followers, and issues a 209 truncation to index 127. The preemptive snapshot arrives and is 210 applied. The replication change completes and a new Replica is formed 211 from the preemptive snapshot. It will immediately require a Raft 212 snapshot because it needs index 124 next, but the leader only has 127 213 and higher. 214 215 **Solution** (already in place): track the index of in-flight snapshots 216 and don’t truncate them away. Similar mechanisms make sure that we 217 don’t cut off followers that are lagging behind slightly, unless we 218 really really think it’s the right thing to do. 219 220 ## Problem 2: Preemptive snapshots accidentally replicaGC’ed 221 222 Again a preemptive snapshot arrives at a Store. While the replication 223 change hasn’t completed, this preemptive Replica is gc’able and may be 224 removed if picked up by the replica GC queue. If this happens and the 225 replication change completes, the newly added follower will have a 226 clean slate and will need a Raft snapshot to recover, putting the 227 Range in a vulnerable state until it has done so. 228 229 **Solution** (unimplemented): delay the GC if we know that the replica 230 change is still ongoing. Note that this is more difficult than it 231 sounds. The “driver” of the replica change is another node, and for 232 all we know it might’ve aborted the change (in which case the snapshot 233 needs to be deleted ASAP). Simply delaying GC of preemptive snapshots 234 via a timeout mechanism is tempting, but can backfire: a “wide” 235 preemptive snapshot may be kept around erroneously and can block 236 “smaller” snapshots that are now sent after the Range has split into 237 smaller parts. We can detect this situation, but perhaps it is simpler 238 to add a direct RPC from the “driver” to the preemptive snapshot to 239 keep it alive for the right amount of time. 240 241 These two were relatively straightforward. If we throw splits in the mix, things get a lot worse. 242 243 ## Problem 3: Splits can exponentiate the effect of problem 2 244 245 Consider the situation in problem 2, but between the preemptive 246 snapshot being applied and the replication change succeeding, the 247 range is split 100 times (splitting small ranges is fast and this 248 happens during IMPORT/RESTORE). The preemptive snapshot is removed, so 249 the Replica will receive a new snapshot. This snapshot is likely to 250 post-date all of the splits, so it will be much “narrower” than 251 before. In applying it, everything outside of its bounds is deleted, 252 and that’s the data for the 100 new ranges that we will now need Raft 253 snapshots for. 254 255 **Solution:** This is not an issue if Problem 2 is solved, but see 256 Problem 5 for a related problem. 257 258 ## Problem 4: The split trigger can cause Raft snapshots 259 260 This needs a bit of detail about how splits are implemented. A split 261 is essentially a transaction with a special “split trigger” attached 262 to its commit. Upon applying the commit, each replica carries out the 263 logical split into two ranges as described by the split trigger, 264 culminating in the creation of a new Replica (the right-hand side) and 265 its initialization with data (inherited from the left-hand side). This 266 is conceptually easy, but things always get more complicated in a 267 distributed system. Note that we have multiple replicas that may be 268 applying the split trigger at different times. What if one replica 269 took, say, a second longer until it started applying it? All replicas 270 except for the straggler will already have initialized their new 271 right-hand sides, and the right-hand sides are configured to talk to 272 the right-hand side on our straggler - which hasn’t executed the split 273 trigger yet! The result is that upon receiving messages for this 274 yet-nonexistent replica, it will be created as an absolutely empty 275 replica. This replica will promptly [2] tell the leader that it needs a 276 snapshot. In all likelihood, the split trigger is going to execute 277 very soon and render the snapshot useless, but the damage has been 278 done. 279 280 [2] The part that already processed the split may form a quorum and 281 start accept requests. These requests will be routed also to the node 282 that hasn't processed the split yet. To process those requests, the 283 slower node needs a snapshot. 284 285 **Solution:** what’s implemented today is a mechanism that simply 286 delays snapshots to “blank” replicas for multiple seconds. This works, 287 but there are situations in which the “blank” replica really needs the 288 Raft snapshot (see Problems 3,5,6). The idea is to replace this with a 289 mechanism that can detect whether there’s an outstanding split trigger 290 that will obviate the snapshot. 291 292 ## Problem 5: Replica removal after a split can cause Raft snapshots 293 294 This is Problem 4, but with a scenario that really needs the Raft 295 snapshot. Imagine a range splits into two and immediately afterwards, 296 a replication change (on the original range, i.e. the left-hand side) 297 removes one of the followers. This can lead to that follower Replica 298 being replica GC’ed before it has applied the split trigger, and so 299 the data removed encompasses that that should become the right-hand 300 side (which is still configured to have a replica on that store). A 301 Raft snapshot is necessary and in particular note that the 302 timeout-based mechanism in Solution 4 will have to time out first and 303 accidentally delays this snapshot. 304 305 **Solution:** See end of Solution 4 to avoid the delayed snapshot. To 306 avoid the need for a snapshot in the first place, avoid (or delay) 307 removing replicas that are pending application of a split trigger, or 308 do the opposite and delay splits when a replication change is 309 ongoing. Not implemented to date. Both options are problematic as they 310 can intertwine the split and replicate queues, which already have 311 hidden dependencies. 312 313 ## Problem 6: Splitting a range that needs snapshots leads to two such ranges 314 315 If a range which has a follower that needs a snapshot (for whatever 316 reason) is split, the result will be two Raft snapshots required to 317 catch up the left-hand side and right hand side of the follower, 318 respectively. You might argue that these snapshots will be smaller and 319 add up to the original size and so that’s ok, but this isn’t how it 320 works owing to various deficiencies of the Raft snapshot queue. 321 322 This is particularly bad since we also know from Problem 4 and 5 that 323 we sometimes accidentally delay the Raft snapshot for seconds (before 324 we even queue it), leaving ample time for splits to create more 325 snapshots. To add insult to injury, all new ranges created in this 326 state are also false positives for the timeout mechanism in Problem 4. 327 328 Note that this is similar to Problem 3 in some sense but it can’t be detected by the split. 329 330 **Solution:** Splits typically happen on the Raft leader and can thus 331 peek into the Raft state and detect pending snapshots. A mechanism to 332 delay the split in such cases was implemented, though it doesn’t apply 333 to splits carried out through the “split queue” (as that queue does 334 not react well to being throttled). The main scenario in which this 335 problem was observed is IMPORT/RESTORE, which carries out rapid splits 336 and replication changes. 337 338 ## Commonalities 339 340 All of these phenomena are in principle observable by running the 341 restore2TB and import/tpch/nodes=X roachtests, though at this point 342 all that’s left of the problem is a small spike in Raft snapshots at 343 the beginning of the test (the likely root cause being Problem 3), 344 which used to be exponentiated by Problem 6 (before it was fixed). 345 346 # Summary 347 348 Raft snapshots shouldn’t be necessary in any of the situations 349 described above, and considerable progress has been made towards 350 making that at reality. The mitigation of Solution 6 is powerful and 351 guards against the occasional Raft snapshot becoming a problem, but it 352 is nevertheless worth going the extra mile to add “deep stability” to 353 this property: a zero rate of Raft snapshots is a good indicator of 354 cluster health for production and stability testing. Trying to achieve 355 it has already led to the discovery of a number of surprising 356 interactions and buglets across the core code base, and not achieving 357 it will make it hard to end-to-end test this property.