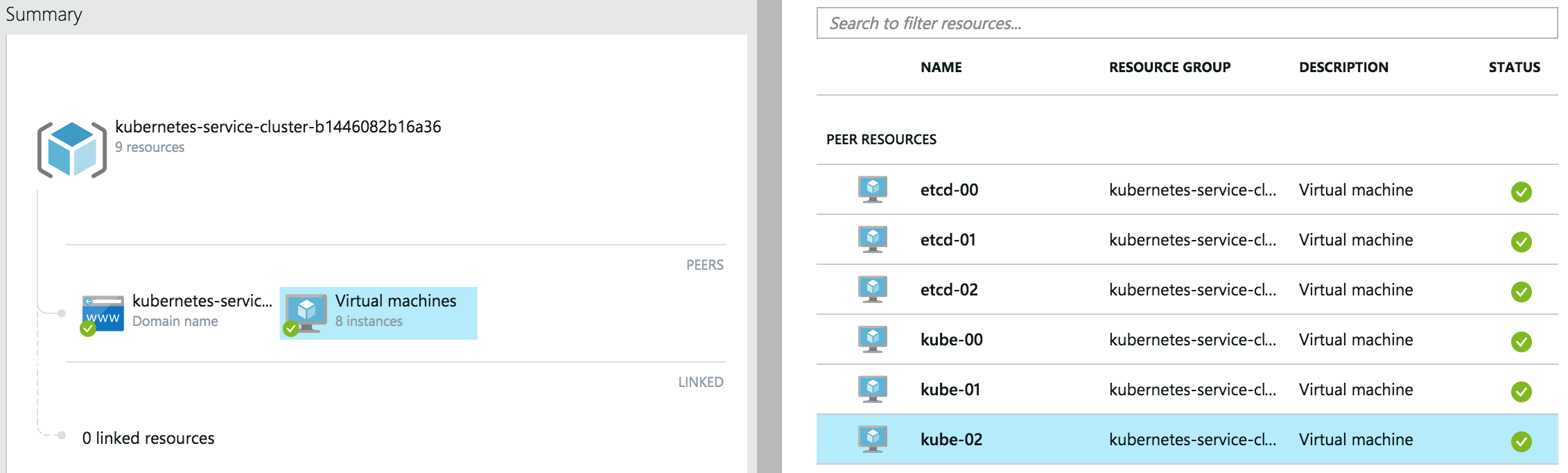

<!-- BEGIN MUNGE: UNVERSIONED_WARNING -->

<!-- BEGIN STRIP_FOR_RELEASE -->

<img src="http://kubernetes.io/img/warning.png" alt="WARNING"
     width="25" height="25">
<img src="http://kubernetes.io/img/warning.png" alt="WARNING"
     width="25" height="25">
<img src="http://kubernetes.io/img/warning.png" alt="WARNING"
     width="25" height="25">
<img src="http://kubernetes.io/img/warning.png" alt="WARNING"
     width="25" height="25">
<img src="http://kubernetes.io/img/warning.png" alt="WARNING"
     width="25" height="25">

<h2>PLEASE NOTE: This document applies to the HEAD of the source tree</h2>

If you are using a released version of Kubernetes, you should
refer to the docs that go with that version.

<strong>
The latest 1.0.x release of this document can be found
[here](http://releases.k8s.io/release-1.0/docs/getting-started-guides/coreos/azure/README.md).

Documentation for other releases can be found at
[releases.k8s.io](http://releases.k8s.io).
</strong>
--

<!-- END STRIP_FOR_RELEASE -->

<!-- END MUNGE: UNVERSIONED_WARNING -->

Kubernetes on Azure with CoreOS and [Weave](http://weave.works)
---------------------------------------------------------------

**Table of Contents**

- [Introduction](#introduction)
  - [Prerequisites](#prerequisites)
- [Let's go!](#lets-go)
- [Deploying the workload](#deploying-the-workload)
- [Scaling](#scaling)
- [Exposing the app to the outside world](#exposing-the-app-to-the-outside-world)
- [Next steps](#next-steps)
- [Tear down...](#tear-down)

## Introduction

In this guide I will demonstrate how to deploy a Kubernetes cluster to the Azure cloud. You will be using CoreOS with Weave, which implements simple and secure networking in a transparent yet robust way.
The purpose of this guide is to provide an out-of-the-box implementation that can ultimately be taken into production with little change. It will demonstrate how to provision a dedicated Kubernetes master and dedicated etcd nodes, and show how to scale the cluster with ease.

### Prerequisites

1. You need an Azure account.

## Let's go!

To get started, you need to check out the code:

```sh
git clone https://github.com/kubernetes/kubernetes
cd kubernetes/docs/getting-started-guides/coreos/azure/
```

You will need to have [Node.js installed](http://nodejs.org/download/) on your machine. If you have previously used the Azure CLI, you should have it already.

First, install the dependencies:

```sh
npm install
```

Now, all you need to do is:

```sh
./azure-login.js -u <your_username>
./create-kubernetes-cluster.js
```

This script will provision a cluster suitable for production use, consisting of a ring of 3 dedicated etcd nodes, 1 Kubernetes master, and 2 Kubernetes nodes. The `kube-00` VM will be the master; your workloads are only to be deployed on the nodes, `kube-01` and `kube-02`. Initially, all VMs are single-core, so that a user of the free tier can reproduce the setup without paying extra. I will show how to add more and larger VMs later.

Once the creation of Azure VMs has finished, you should see the following:

```console
...
azure_wrapper/info: Saved SSH config, you can use it like so: `ssh -F ./output/kube_1c1496016083b4_ssh_conf <hostname>`
azure_wrapper/info: The hosts in this deployment are:
 [ 'etcd-00', 'etcd-01', 'etcd-02', 'kube-00', 'kube-01', 'kube-02' ]
azure_wrapper/info: Saved state into `./output/kube_1c1496016083b4_deployment.yml`
```

Let's log in to the master node:

```sh
ssh -F ./output/kube_1c1496016083b4_ssh_conf kube-00
```

> Note: the config file name will be different; make sure to use the one you see.

Check that there are 2 nodes in the cluster:

```console
core@kube-00 ~ $ kubectl get nodes
NAME      LABELS                           STATUS
kube-01   kubernetes.io/hostname=kube-01   Ready
kube-02   kubernetes.io/hostname=kube-02   Ready
```

## Deploying the workload

Let's follow the Guestbook example now:

```sh
kubectl create -f ~/guestbook-example
```

You need to wait for the pods to get deployed. Run the following and wait for `STATUS` to change from `Pending` to `Running`:

```sh
kubectl get pods --watch
```

> Note: most of the time is spent downloading Docker container images on each of the nodes.

Eventually you should see:

```console
NAME                 READY     STATUS    RESTARTS   AGE
frontend-0a9xi       1/1       Running   0          4m
frontend-4wahe       1/1       Running   0          4m
frontend-6l36j       1/1       Running   0          4m
redis-master-talmr   1/1       Running   0          4m
redis-slave-12zfd    1/1       Running   0          4m
redis-slave-3nbce    1/1       Running   0          4m
```

## Scaling

Two single-core nodes are certainly not enough for a production system of today. Let's scale the cluster by adding a couple of bigger nodes.

You will need to open another terminal window on your machine and go to the same working directory (e.g. `~/Workspace/kubernetes/docs/getting-started-guides/coreos/azure/`).
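The scale and tear-down scripts both take a state file from `./output`, and each run writes a new one with a generated name. If you prefer not to copy that name by hand, a small shell function like the one below can pick the most recently written state file. This is a hypothetical helper, not part of the repository, and it assumes that `./output` only holds state files for the one cluster you are working with.

```sh
# Hypothetical helper (not shipped with these scripts): print the most
# recently modified *_deployment.yml state file in a directory.
# Assumes the directory only holds state files for a single cluster.
latest_state_file() {
  state_dir="${1:-./output}"
  # `ls -t` lists newest first; stderr is silenced when nothing matches
  newest=$(ls -t "$state_dir"/*_deployment.yml 2>/dev/null | head -n 1)
  if [ -z "$newest" ]; then
    echo "no *_deployment.yml found in $state_dir" >&2
    return 1
  fi
  printf '%s\n' "$newest"
}
```

For example, from the working directory on your machine you could run `./scale-kubernetes-cluster.js "$(latest_state_file)" 2` or `./destroy-cluster.js "$(latest_state_file)"`. The choice relies on file modification times, so if you have copied or touched the files, or keep several clusters in the same directory, pick the state file by hand instead.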
First, let's set the size of the new VMs:

```sh
export AZ_VM_SIZE=Large
```

Now, run the scale script with the state file of the previous deployment and the number of nodes to add:

```console
$ ./scale-kubernetes-cluster.js ./output/kube_1c1496016083b4_deployment.yml 2
...
azure_wrapper/info: Saved SSH config, you can use it like so: `ssh -F ./output/kube_8f984af944f572_ssh_conf <hostname>`
azure_wrapper/info: The hosts in this deployment are:
 [ 'etcd-00',
  'etcd-01',
  'etcd-02',
  'kube-00',
  'kube-01',
  'kube-02',
  'kube-03',
  'kube-04' ]
azure_wrapper/info: Saved state into `./output/kube_8f984af944f572_deployment.yml`
```

> Note: this step has created new files in `./output`.

Back on `kube-00`:

```console
core@kube-00 ~ $ kubectl get nodes
NAME      LABELS                           STATUS
kube-01   kubernetes.io/hostname=kube-01   Ready
kube-02   kubernetes.io/hostname=kube-02   Ready
kube-03   kubernetes.io/hostname=kube-03   Ready
kube-04   kubernetes.io/hostname=kube-04   Ready
```

You can see that two more nodes have joined the cluster. Let's scale the number of Guestbook instances now.
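Scaling proportionally starts from the number of nodes that are ready. A small `awk` filter like the hypothetical one below counts them from the `kubectl get nodes` output. It assumes the `NAME LABELS STATUS` layout shown above, with `STATUS` as the last column; other kubectl versions may print different columns, so treat it as a sketch rather than a robust tool.

```sh
# Hypothetical helper: read `kubectl get nodes` output on stdin and print
# the number of nodes whose STATUS is exactly "Ready".
# Assumes STATUS is the last column, as in the output shown above.
count_ready_nodes() {
  # NR > 1 skips the header row; $NF is the last whitespace-separated field
  awk 'NR > 1 && $NF == "Ready" { n++ } END { print n + 0 }'
}
```

On `kube-00`, `kubectl get nodes | count_ready_nodes` prints `4` for the cluster above, and `kubectl scale --replicas="$(kubectl get nodes | count_ready_nodes)" rc frontend` would scale the frontend to one replica per ready node.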
First, double-check how many replication controllers there are:

```console
core@kube-00 ~ $ kubectl get rc
CONTROLLER     CONTAINER(S)   IMAGE(S)                                    SELECTOR            REPLICAS
frontend       php-redis      kubernetes/example-guestbook-php-redis:v2   name=frontend       3
redis-master   master         redis                                       name=redis-master   1
redis-slave    worker         kubernetes/redis-slave:v2                   name=redis-slave    2
```

As there are 4 nodes, let's scale proportionally:

```console
core@kube-00 ~ $ kubectl scale --replicas=4 rc redis-slave
scaled
core@kube-00 ~ $ kubectl scale --replicas=4 rc frontend
scaled
```

Check what you have now:

```console
core@kube-00 ~ $ kubectl get rc
CONTROLLER     CONTAINER(S)   IMAGE(S)                                    SELECTOR            REPLICAS
frontend       php-redis      kubernetes/example-guestbook-php-redis:v2   name=frontend       4
redis-master   master         redis                                       name=redis-master   1
redis-slave    worker         kubernetes/redis-slave:v2                   name=redis-slave    4
```

You will now have more instances of the front-end Guestbook app and of the Redis slaves; and, if you look up all pods labeled `name=frontend`, you should see one running on each node.

```console
core@kube-00 ~/guestbook-example $ kubectl get pods -l name=frontend
NAME             READY     STATUS    RESTARTS   AGE
frontend-0a9xi   1/1       Running   0          22m
frontend-4wahe   1/1       Running   0          22m
frontend-6l36j   1/1       Running   0          22m
frontend-z9oxo   1/1       Running   0          41s
```

## Exposing the app to the outside world

There is no native Azure load-balancer support in Kubernetes 1.0; however, here is how you can expose the Guestbook app to the Internet.
```console
$ ./expose_guestbook_app_port.sh ./output/kube_1c1496016083b4_ssh_conf
Guestbook app is on port 31605, will map it to port 80 on kube-00
info:    Executing command vm endpoint create
+ Getting virtual machines
+ Reading network configuration
+ Updating network configuration
info:    vm endpoint create command OK
info:    Executing command vm endpoint show
+ Getting virtual machines
data:      Name                          : tcp-80-31605
data:      Local port                    : 31605
data:      Protcol                       : tcp
data:      Virtual IP Address            : 137.117.156.164
data:      Direct server return          : Disabled
info:    vm endpoint show command OK
```

You should then be able to access it from anywhere via the Azure virtual IP for `kube-00` displayed above, i.e. `http://137.117.156.164/` in my case.

## Next steps

You now have a full-blown cluster running in Azure, congrats!

You should probably try deploying other [example apps](../../../../examples/) or write your own ;)

## Tear down...

If you don't want to keep paying for the cluster on your Azure bill, you can tear it down. It's easy to redeploy it, as you have seen.

```sh
./destroy-cluster.js ./output/kube_8f984af944f572_deployment.yml
```

> Note: make sure to use the _latest state file_, as after scaling there is a new one.

By the way, with the scripts shown, you can deploy multiple clusters, if you like :)