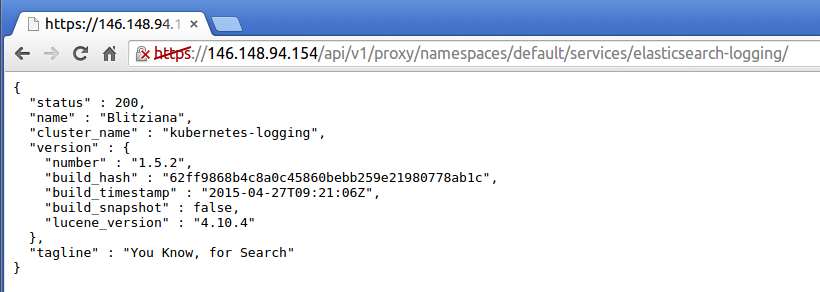

<!-- BEGIN MUNGE: UNVERSIONED_WARNING -->

<!-- BEGIN STRIP_FOR_RELEASE -->

<img src="http://kubernetes.io/img/warning.png" alt="WARNING"
     width="25" height="25">

<h2>PLEASE NOTE: This document applies to the HEAD of the source tree</h2>

If you are using a released version of Kubernetes, you should
refer to the docs that go with that version.

<strong>
The latest 1.0.x release of this document can be found
[here](http://releases.k8s.io/release-1.0/docs/getting-started-guides/logging-elasticsearch.md).

Documentation for other releases can be found at
[releases.k8s.io](http://releases.k8s.io).
</strong>
--

<!-- END STRIP_FOR_RELEASE -->

<!-- END MUNGE: UNVERSIONED_WARNING -->

# Cluster Level Logging with Elasticsearch and Kibana

On the Google Compute Engine (GCE) platform the default cluster level logging support targets
[Google Cloud Logging](https://cloud.google.com/logging/docs/) as described at the [Logging](logging.md) getting
started page. Here we describe how to set up a cluster to ingest logs into Elasticsearch and view them using Kibana as an
alternative to Google Cloud Logging.

To use Elasticsearch and Kibana for cluster logging, set the following environment variable before creating the cluster:

```console
KUBE_LOGGING_DESTINATION=elasticsearch
```

You should also ensure that `KUBE_ENABLE_NODE_LOGGING=true` (which is the default for the GCE platform).
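
The two settings above can be exported together before bringing the cluster up. The following is a minimal sketch, assuming a POSIX shell and that `cluster/kube-up.sh` is later run from the same shell session; the function name `configure_es_logging` is hypothetical and not part of Kubernetes.

```shell
#!/bin/sh
# Hypothetical helper (not part of Kubernetes): export the logging settings
# described above so that a later cluster/kube-up.sh run inherits them.
configure_es_logging() {
  export KUBE_LOGGING_DESTINATION=elasticsearch
  # true is already the default on GCE; setting it explicitly is harmless.
  export KUBE_ENABLE_NODE_LOGGING=true
}

configure_es_logging
echo "logging destination: ${KUBE_LOGGING_DESTINATION}"
echo "node logging enabled: ${KUBE_ENABLE_NODE_LOGGING}"

# Then create the cluster as usual:
#   cluster/kube-up.sh
```

Exporting the variables, rather than only assigning them, is what makes them visible to the `kube-up.sh` child process.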

Now when you create a cluster, a message will indicate that the Fluentd node-level log collectors
will target Elasticsearch:

```console
$ cluster/kube-up.sh
...
Project: kubernetes-satnam
Zone: us-central1-b
... calling kube-up
Project: kubernetes-satnam
Zone: us-central1-b
+++ Staging server tars to Google Storage: gs://kubernetes-staging-e6d0e81793/devel
+++ kubernetes-server-linux-amd64.tar.gz uploaded (sha1 = 6987c098277871b6d69623141276924ab687f89d)
+++ kubernetes-salt.tar.gz uploaded (sha1 = bdfc83ed6b60fa9e3bff9004b542cfc643464cd0)
Looking for already existing resources
Starting master and configuring firewalls
Created [https://www.googleapis.com/compute/v1/projects/kubernetes-satnam/zones/us-central1-b/disks/kubernetes-master-pd].
NAME                 ZONE          SIZE_GB TYPE   STATUS
kubernetes-master-pd us-central1-b 20      pd-ssd READY
Created [https://www.googleapis.com/compute/v1/projects/kubernetes-satnam/regions/us-central1/addresses/kubernetes-master-ip].
+++ Logging using Fluentd to elasticsearch
```

The node level Fluentd collector pods, the Elasticsearch pods used to ingest cluster logs, and the pod for the Kibana
viewer should be running in the kube-system namespace soon after the cluster comes to life.

```console
$ kubectl get pods --namespace=kube-system
NAME                                           READY     REASON    RESTARTS   AGE
elasticsearch-logging-v1-78nog                 1/1       Running   0          2h
elasticsearch-logging-v1-nj2nb                 1/1       Running   0          2h
fluentd-elasticsearch-kubernetes-minion-5oq0   1/1       Running   0          2h
fluentd-elasticsearch-kubernetes-minion-6896   1/1       Running   0          2h
fluentd-elasticsearch-kubernetes-minion-l1ds   1/1       Running   0          2h
fluentd-elasticsearch-kubernetes-minion-lz9j   1/1       Running   0          2h
kibana-logging-v1-bhpo8                        1/1       Running   0          2h
kube-dns-v3-7r1l9                              3/3       Running   0          2h
monitoring-heapster-v4-yl332                   1/1       Running   1          2h
monitoring-influx-grafana-v1-o79xf             2/2       Running   0          2h
```

Here we see that for a four node cluster there is a `fluentd-elasticsearch` pod running on each node, which gathers
the Docker container logs and sends them to Elasticsearch. The Fluentd collector communicates with
a Kubernetes service that maps requests to specific Elasticsearch pods. Similarly, Kibana can also be
accessed via a Kubernetes service definition.

```console
$ kubectl get services --namespace=kube-system
NAME                   LABELS                                                                                             SELECTOR                       IP(S)         PORT(S)
elasticsearch-logging  k8s-app=elasticsearch-logging,kubernetes.io/cluster-service=true,kubernetes.io/name=Elasticsearch  k8s-app=elasticsearch-logging  10.0.222.57   9200/TCP
kibana-logging         k8s-app=kibana-logging,kubernetes.io/cluster-service=true,kubernetes.io/name=Kibana                k8s-app=kibana-logging         10.0.193.226  5601/TCP
kube-dns               k8s-app=kube-dns,kubernetes.io/cluster-service=true,kubernetes.io/name=KubeDNS                     k8s-app=kube-dns               10.0.0.10     53/UDP
                                                                                                                                                                       53/TCP
kubernetes             component=apiserver,provider=kubernetes                                                            <none>                         10.0.0.1      443/TCP
monitoring-grafana     kubernetes.io/cluster-service=true,kubernetes.io/name=Grafana                                      k8s-app=influxGrafana          10.0.167.139  80/TCP
monitoring-heapster    kubernetes.io/cluster-service=true,kubernetes.io/name=Heapster                                     k8s-app=heapster               10.0.208.221  80/TCP
monitoring-influxdb    kubernetes.io/cluster-service=true,kubernetes.io/name=InfluxDB                                     k8s-app=influxGrafana          10.0.188.57   8083/TCP
```

By default two Elasticsearch replicas are created and one Kibana replica is created.

```console
$ kubectl get rc --namespace=kube-system
CONTROLLER                    CONTAINER(S)           IMAGE(S)                                         SELECTOR                                  REPLICAS
elasticsearch-logging-v1      elasticsearch-logging  gcr.io/google_containers/elasticsearch:1.4       k8s-app=elasticsearch-logging,version=v1  2
kibana-logging-v1             kibana-logging         gcr.io/google_containers/kibana:1.3              k8s-app=kibana-logging,version=v1         1
kube-dns-v3                   etcd                   gcr.io/google_containers/etcd:2.0.9              k8s-app=kube-dns,version=v3               1
                              kube2sky               gcr.io/google_containers/kube2sky:1.9
                              skydns                 gcr.io/google_containers/skydns:2015-03-11-001
monitoring-heapster-v4        heapster               gcr.io/google_containers/heapster:v0.14.3        k8s-app=heapster,version=v4               1
monitoring-influx-grafana-v1  influxdb               gcr.io/google_containers/heapster_influxdb:v0.3  k8s-app=influxGrafana,version=v1          1
                              grafana                gcr.io/google_containers/heapster_grafana:v0.7
```

The Elasticsearch and Kibana services are not directly exposed via a publicly reachable IP address. Instead,
they can be accessed via the service proxy running at the master. The URLs for accessing Elasticsearch
and Kibana via the service proxy can be found using the `kubectl cluster-info` command.

```console
$ kubectl cluster-info
Kubernetes master is running at https://146.148.94.154
Elasticsearch is running at https://146.148.94.154/api/v1/proxy/namespaces/kube-system/services/elasticsearch-logging
Kibana is running at https://146.148.94.154/api/v1/proxy/namespaces/kube-system/services/kibana-logging
KubeDNS is running at https://146.148.94.154/api/v1/proxy/namespaces/kube-system/services/kube-dns
KubeUI is running at https://146.148.94.154/api/v1/proxy/namespaces/kube-system/services/kube-ui
Grafana is running at https://146.148.94.154/api/v1/proxy/namespaces/kube-system/services/monitoring-grafana
Heapster is running at https://146.148.94.154/api/v1/proxy/namespaces/kube-system/services/monitoring-heapster
InfluxDB is running at https://146.148.94.154/api/v1/proxy/namespaces/kube-system/services/monitoring-influxdb
```

Before accessing the logs ingested into Elasticsearch using a browser and the service proxy URL we need to find out
the `admin` password for the cluster using `kubectl config view`.

```console
$ kubectl config view
...
- name: kubernetes-satnam_kubernetes-basic-auth
  user:
    password: 7GlspJ9Q43OnGIJO
    username: admin
...
```

The first time you try to access the cluster from a browser a dialog box appears asking for the username and password.
Use the username `admin` and provide the basic auth password reported by `kubectl config view` for the
cluster you are trying to connect to. Connecting to the Elasticsearch URL should then give the
status page for Elasticsearch.

You can now type Elasticsearch queries directly into the browser.
Alternatively you can query Elasticsearch
from your local machine using `curl` but first you need to know what your bearer token is:

```console
$ kubectl config view --minify
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: REDACTED
    server: https://146.148.94.154
  name: kubernetes-satnam_kubernetes
contexts:
- context:
    cluster: kubernetes-satnam_kubernetes
    user: kubernetes-satnam_kubernetes
  name: kubernetes-satnam_kubernetes
current-context: kubernetes-satnam_kubernetes
kind: Config
preferences: {}
users:
- name: kubernetes-satnam_kubernetes
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED
    token: JsUe2Z3cXqa17UQqQ8qWGGf4nOSLwSnp
```

Now you can issue requests to Elasticsearch:

```console
$ curl --header "Authorization: Bearer JsUe2Z3cXqa17UQqQ8qWGGf4nOSLwSnp" --insecure https://146.148.94.154/api/v1/proxy/namespaces/kube-system/services/elasticsearch-logging/
{
  "status" : 200,
  "name" : "Vance Astrovik",
  "cluster_name" : "kubernetes-logging",
  "version" : {
    "number" : "1.5.2",
    "build_hash" : "62ff9868b4c8a0c45860bebb259e21980778ab1c",
    "build_timestamp" : "2015-04-27T09:21:06Z",
    "build_snapshot" : false,
    "lucene_version" : "4.10.4"
  },
  "tagline" : "You Know, for Search"
}
```

Note that you need the trailing slash at the end of the service proxy URL.
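
The trailing-slash requirement is easy to miss when assembling proxy URLs by hand. The following is a minimal sketch of a helper that always appends it; the function name `service_proxy_url` is hypothetical, and the master address is the example one used in this guide.

```shell
#!/bin/sh
# Hypothetical helper: build the service proxy URL for a kube-system service,
# always ending in the trailing slash noted above.
service_proxy_url() {
  master="${1%/}"   # strip one trailing slash from the master URL, if present
  service="$2"
  printf '%s/api/v1/proxy/namespaces/kube-system/services/%s/\n' "$master" "$service"
}

service_proxy_url "https://146.148.94.154" "elasticsearch-logging"

# Example request; TOKEN is assumed to hold the bearer token obtained above:
#   curl --header "Authorization: Bearer ${TOKEN}" --insecure \
#     "$(service_proxy_url "https://146.148.94.154" elasticsearch-logging)"
```
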
Here is an example of a search:

```console
$ curl --header "Authorization: Bearer JsUe2Z3cXqa17UQqQ8qWGGf4nOSLwSnp" --insecure "https://146.148.94.154/api/v1/proxy/namespaces/kube-system/services/elasticsearch-logging/_search?pretty=true"
{
  "took" : 7,
  "timed_out" : false,
  "_shards" : {
    "total" : 6,
    "successful" : 6,
    "failed" : 0
  },
  "hits" : {
    "total" : 123711,
    "max_score" : 1.0,
    "hits" : [ {
      "_index" : ".kibana",
      "_type" : "config",
      "_id" : "4.0.2",
      "_score" : 1.0,
      "_source":{"buildNum":6004,"defaultIndex":"logstash-*"}
    }, {
...
      "_index" : "logstash-2015.06.22",
      "_type" : "fluentd",
      "_id" : "AU4c_GvFZL5p_gZ8dxtx",
      "_score" : 1.0,
      "_source":{"log":"synthetic-logger-10lps-pod: 31: 2015-06-22 20:35:33.597918073+00:00\n","stream":"stdout","tag":"kubernetes.synthetic-logger-10lps-pod_default_synth-lgr","@timestamp":"2015-06-22T20:35:33+00:00"}
    }, {
      "_index" : "logstash-2015.06.22",
      "_type" : "fluentd",
      "_id" : "AU4c_GvFZL5p_gZ8dxt2",
      "_score" : 1.0,
      "_source":{"log":"synthetic-logger-10lps-pod: 36: 2015-06-22 20:35:34.108780133+00:00\n","stream":"stdout","tag":"kubernetes.synthetic-logger-10lps-pod_default_synth-lgr","@timestamp":"2015-06-22T20:35:34+00:00"}
    } ]
  }
}
```

The Elasticsearch website contains information about [URI search queries](https://www.elastic.co/guide/en/elasticsearch/reference/current/search-uri-request.html) which can be used to extract the required logs.

Alternatively you can view the ingested logs using Kibana. The first time you visit the Kibana URL you will be
presented with a page that asks you to configure your view of the ingested logs. Select the option for
timeseries values and select `@timestamp`. On the following page select the `Discover` tab and then you
should be able to see the ingested logs.
You can set the refresh interval to 5 seconds to have the logs
regularly refreshed. Here is a typical view of ingested logs from the Kibana viewer.

Another way to access Elasticsearch and Kibana in the cluster is to use `kubectl proxy` which will serve
a local proxy to the remote master:

```console
$ kubectl proxy
Starting to serve on localhost:8001
```

Now you can visit the URL [http://localhost:8001/api/v1/proxy/namespaces/kube-system/services/elasticsearch-logging](http://localhost:8001/api/v1/proxy/namespaces/kube-system/services/elasticsearch-logging) to contact Elasticsearch and [http://localhost:8001/api/v1/proxy/namespaces/kube-system/services/kibana-logging](http://localhost:8001/api/v1/proxy/namespaces/kube-system/services/kibana-logging) to access the Kibana viewer.
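
The local proxy can also be combined with Elasticsearch URI search queries, since requests sent through `kubectl proxy` do not need the bearer token header. The following is a minimal sketch, assuming the proxy is listening on `localhost:8001` as shown above and that the query string is already URL-safe; the function name `es_search_url` is hypothetical, and the `stream` field is taken from the sample search results in this guide.

```shell
#!/bin/sh
# Hypothetical helper: build a URI search URL against the local kubectl proxy.
# Assumes the query contains no spaces or other characters that need URL encoding.
es_search_url() {
  query="$1"
  size="${2:-10}"   # number of hits to return; 10 is the Elasticsearch default
  printf 'http://localhost:8001/api/v1/proxy/namespaces/kube-system/services/elasticsearch-logging/_search?q=%s&size=%s&pretty=true\n' "$query" "$size"
}

es_search_url "stream:stdout" 5

# With `kubectl proxy` running in another terminal:
#   curl "$(es_search_url 'stream:stdout' 5)"
```

Quoting the URL in the `curl` call keeps the shell from interpreting the `?` and `&` characters.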