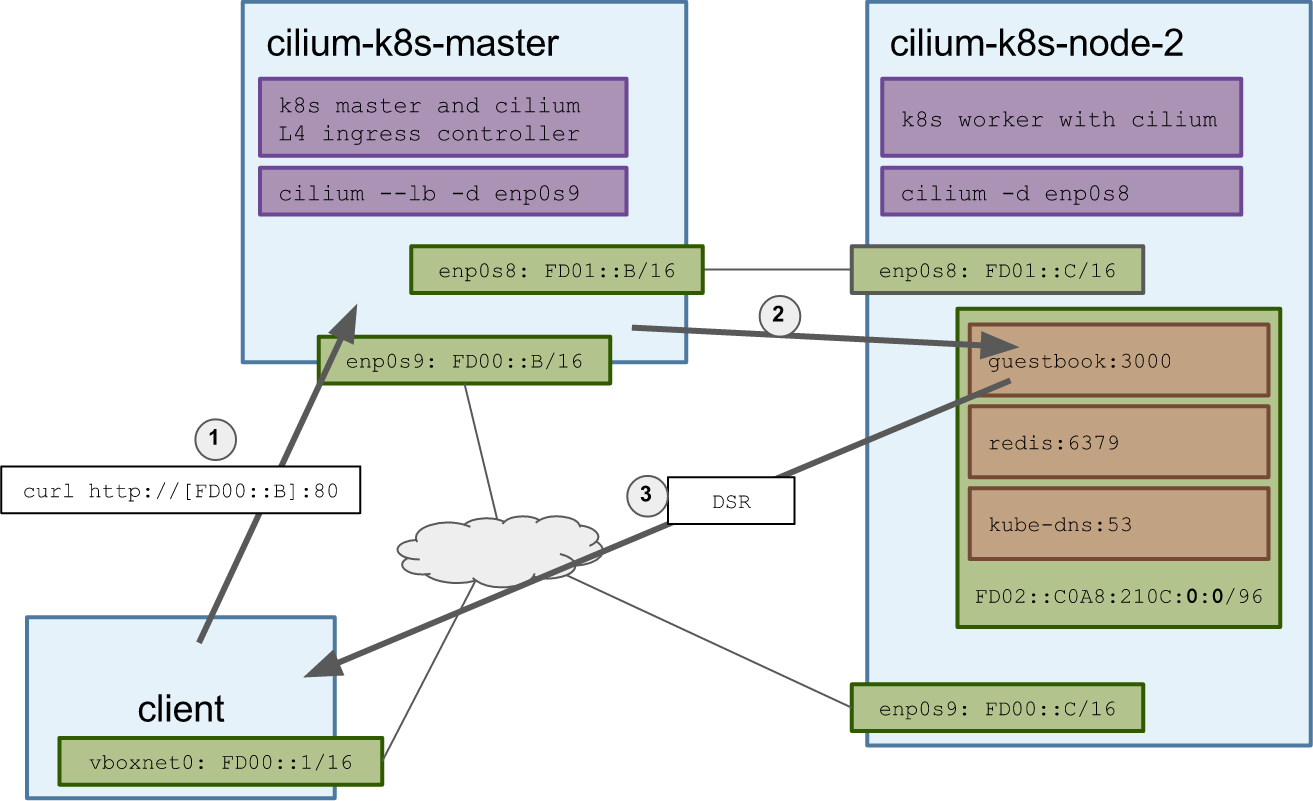

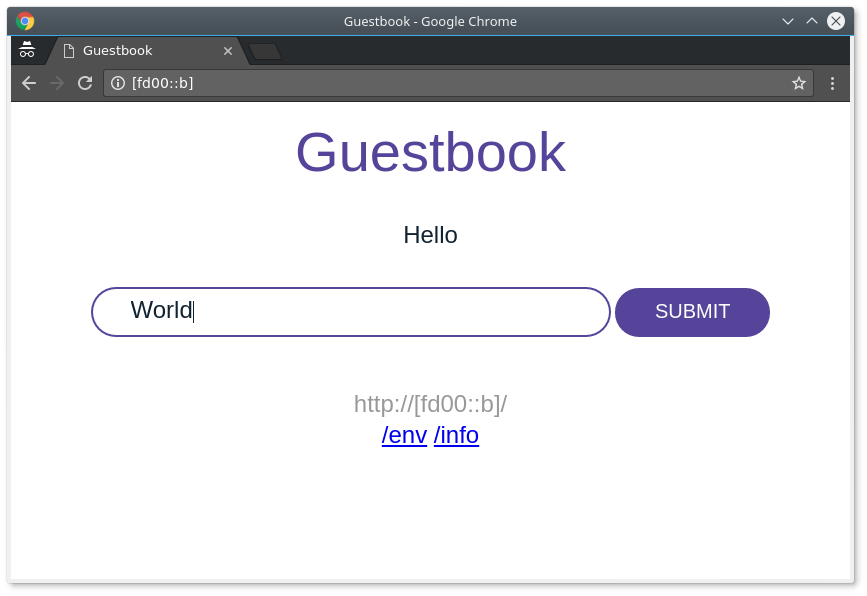

# Kubernetes Network Policy + (L4) Ingress Controller with Cilium

This tutorial demonstrates how to set up Kubernetes using Cilium to:

- Provide IPv4 & IPv6 network connectivity
- Enforce label-based network security policies for containers/pods (using the
  v1beta "Network Policy" object)
- Run the BPF-based kube-proxy replacement for services and ingress objects

In addition, Kubernetes assigns labels to the pods, and Cilium enforces the
policies created via the [Kubernetes network policy API](https://kubernetes.io/docs/concepts/services-networking/network-policies/).

Besides network policy enforcement, Kubernetes will run **without** kube-proxy.
Cilium takes care of enforcing a basic set of ingress rules from an ingress
object, which gives you a north-south load balancer. It can also perform
east-west load balancing inside the cluster.

*What's Ingress? See [here](https://kubernetes.io/docs/concepts/services-networking/ingress/#what-is-ingress)*

## Requirements

- VirtualBox
- Vagrant
- Cilium Vagrant image with `K8S` mode ON
- Tested with Kubernetes `v1.6.4`
- 4 GB of RAM (2 GB per VM; minimum 1536 MB per VM)

### Set up the Cilium Vagrant image with K8S

Start the Vagrant VMs with our provided script and the following options:

- `LB=1` starts `cilium-k8s-master` in load balancer mode;
- `IPV4=0` disables IPv4 mode in Cilium;
- `K8S=1` installs Kubernetes in the provided VMs;
- `NWORKERS=1` starts a second VM.

Optional:

- `NFS=1` syncs the project's root directory with the VMs (requires the host's
  firewall to be open to the VMs' network on `udp:111`, `udp:2049` and
  `udp:20048`);
- `MEMORY=1536` sets the memory of each VM to 1536 MB.
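The options above are plain environment variables read by `./contrib/vagrant/start.sh`. As a rough aid, the Python sketch below assembles them into the start command and estimates the host RAM the VMs will need. It is only an illustration. `build_start_command` is a hypothetical helper and is not part of the repository. It assumes one master VM plus `NWORKERS` worker VMs, and a default of 2048 MB per VM taken from the "2 GB per VM" requirement.

```python
"""Hypothetical helper (not part of Cilium): build the start.sh command line
and estimate the host RAM needed for the requested VMs."""

MIN_MEMORY_MB = 1536      # minimum per VM, from the requirements
DEFAULT_MEMORY_MB = 2048  # assumed default per VM ("2 GB per VM")


def build_start_command(nworkers=1, lb=True, ipv4=False, k8s=True,
                        nfs=False, memory_mb=None):
    """Return (command, total_ram_mb) for the options described above."""
    env = []
    if lb:
        env.append("LB=1")          # master starts in load balancer mode
    if not ipv4:
        env.append("IPV4=0")        # disable IPv4 mode in Cilium
    if k8s:
        env.append("K8S=1")         # install Kubernetes in the VMs
    env.append(f"NWORKERS={nworkers}")
    if nfs:
        env.append("NFS=1")         # host firewall must allow udp:111, 2049, 20048
    if memory_mb is not None:
        if memory_mb < MIN_MEMORY_MB:
            raise ValueError(
                f"MEMORY={memory_mb} is below the {MIN_MEMORY_MB} MB minimum")
        env.append(f"MEMORY={memory_mb}")

    per_vm_mb = DEFAULT_MEMORY_MB if memory_mb is None else memory_mb
    total_ram_mb = per_vm_mb * (1 + nworkers)  # master + worker VMs
    command = " ".join(env + ["./contrib/vagrant/start.sh"])
    return command, total_ram_mb


if __name__ == "__main__":
    print(build_start_command())
    # ('LB=1 IPV4=0 K8S=1 NWORKERS=1 ./contrib/vagrant/start.sh', 4096)
    print(build_start_command(memory_mb=1536))
    # ('LB=1 IPV4=0 K8S=1 NWORKERS=1 MEMORY=1536 ./contrib/vagrant/start.sh', 3072)
```

With the defaults this reproduces the start command used below and the 4 GB figure from the requirements. With `MEMORY=1536` the two VMs need about 3 GB.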
If you encounter an error similar to:

Start the VMs:

```
LB=1 IPV4=0 K8S=1 NWORKERS=1 ./contrib/vagrant/start.sh
```

*This might take a while depending on your network bandwidth and machine speed.*

### Connecting to your K8S cluster

Install `kubectl` on your host by running the script below. If you already have
`kubectl` installed, run the script without the `INSTALL` environment variable.
It will still set `cilium-k8s-local` as the default `kubectl` cluster.
`IPV6_EXT=1` sets the Kubernetes master address to its IPv6 address.

```
INSTALL=1 IPV6_EXT=1 examples/kubernetes-ingress/scripts/06-kubectl.sh
```

```
Cluster "cilium-k8s-local" set.
User "admin" set.
Context "default-context" set.
Switched to context "default-context".
NAME                 STATUS    MESSAGE              ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-0               Healthy   {"health": "true"}
NAME                STATUS    AGE
cilium-k8s-master   Ready     43m
cilium-k8s-node-2   Ready     36m
Check cilium status:
cilium status
```

Check that the network policies are in place:

```
kubectl get networkpolicies
```

```
NAME              POD-SELECTOR              AGE
guestbook-redis   k8s-app.guestbook=redis   16h
```

Then check that the Cilium network policies are also present in Kubernetes:

```
kubectl get ciliumnetworkpolicies
```

```
NAME            KIND
guestbook-web   CiliumNetworkPolicy.v1.cilium.io
```

If they are not present, you can create them by running:

```
kubectl create -f https://raw.githubusercontent.com/cilium/cilium/master/examples/kubernetes-ingress/network-policy/guestbook-policy-redis.json
kubectl create -f https://raw.githubusercontent.com/cilium/cilium/master/examples/kubernetes-ingress/network-policy/guestbook-policy-web.yaml
```

Check that `kube-dns` is running:

```
kubectl get pods -o wide --all-namespaces
```

```
NAMESPACE     NAME                        READY     STATUS    RESTARTS   AGE       IP                     NODE
kube-system   kube-dns-2227720648-l500t   4/4       Running   0          2m        fd02::c0a8:210c:0:ad   cilium-k8s-node-2
```

### Create the guestbook pods and services

Now that you have a Kubernetes cluster with all network policies and essential
services up and running, you can start creating your own services.

Let's create the guestbook application together with its Redis services:

```
kubectl create -f examples/kubernetes-ingress/deployments/guestbook
```

```
replicationcontroller "redis-master" created
service "redis-master" created
replicationcontroller "redis-slave" created
service "redis-slave" created
replicationcontroller "guestbook" created
service "guestbook" created
```

Check the deployment:

```
kubectl get pods -o wide
```

```
NAME                 READY     STATUS    RESTARTS   AGE       IP                       NODE
guestbook-1x3br      1/1       Running   0          4m        fd02::c0a8:210c:0:c164   cilium-k8s-node-2
redis-master-svhf3   1/1       Running   0          4m        fd02::c0a8:210c:0:2afc   cilium-k8s-node-2
redis-slave-2ccvq    1/1       Running   0          4m        fd02::c0a8:210c:0:367d   cilium-k8s-node-2
```

*Wait until all pods are in `Running` status.*

Check the list of services installed in the cluster:

```
kubectl get svc
```

```
NAME           CLUSTER-IP   EXTERNAL-IP   PORT(S)    AGE
guestbook      fd03::25be   <none>        3000/TCP   5m
kubernetes     fd03::1      <none>        443/TCP    17h
redis-master   fd03::2d66   <none>        6379/TCP   5m
redis-slave    fd03::fa12   <none>        6379/TCP   5m
```

### Create ingress rules

You now have a set of pods and services inside your cluster. That isn't very
helpful unless they are exposed outside the cluster.
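Before exposing the services, you may want to confirm the earlier *Wait until all pods are in `Running` status* step without eyeballing the table. The Python sketch below shows one way to do it. It assumes `kubectl` is on your `PATH` and that `kubectl get pods` prints its usual whitespace-separated columns with `NAME` and `STATUS` headers. `not_running` and `kubectl_pods` are hypothetical helper names.

```python
import subprocess

# Sample taken from the `kubectl get pods -o wide` output shown above.
SAMPLE = """\
NAME                 READY     STATUS    RESTARTS   AGE       IP                       NODE
guestbook-1x3br      1/1       Running   0          4m        fd02::c0a8:210c:0:c164   cilium-k8s-node-2
redis-master-svhf3   1/1       Running   0          4m        fd02::c0a8:210c:0:2afc   cilium-k8s-node-2
redis-slave-2ccvq    1/1       Running   0          4m        fd02::c0a8:210c:0:367d   cilium-k8s-node-2
"""


def not_running(table):
    """Return the names of pods whose STATUS column is not 'Running'."""
    lines = [line for line in table.splitlines() if line.strip()]
    if not lines:  # kubectl prints nothing on stdout when there are no pods
        return []
    header = lines[0].split()
    # Look columns up by name so an optional NAMESPACE column is handled too.
    name_col = header.index("NAME")
    status_col = header.index("STATUS")
    return [fields[name_col]
            for fields in (line.split() for line in lines[1:])
            if fields[status_col] != "Running"]


def kubectl_pods(all_namespaces=False):
    """Run `kubectl get pods -o wide` and return its stdout."""
    cmd = ["kubectl", "get", "pods", "-o", "wide"]
    if all_namespaces:
        cmd.append("--all-namespaces")
    return subprocess.run(cmd, capture_output=True, text=True,
                          check=True).stdout


if __name__ == "__main__":
    print(not_running(SAMPLE))  # []
```

Against a live cluster, call `not_running(kubectl_pods())`. Use `kubectl_pods(all_namespaces=True)` to include `kube-dns`. Proceed once it returns an empty list.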
Create the ingress rule for the guestbook:

```
kubectl create -f examples/kubernetes-ingress/deployments/guestbook/ingress/guestbook-ingress.yaml
```

```
ingress "guestbook-ingress" created
```

Verify that the ingress controller has set up an address for the ingress rule:

```
kubectl get ingresses
```

```
NAME                HOSTS     ADDRESS   PORTS     AGE
guestbook-ingress   *         fd00::b   80        2m
```

As you may have noticed, Cilium has set up the public address of
`cilium-k8s-master` (`fd00::b`) as the ingress address. All traffic that
reaches that IP on port 80 is redirected to the `guestbook` service running on
`cilium-k8s-node-2`. The response packets from the `guestbook` service go
directly to the client without passing through the load balancer node
(`cilium-k8s-master`).

This load-balancing mode is called direct server return (DSR). It decreases the
system load on the load balancers because reverse translation is distributed
across all backend nodes. This is particularly beneficial for workloads with
asymmetric bandwidth, such as media streaming. These workloads have small
requests and potentially large responses.

Open your browser at [http://[FD00::B]](http://[FD00::B]) and you should see
the guestbook application.

### Clean up

To halt your VMs, run:

```
NWORKERS=1 K8S=1 vagrant halt cilium-k8s-master cilium-k8s-node-2
```

To resume them, use the same options you started them with **plus** `RELOAD=1`:

```
RELOAD=1 LB=1 IPV4=0 K8S=1 NWORKERS=1 ./contrib/vagrant/start.sh
```

To delete the VMs and remove them from your machine:

```
NWORKERS=1 K8S=1 vagrant destroy -f cilium-k8s-master cilium-k8s-node-2
```
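The toy Python model below makes the direct server return argument from *Create ingress rules* more concrete. It counts the bytes the load balancer node (`cilium-k8s-master`) has to forward with and without DSR. It is a back-of-the-envelope sketch, not Cilium's datapath. `Flow` and `lb_bytes` are hypothetical names, and the request and response sizes are made-up values chosen to mimic a streaming workload.

```python
from dataclasses import dataclass


@dataclass
class Flow:
    request_bytes: int   # client -> ingress address (fd00::b, port 80)
    response_bytes: int  # backend pod -> client


def lb_bytes(flows, dsr):
    """Bytes that pass through the load balancer node.

    Without DSR, requests and responses both traverse the load balancer,
    which also performs the reverse translation of every reply. With DSR,
    only requests traverse it; the backend node performs the reverse
    translation and replies to the client directly.
    """
    total = 0
    for flow in flows:
        total += flow.request_bytes
        if not dsr:
            total += flow.response_bytes
    return total


if __name__ == "__main__":
    # Hypothetical streaming workload: 100 small requests, large responses.
    flows = [Flow(request_bytes=500, response_bytes=5_000_000)] * 100
    print(lb_bytes(flows, dsr=False))  # 500050000
    print(lb_bytes(flows, dsr=True))   # 50000
```

DSR keeps a larger share of bytes off the load balancer as the traffic becomes more asymmetric. This is why media streaming is singled out as a good fit.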