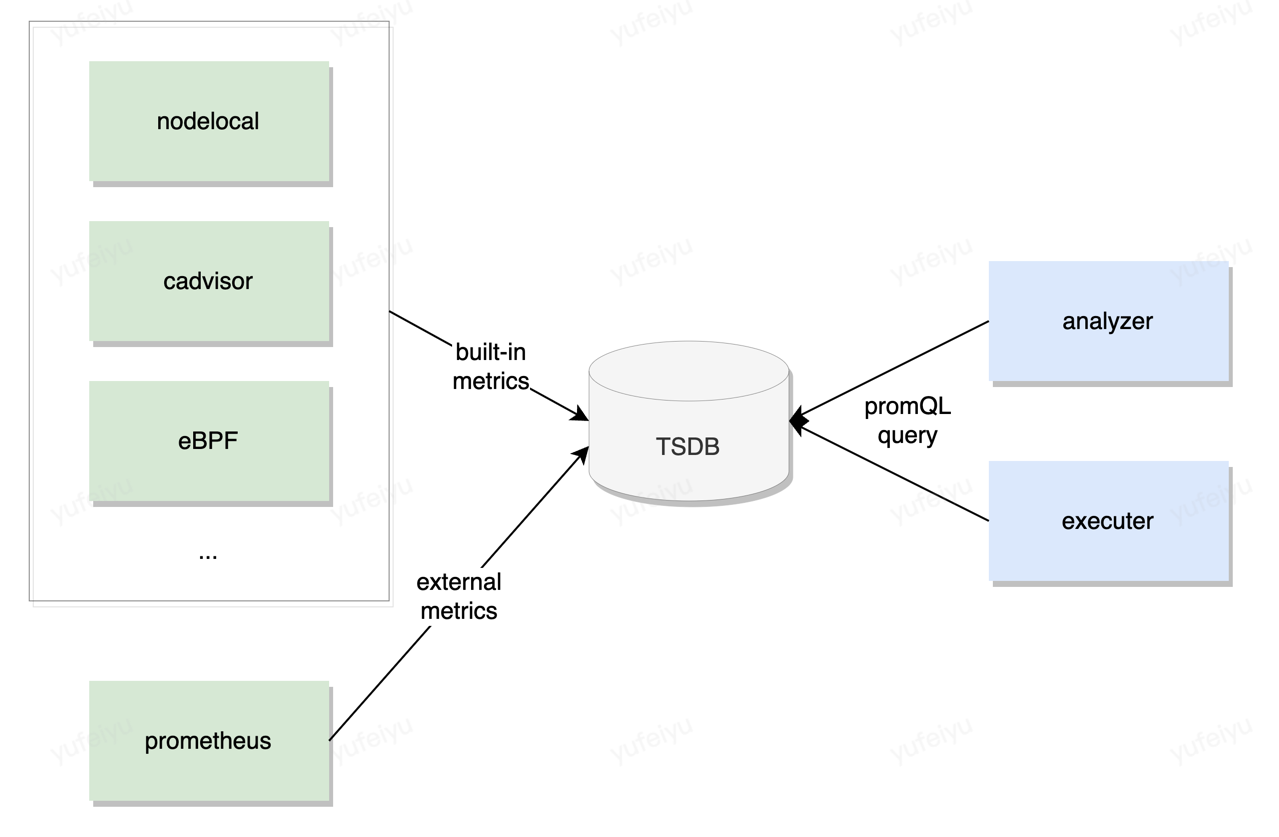

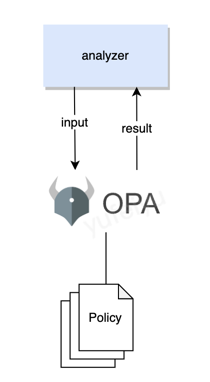

# Provide a policy-based abnormal detection mechanism in crane-agent

## Table of Contents

## Summary
Crane-agent is responsible for detecting abnormality on nodes and interference between running pods.

Currently, this detection mechanism is fixed and quite simple.
Crane-agent periodically compares the values of some pre-defined metrics, such as a node's `cpu_total_usage` and
`cpu_total_utilization`, with thresholds. If a metric value stays above its threshold for a number of detections, say the
`cpu_total_utilization` of a node is found to be higher than 80% in 3 consecutive detections, crane-agent considers the node
abnormal and performs further actions, such as suppressing or evicting low-priority pods.

This proposal suggests a flexible and extensible way to detect abnormality. The criteria of abnormality can be customized by
users in the form of policies, and detection is executed as a policy decision, which is offloaded to a
general-purpose policy engine.

## Motivation
The criteria of abnormality or interference are not always as simple as a metric value being higher than a threshold.
Different users may have different QoS requirements for different applications in different environments. The rules of
abnormality detection vary, and it is impossible to implement all of them in code in advance.

### Goals
1. Provide an abnormality detection mechanism that can consume external metrics.
2. Provide an abnormality detection mechanism in which the logic that determines abnormality can be customized.
3. Allow metrics and detection policies to be added, updated and deleted on the fly without changing the code.

### Non-Goals
1. How to handle the abnormality or interference.
This proposal only focuses on detection; the subsequent action is
out of scope.


## Proposal

### User Stories

#### Story 1
A user runs a critical, latency-sensitive online application in the cluster and wants to use both
the 99th percentile response time and the error code rate as the application's QoS indicators. If either of these 2 indicators
deteriorates, the application is considered abnormal.


#### Story 2
The SRE team finds that if the node CPU utilization exceeds 60%, the QoS of some latency-sensitive applications
running on the node is likely to decline. So they want to keep the node CPU utilization below 60%.
If the utilization is higher than this threshold, the best-effort (BE) applications should be suppressed
accordingly.


#### Story 3
The traffic of online applications is very low at night, and offline jobs are run during this time.
Compared with online applications, offline jobs always require more CPU resource quantity but less resource quality.
In this case, the SRE team wants to set different node CPU load thresholds for daytime and night.

### Functional Requirements

### Implementation Details
#### API
##### NodeQOSEnsurancePolicy
```yaml
apiVersion: ensurance.crane.io/v1alpha1
kind: NodeQOSEnsurancePolicy
metadata:
  name: "xxx"
spec:
  nodeQualityProbe:
    prometheus:
      targets: ['localhost:9090']
      queryInterval: 60s
      metrics:
      - name: node_cpu_utilization
        query: 1 - avg(irate(node_cpu_seconds_total{mode="idle", instance="$nodeName"}[5m]))
      variables:
      - name: nodeName
        valueFrom:
          fieldRef:
            fieldPath: spec.nodeName
  objectiveEnsurances:
  - name: "ext_cpu_total_distribute"
    avoidanceThreshold: 2
    restoreThreshold: 2
    actionName: "disablescheduling"
    policy: |
      default abnormal = false
      hour := time.clock([time.now_ns(), "Local"])[0]
      # Daytime (07:00 to 21:00): stricter threshold
      abnormal {
        input.node_cpu_utilization > 0.6
        hour >= 7
        hour < 21
      }
      # Night: looser threshold
      abnormal {
        input.node_cpu_utilization > 0.8
        hour >= 21
      }
      abnormal {
        input.node_cpu_utilization > 0.8
        hour < 7
      }
```
##### PodQOSEnsurancePolicy
```yaml
apiVersion: ensurance.crane.io/v1alpha1
kind: PodQOSEnsurancePolicy
metadata:
  name: "xxx"
spec:
  selector:
    matchLabels:
      app: test
  qualityProbe:
    prometheus:
      targets: ['localhost:9090']
      queryInterval: 60s
      metrics:
      - name: test_app_p90_latency
        query: histogram_quantile(0.9, rate(http_request_duration_seconds_bucket{pod=~"$podName", node="$nodeName"}[1m]))
      variables:
      - name: podName
        valueFrom:
          fieldRef:
            fieldPath: metadata.name
      - name: nodeName
        valueFrom:
          fieldRef:
            fieldPath: spec.nodeName
  objectiveEnsurances:
  - name: "ext_cpu_total_distribute"
    avoidanceThreshold: 2
    restoreThreshold: 2
    actionName: "disablescheduling"
    policy: |
      default abnormal = false
      # Abnormal if any selected pod's p90 latency exceeds 0.1
      abnormal {
        input.test_app_p90_latency[_].value > 0.1
      }
```
In addition to `Prometheus`, other data sources, such as `Graphite` and `InfluxDB`, can also
be added in the future.
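
The day/night rule in the `NodeQOSEnsurancePolicy` example can be restated as an ordinary function, which may make the intended thresholds easier to check. The sketch below is only an illustrative Python reading of that Rego policy; the function name `node_is_abnormal` is hypothetical, and crane-agent is expected to evaluate the Rego text through OPA rather than code like this.

```python
def node_is_abnormal(node_cpu_utilization: float, hour: int) -> bool:
    """Illustrative restatement of the example day/night policy.

    hour is the local hour of day (0-23), matching what the Rego
    expression time.clock(...)[0] yields in the example policy.
    """
    if 7 <= hour < 21:
        # Daytime: online traffic is high, so use the stricter 60% threshold.
        return node_cpu_utilization > 0.6
    # Night (21:00 to 07:00): offline jobs run, so allow up to 80%.
    return node_cpu_utilization > 0.8


if __name__ == "__main__":
    print(node_is_abnormal(0.7, 12))  # daytime, above 0.6
    print(node_is_abnormal(0.7, 23))  # night, below 0.8
```

Expressing the two thresholds as separate `abnormal` rule bodies in Rego has the same effect as the branches here: the node is abnormal if any one body holds.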

#### Metrics
##### Built-in metrics
Currently, crane-agent collects a set of metrics (defined in `pkg/ensurance/collector/types/types.go`, e.g.
`cpu_total_usage`).
These metrics are collected by the `nodelocal` and `cadvisor` collectors, both of which collect metrics every 10 seconds.

We call these built-in metrics. Users can use built-in metrics in a policy without any explicit configuration, and
crane-agent will pass their values in every request to the policy engine.

##### External metrics (New)
Crane-agent can also get `external` metrics by querying Prometheus servers. A new `prometheus` quality probe
will be added to the CRDs `PodQOSEnsurancePolicy` and `NodeQOSEnsurancePolicy`, as shown in the 2 example YAMLs above.

In [NodeQOSEnsurancePolicy](#NodeQOSEnsurancePolicy), `.spec.nodeQualityProbe.prometheus.metrics.query` is a PromQL expression, which
may include node variables (prefixed with `$`). In this case, crane-agent will replace the variable `$nodeName`
with its own node name.


In [PodQOSEnsurancePolicy](#PodQOSEnsurancePolicy), `.spec.qualityProbe.prometheus.metrics.query` is a PromQL expression, which
may include pod-related variables (`$nodeName` and `$podName` in this example). Crane-agent first
gets all pods on its node that match `.spec.selector.matchLabels`. Say two pods are selected,
named `pod-1` and `pod-2`, and the node name is `node-1`. The query after substitution will be
```
histogram_quantile(0.9, rate(http_request_duration_seconds_bucket{pod=~"pod-1|pod-2", node="node-1"}[1m]))
```
and 2 query results are expected to be returned, like:
```
test_app_p90_latency{pod="pod-1", ...} 0.01
test_app_p90_latency{pod="pod-2", ...} 0.01
```

Simply put, variables in PromQL ensure that crane-agent only queries metrics of its own node and of the pods running on it.
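
The variable substitution described above can be sketched in a few lines. This is a minimal illustration in Python, not crane-agent's implementation: the helper name `render_query` is hypothetical, and it assumes that multiple pod names are joined with `|` (as in the example query) and contain no regex metacharacters that would need escaping.

```python
from string import Template
from typing import Sequence


def render_query(query: str, node_name: str, pod_names: Sequence[str] = ()) -> str:
    """Replace $nodeName and $podName in a PromQL template.

    Assumption: selected pod names are OR-ed together with '|' so that a
    single query using the =~ matcher covers every selected pod.
    """
    return Template(query).substitute(
        nodeName=node_name,
        podName="|".join(pod_names),
    )


if __name__ == "__main__":
    template = (
        'histogram_quantile(0.9, rate(http_request_duration_seconds_bucket'
        '{pod=~"$podName", node="$nodeName"}[1m]))'
    )
    print(render_query(template, "node-1", ["pod-1", "pod-2"]))
```

`string.Template` is used here because its `$name` placeholder syntax happens to match the variables in the example queries; a real implementation would likely also escape the substituted values.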



#### Embedded metrics TSDB
To decouple the components that collect metrics from those that consume them, and to
keep these components' logic simple, an embedded metrics TSDB will be introduced into crane-agent.

Prometheus-tsdb and vmstorage are two good candidates: both make it easy to insert values and are
compatible with the PromQL query grammar.

Both the analyzer and the executor fetch metrics from the TSDB without considering where the metrics come from.

#### Policy
The Open Policy Agent (OPA) is an open source, general-purpose policy engine that unifies policy enforcement.
Crane-agent will use it to evaluate whether nodes or pods are abnormal.

The criteria for detecting abnormality are not pre-defined or hardcoded; instead, they are customized by users
at runtime.

A `policy` field will be added to `ObjectiveEnsurance`. It is
a [rego](https://www.openpolicyagent.org/docs/latest/policy-language/) rule whose result is a boolean
value.

Crane-agent will feed both the latest built-in and external metrics as input into the OPA policy engine, and OPA
makes decisions based on the input and the policies.

A sample input is as follows:
```json
{
  "crane": {
    "cpu_total_usage": 4680,
    ... other built-in metrics
  },
  "test_app_p90_latency": [
    {
      "labels": {
        "pod": "pod-1",
        "node": "node-1"
      },
      "value": 0.1
    },
    {
      "labels": {
        "pod": "pod-2",
        "node": "node-1"
      },
      "value": 0.09
    }
  ],
  ...
}
```
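
To make the shape of this input concrete, the sketch below assembles a document like the sample and checks a rule of the form "any sample of `test_app_p90_latency` exceeds 0.1". It is a plain-Python illustration under stated assumptions, not the OPA evaluation itself: the helper names `build_input` and `latency_abnormal` are hypothetical, built-in metrics are assumed to live under the `crane` key, and each external metric is assumed to be a top-level list of labelled samples, as in the sample input.

```python
from typing import Any, Dict, List, Mapping

Sample = Dict[str, Any]  # {"labels": {...}, "value": float}


def build_input(builtin: Mapping[str, float],
                external: Mapping[str, List[Sample]]) -> Dict[str, Any]:
    """Assemble the policy input: built-ins under 'crane', externals at top level."""
    doc: Dict[str, Any] = {"crane": dict(builtin)}
    doc.update(external)
    return doc


def latency_abnormal(doc: Mapping[str, Any],
                     metric: str = "test_app_p90_latency",
                     threshold: float = 0.1) -> bool:
    """True if any sample of the metric is strictly above the threshold."""
    return any(sample["value"] > threshold for sample in doc.get(metric, []))


if __name__ == "__main__":
    doc = build_input(
        {"cpu_total_usage": 4680},
        {"test_app_p90_latency": [
            {"labels": {"pod": "pod-1", "node": "node-1"}, "value": 0.1},
            {"labels": {"pod": "pod-2", "node": "node-1"}, "value": 0.09},
        ]},
    )
    print(latency_abnormal(doc))
```

With the sample values (0.1 and 0.09), a strict `> 0.1` comparison does not fire; the rule only becomes true once some pod's latency rises above the threshold.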