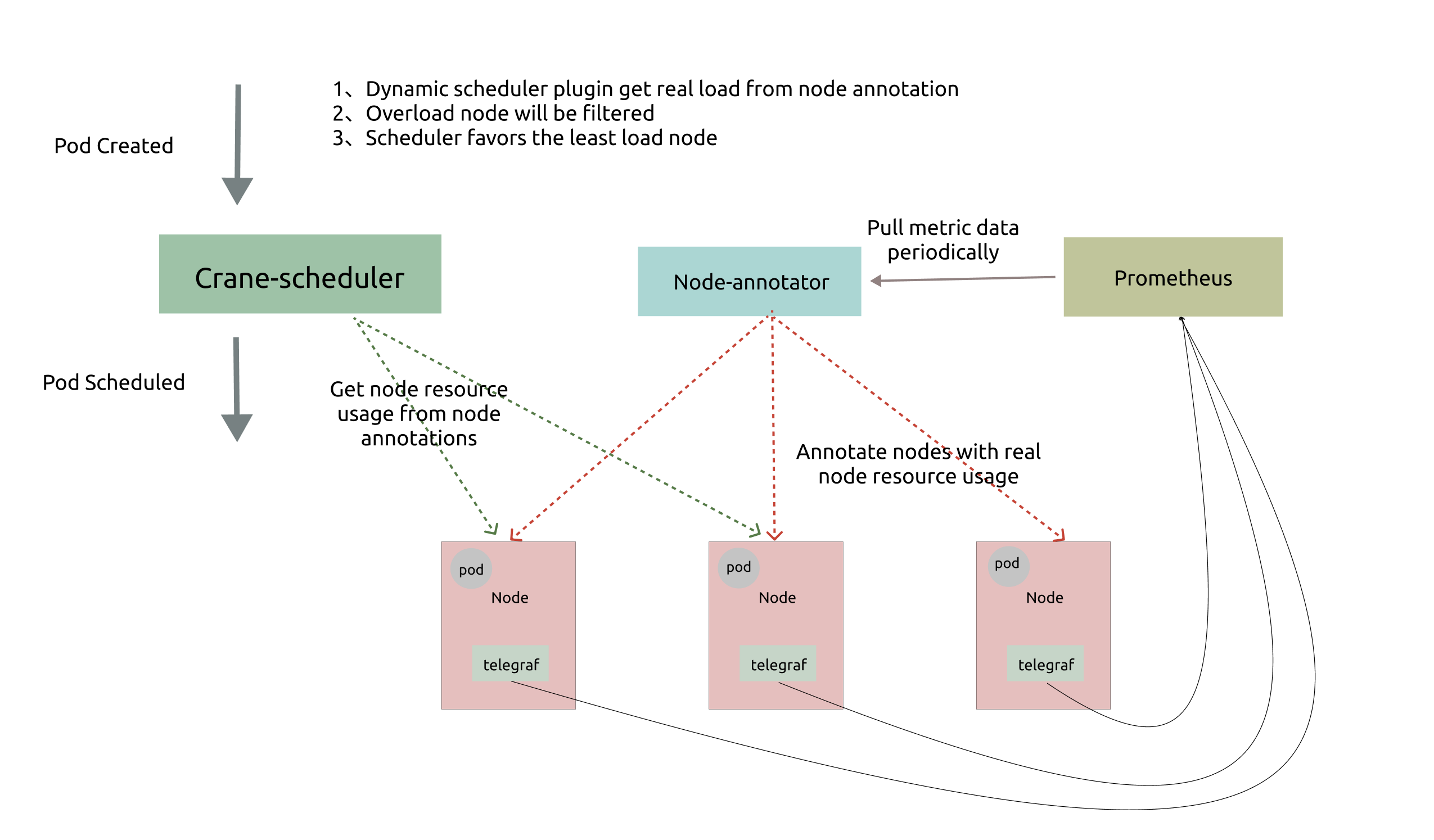

github.com/gocrane/crane@v0.11.0/docs/tutorials/dynamic-scheduler-plugin.md (about) 1 # Dynamic-scheduler: a load-aware scheduler plugin 2 3 ## Introduction 4 Native scheduler of kubernetes can only schedule pods by resource request, which can easily cause a series of load uneven problems: 5 6 - for some nodes, the actual load is not much different from the resource request, which will lead to a very high probability of stability problems. 7 - for others, the actual load is much smaller than the resource request, which will lead to a huge waste of resources. 8 9 To solve these problems, Dynamic scheduler builds a simple but efficient model based on actual node utilization data,and filters out those nodes with high load to balance the cluster. 10 ## Design Details 11 ### Architecture 12  13 14 15 As shown above, Dynamic scheduler relies on `Prometheus` and `Node-exporter` to collect and aggregate metrics data, and it consists of two components: 16 !!! note "Note" 17 `Node-annotator` is currently a module of `Crane-scheduler-controller`. 18 19 - `Node-annotator` periodically pulls data from Prometheus and marks them with timestamp on the node in the form of annotations. 20 - `Dynamic plugin` reads the load data directly from the node's annotation, filters and scores candidates based on a simple algorithm. 21 22 ### Scheduler Policy 23 Dynamic provides a default [scheduler policy](../deploy/manifests/policy.yaml) and supports user-defined policies. The default policy reies on following metrics: 24 25 - `cpu_usage_avg_5m` 26 - `cpu_usage_max_avg_1h` 27 - `cpu_usage_max_avg_1d` 28 - `mem_usage_avg_5m` 29 - `mem_usage_max_avg_1h` 30 - `mem_usage_max_avg_1d` 31 32 At the scheduling `Filter` stage, the node will be filtered if the actual usage rate of this node is greater than the threshold of any of the above metrics. And at the `Score` stage, the final score is the weighted sum of these metrics' values. 33 34 ### Hot Value 35 In the production cluster, scheduling hotspots may occur frequently because the load of the nodes can not increase immediately after the pod is created. Therefore, we define an extra metrics named `Hot Value`, which represents the scheduling frequency of the node in recent times. And the final priority of the node is the final score minus the `Hot Value`. 36