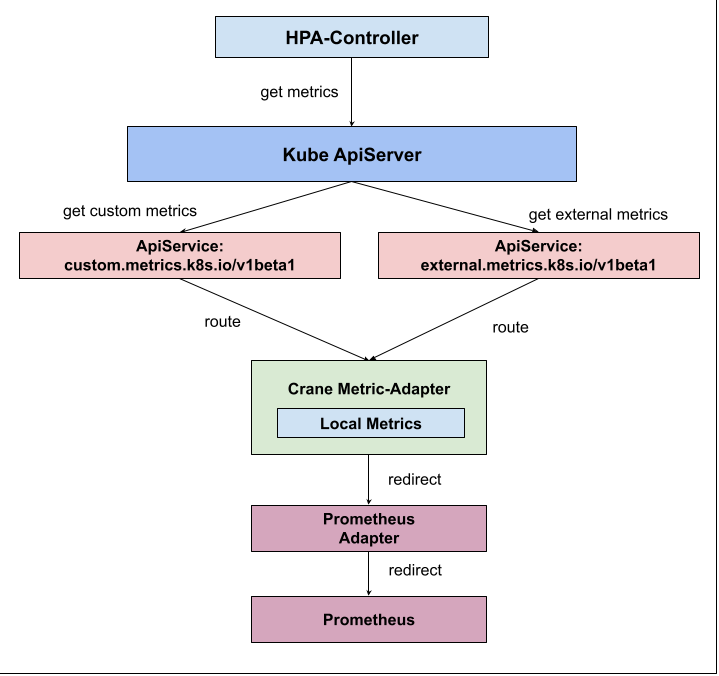

# Intelligent Autoscaling Practices Based on Effective HPA for Custom Metrics

The Kubernetes HPA supports rich autoscaling capabilities. Kubernetes platform developers can deploy services that implement custom metrics APIs, and Kubernetes users can configure multiple built-in resource metrics or custom metrics to achieve customized horizontal autoscaling.
Effective HPA is compatible with the community Kubernetes HPA and adds smarter autoscaling policies, such as prediction-based autoscaling and cron-based autoscaling.
Prometheus is a popular open-source monitoring system through which user-defined metrics can be collected and queried.

In this article, we present an example of how to implement intelligent autoscaling on custom metrics with Effective HPA. Some configurations are taken from the [official documentation](https://github.com/kubernetes-sigs/prometheus-adapter/blob/master/docs/walkthrough.md).

## Environment Requirements

- Kubernetes 1.18+
- Helm 3.1.0
- Crane v0.6.0+
- Prometheus

Refer to the [installation documentation](https://docs.gocrane.io/dev/installation/) to install Crane in the cluster. For Prometheus, you can either install it following the installation documentation or use an existing deployment.

## Environment Setup

### Installing PrometheusAdapter

The Crane Metric-Adapter and PrometheusAdapter are both built on [custom-metrics-apiserver](https://github.com/kubernetes-sigs/custom-metrics-apiserver). When Crane is installed, it registers the corresponding ApiServices with Crane's Metric-Adapter as their backend, so you need to remove those ApiServices before installing PrometheusAdapter to ensure that the Helm installation succeeds.
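
If you want to confirm which ApiServices are served by Crane before deleting them, you can filter the output of `kubectl get apiservice` on its SERVICE column. This is a minimal sketch that assumes the default four-column layout (NAME, SERVICE, AVAILABLE, AGE) and that Crane runs in the `crane-system` namespace; `crane_apiservices` is a hypothetical helper name, not part of Crane or kubectl.

```bash
# crane_apiservices (hypothetical helper): reads `kubectl get apiservice` output on stdin
# and prints the names of the ApiServices whose SERVICE column points at Crane's
# Metric-Adapter. Assumes the default columns NAME SERVICE AVAILABLE AGE and the
# crane-system namespace.
crane_apiservices() {
  # Skip the header row, then match the backend service column exactly.
  awk 'NR > 1 && $2 == "crane-system/metric-adapter" { print $1 }'
}

# Usage against a live cluster (lists the ApiServices removed in the steps that follow):
#   kubectl get apiservice | crane_apiservices
```
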
```sh
# View the current ApiService
kubectl get apiservice
```

Since Crane is installed, the result is as follows:

```sh
NAME                                   SERVICE                       AVAILABLE   AGE
v1beta1.batch                          Local                         True        35d
v1beta1.custom.metrics.k8s.io          crane-system/metric-adapter   True        18d
v1beta1.discovery.k8s.io               Local                         True        35d
v1beta1.events.k8s.io                  Local                         True        35d
v1beta1.external.metrics.k8s.io        crane-system/metric-adapter   True        18d
v1beta1.flowcontrol.apiserver.k8s.io   Local                         True        35d
v1beta1.metrics.k8s.io                 kube-system/metrics-service   True        35d
```

Remove the ApiServices installed by Crane:

```bash
kubectl delete apiservice v1beta1.custom.metrics.k8s.io
kubectl delete apiservice v1beta1.external.metrics.k8s.io
```

Install PrometheusAdapter via Helm:

```bash
helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
helm repo update
helm install prometheus-adapter -n crane-system prometheus-community/prometheus-adapter
```

Then point the ApiServices back to Crane's Metric-Adapter:

```bash
kubectl apply -f https://raw.githubusercontent.com/gocrane/crane/main/deploy/metric-adapter/apiservice.yaml
```

### Configure Metric-Adapter to enable RemoteAdapter functionality

Installing PrometheusAdapter did not point the ApiServices to PrometheusAdapter. To let PrometheusAdapter serve custom metrics as well, use the `RemoteAdapter` function of Crane Metric-Adapter to forward requests to PrometheusAdapter.

Modify the Metric-Adapter configuration to set PrometheusAdapter's Service as the RemoteAdapter of Crane Metric-Adapter:

```bash
# Edit the Metric-Adapter Deployment
kubectl edit deploy metric-adapter -n crane-system
```

Make the following changes based on your PrometheusAdapter configuration:
```yaml
# Only the fields relevant to the RemoteAdapter are shown; keep the container's existing args.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: metric-adapter
  namespace: crane-system
spec:
  template:
    spec:
      containers:
        - args:
            # Add the external (remote) adapter configuration
            - --remote-adapter=true
            - --remote-adapter-service-namespace=crane-system
            - --remote-adapter-service-name=prometheus-adapter
            - --remote-adapter-service-port=443
```

#### RemoteAdapter Capabilities

Kubernetes allows each ApiService to point to only one backend service. To use both the metrics provided by Crane and the metrics provided by PrometheusAdapter in the same cluster, Crane supports a RemoteAdapter:

- Crane Metric-Adapter supports configuring a Kubernetes Service as a RemoteAdapter.
- Crane Metric-Adapter first checks whether the request is for a local metric provided by Crane; if not, it forwards the request to the RemoteAdapter.

## Run the example

### Preparing the application

Deploy the following application to the cluster. It exposes the `http_requests_total` counter, from which the number of HTTP requests received per second can be derived.
<summary>sample-app.deploy.yaml</summary>

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sample-app
  labels:
    app: sample-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: sample-app
  template:
    metadata:
      labels:
        app: sample-app
    spec:
      containers:
      - image: luxas/autoscale-demo:v0.1.2
        name: metrics-provider
        resources:
          limits:
            cpu: 500m
          requests:
            cpu: 200m
        ports:
        - name: http
          containerPort: 8080
```

<summary>sample-app.service.yaml</summary>

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: sample-app
  name: sample-app
spec:
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app: sample-app
  type: ClusterIP
```

```bash
kubectl create -f sample-app.deploy.yaml
kubectl create -f sample-app.service.yaml
```

Once the application is deployed, you can check the `http_requests_total` metric with the following command (run it from a node or Pod inside the cluster, because a ClusterIP is only reachable from within the cluster):

```bash
curl http://$(kubectl get service sample-app -o jsonpath='{ .spec.clusterIP }')/metrics
```

### Configure collection rules

Configure a Prometheus scrape config to collect the application's metric `http_requests_total`:

```bash
kubectl edit configmap -n crane-system prometheus-server
```

Add the following job to the `scrape_configs` section:

```yaml
- job_name: sample-app
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - action: keep
    regex: default;sample-app-(.+)
    source_labels:
    - __meta_kubernetes_namespace
    - __meta_kubernetes_pod_name
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - action: replace
    source_labels:
    - __meta_kubernetes_namespace
    target_label: namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: pod
```

At this point, you can query Prometheus with PromQL: `sum(rate(http_requests_total[5m])) by (pod)`

### Verify PrometheusAdapter

The default rule configuration of PrometheusAdapter converts `http_requests_total` into a custom metric of type Pods. Verify it with the following command:

```bash
kubectl get --raw /apis/custom.metrics.k8s.io/v1beta1 | jq .
```

The result should include `pods/http_requests`:

```json
{
  "name": "pods/http_requests",
  "singularName": "",
  "namespaced": true,
  "kind": "MetricValueList",
  "verbs": [
    "get"
  ]
}
```

This indicates that an HPA can now be configured with the Pods metric `http_requests`.

### Configuring autoscaling

We can now create the Effective HPA, which scales on the Pods metric `http_requests`.

#### How to define a custom metric to enable prediction

To enable prediction for a custom metric, add an annotation to the Effective HPA following this rule:

```yaml
annotations:
  # metric-query.autoscaling.crane.io is a fixed prefix, followed by the metric name, which must match Metric.name in spec.metrics. Pods and External metric types are supported.
  metric-query.autoscaling.crane.io/http_requests: "sum(rate(http_requests_total[5m])) by (pod)"
```

<summary>sample-app-hpa.yaml</summary>

```yaml
apiVersion: autoscaling.crane.io/v1alpha1
kind: EffectiveHorizontalPodAutoscaler
metadata:
  name: php-apache
  annotations:
    # metric-query.autoscaling.crane.io is a fixed prefix, followed by the metric name, which must match Metric.name in spec.metrics. Pods and External metric types are supported.
    metric-query.autoscaling.crane.io/http_requests: "sum(rate(http_requests_total[5m])) by (pod)"
spec:
  # ScaleTargetRef is the reference to the workload that should be scaled.
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: sample-app
  minReplicas: 1 # MinReplicas is the lower limit of replicas that the autoscaler can scale the target down to.
  maxReplicas: 10 # MaxReplicas is the upper limit of replicas that the autoscaler can scale the target up to.
  scaleStrategy: Auto # ScaleStrategy indicates the scaling strategy for the target; the value can be "Auto" or "Manual".
  # Metrics contains the specifications used to calculate the desired replica count.
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 50
  - type: Pods
    pods:
      metric:
        name: http_requests
      target:
        type: AverageValue
        averageValue: 500m # 500m = 0.5 requests per second per Pod
  # Prediction defines the configuration for predicting resources.
  # If unspecified, prediction is not enabled.
  prediction:
    predictionWindowSeconds: 3600 # PredictionWindowSeconds is the time window to predict metrics in the future.
    predictionAlgorithm:
      algorithmType: dsp
      dsp:
        sampleInterval: "60s"
        historyLength: "7d"
```

```bash
kubectl create -f sample-app-hpa.yaml
```

Check the status of the TimeSeriesPrediction created by Effective HPA. If the application has only been running for a short time, some metrics may not be predicted yet:

```yaml
apiVersion: prediction.crane.io/v1alpha1
kind: TimeSeriesPrediction
metadata:
  creationTimestamp: "2022-07-11T16:10:09Z"
  generation: 1
  labels:
    app.kubernetes.io/managed-by: effective-hpa-controller
    app.kubernetes.io/name: ehpa-php-apache
    app.kubernetes.io/part-of: php-apache
    autoscaling.crane.io/effective-hpa-uid: 1322c5ac-a1c6-4c71-98d6-e85d07b22da0
  name: ehpa-php-apache
  namespace: default
spec:
  predictionMetrics:
  - algorithm:
      algorithmType: dsp
      dsp:
        estimators: {}
        historyLength: 7d
        sampleInterval: 60s
    resourceIdentifier: crane_pod_cpu_usage
    resourceQuery: cpu
    type: ResourceQuery
  - algorithm:
      algorithmType: dsp
      dsp:
        estimators: {}
        historyLength: 7d
        sampleInterval: 60s
    expressionQuery:
      expression: sum(rate(http_requests_total[5m])) by (pod)
    resourceIdentifier: crane_custom.pods_http_requests
    type: ExpressionQuery
  predictionWindowSeconds: 3600
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: sample-app
    namespace: default
status:
  conditions:
  - lastTransitionTime: "2022-07-12T06:54:42Z"
    message: not all metric predicted
    reason: PredictPartial
    status: "False"
    type: Ready
  predictionMetrics:
  - ready: false
    resourceIdentifier: crane_pod_cpu_usage
  - prediction:
    - labels:
      - name: pod
        value: sample-app-7cfb596f98-8h5vv
      samples:
      - timestamp: 1657608900
        value: "0.01683"
      - timestamp: 1657608960
        value: "0.01683"
      ......
    ready: true
    resourceIdentifier: crane_custom.pods_http_requests
```

Looking at the HPA object created by Effective HPA, you can observe that a metric based on the custom metric prediction has been added: `crane_custom.pods_http_requests`.

```yaml
apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
metadata:
  creationTimestamp: "2022-07-11T16:10:10Z"
  labels:
    app.kubernetes.io/managed-by: effective-hpa-controller
    app.kubernetes.io/name: ehpa-php-apache
    app.kubernetes.io/part-of: php-apache
    autoscaling.crane.io/effective-hpa-uid: 1322c5ac-a1c6-4c71-98d6-e85d07b22da0
  name: ehpa-php-apache
  namespace: default
spec:
  maxReplicas: 10
  metrics:
  - pods:
      metric:
        name: http_requests
      target:
        averageValue: 500m
        type: AverageValue
    type: Pods
  - pods:
      metric:
        name: crane_custom.pods_http_requests
        selector:
          matchLabels:
            autoscaling.crane.io/effective-hpa-uid: 1322c5ac-a1c6-4c71-98d6-e85d07b22da0
      target:
        averageValue: 500m
        type: AverageValue
    type: Pods
  - resource:
      name: cpu
      target:
        averageUtilization: 50
        type: Utilization
    type: Resource
  minReplicas: 1
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: sample-app
```

## Summary

Because production environments are complex, autoscaling on multiple metrics (CPU, memory, and custom metrics) is a common choice for production applications. By combining multi-metric autoscaling with prediction algorithms, Effective HPA helps more businesses adopt horizontal autoscaling in production.
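
To make the multi-metric behavior concrete: the HPA generated above lists the CPU metric, the observed `http_requests` metric, and the predicted `crane_custom.pods_http_requests` metric. The Kubernetes HPA computes a replica proposal for each metric as ceil(currentReplicas × currentValue / targetValue) and scales to the largest proposal, clamped to `minReplicas`/`maxReplicas`. The following is a simplified sketch of that calculation, assuming all Pods are ready, every metric is available, and the default tolerance of 0.1 applies, while ignoring scaling behaviors and stabilization windows. `desired_replicas` and `combine_proposals` are hypothetical helpers, and the metric values in the example are illustrative.

```bash
# Simplified sketch of how a Kubernetes HPA turns several metrics into one replica count.
# Assumptions: all Pods are ready, every metric is available, target > 0, the default
# tolerance of 0.1 applies, and behaviors/stabilization windows are ignored.
# desired_replicas and combine_proposals are hypothetical helpers, not Crane or kubectl commands.

# desired_replicas CURRENT_REPLICAS CURRENT_VALUE TARGET_VALUE
# Prints ceil(CURRENT_REPLICAS * CURRENT_VALUE / TARGET_VALUE), or CURRENT_REPLICAS
# unchanged when the usage ratio is within the tolerance band around 1.0.
desired_replicas() {
  awk -v n="$1" -v cur="$2" -v tgt="$3" 'BEGIN {
    ratio = cur / tgt
    d = ratio - 1; if (d < 0) d = -d
    if (d <= 0.1) { print n; exit }     # within tolerance: keep the current count
    want = n * ratio
    c = int(want); if (c < want) c++    # awk has no ceil(), so round up by hand
    print c
  }'
}

# combine_proposals MIN MAX PROPOSAL...
# Picks the largest per-metric proposal, then clamps it to [MIN, MAX].
combine_proposals() {
  lo=$1; hi=$2; shift 2
  best=0
  for p in "$@"; do
    if [ "$p" -gt "$best" ]; then best=$p; fi
  done
  if [ "$best" -lt "$lo" ]; then best=$lo; fi
  if [ "$best" -gt "$hi" ]; then best=$hi; fi
  echo "$best"
}

# Example: 2 running replicas, targets taken from sample-app-hpa.yaml, illustrative values.
cpu=$(desired_replicas 2 60 50)     # CPU: 60% utilization vs. 50% target          -> 3
rps=$(desired_replicas 2 0.6 0.5)   # observed http_requests: 0.6 req/s vs. 500m    -> 3
pred=$(desired_replicas 2 1.5 0.5)  # predicted crane_custom.pods_http_requests     -> 6
combine_proposals 1 10 "$cpu" "$rps" "$pred"   # prints 6
```

In this sketch, because the observed and predicted `http_requests` metrics share the same 500m target, whichever of the two is higher drives the replica count, which is how the prediction can let the workload scale out before the observed load rises.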