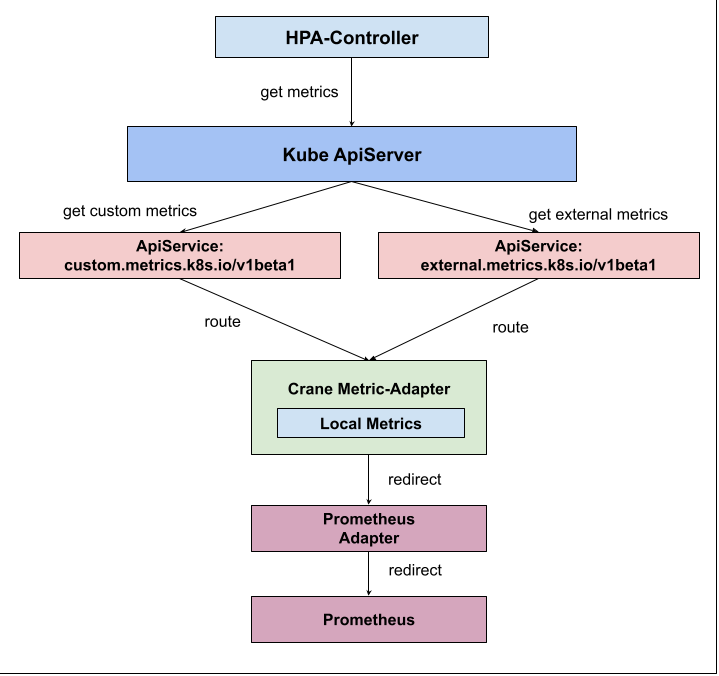

# Intelligent Autoscaling on Custom Metrics with Effective HPA

Kubernetes HPA provides rich autoscaling capabilities. Kubernetes platform developers can deploy services that serve custom metrics, and Kubernetes users can configure several built-in resource metrics or custom metrics to get customized horizontal autoscaling.
Effective HPA is compatible with the community Kubernetes HPA and adds smarter autoscaling strategies, such as prediction-based autoscaling and Cron-based (scheduled) autoscaling.
Prometheus is a popular open-source monitoring system, and users' custom metrics can be queried from it.

This article walks through an example of intelligent autoscaling on custom metrics with Effective HPA. Part of the configuration comes from the [official documentation](https://github.com/kubernetes-sigs/prometheus-adapter/blob/master/docs/walkthrough.md).

## Prerequisites

- Kubernetes 1.18+
- Helm 3.1.0
- Crane v0.6.0+
- Prometheus

Install Crane in the cluster by following the [installation guide](https://docs.gocrane.io/dev/installation/). For Prometheus, you can use the one from the installation guide or a Prometheus that is already deployed.

## Environment setup

### Install PrometheusAdapter

Crane's Metric-Adapter component and PrometheusAdapter are both built on [custom-metrics-apiserver](https://github.com/kubernetes-sigs/custom-metrics-apiserver). Both implement the APIServices for Custom Metrics and External Metrics. Installing Crane registers these APIServices with Crane's Metric-Adapter as their backend. You therefore need to delete them before installing PrometheusAdapter so that the Helm installation succeeds.

```bash
# List the APIServices in the current cluster
kubectl get apiservice
```

Because Crane is installed, the output looks like this:

```bash
NAME                                   SERVICE                       AVAILABLE   AGE
v1beta1.batch                          Local                         True        35d
v1beta1.custom.metrics.k8s.io          crane-system/metric-adapter   True        18d
v1beta1.discovery.k8s.io               Local                         True        35d
v1beta1.events.k8s.io                  Local                         True        35d
v1beta1.external.metrics.k8s.io        crane-system/metric-adapter   True        18d
v1beta1.flowcontrol.apiserver.k8s.io   Local                         True        35d
v1beta1.metrics.k8s.io                 kube-system/metrics-service   True        35d
```

Delete the APIServices installed by Crane:

```bash
kubectl delete apiservice v1beta1.custom.metrics.k8s.io
kubectl delete apiservice v1beta1.external.metrics.k8s.io
```

Install PrometheusAdapter with Helm:

```bash
helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
helm repo update
helm install prometheus-adapter -n crane-system prometheus-community/prometheus-adapter
```
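The APIServices removed before the Helm install are the ones whose SERVICE column is `crane-system/metric-adapter` in the `kubectl get apiservice` listing. Below is a minimal sketch that selects them with `awk` instead of typing the names by hand. The same check can be rerun after Crane's APIService is re-applied. The helper name `crane_apiservices` is hypothetical, and the sketch assumes the default column layout shown above.

```bash
# Hypothetical helper: reads `kubectl get apiservice` output on stdin and
# prints the names of the APIServices backed by Crane's Metric-Adapter.
crane_apiservices() {
  # Skip the header row (NR > 1); column 1 is NAME, column 2 is SERVICE.
  awk 'NR > 1 && $2 == "crane-system/metric-adapter" { print $1 }'
}

# Usage against a live cluster (not executed here):
#   kubectl get apiservice | crane_apiservices
#   kubectl delete apiservice $(kubectl get apiservice | crane_apiservices)
```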
Then point the APIServices back to Crane's Metric-Adapter:

```bash
kubectl apply -f https://raw.githubusercontent.com/gocrane/crane/main/deploy/metric-adapter/apiservice.yaml
```

### Enable RemoteAdapter in Metric-Adapter

The APIServices do not point to PrometheusAdapter after it is installed. To let PrometheusAdapter still serve custom metrics, use the `RemoteAdapter` feature of Crane Metric-Adapter, which forwards requests to PrometheusAdapter.

Edit the Metric-Adapter configuration so that the PrometheusAdapter Service becomes the RemoteAdapter of Crane Metric-Adapter:

```bash
# Edit the Metric-Adapter Deployment
kubectl edit deploy metric-adapter -n crane-system
```

Make the following changes to match your PrometheusAdapter installation:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: metric-adapter
  namespace: crane-system
spec:
  template:
    spec:
      containers:
        - args:
            # Add the remote adapter configuration (keep the existing args)
            - --remote-adapter=true
            - --remote-adapter-service-namespace=crane-system
            - --remote-adapter-service-name=prometheus-adapter
            - --remote-adapter-service-port=443
```

#### RemoteAdapter capability

Kubernetes allows an APIService to be backed by only one Service. Crane supports RemoteAdapter so that one cluster can use both the metrics provided by Crane and the metrics provided by PrometheusAdapter:

- Crane Metric-Adapter can be configured with a Kubernetes Service as a remote adapter.
- When handling a request, Crane Metric-Adapter first checks whether the metric is a local metric provided by Crane. If it is not, the request is forwarded to the remote adapter.

## Run the example

### Prepare the application

Deploy the following application to the cluster. It exposes the metric `http_requests_total`, a counter of the HTTP requests it has received, from which the per-second request rate is derived.

<summary>sample-app.deploy.yaml</summary>

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sample-app
  labels:
    app: sample-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: sample-app
  template:
    metadata:
      labels:
        app: sample-app
    spec:
      containers:
      - image: luxas/autoscale-demo:v0.1.2
        name: metrics-provider
        resources:
          limits:
            cpu: 500m
          requests:
            cpu: 200m
        ports:
        - name: http
          containerPort: 8080
```
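`http_requests_total` is a counter, so a per-second request rate comes from the difference between two readings divided by the elapsed time. This is the core idea of PromQL `rate()`, which additionally adjusts for counter resets and extrapolates to the edges of the window.

The simplified sketch below works in milli-units (thousandths) for two reasons: Bash only has integer arithmetic, and the HPA target later in this tutorial is written as `500m`, that is, 0.5 requests per second per Pod. The function name `counter_rate_milli` is hypothetical, and the sketch handles neither resets nor extrapolation.

```bash
# Hypothetical helper: per-second rate of a counter, in milli-units.
# Args: first reading, second reading, seconds between the two readings.
# Simplification: unlike PromQL rate(), no reset handling or extrapolation.
counter_rate_milli() {
  local first=$1 second=$2 seconds=$3
  if (( seconds <= 0 )); then
    echo "elapsed seconds must be positive" >&2
    return 1
  fi
  if (( second < first )); then
    echo "counter decreased (reset?); not handled in this sketch" >&2
    return 1
  fi
  # Integer division truncates, so the result is rounded down.
  echo $(( (second - first) * 1000 / seconds ))
}

# 150 new requests over a 300 s (5m) window -> 500 milli-requests/s,
# i.e. exactly the 500m per-Pod target used in the EHPA later on.
counter_rate_milli 1000 1150 300
```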
<summary>sample-app.service.yaml</summary>

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: sample-app
  name: sample-app
spec:
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app: sample-app
  type: ClusterIP
```

```bash
kubectl create -f sample-app.deploy.yaml
kubectl create -f sample-app.service.yaml
```

Once the application is deployed, you can check the `http_requests_total` metric with the following command. Run it from a node or Pod that can reach the Service's ClusterIP.

```bash
curl http://$(kubectl get service sample-app -o jsonpath='{ .spec.clusterIP }')/metrics
```

### Configure the scrape rule

Add a Prometheus scrape config that collects the application's metric `http_requests_total`:

```bash
kubectl edit configmap -n crane-system prometheus-server
```

Add the following job under `scrape_configs`:

```yaml
- job_name: sample-app
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - action: keep
    regex: default;sample-app-(.+)
    source_labels:
    - __meta_kubernetes_namespace
    - __meta_kubernetes_pod_name
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - action: replace
    source_labels:
    - __meta_kubernetes_namespace
    target_label: namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: pod
```

You can now run the PromQL query `sum(rate(http_requests_total[5m])) by (pod)` in Prometheus.

### Verify PrometheusAdapter

PrometheusAdapter's default rules convert `http_requests_total` into a Pods-type custom metric. Verify this with:

```bash
kubectl get --raw /apis/custom.metrics.k8s.io/v1beta1 | jq .
```
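The `keep` rule in the scrape config above works on the values of its `source_labels`. Prometheus joins those values with its default separator `;`, and relabel regexes are anchored on both ends. A target is therefore kept only if it is in the `default` namespace and its Pod name starts with `sample-app-`.

Below is a minimal Bash sketch of that matching. It models only the `keep` step, not the `labelmap` or `replace` rules, and the function name `keep_target` is hypothetical.

```bash
# Hypothetical helper mimicking the `keep` relabel rule from the scrape config.
# Args: Pod namespace, Pod name. Prints "keep" or "drop".
keep_target() {
  # Prometheus joins source_labels with ";" (the default separator).
  local joined="$1;$2"
  # Relabel regexes are anchored on both ends, hence ^...$ here.
  local re='^default;sample-app-(.+)$'
  if [[ $joined =~ $re ]]; then
    echo keep
  else
    echo drop
  fi
}

keep_target default sample-app-7cfb596f98-8h5vv      # keep
keep_target kube-system sample-app-7cfb596f98-8h5vv  # drop (wrong namespace)
keep_target default sample-app                       # drop (nothing after "sample-app-")
```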
The output of the discovery command should include `pods/http_requests`:

```bash
{
  "name": "pods/http_requests",
  "singularName": "",
  "namespaced": true,
  "kind": "MetricValueList",
  "verbs": [
    "get"
  ]
}
```

This shows that an HPA can now be configured with this Pods metric.

### Configure autoscaling

Now we can create the Effective HPA, which can scale on the Pods metric `http_requests`.

#### Enabling prediction for a custom metric

Add an annotation to the Effective HPA according to the following rule:

```yaml
annotations:
  # metric-query.autoscaling.crane.io is a fixed prefix followed by the metric name, which must match metric.name in spec.metrics. Pods and External metric types are supported.
  metric-query.autoscaling.crane.io/http_requests: "sum(rate(http_requests_total[5m])) by (pod)"
```

The annotation value is the PromQL query that supplies the metric's history for prediction.

<summary>sample-app-hpa.yaml</summary>

```yaml
apiVersion: autoscaling.crane.io/v1alpha1
kind: EffectiveHorizontalPodAutoscaler
metadata:
  name: php-apache
  annotations:
    # metric-query.autoscaling.crane.io is a fixed prefix followed by the metric name, which must match metric.name in spec.metrics. Pods and External metric types are supported.
    metric-query.autoscaling.crane.io/http_requests: "sum(rate(http_requests_total[5m])) by (pod)"
spec:
  # ScaleTargetRef is the reference to the workload that should be scaled.
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: sample-app
  minReplicas: 1 # MinReplicas is the lower limit of replicas that the autoscaler can scale the target down to.
  maxReplicas: 10 # MaxReplicas is the upper limit of replicas that the autoscaler can scale the target up to.
  scaleStrategy: Auto # ScaleStrategy indicates the strategy for scaling the target; the value can be "Auto" or "Manual".
  # Metrics contains the specifications used to calculate the desired replica count.
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 50
  - type: Pods
    pods:
      metric:
        name: http_requests
      target:
        type: AverageValue
        averageValue: 500m
  # Prediction defines the configuration for metric prediction.
  # If unspecified, prediction is disabled.
  prediction:
    predictionWindowSeconds: 3600 # PredictionWindowSeconds is the time window to predict metrics in the future.
    predictionAlgorithm:
      algorithmType: dsp
      dsp:
        sampleInterval: "60s"
        historyLength: "7d"
```
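When `metrics` has more than one entry, the Kubernetes HPA computes a desired replica count for each metric with `desiredReplicas = ceil(currentReplicas * currentValue / targetValue)`. It then scales to the largest of those counts, clamped to `minReplicas`/`maxReplicas`.

Below is a simplified sketch of that arithmetic for this EHPA. CPU is in percent and `http_requests` is in milli-units. The sketch deliberately ignores the HPA's default 10% tolerance, unready Pods and stabilization windows, and the function names are hypothetical.

```bash
# Hypothetical helper: desired replicas for one metric, i.e.
# ceil(currentReplicas * current / target) in integer arithmetic.
# current and target must share a unit (both %, or both milli-units).
replicas_for_metric() {
  local replicas=$1 current=$2 target=$3
  echo $(( (replicas * current + target - 1) / target ))
}

# Hypothetical helper: max over all per-metric proposals, clamped to
# [min_replicas, max_replicas] (1 and 10 in the EHPA above).
desired_replicas() {
  local min_replicas=$1 max_replicas=$2
  shift 2
  local best=0 r
  for r in "$@"; do
    if (( r > best )); then best=$r; fi
  done
  if (( best < min_replicas )); then best=$min_replicas; fi
  if (( best > max_replicas )); then best=$max_replicas; fi
  echo "$best"
}

# Example: 2 Pods, average CPU utilization 40% (target 50%),
# average http_requests 1200m (target 500m).
cpu=$(replicas_for_metric 2 40 50)       # ceil(1.6) = 2
http=$(replicas_for_metric 2 1200 500)   # ceil(4.8) = 5
desired_replicas 1 10 "$cpu" "$http"     # 5
```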
```bash
kubectl create -f sample-app-hpa.yaml
```

Check the status of the TimeSeriesPrediction, for example with `kubectl get timeseriesprediction ehpa-php-apache -o yaml`. If the application has been running for only a short time, some metrics may not be predictable yet. In the output below, `crane_pod_cpu_usage` is not ready, while `crane_custom.pods_http_requests` is:

```yaml
apiVersion: prediction.crane.io/v1alpha1
kind: TimeSeriesPrediction
metadata:
  creationTimestamp: "2022-07-11T16:10:09Z"
  generation: 1
  labels:
    app.kubernetes.io/managed-by: effective-hpa-controller
    app.kubernetes.io/name: ehpa-php-apache
    app.kubernetes.io/part-of: php-apache
    autoscaling.crane.io/effective-hpa-uid: 1322c5ac-a1c6-4c71-98d6-e85d07b22da0
  name: ehpa-php-apache
  namespace: default
spec:
  predictionMetrics:
  - algorithm:
      algorithmType: dsp
      dsp:
        estimators: {}
        historyLength: 7d
        sampleInterval: 60s
    resourceIdentifier: crane_pod_cpu_usage
    resourceQuery: cpu
    type: ResourceQuery
  - algorithm:
      algorithmType: dsp
      dsp:
        estimators: {}
        historyLength: 7d
        sampleInterval: 60s
    expressionQuery:
      expression: sum(rate(http_requests_total[5m])) by (pod)
    resourceIdentifier: crane_custom.pods_http_requests
    type: ExpressionQuery
  predictionWindowSeconds: 3600
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: sample-app
    namespace: default
status:
  conditions:
  - lastTransitionTime: "2022-07-12T06:54:42Z"
    message: not all metric predicted
    reason: PredictPartial
    status: "False"
    type: Ready
  predictionMetrics:
  - ready: false
    resourceIdentifier: crane_pod_cpu_usage
  - prediction:
    - labels:
      - name: pod
        value: sample-app-7cfb596f98-8h5vv
      samples:
      - timestamp: 1657608900
        value: "0.01683"
      - timestamp: 1657608960
        value: "0.01683"
      ......
    ready: true
    resourceIdentifier: crane_custom.pods_http_requests
```
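The HPA generated from this EHPA queries two Pods metrics through `custom.metrics.k8s.io`:

- `crane_custom.pods_http_requests`, the prediction shown in the TimeSeriesPrediction above, which Crane's Metric-Adapter serves itself.
- `http_requests`, which Crane does not provide and which Crane's Metric-Adapter forwards to PrometheusAdapter through RemoteAdapter.

Below is a minimal sketch of that routing decision. For illustration only, it assumes that a local metric can be recognized by the `crane_` prefix seen in this tutorial's metric names; Crane's actual check may differ. The function name `route_metric` is hypothetical, and the remote target mirrors the `--remote-adapter-service-*` args configured earlier.

```bash
# Hypothetical sketch of RemoteAdapter routing in Crane's Metric-Adapter.
# ASSUMPTION: local (Crane-provided) metrics are recognized by a "crane_"
# name prefix; this only mirrors the metric names in this tutorial.
route_metric() {
  local metric=$1
  case $metric in
    crane_*) echo "local: crane-system/metric-adapter" ;;
    *)       echo "remote: crane-system/prometheus-adapter:443" ;;
  esac
}

route_metric crane_custom.pods_http_requests  # local: crane-system/metric-adapter
route_metric http_requests                    # remote: crane-system/prometheus-adapter:443
```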
Check the HPA object created by Effective HPA. It now includes a metric based on the custom-metric prediction, `crane_custom.pods_http_requests`:

```yaml
apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
metadata:
  creationTimestamp: "2022-07-11T16:10:10Z"
  labels:
    app.kubernetes.io/managed-by: effective-hpa-controller
    app.kubernetes.io/name: ehpa-php-apache
    app.kubernetes.io/part-of: php-apache
    autoscaling.crane.io/effective-hpa-uid: 1322c5ac-a1c6-4c71-98d6-e85d07b22da0
  name: ehpa-php-apache
  namespace: default
spec:
  maxReplicas: 10
  metrics:
  - pods:
      metric:
        name: http_requests
      target:
        averageValue: 500m
        type: AverageValue
    type: Pods
  - pods:
      metric:
        name: crane_custom.pods_http_requests
        selector:
          matchLabels:
            autoscaling.crane.io/effective-hpa-uid: 1322c5ac-a1c6-4c71-98d6-e85d07b22da0
      target:
        averageValue: 500m
        type: AverageValue
    type: Pods
  - resource:
      name: cpu
      target:
        averageUtilization: 50
        type: Utilization
    type: Resource
  minReplicas: 1
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: sample-app
```

## Summary

Production environments are complex, so autoscaling on multiple metrics (CPU, memory, and custom metrics) is a common choice for production applications. Effective HPA therefore extends its prediction algorithms to multi-metric autoscaling, which helps more workloads adopt horizontal autoscaling in production.