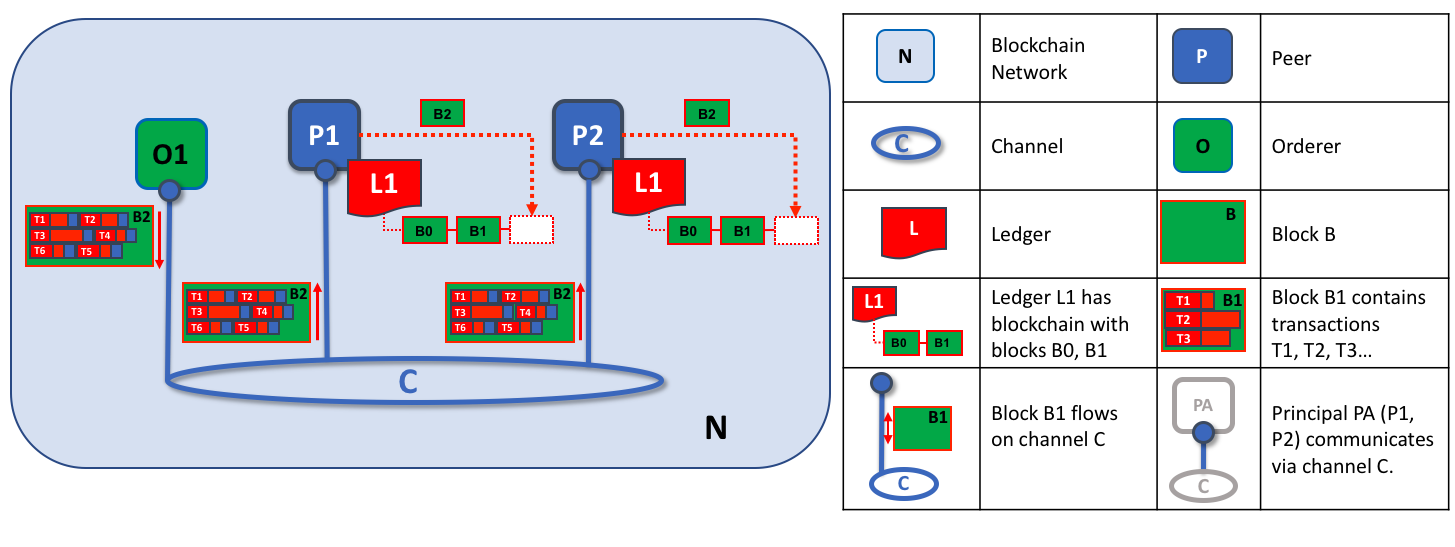

# The Ordering Service

**Audience:** Architects, ordering service admins, channel creators

This topic introduces the concept of ordering, how orderers interact with
peers, the role they play in a transaction flow, and the currently available
implementations of the ordering service, with a particular focus on the
recommended **Raft** ordering service implementation.

## What is ordering?

Many distributed blockchains, such as Ethereum and Bitcoin, are not permissioned,
which means that any node can participate in the consensus process, wherein
transactions are ordered and bundled into blocks. Because of this, these
systems rely on **probabilistic** consensus algorithms, which eventually
guarantee ledger consistency to a high degree of probability but are
still vulnerable to divergent ledgers (also known as a ledger "fork"), where
different participants in the network have a different view of the accepted
order of transactions.

Hechain works differently. It features a node called an
**orderer** (also known as an "ordering node") that does this transaction
ordering and that, along with other orderer nodes, forms an **ordering service**.
Because Fabric's design relies on **deterministic** consensus algorithms, any block
validated by the peer is guaranteed to be final and correct. Ledgers cannot fork
the way they do in many other distributed and permissionless blockchain networks.

In addition to promoting finality, separating the endorsement of chaincode
execution (which happens at the peers) from ordering gives Fabric advantages
in performance and scalability, eliminating bottlenecks that can occur when
execution and ordering are performed by the same nodes.

## Orderer nodes and channel configuration

Orderers also enforce basic access control for channels, restricting who can
read and write data to them and who can configure them. Remember that who
is authorized to modify a configuration element in a channel is subject to the
policies that the relevant administrators set when they created the consortium
or the channel. Configuration transactions are processed by the orderer,
as it needs to know the current set of policies to execute its basic
form of access control. In this case, the orderer processes the
configuration update to make sure that the requestor has the proper
administrative rights. If so, the orderer validates the update request against
the existing configuration, generates a new configuration transaction,
and packages it into a block that is relayed to all peers on the channel. The
peers then process the configuration transactions in order to verify that the
modifications approved by the orderer do indeed satisfy the policies defined in
the channel.

## Orderer nodes and identity

Everything that interacts with a blockchain network, including peers,
applications, admins, and orderers, acquires its organizational identity from
its digital certificate and its Membership Service Provider (MSP) definition.

For more information about identities and MSPs, check out our documentation on
[Identity](../identity/identity.html) and [Membership](../membership/membership.html).

Just like peers, ordering nodes belong to an organization. And similar to peers,
a separate Certificate Authority (CA) should be used for each organization.
Whether this CA will function as the root CA, or whether you choose to deploy
a root CA and then intermediate CAs associated with that root CA, is up to you.
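
The configuration-update steps described under "Orderer nodes and channel configuration" (check the requestor's rights, validate the update against the existing configuration, then produce a new configuration) can be sketched in Go. This is a minimal model under stated assumptions: the `Config` and `ConfigUpdate` types, the flat admin set standing in for the channel's modification policies, and the sequence-number check standing in for "validates the update request against the existing configuration" are hypothetical simplifications, not the orderer's actual data structures.

```go
package main

import (
	"errors"
	"fmt"
)

// Config is a hypothetical, heavily simplified channel configuration.
// Admins is an assumed stand-in for the policies that govern modifications.
type Config struct {
	Sequence uint64            // incremented on every accepted update
	Admins   map[string]bool   // identities allowed to modify the config
	Values   map[string]string // arbitrary configuration elements
}

// ConfigUpdate is a hypothetical request to change configuration values.
type ConfigUpdate struct {
	Signer       string            // identity of the requestor
	BaseSequence uint64            // config sequence the update was built against
	Changes      map[string]string // elements to set
}

var (
	ErrNotAuthorized = errors.New("requestor lacks administrative rights")
	ErrStaleUpdate   = errors.New("update was built against an outdated config")
)

// processConfigUpdate checks the requestor's rights, validates the update
// against the existing config, and returns the new config that would then be
// packaged into a block for the channel's peers. The current config is not modified.
func processConfigUpdate(current Config, upd ConfigUpdate) (Config, error) {
	if !current.Admins[upd.Signer] {
		return Config{}, ErrNotAuthorized
	}
	if upd.BaseSequence != current.Sequence {
		return Config{}, ErrStaleUpdate
	}
	next := Config{
		Sequence: current.Sequence + 1,
		Admins:   current.Admins, // shared for brevity; not changed by this sketch
		Values:   make(map[string]string, len(current.Values)),
	}
	for k, v := range current.Values {
		next.Values[k] = v
	}
	for k, v := range upd.Changes {
		next.Values[k] = v
	}
	return next, nil
}

func main() {
	cfg := Config{
		Sequence: 3,
		Admins:   map[string]bool{"Org1Admin": true},
		Values:   map[string]string{"BatchTimeout": "2s"},
	}
	next, err := processConfigUpdate(cfg, ConfigUpdate{
		Signer:       "Org1Admin",
		BaseSequence: 3,
		Changes:      map[string]string{"BatchTimeout": "1s"},
	})
	fmt.Println(next.Sequence, next.Values["BatchTimeout"], err)

	// A non-admin identity is rejected before any validation happens.
	_, err = processConfigUpdate(cfg, ConfigUpdate{Signer: "Org2Peer", BaseSequence: 3})
	fmt.Println(err)
}
```

Real channel configuration updates are checked against the channel's policies rather than a flat list of admins, and, as described above, peers re-check the result when they process the configuration block.
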

## Orderers and the transaction flow

### Phase one: Transaction Proposal and Endorsement

We've seen from our topic on [Peers](../peers/peers.html) that they form the basis
for a blockchain network, hosting ledgers, which can be queried and updated by
applications through smart contracts.

Specifically, applications that want to update the ledger are involved in a
process with three phases that ensures all of the peers in a blockchain network
keep their ledgers consistent with each other.

In the first phase, a client application sends a transaction proposal to the Fabric
Gateway service, via a trusted peer. This peer executes the proposed transaction or
forwards it to another peer in its organization for execution.

The gateway also forwards the transaction to peers in the organizations required by
the endorsement policy. These endorsing peers run the transaction and return the
transaction response to the gateway service. They do not apply the proposed update to
their copy of the ledger at this time. The endorsed transaction proposals will ultimately
be ordered into blocks in phase two, and then distributed to all peers for final validation
and commitment to the ledger in phase three.

Note: Fabric v2.3 SDKs embed the logic of the v2.4 Fabric Gateway service in the client
application --- refer to the [v2.3 Applications and Peers](https://hyperledger-fabric.readthedocs.io/en/release-2.3/peers/peers.html#applications-and-peers) topic for details.

For an in-depth look at phase one, refer back to the [Peers](../peers/peers.html#applications-and-peers) topic.

### Phase two: Transaction Submission and Ordering

With successful completion of the first transaction phase (proposal), the client
application has received an endorsed transaction proposal response from the
Fabric Gateway service for signing.
Once the client has signed the endorsed transaction, the gateway service
forwards it to the ordering service, which orders it with
other endorsed transactions and packages them all into a block.

The ordering service creates these blocks of transactions, which will ultimately
be distributed to all peers on the channel for validation and commitment to
the ledger in phase three. The blocks themselves are also ordered and are the
basic components of a blockchain ledger.

Ordering service nodes receive transactions from many different application
clients (via the gateway) concurrently. These ordering service nodes collectively
form the ordering service, which may be shared by multiple channels.

The number of transactions in a block depends on channel configuration
parameters related to the desired size and maximum elapsed duration for a
block (`BatchSize` and `BatchTimeout` parameters, to be exact). The blocks are
then saved to the orderer's ledger and distributed to all peers on the channel.
If a peer happens to be down at this time, or joins
the channel later, it will receive the blocks by gossiping with another peer.
We'll see how these blocks are processed by peers in the third phase.

It's worth noting that the sequencing of transactions in a block is not
necessarily the same as the order received by the ordering service, since there
can be multiple ordering service nodes that receive transactions at approximately
the same time. What's important is that the ordering service puts the transactions
into a strict order, and peers will use this order when validating and committing
transactions.

This strict ordering of transactions within blocks makes Hechain a
little different from other blockchains where the same transaction can be
packaged into multiple different blocks that compete to form a chain.
In Hechain, the blocks generated by the ordering service are
**final**. Once a transaction has been written to a block, its position in the
ledger is immutably assured. As we said earlier, Hechain's finality
means that there are no **ledger forks** --- validated and committed transactions
will never be reverted or dropped.

We can also see that, whereas peers execute smart contracts (chaincode) and process transactions,
orderers most definitely do not. Every authorized transaction that arrives at an
orderer is mechanically packaged into a block --- the orderer makes no judgement
as to the content of a transaction (except for channel configuration transactions,
as mentioned earlier).

At the end of phase two, we see that orderers have been responsible for the simple
but vital processes of collecting proposed transaction updates, ordering them,
and packaging them into blocks, ready for distribution to the channel peers.

### Phase three: Transaction Validation and Commitment

The third phase of the transaction workflow involves the distribution of
ordered and packaged blocks from the ordering service to the channel peers
for validation and commitment to the ledger.

Phase three begins with the ordering service distributing blocks to all channel
peers. It's worth noting that not every peer needs to be connected to an orderer ---
peers can cascade blocks to other peers using the [**gossip**](../gossip.html)
protocol --- although receiving blocks directly from the ordering service is
recommended.

Each peer will validate distributed blocks independently, ensuring that ledgers
remain consistent.
Specifically, each peer in the channel will validate each
transaction in the block to ensure it has been endorsed
by the required organizations, that its endorsements match, and that
it hasn't become invalidated by other recently committed transactions. Invalidated
transactions are still retained in the immutable block created by the orderer,
but they are marked as invalid by the peer and do not update the ledger's state.

*The second role of an ordering node is to distribute blocks to peers. In this
example, orderer O1 distributes block B2 to peer P1 and peer P2. Peer P1
processes block B2, resulting in a new block being added to ledger L1 on P1. In
parallel, peer P2 processes block B2, resulting in a new block being added to
ledger L1 on P2. Once this process is complete, the ledger L1 has been
consistently updated on peers P1 and P2, and each may inform connected
applications that the transaction has been processed.*

In summary, phase three sees the blocks of transactions created by the ordering
service applied consistently to the ledger by the peers. The strict
ordering of transactions into blocks allows each peer to validate that transaction
updates are consistently applied across the channel.

For a deeper look at phase three, refer back to the [Peers](../peers/peers.html#phase-3-validation-and-commit) topic.

## Ordering service implementations

While every ordering service currently available handles transactions and
configuration updates the same way, there are nevertheless several different
implementations for achieving consensus on the strict ordering of transactions
between ordering service nodes.

For information about how to stand up an ordering node (regardless of the
implementation the node will be used in), check out [our documentation on deploying a production ordering service](../deployorderer/ordererplan.html).

* **Raft** (recommended)

  New as of v1.4.1, Raft is a crash fault tolerant (CFT) ordering service
  based on an implementation of the [Raft protocol](https://raft.github.io/raft.pdf)
  in [`etcd`](https://coreos.com/etcd/). Raft follows a "leader and
  follower" model, where a leader node is elected (per channel) and its decisions
  are replicated by the followers. Raft ordering services should be easier to set
  up and manage than Kafka-based ordering services, and their design allows
  different organizations to contribute nodes to a distributed ordering service.

* **Kafka** (deprecated in v2.x)

  Similar to Raft-based ordering, Apache Kafka is a CFT implementation that uses
  a "leader and follower" node configuration. Kafka utilizes a ZooKeeper
  ensemble for management purposes. The Kafka-based ordering service has been
  available since Fabric v1.0, but many users may find the additional
  administrative overhead of managing a Kafka cluster intimidating or undesirable.

* **Solo** (deprecated in v2.x)

  The Solo implementation of the ordering service is intended for testing only and
  consists of only a single ordering node. It has been deprecated and may be
  removed entirely in a future release. Existing users of Solo should move to
  a single-node Raft network for equivalent function.

## Raft

For information on how to customize the `orderer.yaml` file that determines the configuration of an ordering node, check out the [Checklist for a production ordering node](../deployorderer/ordererchecklist.html).

The go-to ordering service choice for production networks, the Fabric
implementation of the established Raft protocol uses a "leader and follower"
model, in which a leader is dynamically elected among the ordering
nodes in a channel (this collection of nodes is known as the "consenter set"),
and that leader replicates messages to the follower nodes.
Because the system
can sustain the loss of nodes, including leader nodes, as long as there is a
majority of ordering nodes (what's known as a "quorum") remaining, Raft is said
to be "crash fault tolerant" (CFT). In other words, if there are three nodes in a
channel, it can withstand the loss of one node (leaving two remaining). If you
have five nodes in a channel, you can lose two nodes (leaving three
remaining nodes). This feature of a Raft ordering service is a factor in the
establishment of a high availability strategy for your ordering service. Additionally,
in a production environment, you would want to spread these nodes across data
centers and even locations, for example, by putting one node in each of three
different data centers. That way, if a data center or entire location becomes
unavailable, the nodes in the other data centers continue to operate.

From the perspective of the service they provide to a network or a channel, Raft
and the existing Kafka-based ordering service (which we'll talk about later) are
similar. They're both CFT ordering services using the leader and follower
design. If you are an application developer, smart contract developer, or peer
administrator, you will not notice a functional difference between an ordering
service based on Raft versus Kafka. However, there are a few major differences worth
considering, especially if you intend to manage an ordering service.

* Raft is easier to set up. Although Kafka has many admirers, even those
  admirers will (usually) admit that deploying a Kafka cluster and its ZooKeeper
  ensemble can be tricky, requiring a high level of expertise in Kafka
  infrastructure and settings. Additionally, there are many more components to
  manage with Kafka than with Raft, which means that there are more places where
  things can go wrong. Kafka also has its own versions, which must be coordinated
  with your orderers.
  **With Raft, everything is embedded into your ordering node**.

* Kafka and ZooKeeper are not designed to be run across large networks. While
  Kafka is CFT, it should be run in a tight group of hosts. This means that,
  practically speaking, you need to have one organization run the Kafka cluster.
  Given that, having ordering nodes run by different organizations when using Kafka
  (which Fabric supports) doesn't decentralize the nodes, because ultimately
  the nodes all go to a Kafka cluster which is under the control of a
  single organization. With Raft, each organization can have its own ordering
  nodes, participating in the ordering service, which leads to a more decentralized
  system.

* Raft is supported natively, whereas with Kafka, users are required to get the
  requisite images and learn how to use Kafka and ZooKeeper on their own. Likewise,
  support for Kafka-related issues is handled through [Apache](https://kafka.apache.org/),
  the open-source developer of Kafka, not Hechain. The Fabric Raft implementation,
  on the other hand, has been developed and will be supported within the Fabric
  developer community and its support apparatus.

* Where Kafka uses a pool of servers (called "Kafka brokers") and the admin of
  the orderer organization specifies how many nodes they want to use on a
  particular channel, Raft allows the users to specify which ordering nodes will
  be deployed to which channel. In this way, peer organizations can make sure
  that, if they also own an orderer, this node will be made a part of the ordering
  service of that channel, rather than trusting and depending on a central admin
  to manage the Kafka nodes.

* Raft is the first step toward Fabric's development of a byzantine fault tolerant
  (BFT) ordering service. As we'll see, some decisions in the development of
  Raft were driven by this.
  If you are interested in BFT, learning how to use
  Raft should ease the transition.

For all of these reasons, support for the Kafka-based ordering service has been
deprecated in Fabric v2.x.

Note: Similar to Solo and Kafka, a Raft ordering service can lose transactions
after acknowledgement of receipt has been sent to a client, for example, if the
leader crashes at approximately the same time as a follower provides
acknowledgement of receipt. Therefore, application clients should listen on peers
for transaction commit events regardless (to check for transaction validity), but
extra care should be taken to ensure that the client also gracefully tolerates a
timeout in which the transaction does not get committed in a configured timeframe.
Depending on the application, it may be desirable to resubmit the transaction or
collect a new set of endorsements upon such a timeout.

### Raft concepts

While Raft offers many of the same features as Kafka --- albeit in a simpler and
easier-to-use package --- it functions substantially differently under the covers
from Kafka and introduces a number of new concepts, or twists on existing
concepts, to Fabric.

**Log entry**. The primary unit of work in a Raft ordering service is a "log
entry", with the full sequence of such entries known as the "log". We consider
the log consistent if a majority (a quorum, in other words) of members agree on
the entries and their order, making the logs on the various orderers replicated.

**Consenter set**. The ordering nodes actively participating in the consensus
mechanism for a given channel and receiving replicated logs for the channel.

**Finite-State Machine (FSM)**. Every ordering node in Raft has an FSM, and
collectively they're used to ensure that the sequence of logs in the various
ordering nodes is deterministic (written in the same sequence).

**Quorum**.
Describes the minimum number of consenters that need to affirm a
proposal so that transactions can be ordered. For every consenter set, this is a
**majority** of nodes. In a cluster with five nodes, three must be available for
there to be a quorum. If a quorum of nodes is unavailable for any reason, the
ordering service cluster becomes unavailable for both read and write operations
on the channel, and no new logs can be committed.

**Leader**. This is not a new concept --- Kafka also uses leaders ---
but it's critical to understand that at any given time, a channel's consenter set
elects a single node to be the leader (we'll describe how this happens in Raft
later). The leader is responsible for ingesting new log entries, replicating
them to follower ordering nodes, and managing when an entry is considered
committed. This is not a special **type** of orderer. It is only a role that
an orderer may have at certain times, and then not others, as circumstances
determine.

**Follower**. Again, not a new concept, but what's critical to understand about
followers is that the followers receive the logs from the leader and
replicate them deterministically, ensuring that logs remain consistent. As
we'll see in our section on leader election, the followers also receive
"heartbeat" messages from the leader. In the event that the leader stops
sending those messages for a configurable amount of time, the followers will
initiate a leader election and one of them will be elected the new leader.

### Raft in a transaction flow

Every channel runs on a **separate** instance of the Raft protocol, which allows
each instance to elect a different leader. This configuration also allows further
decentralization of the service in use cases where clusters are made up of ordering
nodes controlled by different organizations.
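
Because each channel runs its own Raft instance, the quorum described under "Raft concepts" is computed per channel, from that channel's consenter set alone. The Go sketch below illustrates that majority arithmetic, plus the one-node-at-a-time membership rule stated next. The channel names, orderer names, and the `quorum`, `tolerableFailures`, and `singleNodeChange` helpers are hypothetical illustrations, not part of the orderer's API, and the membership check assumes consenter names are unique.

```go
package main

import "fmt"

// quorum returns the smallest majority of a consenter set of size n.
func quorum(n int) int {
	return n/2 + 1
}

// tolerableFailures is how many consenters can crash while a quorum remains.
func tolerableFailures(n int) int {
	return n - quorum(n)
}

// singleNodeChange reports whether next differs from current by adding or
// removing at most one consenter (names are assumed to be unique).
func singleNodeChange(current, next []string) bool {
	remaining := make(map[string]bool, len(current))
	for _, c := range current {
		remaining[c] = true
	}
	added := 0
	for _, c := range next {
		if remaining[c] {
			delete(remaining, c) // present in both sets
		} else {
			added++ // only in next
		}
	}
	removed := len(remaining) // only in current
	return added+removed <= 1
}

func main() {
	// Hypothetical channels, each with its own consenter set.
	channels := map[string][]string{
		"channel-a": {"orderer1", "orderer2", "orderer3"},
		"channel-b": {"orderer1", "orderer2", "orderer3", "orderer4", "orderer5"},
	}
	for _, name := range []string{"channel-a", "channel-b"} {
		n := len(channels[name])
		fmt.Printf("%s: %d consenters, quorum %d, tolerates %d crashed\n",
			name, n, quorum(n), tolerableFailures(n))
	}

	current := channels["channel-a"]
	grown := append(append([]string{}, current...), "orderer4")
	swapped := []string{"orderer1", "orderer2", "orderer4"}
	fmt.Println("add one node:", singleNodeChange(current, grown))
	fmt.Println("swap a node in one step:", singleNodeChange(current, swapped))
}
```

The three- and five-node results match the figures given earlier (one and two tolerated crashes). A four-node consenter set still needs three nodes for a quorum and therefore tolerates only one crash, which is why odd-sized consenter sets are generally preferred. Swapping a node counts as two changes, so it has to be done as a removal followed by an addition (or the reverse).
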

Ordering nodes can be added or removed from a channel as needed, as long as only a
single node is added or removed at a time. While running a separate Raft instance
per channel creates more overhead in the form of redundant heartbeat messages and
goroutines, it lays necessary groundwork for BFT.

In Raft, transactions (in the form of proposals or configuration updates) are
automatically routed by the ordering node that receives the transaction to the
current leader of that channel. This means that peers and applications do not
need to know who the leader node is at any particular time. Only the ordering
nodes need to know.

When the orderer validation checks have been completed, the transactions are
ordered, packaged into blocks, consented on, and distributed, as described in
phase two of our transaction flow.

### Architectural notes

#### How leader election works in Raft

Although the process of electing a leader happens within the orderer's internal
processes, it's worth noting how the process works.

Raft nodes are always in one of three states: follower, candidate, or leader.
All nodes initially start out as a **follower**. In this state, they can accept
log entries from a leader (if one has been elected), or cast votes for leader.
If no log entries or heartbeats are received for a set amount of time (for
example, five seconds), nodes self-promote to the **candidate** state. In the
candidate state, nodes request votes from other nodes. If a candidate receives a
quorum of votes, then it is promoted to a **leader**. The leader must accept new
log entries and replicate them to the followers.

For a visual representation of how the leader election process works, check out
[The Secret Lives of Data](http://thesecretlivesofdata.com/raft/).

#### Snapshots

If an ordering node goes down, how does it get the logs it missed when it is
restarted?

While it's possible to keep all logs indefinitely, in order to save disk space,
Raft uses a process called "snapshotting", in which users can define how many
bytes of data will be kept in the log. This amount of data will correspond to a
certain number of blocks, which depends on the amount of data in the blocks
(note that only full blocks are stored in a snapshot).

For example, let's say lagging replica `R1` was just reconnected to the network.
Its latest block is `100`. Leader `L` is at block `196`, and is configured to
snapshot at an amount of data that in this case represents 20 blocks. `R1` would
therefore receive block `180` from `L` and then make a `Deliver` request for
blocks `101` to `180`. Blocks `181` to `196` would then be replicated to `R1`
through the normal Raft protocol.

### Kafka (deprecated in v2.x)

The other crash fault tolerant ordering service supported by Fabric is an
adaptation of the Kafka distributed streaming platform for use as a cluster of
ordering nodes. You can read more about Kafka at the [Apache Kafka Web site](https://kafka.apache.org/intro),
but at a high level, Kafka uses the same conceptual "leader and follower"
configuration used by Raft, in which transactions (which Kafka calls "messages")
are replicated from the leader node to the follower nodes. In the event the
leader node goes down, one of the followers becomes the leader and ordering can
continue, ensuring fault tolerance, just as with Raft.

The management of the Kafka cluster, including the coordination of tasks,
cluster membership, access control, and controller election, among others, is
handled by a ZooKeeper ensemble and its related APIs.

Kafka clusters and ZooKeeper ensembles are notoriously tricky to set up, so our
documentation assumes a working knowledge of Kafka and ZooKeeper.
If you decide
to use Kafka without having this expertise, you should complete, *at a minimum*,
the first six steps of the [Kafka Quickstart guide](https://kafka.apache.org/quickstart) before experimenting with the
Kafka-based ordering service. You can also consult
[this sample configuration file](https://github.com/hechain20/hechain/blob/release-1.1/bddtests/dc-orderer-kafka.yml)
for a brief explanation of the sensible defaults for Kafka and ZooKeeper.

To learn how to bring up a Kafka-based ordering service, check out [our documentation on Kafka](../kafka.html).

<!--- Licensed under Creative Commons Attribution 4.0 International License
https://creativecommons.org/licenses/by/4.0/) -->