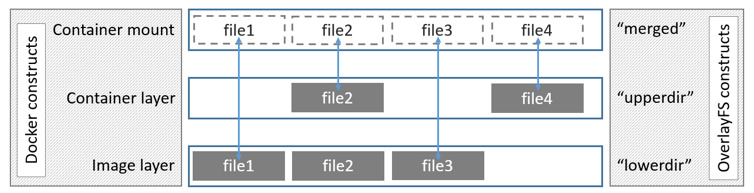

<!--[metadata]>
+++
title = "OverlayFS storage in practice"
description = "Learn how to optimize your use of OverlayFS driver."
keywords = ["container, storage, driver, OverlayFS "]
[menu.main]
parent = "engine_driver"
+++
<![end-metadata]-->

# Docker and OverlayFS in practice

OverlayFS is a modern *union filesystem* that is similar to AUFS. In comparison
to AUFS, OverlayFS:

* has a simpler design
* has been in the mainline Linux kernel since version 3.18
* is potentially faster

As a result, OverlayFS is rapidly gaining popularity in the Docker community
and is seen by many as a natural successor to AUFS. As promising as OverlayFS
is, it is still relatively young. Therefore, exercise caution before using it
in production Docker environments.

Docker's `overlay` storage driver leverages several OverlayFS features to build
and manage the on-disk structures of images and containers.

> **Note**: Since it was merged into the mainline kernel, the OverlayFS *kernel
> module* was renamed from "overlayfs" to "overlay". As a result, you may see the
> two terms used interchangeably in some documentation. However, this document
> uses "OverlayFS" to refer to the overall filesystem, and `overlay` to refer
> to Docker's storage driver.

## Image layering and sharing with OverlayFS

OverlayFS takes two directories on a single Linux host, layers one on top of
the other, and provides a single unified view. These directories are often
referred to as *layers* and the technology used to layer them is known as a
*union mount*. The OverlayFS terminology is "lowerdir" for the bottom layer and
"upperdir" for the top layer. The unified view is exposed through its own
directory called "merged".
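The same lowerdir/upperdir/merged arrangement can be reproduced by hand, outside Docker. The following is a minimal sketch, not Docker's own code: the scratch directory `/tmp/ovl-demo`, the file `hello.txt`, and the `BASE` and `DRY_RUN` variables are hypothetical choices for illustration. OverlayFS also needs a "workdir", an empty directory on the same filesystem as the upperdir. By default the script only prints the `mount` command it would run; with `DRY_RUN=0` and root privileges it performs the mount and reads the shared file through "merged".

```shell
#!/usr/bin/env bash
# Sketch: assemble a two-layer OverlayFS mount by hand (not Docker's own code).
# /tmp/ovl-demo, hello.txt, BASE and DRY_RUN are hypothetical illustration choices.
set -euo pipefail

base=${BASE:-/tmp/ovl-demo}
mkdir -p "$base/lower" "$base/upper" "$base/work" "$base/merged"

# The same file name in both layers: the upperdir copy should win in "merged".
echo "from lower" > "$base/lower/hello.txt"
echo "from upper" > "$base/upper/hello.txt"

# Same option names as in Docker's `mount | grep overlay` output.
opts="lowerdir=$base/lower,upperdir=$base/upper,workdir=$base/work"
cmd=(mount -t overlay overlay -o "$opts" "$base/merged")

if [ "${DRY_RUN:-1}" = 1 ]; then
  # Default: only show the command; no root privileges needed.
  printf '%s\n' "${cmd[*]}"
else
  # Needs root and a kernel with the overlay module (3.18 or later).
  "${cmd[@]}"
  cat "$base/merged/hello.txt"   # should print "from upper"
  umount "$base/merged"
fi
```

Because `hello.txt` exists in both layers, reading it through "merged" should return the upperdir copy, which is the same rule that lets a container's files obscure identically named files in its image.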

The diagram below shows how a Docker image and a Docker container are layered.
The image layer is the "lowerdir" and the container layer is the "upperdir".
The unified view is exposed through a directory called "merged", which is
effectively the container's mount point. The diagram shows how Docker constructs
map to OverlayFS constructs.

Notice how the image layer and container layer can contain the same files. When
this happens, the files in the container layer ("upperdir") are dominant and
obscure the existence of the same files in the image layer ("lowerdir"). The
container mount ("merged") presents the unified view.

OverlayFS only works with two layers. This means that multi-layered images
cannot be implemented as multiple OverlayFS layers. Instead, each image layer
is implemented as its own directory under `/var/lib/docker/overlay`.
Hard links are then used as a space-efficient way to reference data shared with
lower layers. As of Docker 1.10, image layer IDs no longer correspond to
directory names in `/var/lib/docker/`.

To create a container, the `overlay` driver combines the directory representing
the image's top layer with a new directory for the container. The image's top
layer is the "lowerdir" in the overlay and is read-only. The new directory for
the container is the "upperdir" and is writable.

## Example: Image and container on-disk constructs

The following `docker pull` command shows a Docker host downloading a
Docker image comprising four layers.

    $ sudo docker pull ubuntu
    Using default tag: latest
    latest: Pulling from library/ubuntu
    8387d9ff0016: Pull complete
    3b52deaaf0ed: Pull complete
    4bd501fad6de: Pull complete
    a3ed95caeb02: Pull complete
    Digest: sha256:457b05828bdb5dcc044d93d042863fba3f2158ae249a6db5ae3934307c757c54
    Status: Downloaded newer image for ubuntu:latest

Each image layer has its own directory under `/var/lib/docker/overlay/`. This
is where the contents of each image layer are stored.

The output of the command below shows the four directories that store the
contents of each image layer just pulled. However, as can be seen, the image
layer IDs do not match the directory names in `/var/lib/docker/overlay`. This
is normal behavior in Docker 1.10 and later.

    $ ls -l /var/lib/docker/overlay/
    total 24
    drwx------ 3 root root 4096 Oct 28 11:02 1d073211c498fd5022699b46a936b4e4bdacb04f637ad64d3475f558783f5c3e
    drwx------ 3 root root 4096 Oct 28 11:02 5a4526e952f0aa24f3fcc1b6971f7744eb5465d572a48d47c492cb6bbf9cbcda
    drwx------ 5 root root 4096 Oct 28 11:06 99fcaefe76ef1aa4077b90a413af57fd17d19dce4e50d7964a273aae67055235
    drwx------ 3 root root 4096 Oct 28 11:01 c63fb41c2213f511f12f294dd729b9903a64d88f098c20d2350905ac1fdbcbba

The image layer directories contain the files unique to that layer as well as
hard links to the data that is shared with lower layers. This allows for
efficient use of disk space.

Containers also exist on-disk in the Docker host's filesystem under
`/var/lib/docker/overlay/`. If you inspect the directory relating to a running
container using the `ls -l` command, you find the following file and
directories.

    $ ls -l /var/lib/docker/overlay/<directory-of-running-container>
    total 16
    -rw-r--r-- 1 root root 64 Oct 28 11:06 lower-id
    drwxr-xr-x 1 root root 4096 Oct 28 11:06 merged
    drwxr-xr-x 4 root root 4096 Oct 28 11:06 upper
    drwx------ 3 root root 4096 Oct 28 11:06 work

These four filesystem objects are all artifacts of the `overlay` driver. The
"lower-id" file contains the ID of the top layer of the image the container is
based on. The directory for that layer is used as the container's "lowerdir".

    $ cat /var/lib/docker/overlay/73de7176c223a6c82fd46c48c5f152f2c8a7e49ecb795a7197c3bb795c4d879e/lower-id
    1d073211c498fd5022699b46a936b4e4bdacb04f637ad64d3475f558783f5c3e

The "upper" directory is the container's read-write layer. Any changes made to
the container are written to this directory.

The "merged" directory is effectively the container's mount point. This is where
the unified view of the image ("lowerdir") and container ("upperdir") is
exposed. Any changes written to the container are immediately reflected in this
directory.

The "work" directory is required for OverlayFS to function. It is used for
things such as *copy_up* operations.

You can verify all of these constructs from the output of the `mount` command.
(Ellipses and line breaks are used in the output below to enhance readability.)

    $ mount | grep overlay
    overlay on /var/lib/docker/overlay/73de7176c223.../merged
    type overlay (rw,relatime,lowerdir=/var/lib/docker/overlay/1d073211c498.../root,
    upperdir=/var/lib/docker/overlay/73de7176c223.../upper,
    workdir=/var/lib/docker/overlay/73de7176c223.../work)

The output reflects that the overlay is mounted as read-write ("rw").

## Container reads and writes with overlay

Consider three scenarios where a container opens a file for read access with
overlay.

- **The file does not exist in the container layer**.
  If a container opens a file for read access and the file does not already
  exist in the container ("upperdir"), it is read from the image ("lowerdir").
  This should incur very little performance overhead.

- **The file only exists in the container layer**. If a container opens a file
  for read access and the file exists in the container ("upperdir") and not in
  the image ("lowerdir"), it is read directly from the container.

- **The file exists in the container layer and the image layer**. If a
  container opens a file for read access and the file exists in the image layer
  and the container layer, the file's version in the container layer is read.
  This is because files in the container layer ("upperdir") obscure files with
  the same name in the image layer ("lowerdir").

Consider some scenarios where files in a container are modified.

- **Writing to a file for the first time**. The first time a container writes
  to an existing file, that file does not exist in the container ("upperdir").
  The `overlay` driver performs a *copy_up* operation to copy the file from the
  image ("lowerdir") to the container ("upperdir"). The container then writes the
  changes to the new copy of the file in the container layer.

  However, OverlayFS works at the file level, not the block level. This means
  that all OverlayFS copy_up operations copy entire files, even if the file is
  very large and only a small part of it is being modified. This can have a
  noticeable impact on container write performance. However, two things are
  worth noting:

  * The copy_up operation only occurs the first time any given file is
    written to. Subsequent writes to the same file operate against the copy of
    the file already copied up to the container.

  * OverlayFS only works with two layers.
    This means that performance should be better than AUFS, which can suffer
    noticeable latencies when searching for files in images with many layers.

- **Deleting files and directories**. When files are deleted within a container,
  a *whiteout* file is created in the container's "upperdir". The version of the
  file in the image layer ("lowerdir") is not deleted. However, the whiteout file
  in the container obscures it.

  Deleting a directory in a container results in an *opaque directory* being
  created in the "upperdir". This has the same effect as a whiteout file and
  effectively masks the existence of the directory in the image's "lowerdir".

## Configure Docker with the overlay storage driver

To configure Docker to use the overlay storage driver, your Docker host must be
running version 3.18 of the Linux kernel (preferably newer) with the overlay
kernel module loaded. OverlayFS can operate on top of most supported Linux
filesystems. However, ext4 is currently recommended for use in production
environments.

The following procedure shows you how to configure your Docker host to use
OverlayFS. The procedure assumes that the Docker daemon is in a stopped state.

> **Caution:** If you have already run the Docker daemon on your Docker host
> and have images you want to keep, `push` them to Docker Hub or your private
> Docker Trusted Registry before attempting this procedure.

1. If it is running, stop the Docker daemon.

2. Verify your kernel version and that the overlay kernel module is loaded.

        $ uname -r
        3.19.0-21-generic

        $ lsmod | grep overlay
        overlay

3. Start the Docker daemon with the `overlay` storage driver.

        $ docker daemon --storage-driver=overlay &
        [1] 29403
        root@ip-10-0-0-174:/home/ubuntu# INFO[0000] Listening for HTTP on unix (/var/run/docker.sock)
        INFO[0000] Option DefaultDriver: bridge
        INFO[0000] Option DefaultNetwork: bridge
        <output truncated>

    Alternatively, you can force the Docker daemon to automatically start with
    the `overlay` driver by editing the Docker config file and adding the
    `--storage-driver=overlay` flag to the `DOCKER_OPTS` line. Once this option
    is set, you can start the daemon using normal startup scripts without having
    to manually pass in the `--storage-driver` flag.

4. Verify that the daemon is using the `overlay` storage driver.

        $ docker info
        Containers: 0
        Images: 0
        Storage Driver: overlay
        Backing Filesystem: extfs
        <output truncated>

    Notice that the *Backing filesystem* in the output above is showing as
    `extfs`. Multiple backing filesystems are supported, but `extfs` (ext4) is
    recommended for production use cases.

Your Docker host is now using the `overlay` storage driver. If you run the
`mount` command, you'll find Docker has automatically created the `overlay`
mount with the required "lowerdir", "upperdir", "merged" and "workdir"
constructs.

## OverlayFS and Docker Performance

As a general rule, the `overlay` driver should be fast, almost certainly faster
than `aufs` and `devicemapper`. In certain circumstances it may also be faster
than `btrfs`. That said, there are a few things to be aware of relative to the
performance of Docker using the `overlay` storage driver.

- **Page Caching**. OverlayFS supports page cache sharing. This means multiple
  containers accessing the same file can share a single page cache entry (or
  entries). This makes the `overlay` driver efficient with memory and a good
  option for PaaS and other high-density use cases.

- **copy_up**.
  As with AUFS, OverlayFS has to perform copy-up operations any
  time a container writes to a file for the first time. This can insert latency
  into the write operation, especially if the file being copied up is large.
  However, once the file has been copied up, all subsequent writes to that
  file occur without the need for further copy-up operations.

  The OverlayFS copy_up operation should be faster than the same operation
  with AUFS. This is because AUFS supports more layers than OverlayFS, and it is
  possible to incur far larger latencies if searching through many AUFS layers.

- **RPMs and Yum**. OverlayFS only implements a subset of the POSIX standards.
  This can result in certain OverlayFS operations breaking POSIX standards. One
  such operation is the *copy-up* operation. Therefore, using `yum` inside of a
  container on a Docker host using the `overlay` storage driver is unlikely to
  work without implementing workarounds.

- **Inode limits**. Use of the `overlay` storage driver can cause excessive
  inode consumption. This is especially so as the number of images and containers
  on the Docker host grows. A Docker host with a large number of images and lots
  of started and stopped containers can quickly run out of inodes.

  Unfortunately, you can only specify the number of inodes in a filesystem at
  the time of creation. For this reason, you may wish to consider putting
  `/var/lib/docker` on a separate device with its own filesystem, or manually
  specifying the number of inodes when creating the filesystem.

The following generic performance best practices also apply to OverlayFS.

- **Solid State Devices (SSD)**. For best performance, it is always a good idea
  to use fast storage media such as solid state devices (SSD).

- **Use Data Volumes**. Data volumes provide the best and most predictable
  performance.
  This is because they bypass the storage driver and do not incur
  any of the potential overheads introduced by thin provisioning and
  copy-on-write. For this reason, you should place heavy write workloads on data
  volumes.
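Returning to the **Inode limits** point above, inode exhaustion can be watched with standard tools before it becomes a problem. The script below is a minimal sketch: it reads `df -iP` for the filesystem holding a directory (`/var/lib/docker` by default, or the first argument) and reports the percentage of inodes in use. The `inode_use_pct` helper name and the 90% warning threshold are hypothetical choices, not Docker recommendations.

```shell
#!/usr/bin/env bash
# Sketch: report inode usage for the filesystem that holds a directory.
# The helper name, default path and 90% threshold are illustrative assumptions.
set -euo pipefail

inode_use_pct() {
  # df -iP prints a header, then: Filesystem Inodes IUsed IFree IUse% Mounted-on
  df -iP "$1" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

dir=${1:-/var/lib/docker}
[ -d "$dir" ] || dir=/   # fall back to / when the directory does not exist
pct=$(inode_use_pct "$dir")

# Some filesystems (btrfs, for example) report "-" instead of a percentage.
if [ "$pct" != "-" ] && [ "$pct" -ge 90 ]; then
  echo "WARNING: $dir is at ${pct}% inode usage"
else
  echo "$dir inode usage: ${pct}%"
fi
```

If you do create a dedicated ext4 filesystem for `/var/lib/docker`, `mkfs.ext4` accepts `-N` to set the number of inodes explicitly instead of relying on the default calculation.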