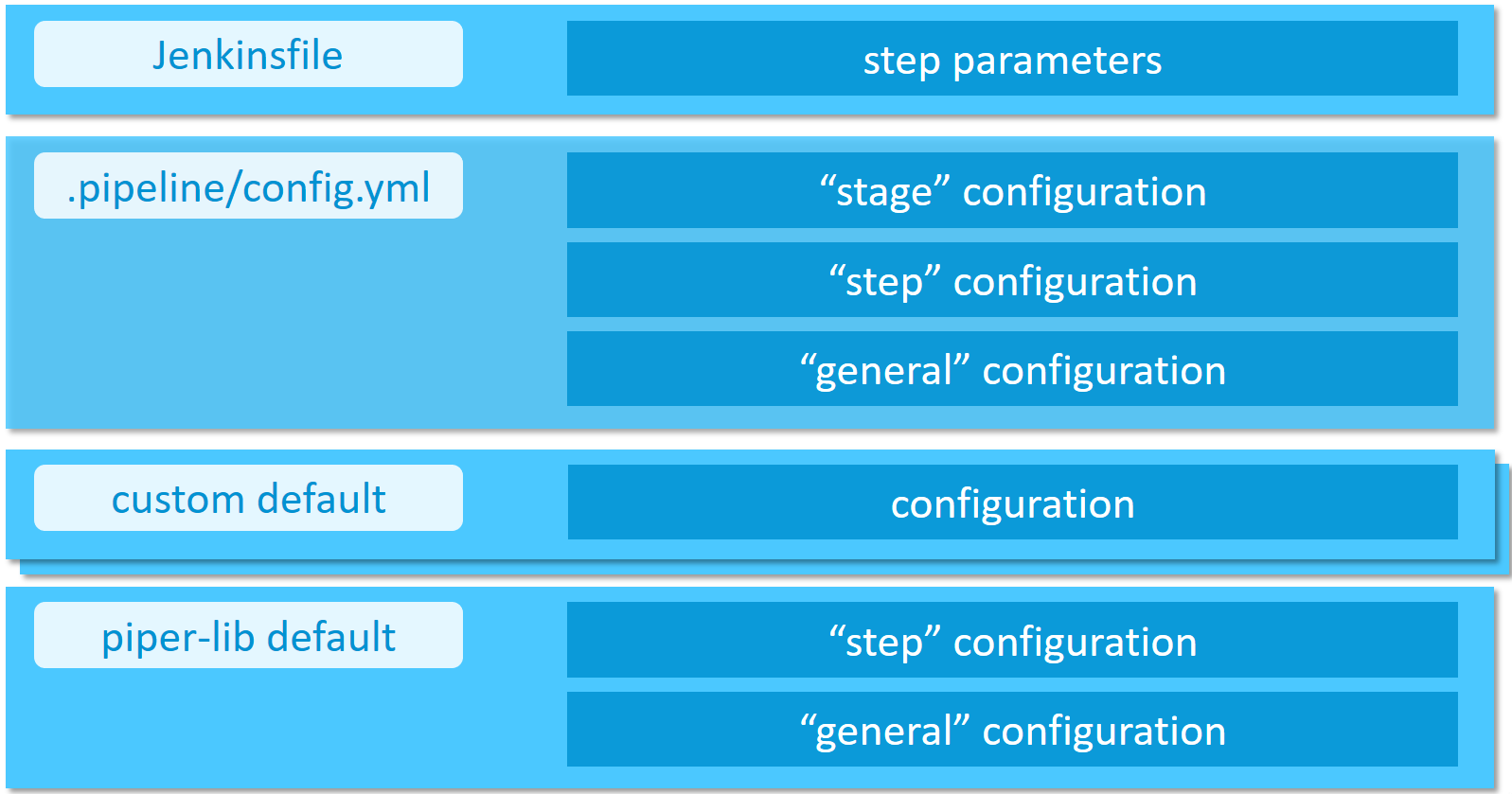

# Configuration

Configure your project through a YAML file located at `.pipeline/config.yml` in the **master branch** of your source code repository.

Your configuration inherits from the default configuration located at [https://github.com/SAP/jenkins-library/blob/master/resources/default_pipeline_environment.yml](https://github.com/SAP/jenkins-library/blob/master/resources/default_pipeline_environment.yml).

!!! caution "Adding custom parameters"
    Adding custom parameters to the configuration is at your own risk.
    We may introduce new parameters at any time, and they may clash with your custom parameters.

Project "Piper" steps and templates are configured hierarchically. The following configuration sources are listed from highest to lowest precedence:

1. Directly passed step parameters always take precedence over other configuration values and defaults.
1. Stage configuration parameters define a Jenkins pipeline stage-dependent set of parameters (e.g. deployment options for the `Acceptance` stage).
1. Step configuration defines how steps behave in general (e.g. step `cloudFoundryDeploy`).
1. General configuration parameters define parameters that are available across step boundaries.
1. Custom default configuration is provided by the user through a reference in the `customDefaults` parameter of the project configuration.
1. Default configuration comes with the project "Piper" library and is always available.

## Collecting telemetry data

To improve this Jenkins library, we collect telemetry data.
The data is sent using [`com.sap.piper.pushToSWA`](https://github.com/SAP/jenkins-library/blob/master/src/com/sap/piper/Utils.groovy).

The collected data is non-personal and includes, for example:

* Hashed job URL, e.g. `4944f745e03f5f79daf0001eec9276ce351d3035`. The hash is calculated on your Jenkins server, so no original values are transmitted.
* Name of the executed library step, e.g. `artifactSetVersion`
* Certain parameters of the executed steps, e.g. `buildTool=maven`

**We store the telemetry data for no longer than 6 months on the premises of SAP SE.**

!!! note "Disable collection of telemetry data"
    If you do not want to send telemetry data, you can easily deactivate this in either of the following two ways:

    1. General deactivation in your `.pipeline/config.yml` file by setting the configuration parameter `general -> collectTelemetryData: false` (the default setting can be found in the [library defaults](https://github.com/SAP/jenkins-library/blob/master/resources/default_pipeline_environment.yml)).

        **Please note: this only takes effect in all steps if you run `setupCommonPipelineEnvironment` at the beginning of your pipeline.**

    2. Individual deactivation per step by passing the parameter `collectTelemetryData: false`, e.g. `setVersion script:this, collectTelemetryData: false`

## Example configuration

```yaml
general:
  gitSshKeyCredentialsId: GitHub_Test_SSH

steps:
  cloudFoundryDeploy:
    deployTool: 'cf_native'
    cloudFoundry:
      org: 'testOrg'
      space: 'testSpace'
      credentialsId: 'MY_CF_CREDENTIALSID_IN_JENKINS'
  newmanExecute:
    newmanCollection: 'myNewmanCollection.file'
    newmanEnvironment: 'myNewmanEnvironment'
    newmanGlobals: 'myNewmanGlobals'
```

## Collecting telemetry and logging data for Splunk

Splunk lets you analyze any kind of logging information and visualize the retrieved information in dashboards.
To support this, project "Piper" can send telemetry information, and in case of a failed step also logging information, to a Splunk HTTP Event Collector (HEC) endpoint.
The following data is sent to the endpoint if the mechanism is activated:

* Hashed pipeline URL
* Hashed build URL
* StageName
* StepName
* ExitCode
* Duration (of each step)
* ErrorCode
* ErrorCategory
* CorrelationID (not hashed)
* CommitHash (head commit hash of the current build)
* Branch
* GitOwner
* GitRepository

The information is sent to the Splunk endpoint specified in the configuration file. By default, the Splunk mechanism is deactivated. It is only activated if you add a `hooks` section with a `splunk` entry to your configuration, as in the following example:

```yaml
general:
  gitSshKeyCredentialsId: GitHub_Test_SSH

steps:
  cloudFoundryDeploy:
    deployTool: 'cf_native'
    cloudFoundry:
      org: 'testOrg'
      space: 'testSpace'
      credentialsId: 'MY_CF_CREDENTIALSID_IN_JENKINS'
hooks:
  splunk:
    dsn: 'YOUR SPLUNK HEC ENDPOINT'
    token: 'YOURTOKEN'
    index: 'SPLUNK INDEX'
    sendLogs: true
```

`sendLogs` is a boolean. If it is set to `true`, the Splunk hook sends the collected logs when the step fails.
If no failure occurs, no logs are sent.

### What the sent data looks like

In case of a failure, Piper sends the collected log messages in the field `messages` and the telemetry information in the field `telemetry`. By default, Piper sends the log messages in batches of `1000` messages. For example, if a step that created 5,000 log messages fails, Piper sends five payloads, each containing one batch of log messages together with the telemetry information.
```json
{
  "messages": [
    {
      "time": "2021-04-28T17:59:19.9376454Z",
      "message": "Project example pipeline exists...",
      "data": {
        "library": "",
        "stepName": "checkmarxExecuteScan"
      }
    }
  ],
  "telemetry": {
    "PipelineUrlHash": "73ece565feca07fa34330c2430af2b9f01ba5903",
    "BuildUrlHash": "ec0aada9cc310547ca2938d450f4a4c789dea886",
    "StageName": "",
    "StepName": "checkmarxExecuteScan",
    "ExitCode": "1",
    "Duration": "52118",
    "ErrorCode": "1",
    "ErrorCategory": "undefined",
    "CorrelationID": "https://example-jaasinstance.corp/job/myApp/job/microservice1/job/master/10/",
    "CommitHash": "961ed5cd98fb1e37415a91b46a5b9bdcef81b002",
    "Branch": "master",
    "GitOwner": "piper",
    "GitRepository": "piper-splunk"
  }
}
```

## Access to the configuration from custom scripts

The configuration is loaded into `commonPipelineEnvironment` by the step [setupCommonPipelineEnvironment](steps/setupCommonPipelineEnvironment.md).

You can access the configuration values via `commonPipelineEnvironment.configuration`, which returns the complete configuration map.

For example, the following expression reads `gitSshKeyCredentialsId` from the `general` section:

```groovy
commonPipelineEnvironment.configuration.general.gitSshKeyCredentialsId
```

## Access to configuration in custom library steps

Within library steps, the `ConfigurationHelper` object is used to access the configuration.

You can see how it is used in all project "Piper" steps, for example in [newmanExecute](https://github.com/SAP/jenkins-library/blob/master/vars/newmanExecute.groovy#L23).

## Custom default configuration

For projects that consist of multiple repositories (for example, microservices), you might want to provide custom default configurations.
To do so, create a YAML file that is accessible from your CI/CD environment and reference it in your project configuration.
For example, the custom default configuration can be stored in a GitHub repository and accessed via the "raw" URL:

```yaml
customDefaults: ['https://my.github.local/raw/someorg/custom-defaults/master/backend-service.yml']
general:
  ...
```

Note that the parameter `customDefaults` must be a list of strings and must be defined as a separate section of the project configuration.
In addition, the order of the items in the list determines their precedence: the last item of the `customDefaults` list has the highest precedence.

Make sure that the HTTP response body is valid YAML, because the pipeline attempts to parse it.

Anonymous read access to the `custom-defaults` repository is required.

The custom default configuration is merged with the project's `.pipeline/config.yml`.
Note that the project's configuration takes precedence, so you can override values from the custom default configuration in your project's local configuration.
This is useful for providing a default value that needs to be changed in only a few projects.
An overview of the configuration hierarchy is given at the beginning of this page.

Different types of projects might require different custom default configurations.
For example, not every project may need a certain code check (such as WhiteSource) to be active.
You can achieve this by keeping multiple YAML files in the _custom-defaults_ repository.
In each project, configure the URL of the respective configuration file as described above.
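To illustrate how these precedence rules combine, here is a sketch of a project configuration for a backend service that references two custom default files. The `common.yml` file and the overridden values are hypothetical and only serve as an illustration; `backend-service.yml` is the file from the example above. Because `backend-service.yml` is listed last, its values take precedence over those in `common.yml`, and values set directly in the project configuration take precedence over both.

```yaml
# Hypothetical .pipeline/config.yml of a backend service (illustrative values only).
# customDefaults is a list of strings; later entries take precedence over earlier ones.
customDefaults:
  - 'https://my.github.local/raw/someorg/custom-defaults/master/common.yml'          # hypothetical shared defaults
  - 'https://my.github.local/raw/someorg/custom-defaults/master/backend-service.yml'  # overrides values from common.yml
general:
  # Values set here override the same values from both custom default files.
  collectTelemetryData: false
steps:
  cloudFoundryDeploy:
    cloudFoundry:
      space: 'testSpace'  # illustrative local override of a space set in the custom defaults
```

The block-style list used here is equivalent in YAML to the flow-style `['...']` list shown earlier, so both notations satisfy the requirement that `customDefaults` be a list of strings.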