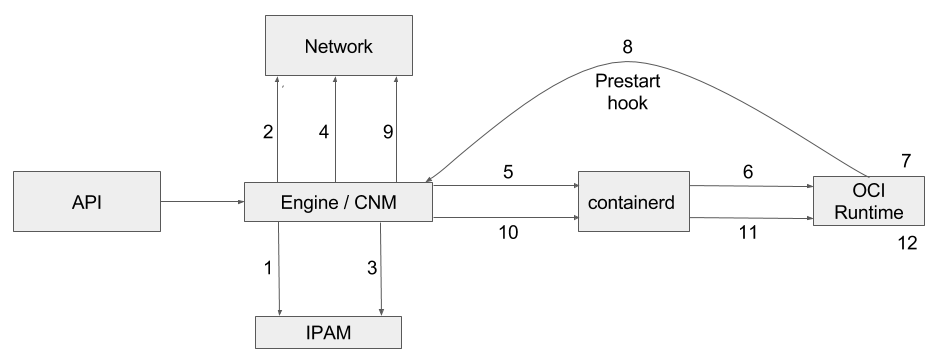

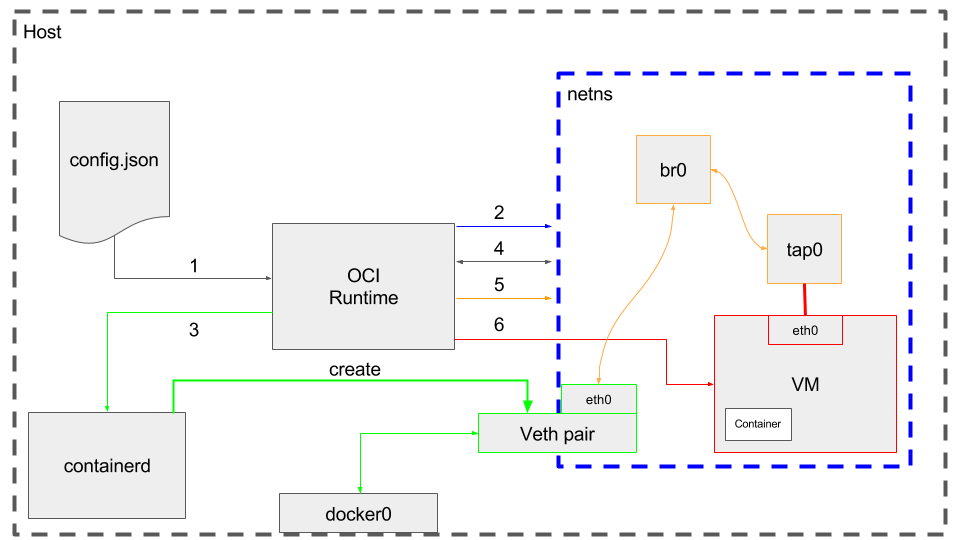

Table of Contents
=================

* [What is it?](#what-is-it)
* [Background](#background)
* [Out of scope](#out-of-scope)
   * [virtcontainers and Kubernetes CRI](#virtcontainers-and-kubernetes-cri)
* [Design](#design)
   * [Sandboxes](#sandboxes)
   * [Hypervisors](#hypervisors)
   * [Agents](#agents)
   * [Shim](#shim)
   * [Proxy](#proxy)
* [API](#api)
   * [Sandbox API](#sandbox-api)
   * [Container API](#container-api)
* [Networking](#networking)
   * [CNM](#cnm)
* [Storage](#storage)
   * [How to check if container uses devicemapper block device as its rootfs](#how-to-check-if-container-uses-devicemapper-block-device-as-its-rootfs)
* [Devices](#devices)
   * [How to pass a device using VFIO-passthrough](#how-to-pass-a-device-using-vfio-passthrough)
* [Developers](#developers)
* [Persistent storage plugin support](#persistent-storage-plugin-support)
* [Experimental features](#experimental-features)

# What is it?

`virtcontainers` is a Go library that can be used to build hardware-virtualized container
runtimes.

# Background

The few existing VM-based container runtimes (Clear Containers, runV, rkt's
KVM stage 1) all share the same hardware virtualization semantics but use different
code bases to implement them. The goal of `virtcontainers` is to consolidate this code into
a common Go library.

Ideally, VM-based container runtime implementations would become translation
layers from the runtime specification they implement (e.g. the [OCI runtime-spec][oci]
or the [Kubernetes CRI][cri]) to the `virtcontainers` API.

`virtcontainers` was used as a foundational package for the [Clear Containers][cc] [runtime][cc-runtime] implementation.

[oci]: https://github.com/opencontainers/runtime-spec
[cri]: https://git.k8s.io/community/contributors/devel/sig-node/container-runtime-interface.md
[cc]: https://github.com/clearcontainers/
[cc-runtime]: https://github.com/clearcontainers/runtime/

# Out of scope

Implementing a container runtime is out of scope for this project. Any
tools or executables in this repository are provided only for demonstration or
testing purposes.

## virtcontainers and Kubernetes CRI

The `virtcontainers` API is loosely inspired by the Kubernetes [CRI][cri] because
we believe it provides the right level of abstraction for containerized sandboxes.
However, despite the API similarities between the two projects, the goal of
`virtcontainers` is _not_ to build a CRI implementation, but instead to provide a
generic, runtime-specification-agnostic, hardware-virtualized containers
library that other projects could leverage to implement CRI themselves.

# Design

## Sandboxes

The `virtcontainers` execution unit is a _sandbox_, i.e. `virtcontainers` users start sandboxes in which
containers will run.

`virtcontainers` creates a sandbox by starting a virtual machine and setting the sandbox
up within that environment. Starting a sandbox means launching all of its containers within
the VM sandbox runtime environment.

## Hypervisors

The `virtcontainers` package relies on hypervisors to start and stop the virtual machines in which
sandboxes run. A hypervisor is defined by an implementation of the hypervisor interface,
and the default implementation is the QEMU one.
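
The following Go sketch illustrates the interface-based design described above: sandbox code talks only to an interface, and a backend is selected by name, defaulting to QEMU. All names here (`vmm`, `fakeQemu`, `newVMM`) are hypothetical and much simpler than the real `virtcontainers` hypervisor interface; the fake backend only tracks state in memory and does not launch a VM.

```go
package main

import (
	"errors"
	"fmt"
)

// vmm is a hypothetical, heavily simplified stand-in for the hypervisor
// interface: a backend only has to start and stop a sandbox VM.
type vmm interface {
	StartVM(sandboxID string) error
	StopVM(sandboxID string) error
}

// fakeQemu is a hypothetical default backend. It only records which
// sandboxes have a "running" VM; a real backend would manage a QEMU process.
type fakeQemu struct {
	running map[string]bool
}

func newFakeQemu() *fakeQemu {
	return &fakeQemu{running: make(map[string]bool)}
}

func (q *fakeQemu) StartVM(id string) error {
	if q.running[id] {
		return fmt.Errorf("sandbox %s: VM already running", id)
	}
	q.running[id] = true
	return nil
}

func (q *fakeQemu) StopVM(id string) error {
	if !q.running[id] {
		return errors.New("sandbox " + id + ": VM not running")
	}
	delete(q.running, id)
	return nil
}

// newVMM selects a backend by name; an empty name means the default (QEMU).
func newVMM(kind string) (vmm, error) {
	switch kind {
	case "", "qemu":
		return newFakeQemu(), nil
	default:
		return nil, fmt.Errorf("unsupported hypervisor %q", kind)
	}
}

func main() {
	h, err := newVMM("")
	if err != nil {
		panic(err)
	}
	fmt.Println(h.StartVM("sb1") == nil) // prints true
	fmt.Println(h.StartVM("sb1") != nil) // prints true: VM already running
	fmt.Println(h.StopVM("sb1") == nil)  // prints true
}
```

With this shape, supporting another hypervisor only means adding a type that satisfies the interface and a case in `newVMM`; callers are unchanged.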

### Update cloud-hypervisor client code

See [docs](pkg/cloud-hypervisor/README.md).

## Agents

During the lifecycle of a container, the runtime running on the host needs to interact with
the virtual machine guest OS in order to start new commands to be executed as part of a given
container workload, set new networking routes or interfaces, fetch a container's standard
output or error output, and so on.
There are many existing and potential solutions to this problem, and `virtcontainers` abstracts
them through the Agent interface.

## Shim

In some cases the runtime will need a translation shim between the higher-level container
stack (e.g. Docker) and the virtual machine holding the container workload. This is needed
for container stacks that make strong assumptions about the nature of the containers they are
monitoring. When they assume containers are simply regular host processes, a shim
layer is needed to translate host-specific semantics into, for example, agent-controlled virtual
machine ones.

## Proxy

When hardware-virtualized containers have limited I/O multiplexing capabilities,
runtimes may rely on an external host proxy to support cases where several
runtime instances are talking to the same container.

# API

The high-level `virtcontainers` API is as follows:

## Sandbox API

* `CreateSandbox(sandboxConfig SandboxConfig)` creates a Sandbox.
The virtual machine is started and the Sandbox is prepared.

* `DeleteSandbox(sandboxID string)` deletes a Sandbox.
The virtual machine is shut down and all information related to the Sandbox is removed.
The function will fail if the Sandbox is running. In that case `StopSandbox()` has to be called first.

* `StartSandbox(sandboxID string)` starts an already created Sandbox.
The Sandbox and all its containers are started.

* `RunSandbox(sandboxConfig SandboxConfig)` creates and starts a Sandbox.
This performs `CreateSandbox()` + `StartSandbox()`.

* `StopSandbox(sandboxID string)` stops an already running Sandbox.
The Sandbox and all its containers are stopped.

* `PauseSandbox(sandboxID string)` pauses an existing Sandbox.

* `ResumeSandbox(sandboxID string)` resumes a paused Sandbox.

* `StatusSandbox(sandboxID string)` returns a detailed Sandbox status.

* `ListSandbox()` lists all Sandboxes on the host.
It returns a detailed status for every Sandbox.

## Container API

* `CreateContainer(sandboxID string, containerConfig ContainerConfig)` creates a Container on an existing Sandbox.

* `DeleteContainer(sandboxID, containerID string)` deletes a Container from a Sandbox.
If the Container is running, it has to be stopped first.

* `StartContainer(sandboxID, containerID string)` starts an already created Container.
The Sandbox has to be running.

* `StopContainer(sandboxID, containerID string)` stops an already running Container.

* `EnterContainer(sandboxID, containerID string, cmd Cmd)` enters an already running Container and runs a given command.

* `StatusContainer(sandboxID, containerID string)` returns a detailed Container status.

* `KillContainer(sandboxID, containerID string, signal syscall.Signal, all bool)` sends a signal to all or one container inside a Sandbox.

An example tool using the `virtcontainers` API is provided in the `hack/virtc` package.

For further details, see the [API documentation](documentation/api/1.0/api.md).

# Networking

`virtcontainers` supports the two major container networking models: the [Container Network Model (CNM)][cnm] and the [Container Network Interface (CNI)][cni].

Typically, the former is the default Docker networking model, while the latter is used in Kubernetes deployments.

[cnm]: https://github.com/docker/libnetwork/blob/master/docs/design.md
[cni]: https://github.com/containernetworking/cni/

## CNM

__CNM lifecycle__

1. `RequestPool`

2. `CreateNetwork`

3. `RequestAddress`

4. `CreateEndPoint`

5. `CreateContainer`

6. Create `config.json`

7. Create PID and network namespace

8. `ProcessExternalKey`

9. `JoinEndPoint`

10. `LaunchContainer`

11. Launch

12. Run container

__Runtime network setup with CNM__

1. Read `config.json`

2. Create the network namespace ([code](https://github.com/containers/virtcontainers/blob/0.5.0/cnm.go#L108-L120))

3. Call the prestart hook (from inside the netns) ([code](https://github.com/containers/virtcontainers/blob/0.5.0/api.go#L46-L49))

4. Scan the network interfaces inside the netns and get the name of the interface created by the prestart hook ([code](https://github.com/containers/virtcontainers/blob/0.5.0/cnm.go#L70-L106))

5. Create a bridge and a TAP interface, and link them together with the previously created network interface ([code](https://github.com/containers/virtcontainers/blob/0.5.0/network.go#L123-L205))

6. Start the VM inside the netns and start the container ([code](https://github.com/containers/virtcontainers/blob/0.5.0/api.go#L66-L70))

__Drawbacks of CNM__

There are three drawbacks to using CNM instead of CNI:
* The way we call into it is not very explicit: we have to re-exec the `dockerd` binary so that it can accept parameters and execute the prestart hook related to network setup.
* Implicit way to designate the network namespace: instead of explicitly giving the netns to `dockerd`, we give it the PID of our runtime so that it can find the netns from this PID. This means we have to make sure we are in the right netns while calling the hook, otherwise the VETH pair will be created in the wrong netns.
* No results are returned from the hook: we have to scan the network interfaces to discover which one has been created inside the netns. This adds latency because it forces us to scan the network in the `CreateSandbox` path, which is critical for starting the VM as quickly as possible.

# Storage

Container workloads are shared with the virtualized environment through 9pfs.
The devicemapper storage driver is a special case. The driver uses dedicated block devices rather than formatted filesystems, and operates at the block level rather than the file level. `virtcontainers` takes advantage of this by using the underlying block device directly as the container root file system, instead of the overlay file system. The block device maps to the top read-write layer of the overlay. This approach gives much better I/O performance than using 9pfs to share the container file system.

The approach above does introduce a limitation on dynamically copying files into or out of the container with `docker cp`.
The copy operation from host to container accesses the mounted file system on the host side. This is not expected to work and may lead to inconsistencies, as the block device will be written to simultaneously from two different mounts.
The copy operation from container to host will work, provided the user calls `sync(1)` from within the container prior to the copy to make sure any outstanding cached data is written to the block device.

```
# container to host: works, after running sync(1) inside the container
docker cp [OPTIONS] CONTAINER:SRC_PATH DEST_PATH
# host to container: not expected to work with the devicemapper rootfs
docker cp [OPTIONS] SRC_PATH CONTAINER:DEST_PATH
```

Support for hotplugging block devices has been added, which makes it possible to use block devices for containers started after the VM has been launched.

## How to check if container uses devicemapper block device as its rootfs

Start a container and call `mount(8)` within it. You should see `/` mounted on the `/dev/vda` device.

# Devices

Support has been added for passing devices assigned to [VFIO](https://www.kernel.org/doc/Documentation/vfio.txt)
on the docker command line with `--device`.
Support for passing other devices, including block devices, with `--device` has
not been added yet.

## How to pass a device using VFIO-passthrough

1. Requirements

   An IOMMU group represents the smallest set of devices for which the IOMMU has
   visibility and which is isolated from other groups. VFIO uses this information
   to enforce safe ownership of devices for userspace.

   You will need Intel VT-d capable hardware. Check that the IOMMU is enabled in your host
   kernel by verifying that `CONFIG_VFIO_NOIOMMU` is not set in the kernel configuration. If it is set,
   you will need to rebuild your kernel.

   The following kernel configuration options need to be enabled:
   ```
   CONFIG_VFIO_IOMMU_TYPE1=m
   CONFIG_VFIO=m
   CONFIG_VFIO_PCI=m
   ```

   In addition, you need to pass `intel_iommu=on` on the kernel command line.

2. Identify the BDF (Bus-Device-Function) of the PCI device to be assigned.

   ```
   $ lspci -D | grep -e Ethernet -e Network
   0000:01:00.0 Ethernet controller: Intel Corporation Ethernet Controller 10-Gigabit X540-AT2 (rev 01)

   $ BDF=0000:01:00.0
   ```

3. Find the vendor and device IDs.

   ```
   $ lspci -n -s $BDF
   01:00.0 0200: 8086:1528 (rev 01)
   ```

4. Find the IOMMU group.

   ```
   $ readlink /sys/bus/pci/devices/$BDF/iommu_group
   ../../../../kernel/iommu_groups/16
   ```

5. Unbind the device from its host driver.

   ```
   $ echo $BDF | sudo tee /sys/bus/pci/devices/$BDF/driver/unbind
   ```

6. Bind the device to `vfio-pci`.

   ```
   $ sudo modprobe vfio-pci
   $ echo 8086 1528 | sudo tee /sys/bus/pci/drivers/vfio-pci/new_id
   $ echo $BDF | sudo tee --append /sys/bus/pci/drivers/vfio-pci/bind
   ```

7. Check `/dev/vfio`.

   ```
   $ ls /dev/vfio
   16 vfio
   ```

8. Start a Clear Containers container, passing the VFIO group on the docker command line.

   ```
   docker run -it --device=/dev/vfio/16 centos/tools bash
   ```

9. Running `lspci` within the container should show the device among the
   PCI devices. The driver for the device needs to be present within the
   Clear Containers kernel. If the driver is missing, you can add it to your
   custom container kernel using the [osbuilder](https://github.com/clearcontainers/osbuilder)
   tooling.

# Developers

For information on how to build, develop and test `virtcontainers`, see the
[developer documentation](documentation/Developers.md).

# Persistent storage plugin support

See the [persistent storage plugin documentation](persist/plugin).

# Experimental features

See the [experimental features documentation](experimental).