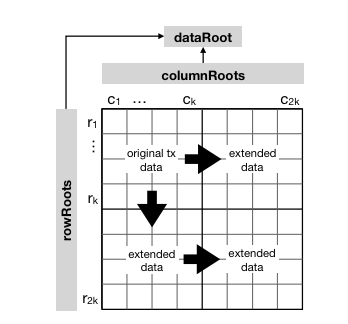

# ADR 002: Sampling erasure coded Block chunks

## Changelog

- 26-2-2021: Created

## Context

In Tendermint's block gossiping each peer gossips random parts of block data to peers.
For LazyLedger, we need nodes (from light-clients to validators) to be able to sample row-/column-chunks of the erasure coded
block (aka the extended data square) from the network.
This is necessary for Data Availability proofs.

A high-level, implementation-independent formalization of the above-mentioned sampling and Data Availability proofs can be found in:
[_Fraud and Data Availability Proofs: Detecting Invalid Blocks in Light Clients_](https://fc21.ifca.ai/papers/83.pdf).

For the time being, besides the academic paper, no other formalization or specification of the protocol exists.
Currently, the LazyLedger specification itself only describes the [erasure coding](https://github.com/lazyledger/lazyledger-specs/blob/master/specs/data_structures.md#erasure-coding)
and how to construct the extended data square from the block data.

This ADR:
- describes the high-level requirements
- defines the API and how it can be used by different components of LazyLedger (block gossiping, block sync, DA proofs)
- documents the decision on how to implement this.

The core data structures and the erasure coding of the block are already implemented in lazyledger-core ([#17], [#19], [#83]).
While there are no ADRs for these changes, we can refer to the LazyLedger specification in this case.
For this aspect, the existing implementation and specification should already be on par for the most part.
The exact arrangement of the data as described in this [rationale document](https://github.com/lazyledger/lazyledger-specs/blob/master/rationale/message_block_layout.md)
in the specification can happen at app-side of the ABCI boundary.
The latter was implemented in [lazyledger/lazyledger-app#21](https://github.com/lazyledger/lazyledger-app/pull/21)
leveraging a new ABCI method, added in [#110](https://github.com/lazyledger/lazyledger-core/pull/110).
This new method is a subset of the proposed ABCI changes aka [ABCI++](https://github.com/tendermint/spec/pull/254).

Mustafa Al-Bassam (@musalbas) implemented a [prototype](https://github.com/lazyledger/lazyledger-prototype)
whose main purpose is to realistically analyse the protocol.
Although the prototype does not make any network requests and only operates locally, it can partly serve as a reference implementation.
It uses the [rsmt2d] library.

The implementation will essentially use IPFS' APIs. For reading (and writing) chunks it
will use the IPLD [`DagService`](https://github.com/ipfs/go-ipld-format/blob/d2e09424ddee0d7e696d01143318d32d0fb1ae63/merkledag.go#L54),
more precisely the [`NodeGetter`](https://github.com/ipfs/go-ipld-format/blob/d2e09424ddee0d7e696d01143318d32d0fb1ae63/merkledag.go#L18-L27)
and [`NodeAdder`](https://github.com/ipfs/go-ipld-format/blob/d2e09424ddee0d7e696d01143318d32d0fb1ae63/merkledag.go#L29-L39).
As an optimization, we can also use a [`Batch`](https://github.com/ipfs/go-ipld-format/blob/d2e09424ddee0d7e696d01143318d32d0fb1ae63/batch.go#L29)
to batch adding and removing nodes.
This will be achieved by passing around a [CoreAPI](https://github.com/ipfs/interface-go-ipfs-core/blob/b935dfe5375eac7ea3c65b14b3f9a0242861d0b3/coreapi.go#L15)
object, which derives from the IPFS node that is created along with a tendermint node (see [#152]).
This code snippet does exactly that (see the [go-ipfs documentation] for more examples):
```go
// This constructs an IPFS node instance (error handling elided for brevity)
node, _ := core.NewNode(ctx, nodeOptions)
// This attaches the Core API to the constructed node
coreAPI, _ := coreapi.NewCoreAPI(node)
```

The above-mentioned IPLD methods operate on so-called [ipld.Nodes].
When computing the data root, we can pass a [`NodeVisitor`](https://github.com/lazyledger/nmt/blob/b22170d6f23796a186c07e87e4ef9856282ffd1a/nmt.go#L22)
into the Namespaced Merkle Tree library to create these (each inner- and leaf-node in the tree becomes an ipld node).
For a peer that requests such an IPLD node, the LazyLedger IPLD plugin provides the [function](https://github.com/lazyledger/lazyledger-core/blob/ceb881a177b6a4a7e456c7c4ab1dd0eb2b263066/p2p/ipld/plugin/nodes/nodes.go#L175)
`NmtNodeParser` to transform the retrieved raw data back into an `ipld.Node`.

A more high-level description of the changes required to rip out the current block gossiping routine,
including changes to block storage-, RPC-layer, and potential changes to reactors is either handled in [LAZY ADR 001](./adr-001-block-propagation.md),
and/or in a few smaller, separate followup ADRs.

## Alternative Approaches

Instead of creating a full IPFS node object and passing it around as explained above
- use API (http)
- use ipld-light
- use alternative client

Also, for better performance
- use [graph-sync], [IPLD selectors], e.g. via [ipld-prime]

Also, there is the idea that nodes only receive the [Header] with the data root
and, in an additional step/request, download the DA header using the library, too.
While this feature is not considered here, and we assume each node that uses this library has the DA header, this assumption
is likely to change when we flesh out other parts of the system in more detail.
Note that this also means that light clients would still need to validate that the data root and the result of merkleizing the DA header match.

## Decision

> This section records the decision that was made.
> It is best to record as much info as possible from the discussion that happened. This aids in not having to go back to the Pull Request to get the needed information.

> - TODO: briefly summarize github, discord, and slack discussions (?)
> - also mention Mustafa's prototype and compare both apis briefly (RequestSamples, RespondSamples, ProcessSamplesResponse)
> - mention [ipld experiments]

## Detailed Design

Add a package to the library that provides the following features:
1. sample a given number of random row/col indices of the extended data square given a DA header and indicate if successful or timeout/other error occurred
2. store the block in the network by adding it to the peer's local Merkle-DAG whose content is discoverable via a DHT
3. store the sampled chunks in the network
4. reconstruct the whole block from a given DA header
5. get all messages of a particular namespace ID.

We mention 5. here mostly for completeness. Its details will be described / implemented in a separate ADR / PR.

Apart from the above-mentioned features, we informally collect additional requirements:
- where randomness is needed, the randomness source should be configurable
- all replies by the network should be verified if this is not sufficiently covered by the used libraries already (IPFS)
- where possible, the requests to the network should happen in parallel (without DoSing the proposer for instance).

This library should be implemented as two new packages:

First, a sub-package should be added to the lazyledger-core [p2p] package
which does not know anything about the core data structures (Block, DA header etc).
It handles the actual network requests to the IPFS network and operates on IPFS/IPLD objects
directly and hence should live under [p2p/ipld].
To some extent this part of the stack already exists.

Second, a high-level API that can "live" closer to the actual types, e.g., in a sub-package in [lazyledger-core/types]
or in a new sub-package `da`.

We first describe the high-level library here and describe functions in
more detail inline with their godoc comments below.

### API that operates on lazyledger-core types

As mentioned above this part of the library has knowledge of the core types (and hence depends on them).
It does not deal with IPFS internals.

```go
// ValidateAvailability implements the protocol described in https://fc21.ifca.ai/papers/83.pdf.
// Specifically all steps of the protocol described in section
// _5.2 Random Sampling and Network Block Recovery_ are carried out.
//
// In more detail it will first create numSamples random unique coordinates.
// Then, it will ask the network for the leaf data corresponding to these coordinates.
// In addition to the number of samples, the caller can pass in a callback,
// which will be called for each retrieved leaf with a verified Merkle proof.
//
// Among other use-cases, the callback can be useful for monitoring (progress), or
// to process the leaf data the moment it was validated.
// The context can be used to provide a timeout.
// TODO: Should there be a constant = lower bound for #samples
func ValidateAvailability(
	ctx context.Context,
	dah *DataAvailabilityHeader,
	numSamples int,
	onLeafValidity func(namespace.PrefixedData8),
) error { /* ... */ }

// RetrieveBlockData can be used to recover the block Data.
// It will carry out a similar protocol as described for ValidateAvailability.
// The key difference is that it will sample enough chunks until it can recover the
// full extended data square, including original data (e.g. by using rsmt2d.RepairExtendedDataSquare).
func RetrieveBlockData(
	ctx context.Context,
	dah *DataAvailabilityHeader,
	api coreiface.CoreAPI,
	codec rsmt2d.Codec,
) (types.Data, error) { /* ... */ }

// PutBlock operates directly on the Block.
// It first computes the erasure coding, aka the extended data square.
// Row by row it calls a lower level library which handles adding
// the row to the Merkle DAG, in our case a Namespaced Merkle Tree.
// Note that this method could also fill the DA header.
// The data will be pinned by default.
func (b *Block) PutBlock(ctx context.Context, nodeAdder ipld.NodeAdder) error
```

We now describe the lower-level library that will be used by above methods.
Again we provide more details inline in the godoc comments directly.

`PutBlock` is a method on `Block` as the erasure coding can then be cached, e.g. in a private field
in the block.

### Changes to the lower level API closer to IPFS (p2p/ipld)

```go
// GetLeafData takes in a Namespaced Merkle tree root transformed into a Cid
// and the leaf index to retrieve.
// Callers also need to pass in the total number of leaves of that tree.
// Internally, this will be translated to an IPLD path and corresponds to
// an ipfs dag get request, e.g. namespacedCID/0/1/0/0/1.
// The retrieved data should be pinned by default.
func GetLeafData(
	ctx context.Context,
	rootCid cid.Cid,
	leafIndex uint32,
	totalLeafs uint32, // this corresponds to the extended square width
	api coreiface.CoreAPI,
) ([]byte, error)
```

`GetLeafData` can be used by above `ValidateAvailability` and `RetrieveBlockData` and
`PutLeaves` by `PutBlock`.

### A Note on IPFS/IPLD

In IPFS all data is _content addressed_ which basically means the data is identified by its hash.
Particularly, in the LazyLedger case, the root CID identifies the Namespaced Merkle tree including all its contents (inner and leaf nodes).
This means that if a `GetLeafData` request succeeds, the retrieved leaf data is in fact the leaf data in the tree.
We do not need to additionally verify Merkle proofs per leaf as this will essentially be done via IPFS on each layer while
resolving and getting to the leaf data.

> TODO: validate this assumption and link to code that shows how this is done internally

### Implementation plan

As fully integrating Data Availability proofs into tendermint is a rather large change, we break up the work into the
following packages (not mentioning the implementation work that was already done):

1. Flesh out the changes in the consensus messages ([lazyledger-specs#126], [lazyledger-specs#127])
2. Flesh out the changes that would be necessary to replace the current block gossiping ([LAZY ADR 001](./adr-001-block-propagation.md))
3. Add the possibility of storing and retrieving block data (samples or whole block) to lazyledger-core (this ADR and related PRs).
4. Integrate above API (3.) as an addition into lazyledger-core without directly replacing the tendermint counterparts (block gossip etc).
5. Rip out each component that will be redundant with above integration in one or even several smaller PRs:
    - block gossiping (see LAZY ADR 001)
    - modify block store (see LAZY ADR 001)
    - make downloading full Blocks optional (flag/config)
    - route some RPC requests to IPFS (see LAZY ADR 001)

## Status

Proposed

## Consequences

### Positive

- simplicity & ease of implementation
- can re-use an existing networking and p2p stack (go-ipfs)
- potential support of large, cool, and helpful community
- high-level API definitions independent of the used stack

### Negative

- latency
- being connected to the public IPFS network might be overkill if peers should in fact only care about a subset that participates in the LazyLedger protocol
- dependency on a large code-base with lots of features and options of which we only need a small subset

### Neutral
- two different p2p layers exist in lazyledger-core

## References

- https://github.com/lazyledger/lazyledger-core/issues/85
- https://github.com/lazyledger/lazyledger-core/issues/167

- https://docs.ipld.io/#nodes
- https://arxiv.org/abs/1809.09044
- https://fc21.ifca.ai/papers/83.pdf
- https://github.com/tendermint/spec/pull/254

[#17]: https://github.com/lazyledger/lazyledger-core/pull/17
[#19]: https://github.com/lazyledger/lazyledger-core/pull/19
[#83]: https://github.com/lazyledger/lazyledger-core/pull/83

[#152]: https://github.com/lazyledger/lazyledger-core/pull/152

[lazyledger-specs#126]: https://github.com/lazyledger/lazyledger-specs/issues/126
[lazyledger-specs#127]: https://github.com/lazyledger/lazyledger-specs/pulls/127
[Header]: https://github.com/lazyledger/lazyledger-specs/blob/master/specs/data_structures.md#header

[go-ipfs documentation]: https://github.com/ipfs/go-ipfs/tree/master/docs/examples/go-ipfs-as-a-library#use-go-ipfs-as-a-library-to-spawn-a-node-and-add-a-file
[ipld experiments]: https://github.com/lazyledger/ipld-plugin-experiments
[ipld.Nodes]: https://github.com/ipfs/go-ipld-format/blob/d2e09424ddee0d7e696d01143318d32d0fb1ae63/format.go#L22-L45
[graph-sync]: https://github.com/ipld/specs/blob/master/block-layer/graphsync/graphsync.md
[IPLD selectors]: https://github.com/ipld/specs/blob/master/selectors/selectors.md
[ipld-prime]: https://github.com/ipld/go-ipld-prime

[rsmt2d]: https://github.com/lazyledger/rsmt2d

[p2p]: https://github.com/lazyledger/lazyledger-core/tree/0eccfb24e2aa1bb9c4428e20dd7828c93f300e60/p2p
[p2p/ipld]: https://github.com/lazyledger/lazyledger-core/tree/0eccfb24e2aa1bb9c4428e20dd7828c93f300e60/p2p/ipld
[lazyledger-core/types]: https://github.com/lazyledger/lazyledger-core/tree/0eccfb24e2aa1bb9c4428e20dd7828c93f300e60/types