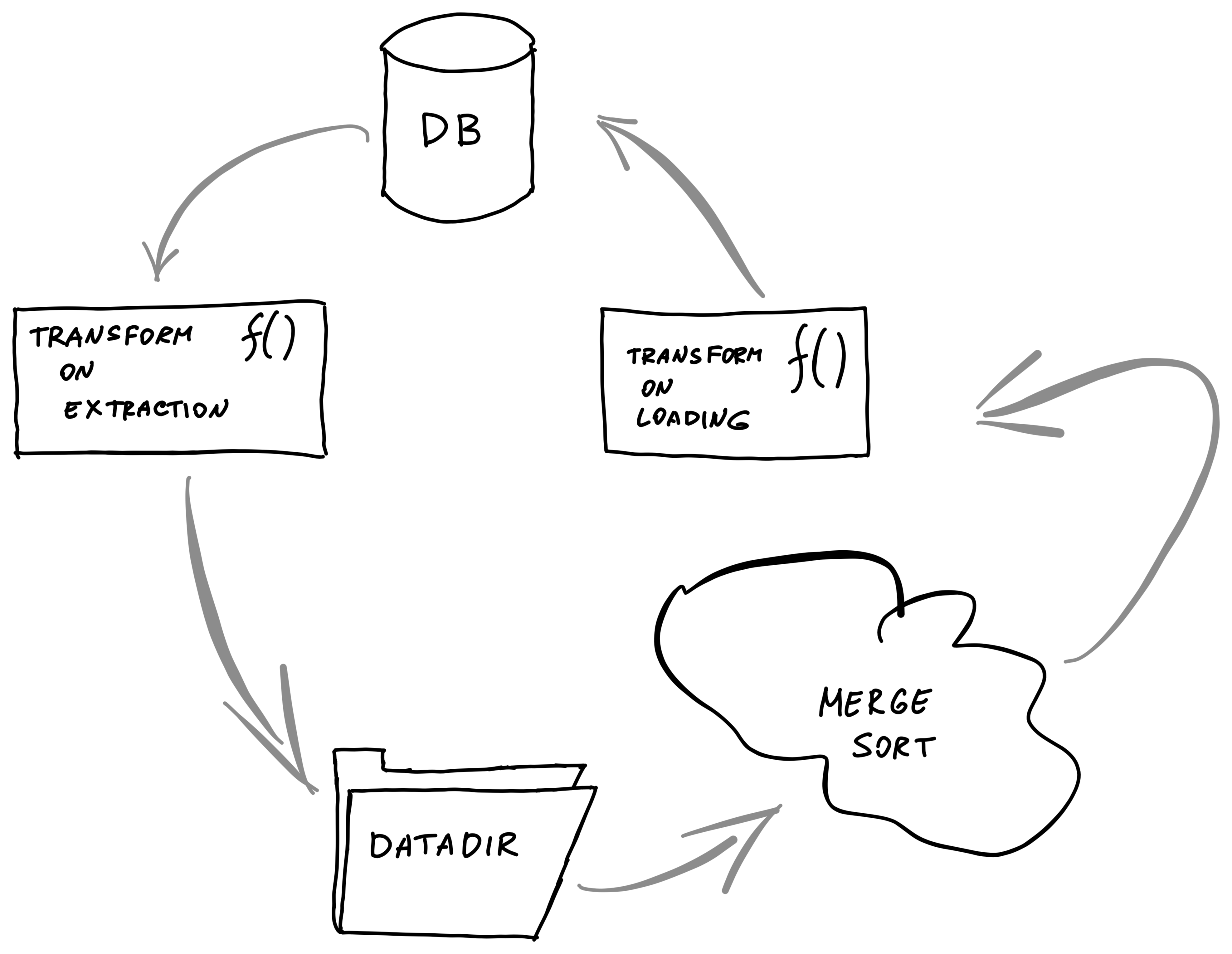

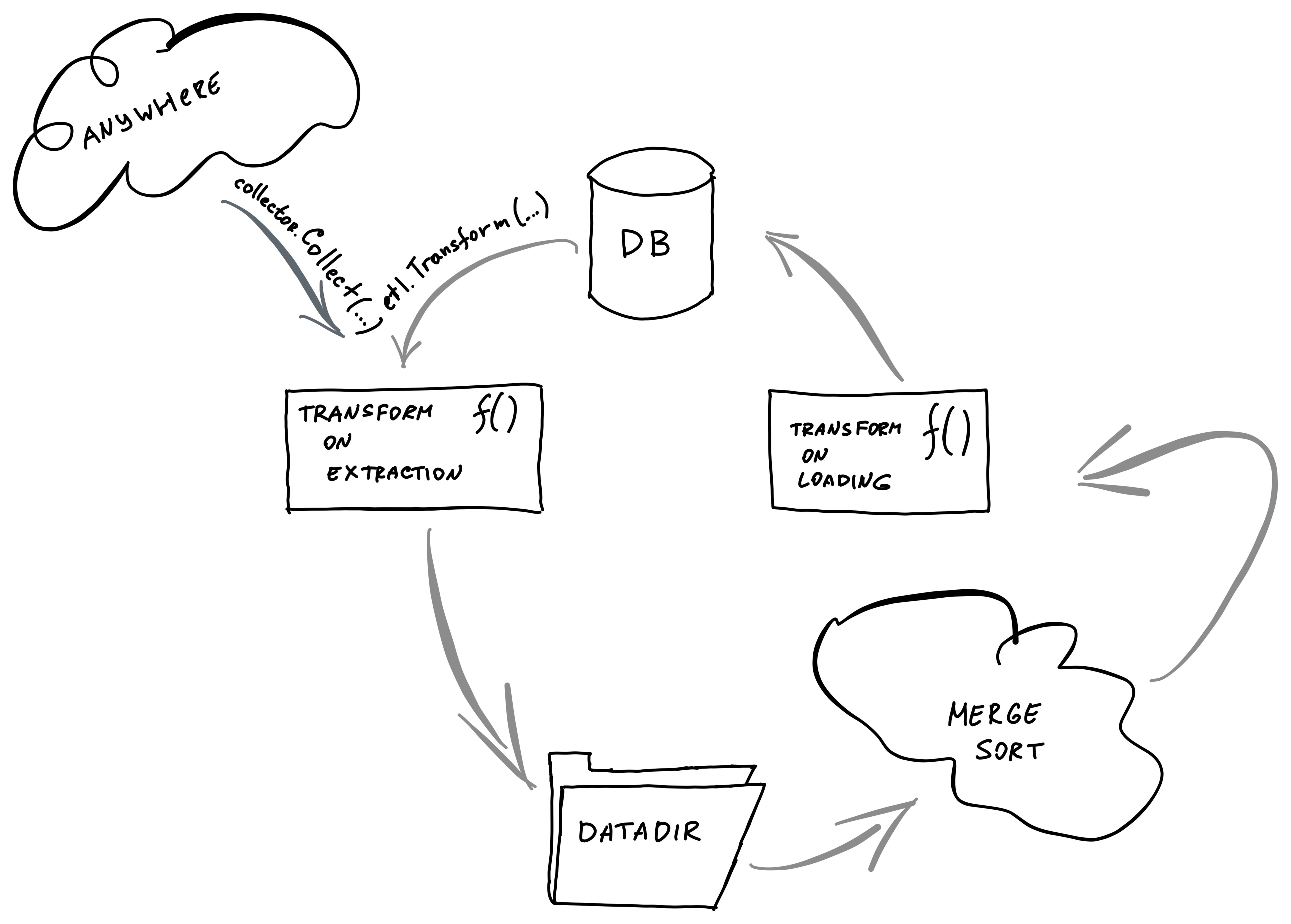

github.com/ledgerwatch/erigon-lib@v1.0.0/etl/README.md

# ETL

The ETL framework is most commonly used in [staged sync](https://github.com/ledgerwatch/erigon/blob/devel/eth/stagedsync/README.md).

It implements a pattern where we extract some data from a database, transform it,
then put it into temp files and insert it back into the database in sorted order.

Inserting entries into our KV storage sorted by key helps to minimize write
amplification. This makes it much faster, even considering the additional I/O
generated by storing files.

It behaves similarly to enterprise [Extract, Transform, Load](https://en.wikipedia.org/wiki/Extract,_transform,_load) frameworks, hence the name.
We use temporary files because they keep RAM usage predictable and allow
using ETL on large amounts of data.

### Example

```
func keyTransformExtractFunc(transformKey func([]byte) ([]byte, error)) etl.ExtractFunc {
	return func(k, v []byte, next etl.ExtractNextFunc) error {
		newK, err := transformKey(k)
		if err != nil {
			return err
		}
		return next(k, newK, v)
	}
}

err := etl.Transform(
	db,                                              // database
	dbutils.PlainStateBucket,                        // "from" bucket
	dbutils.CurrentStateBucket,                      // "to" bucket
	datadir,                                         // where to store temp files
	keyTransformExtractFunc(transformPlainStateKey), // transformFunc on extraction
	etl.IdentityLoadFunc,                            // transform on load
	etl.TransformArgs{                               // additional arguments
		Quit: quit,
	},
)
if err != nil {
	return err
}
```

## Data Transformation

The whole flow is shown in the image.

Data can be transformed at two points along the pipeline:

* transform on extraction

* transform on loading

### Transform On Extraction

`type ExtractFunc func(k []byte, v []byte, next ExtractNextFunc) error`

The transform-on-extraction function receives the current key and value from the
source bucket.
### Transform On Loading

`type LoadFunc func(k []byte, value []byte, state State, next LoadNextFunc) error`

Besides the current key and value, the transform-on-loading function
receives a `State` object that can read data from the destination bucket.

This is used in index generation, where we want to extend existing index entries
with new data instead of just adding new ones.

### `<...>NextFunc` pattern

Sometimes we need to produce multiple entries from a single entry when
transforming.

To do that, each transform function receives a next function that should
be called to pass data further down the pipeline. This means each transformation
can produce any number of outputs for a single input.

It can be one output, like in `IdentityLoadFunc`:

```
func IdentityLoadFunc(k []byte, value []byte, _ State, next LoadNextFunc) error {
	return next(k, k, value) // go to the next step
}
```

It can be multiple outputs, like when each entry is a `ChangeSet`:

```
func(dbKey, dbValue []byte, next etl.ExtractNextFunc) error {
	// the changeset's database key encodes the block number
	blockNum, _ := dbutils.DecodeTimestamp(dbKey)
	// emit one entry per key stored in the changeset
	return bytes2walker(dbValue).Walk(func(changesetKey, changesetValue []byte) error {
		key := common.CopyBytes(changesetKey)
		// value layout: 8-byte big-endian block number + 1 flag byte
		v := make([]byte, 9)
		binary.BigEndian.PutUint64(v, blockNum)
		if len(changesetValue) == 0 {
			v[8] = 1 // flag entries whose changeset value is empty
		}
		return next(dbKey, key, v) // go to the next step
	})
}
```

### Buffer Types

Before the data is flushed into temp files, it is collected into
a buffer until it overflows (`etl.ExtractArgs.BufferSize`).

Several buffer types are available, each with different behaviour:

* `SortableSliceBuffer`: just appends `(k, v1)`, `(k, v2)` onto a slice. Duplicate keys
  lead to duplicate entries: `[(k, v1) (k, v2)]`.

* `SortableAppendBuffer`: on duplicate keys, merges. `(k, v1)`, `(k, v2)`
  lead to `k: [v1 v2]`.

* `SortableOldestAppearedBuffer`: on duplicate keys, keeps the oldest. `(k, v1)`,
  `(k, v2)` lead to `k: v1`.

### Transforming Structs

Both transform functions and next functions accept only byte slices (`[]byte`).
If you need to pass a struct, you will need to marshal it.

### Loading Into Database

We load data from the temp files into the database in batches, limited by
`IdealBatchSize()` of an `ethdb.Mutation`.

(In tests, this limit can also be overridden.)

### Handling Interruptions

ETL processes are long, so we need to be able to handle interruptions.

#### Handling `Ctrl+C`

You can pass your quit channel as the `Quit` field of `etl.TransformArgs`.

When this channel is closed, ETL will be interrupted.

#### Saving & Restoring State

Interrupting in the middle of loading can leave the database in an inconsistent
state.

To avoid that, the ETL framework allows storing progress by setting `OnLoadCommit` in `etl.TransformArgs`.

We can then use this data to find out how much progress the ETL transformation made.

You can also specify `ExtractStartKey` and `ExtractEndKey` to limit the range
of keys transformed.

## Ways to work with the ETL framework

There are two ways you might want to work with the ETL framework.

### `etl.Transform` function

In the vast majority of use cases, we extract data from one bucket and, in
the end, load it into another bucket. That is the use case for the `etl.Transform`
function.

### `etl.Collector` struct

If you want more modular behaviour instead of just reading from the DB (like
generating intermediate hashes in `../../core/chain_makers.go`), you can use
the `etl.Collector` struct directly.

It has a `.Collect()` method that you can provide your data to.
## Optimizations

* If all data fits into a single file, we don't write anything to disk and just
  use in-memory storage.