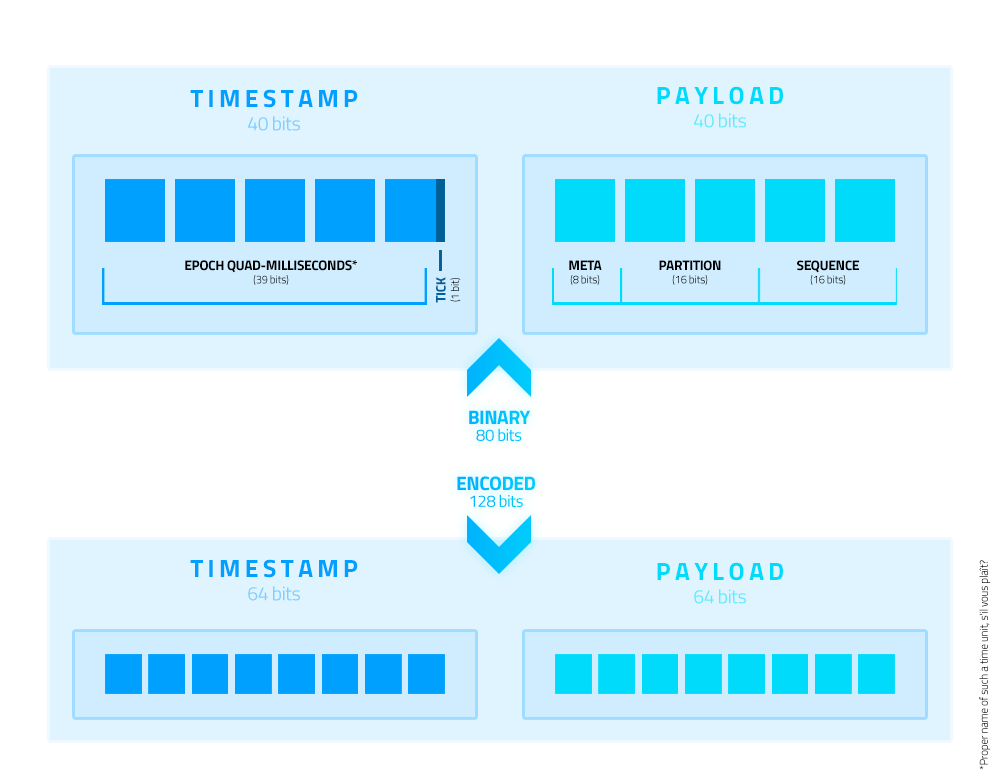

github.com/muyo/sno@v1.2.1/README.md (about) 1 <img src="./.github/logo_200x200.png" alt="sno logo" title="sno" align="left" height="200" /> 2 3 A spec for **unique IDs in distributed systems** based on the Snowflake design, i.e. a coordination-based ID variant. 4 It aims to be friendly to both machines and humans, compact, *versatile* and fast. 5 6 This repository contains a **Go** package for generating such IDs. 7 8 [](https://pkg.go.dev/github.com/muyo/sno?tab=doc) 9 [](https://github.com/muyo/sno/releases) 10 [](https://travis-ci.com/muyo/sno) 11 [](https://codecov.io/gh/muyo/sno) 12 [](https://goreportcard.com/report/github.com/muyo/sno) 13 [](https://raw.githubusercontent.com/muyo/sno/master/LICENSE) 14 ```bash 15 go get -u github.com/muyo/sno 16 ``` 17 18 ### Features 19 20 - **Compact** - **10 bytes** in its binary representation, canonically [encoded](#encoding) as **16 characters**. 21 <br />URL-safe and non-ambiguous encoding which also happens to be at the binary length of UUIDs - 22 **sno**s can be stored as UUIDs in your database of choice. 23 - **K-sortable** in either representation. 24 - **[Embedded timestamp](#time-and-sequence)** with a **4msec resolution**, bounded within the years **2010 - 2079**. 25 <br />Handles clock drifts gracefully, without waiting. 26 - **[Embedded byte](#metabyte)** for arbitrary data. 27 - **[Simple data layout](#layout)** - straightforward to inspect or encode/decode. 28 - **[Optional and flexible](#usage)** configuration and coordination. 29 - **[Fast](./benchmark#results)**, wait-free, safe for concurrent use. 30 <br />Clocks in at about 500 LoC, has no external dependencies and minimal dependencies on std. 31 - A pool of **≥ 16,384,000** IDs per second. 32 <br /> 65,536 guaranteed unique IDs per 4msec per partition (65,536 combinations) per metabyte 33 (256 combinations) per tick-tock (1 bit adjustment for clock drifts). 34 **549,755,813,888,000** is the global pool **per second** when all components are taken into account. 35 36 ### Non-features / cons 37 38 - True randomness. **sno**s embed a counter and have **no entropy**. They are not suitable in a context where 39 unpredictability of IDs is a must. They still, however, meet the common requirement of keeping internal counts 40 (e.g. total number of entitites) unguessable and appear obfuscated; 41 - Time precision. While *good enough* for many use cases, not quite there for others. The ➜ [Metabyte](#metabyte) 42 can be used to get around this limitation, however. 43 - It's 10 bytes, not 8. This is suboptimal as far as memory alignment is considered (platform dependent). 44 45 46 <br /> 47 48 ## Usage (➜ [API](https://pkg.go.dev/github.com/muyo/sno?tab=doc)) 49 50 **sno** comes with a package-level generator on top of letting you configure your own generators. 51 52 Generating a new ID using the defaults takes no more than importing the package and: 53 54 ```go 55 id := sno.New(0) 56 ``` 57 58 Where `0` is the ➜ [Metabyte](#metabyte).<br /> 59 60 The global generator is immutable and private. It's therefore also not possible to restore it using a Snapshot. 61 Its Partition is based on time and changes across restarts. 62 63 ### Partitions (➜ [doc](https://pkg.go.dev/github.com/muyo/sno?tab=doc#Partition)) 64 65 As soon as you run more than 1 generator, you **should** start coordinating the creation of Generators to 66 actually *guarantee* a collision-free ride. This applies to all specs of the Snowflake variant. 67 68 Partitions are one of several friends you have to get you those guarantees. A Partition is 2 bytes. 69 What they mean and how you define them is up to you. 70 71 ```go 72 generator, err := sno.NewGenerator(&sno.GeneratorSnapshot{ 73 Partition: sno.Partition{'A', 10} 74 }, nil) 75 ``` 76 77 Multiple generators can share a partition by dividing the sequence pool between 78 them (➜ [Sequence sharding](#sequence-sharding)). 79 80 ### Snapshots (➜ [doc](https://pkg.go.dev/github.com/muyo/sno?tab=doc#GeneratorSnapshot)) 81 82 Snapshots happen to serve both as configuration and a means of saving and restoring generator data. They are 83 optional - simply pass `nil` to `NewGenerator()`, to get a Generator with sane defaults and a unique (in-process) 84 Partition. 85 86 Snapshots can be taken at runtime: 87 88 ```go 89 s := generator.Snapshot() 90 ``` 91 92 This exposes most of a Generator's internal bookkeeping data. In an ideal world where programmers are not lazy 93 until their system runs into an edge case - you'd persist that snapshot across restarts and restore generators 94 instead of just creating them from scratch each time. This will keep you safe both if a large clock drift happens 95 during the restart -- or before, and you just happen to come back online again "in the past", relative to IDs that 96 had already been generated. 97 98 A snapshot is a sample in time - it will very quickly get stale. Only take snapshots meant for restoring them 99 later when generators are already offline - or for metrics purposes when online. 100 101 102 <br /> 103 104 ## Layout 105 106 A **sno** is simply 80-bits comprised of two 40-bit blocks: the **timestamp** and the **payload**. The bytes are 107 stored in **big-endian** order in all representations to retain their sortable property. 108  109 Both blocks can be inspected and mutated independently in either representation. Bits of the components in the binary 110 representation don't spill over into other bytes which means no additional bit twiddling voodoo is necessary* to extract 111 them. 112 113 \*The tick-tock bit in the timestamp is the only exception (➜ [Time and sequence](#time-and-sequence)). 114 115 <br /> 116 117 ## Time and sequence 118 119 ### Time 120 121 **sno**s embed a timestamp comprised of 39 bits with the epoch **milliseconds at a 4msec resolution** (floored, 122 unsigned) and one bit, the LSB of the entire block - for the tick-tock toggle. 123 124 ### Epoch 125 126 The **epoch is custom** and **constant**. It is bounded within `2010-01-01 00:00:00 UTC` and 127 `2079-09-07 15:47:35.548 UTC`. The lower bound is `1262304000` seconds relative to Unix. 128 129 If you *really* have to break out of the epoch - or want to store higher precision - the metabyte is your friend. 130 131 ### Precision 132 133 Higher precision *is not necessarily* a good thing. Think in dataset and sorting terms, or in sampling rates. You 134 want to grab all requests with an error code of `403` in a given second, where the code may be encoded in the metabyte. 135 At a resolution of 1 second, you binary search for just one index and then proceed straight up linearly. 136 That's simple enough. 137 138 At a resolution of 1msec however, you now need to find the corresponding 1000 potential starting offsets because 139 your `403` requests are interleaved with the `200` requests (potentially). At 4msec, this is 250 steps. 140 141 Everything has tradeoffs. This was a compromise between precision, size, simple data layout -- and factors like that above. 142 143 ### Sequence 144 145 **sno**s embed a sequence (2 bytes) that is **relative to time**. It does not overflow and resets on each new time 146 unit (4msec). A higher sequence within a given timeframe **does not necessarily indicate order of creation**. 147 It is not advertised as monotonic because its monotonicity is dependent on usage. A single generator writing 148 to a single partition, *ceteris paribus*, *will* result in monotonic increments and *will* represent order of creation. 149 150 With multiple writers in the same partition, increment order is *undefined*. If the generator moves back in time, 151 the order will still be monotonic but sorted either 2msec after or before IDs previously already written at that 152 time (see tick-tock). 153 154 #### Sequence sharding 155 156 The sequence pool has a range of `[0..65535]` (inclusive). **sno** supports partition sharing out of the box 157 by further sharding the sequence - that is multiple writers (generators) in the same partition. 158 159 This is done by dividing the pool between all writers, via user-specified bounds. 160 161 A generator will reset to its lower bound on each new time unit - and will never overflow its upper bound. 162 Collisions are therefore guaranteed impossible unless misconfigured and they overlap with another 163 *currently online* generator. 164 165 166 <details> 167 <summary>Star Trek: Voyager mode, <b>How to shard sequences</b></summary> 168 <p> 169 170 This can be useful when multiple containers on one physical machine are to write as a cluster to a partition 171 defined by the machine's ID (or simpler - multiple processes on one host). Or if multiple remote 172 services across the globe were to do that. 173 174 ```go 175 var PeoplePartition = sno.Partition{'P', 0} 176 177 // In process/container/remote host #1 178 generator1, err := sno.NewGenerator(&sno.GeneratorSnapshot{ 179 Partition: PeoplePartition, 180 SequenceMin: 0, 181 SequenceMax: 32767 // 32768 - 1 182 }, nil) 183 184 // In process/container/remote host #2 185 generator2, err := sno.NewGenerator(&sno.GeneratorSnapshot{ 186 Partition: PeoplePartition, 187 SequenceMin: 32768, 188 SequenceMax: 65535 // 65536 - 1 189 }, nil) 190 ``` 191 192 You will notice that we have simply divided our total pool of 65,536 into 2 even and **non-overlapping** 193 sectors. In the first snapshot `SequenceMin` could be omitted - and `SequenceMax` in the second, as those are the 194 defaults used when they are not defined. You will get an error when trying to set limits above the capacity of 195 generators, but since the library is oblivious to your setup - it cannot warn you about overlaps and cannot 196 resize on its own either. 197 198 The pools can be defined arbitrarily - as long as you make sure they don't overlap across *currently online* 199 generators. 200 201 It is safe for a range previously used by another generator to be assigned to a different generator under the 202 following conditions: 203 - it happens in a different timeframe *in the future*, i.e. no sooner than after 4msec have passed (no orchestrator 204 is fast enough to get a new container online to replace a dead one for this to be a worry); 205 - if you can guarantee the new Generator won't regress into a time the previous Generator was running in. 206 207 If you create the new Generator using a Snapshot of the former as it went offline, you do not need to worry about those 208 conditions and can resume writing to the same range immediately - the obvious tradeoff being the need to coordinate 209 the exchange of Snapshots. 210 211 If your clusters are always fixed size - reserving ranges is straightforward. With dynamic sizes, a potential simple 212 scheme is to reserve the lower byte of the partition for scaling. Divide your sequence pool by, say, 8, keep 213 assigning higher ranges until you hit your divider. When you do, increment partition by 1, start assigning 214 ranges from scratch. This gave us 2048 identifiable origins by using just one byte of the partition. 215 216 That said, the partition pool available is large enough that the likelihood you'll ever *need* 217 this is slim to none. Suffice to know you *can* if you want to. 218 219 Besides for guaranteeing a collision-free ride, this approach can also be used to attach more semantic meaning to 220 partitions themselves, them being placed higher in the sort order. 221 In other words - with it, the origin of an ID can be determined by inspecting the sequence 222 alone, which frees up the partition for another meaning. 223 224 How about... 225 226 ```go 227 var requestIDGenerator, _ = sno.NewGenerator(&GeneratorSnapshot{ 228 SequenceMax: 32767, 229 }, nil) 230 231 type Service byte 232 type Call byte 233 234 const ( 235 UsersSvc Service = 1 236 UserList Call = 1 237 UserCreate Call = 2 238 UserDelete Call = 3 239 ) 240 241 func genRequestID(svc Service, methodID Call) sno.ID { 242 id := requestIDGenerator.New(byte(svc)) 243 // Overwrites the upper byte of the fixed partition. 244 // In our case - we didn't define it but gave a non-nil snapshot, so it is {0, 0}. 245 id[6] = byte(methodID) 246 247 return id 248 } 249 ``` 250 251 </p> 252 </details> 253 254 #### Sequence overflow 255 256 Remember that limiting the sequence pool also limits max throughput of each generator. For an explanation on what 257 happens when you're running at or over capacity, see the details below or take a look at ➜ [Benchmarks](#benchmarks) 258 which explains the numbers involved. 259 260 <details> 261 <summary>Star Trek: Voyager mode, <b>Behaviour on sequence overflow</b></summary> 262 <p> 263 264 The sequence never overflows and the generator is designed with a single-return `New()` method that does not return 265 errors nor invalid IDs. *Realistically* the default generator will never overflow simply because you won't saturate 266 the capacity. 267 268 But since you can set bounds yourself, the capacity could shrink to `4` per 4msec (smallest allowed). 269 Now that's more likely. So when you start overflowing, the generator will *stall* and *pray* for a 270 reduction in throughput sometime in the near future. 271 272 From **sno**'s persective requesting more IDs than it can safely give you **immediately** is not an error - but 273 it *may* require correcting on *your end*. And you should know about that. Therefore, if 274 you want to know when it happens - simply give **sno** a channel along with its configuration snapshot. 275 276 When a thread requests an ID and gets stalled, **once** per time unit, you will get a `SequenceOverflowNotification` 277 on that channel. 278 279 ```go 280 type SequenceOverflowNotification struct { 281 Now time.Time // Time of tick. 282 Count uint32 // Number of currently overflowing generation calls. 283 Ticks uint32 // For how many ticks in total we've already been dealing with the *current* overflow. 284 } 285 ``` 286 Keep track of the counter. If it keeps increasing, you're no longer bursting - you're simply over capacity 287 and *eventually* need to slow down or you'll *eventually* starve your system. The `Ticks` count lets you estimate 288 how long the generator has already been overflowing without keeping track of time yourself. A tick is *roughly* 1ms. 289 290 The order of generation when stalling occurs is `undefined`. It is not a FIFO queue, it's a race. Previously stalled 291 goroutines get woken up alongside inflight goroutines which have not yet been stalled, where the order of the former is 292 handled by the runtime. A livelock is therefore possible if demand doesn't decrease. This behaviour *may* change and 293 inflight goroutines *may* get thrown onto the stalling wait list if one is up and running, but this requires careful 294 inspection. And since this is considered an unrealistic scenario which can be avoided with simple configuration, 295 it's not a priority. 296 297 </p> 298 </details> 299 300 #### Clock drift and the tick-tock toggle 301 302 Just like all other specs that rely on clock times to resolve ambiguity, **sno**s are prone to clock drifts. But 303 unlike all those others specs, **sno** adjusts itself to the new time - instead of waiting (blocking), it tick-tocks. 304 305 **The tl;dr** applying to any system, really: ensure your deployments use properly synchronized system clocks 306 (via NTP) to mitigate the *size* of drifts. Ideally, use a NTP server pool that applies 307 a gradual [smear for leap seconds](https://developers.google.com/time/smear). Despite the original Snowflake spec 308 suggesting otherwise, using NTP in slew mode (to avoid regressions entirely) 309 [is not always a good idea](https://www.redhat.com/en/blog/avoiding-clock-drift-vms). 310 311 Also remember that containers tend to get *paused* meaning their clocks are paused with them. 312 313 As far as **sno**, collisions and performance are concerned, in typical scenarios you can enjoy a wait-free ride 314 without requiring slew mode nor having to worry about even large drifts. 315 316 <details> 317 <summary>Star Trek: Voyager mode, <b>How tick-tocking works</b></summary> 318 <p> 319 320 **sno** attempts to eliminate the issue *entirely* - both despite and because of its small pool of bits to work with. 321 322 The approach it takes is simple - each generator keeps track of the highest wall clock time it got from the OS\*, 323 each time it generates a new timestamp. If we get a time that is lower than the one we recorded, i.e. the clock 324 drifted backwards and we'd risk generating colliding IDs, we toggle a bit - stored from here on out in 325 each **sno** generated *until the next regression*. Rinse, repeat - tick, tock. 326 327 (\*IDs created with a user-defined time are exempt from this mechanism as their time is arbitrary. The means 328 to *bring your own time* are provided to make porting old IDs simpler and is assumed to be done before an ID 329 scheme goes online) 330 331 In practice this means that we switch back and forth between two alternating timelines. Remember how the pool 332 we've got is 16,384,000 IDs per second? When we tick or tock, we simply jump between two pools with the same 333 capacity. 334 335 Why not simply use that bit to store a higher resolution time fraction? True, we'd get twice the pool which 336 seemingly boils down to the same - except it doesn't. That is due to how the sequence increases. Even if you 337 had a throughput of merely 1 ID per hour, while the chance would be astronomically low - if the clock drifted 338 back that whole hour, you *could* get a collision. The higher your throughput, the bigger the probability. 339 ID's of the Snowflake variant, **sno** being one of them, are about **guarantees - not probabilities**. 340 So this is a **sno-go**. 341 342 (I will show myself out...) 343 344 The simplistic approach of tick-tocking *entirely eliminates* that collision chance - but with a rigorous assumption: 345 regressions happen at most once into a specific period, i.e. from the highest recorded time into the past 346 and never back into that particular timeframe (let alone even further into the past). 347 348 This *generally* is exactly the case but oddities as far as time synchronization, bad clocks and NTP client 349 behaviour goes *do* happen. And in distributed systems, every edge case that can happen - *will* happen. What do? 350 351 ##### How others do it 352 353 - [Sonyflake] goes to sleep until back at the wall clock time it was already at 354 previously. All goroutines attempting to generate are blocked. 355 - [snowflake] hammers the OS with syscalls to get the current time until back 356 at the time it was already at previously. All goroutines attempting to generate are blocked. 357 - [xid] goes ¯\\_(ツ)_/¯ and does not tackle drifts at all. 358 - Entropy-based specs (like UUID or KSUID) don't really need to care as they are generally not prone, even to 359 extreme drifts - you run with a risk all the time. 360 361 The approach one library took was to keep generating, but timestamp all IDs with the highest time recorded instead. 362 This worked, because it had a large entropy pool to work with, for one (so a potential large spike in IDs generated 363 in the same timeframe wasn't much of a consideration). **sno** has none. But more importantly - it disagrees on the 364 reasoning about time and clocks. If we moved backwards, it means that an *adjustment* happened and we are *now* 365 closer to the *correct* time from the perspective of a wider system. 366 367 **sno** therefore keeps generating without waiting, using the time as reported by the system - in the "past" so to 368 speak, but with the tick-tock bit toggled. 369 370 *If* another regression happens, into that timeframe or even further back, *only then* do we tell all contenders 371 to wait. We get a wait-free fast path *most of the time* - and safety if things go southways. 372 373 ##### Tick-tocking obviously affects the sort order as it changes the timestamp 374 375 Even though the toggle is *not* part of the milliseconds, you can think of it as if it were. Toggling is then like 376 moving two milliseconds back and forth, but since our milliseconds are floored to increments of 4msec, we never 377 hit the range of a previous timeframe. Alternating timelines are as such sorted *as if* they were 2msec apart from 378 each other, but as far as the actual stored time is considered - they are timestamped at exactly the same millisecond. 379 380 They won't sort in an interleaved fashion, but will be *right next* to the other timeline. Technically they *were* 381 created at a different time, so being able to make that distinction is considered a plus by the author. 382 383 </p> 384 </details> 385 <br /><br /> 386 387 ## Metabyte 388 389 The **metabyte** is unique to **sno** across the specs the author researched, but the concept of embedding metadata 390 in IDs is an ancient one. It's effectively just a *byte-of-whatever-you-want-it-to-be* - but perhaps 391 *8-bits-of-whatever-you-want-them-to-be* does a better job of explaining its versatility. 392 393 ### `0` is a valid metabyte 394 395 **sno** is agnostic as to what that byte represents and it is **optional**. None of the properties of **sno**s 396 get violated if you simply pass a `0`. 397 398 However, if you can't find use for it, then you may be better served using a different ID spec/library 399 altogether (➜ [Alternatives](#alternatives)). You'd be wasting a byte that could give you benefits elsewhere. 400 401 ### Why? 402 403 Many databases, especially embedded ones, are extremely efficient when all you need is the keys - not all 404 the data all those keys represent. None of the Snowflake-like specs would provide a means to do that without 405 excessive overrides (or too small a pool to work with), essentially a different format altogether, and so - **sno**. 406 407 <details> 408 <summary> 409 And simple constants tend to do the trick. 410 </summary> 411 <p> 412 413 Untyped integers can pass as `uint8` (i.e. `byte`) in Go, so the following would work and keep things tidy: 414 415 ```go 416 const ( 417 PersonType = iota 418 OtherType 419 ) 420 421 type Person struct { 422 ID sno.ID 423 Name string 424 } 425 426 person := Person{ 427 ID: sno.New(PersonType), 428 Name: "A Special Snöflinga", 429 } 430 ``` 431 </p> 432 </details> 433 434 <br /> 435 436 *Information that describes something* has the nice property of also helping to *identify* something across a sea 437 of possibilities. It's a natural fit. 438 439 Do everyone a favor, though, and **don't embed confidential information**. It will stop being confidential and 440 become public knowledge the moment you do that. Let's stick to *nice* property, avoiding `PEBKAC`. 441 442 ### Sort order and placement 443 444 The metabyte follows the timestamp. This clusters IDs by the timestamp and then by the metabyte (for example - 445 the type of the entity), *before* the fixed partition. 446 447 If you were to use machine-ID based partitions across a cluster generating, say, `Person` entities, where `Person` 448 corresponds to a metabyte of `1` - this has the neat property of grouping all `People` generated across the entirety 449 of your system in the given timeframe in a sortable manner. In database terms, you *could* think of the metabyte as 450 identifying a table that is sharded across many partitions - or as part of a compound key. But that's just one of 451 many ways it can be utilized. 452 453 Placement at the beginning of the second block allows the metabyte to potentially both extend the timestamp 454 block or provide additional semantics to the payload block. Even if you always leave it empty, sort 455 order nor sort/insert performance won't be hampered. 456 457 ### But it's just a single byte! 458 459 A single byte is plenty. 460 461 <details> 462 <summary>Here's a few <em>ideas for things you did not know you wanted, yet</em>.</summary> 463 <p> 464 465 - IDs for requests in a HTTP context: 1 byte is enough to contain one of all possible standard HTTP status codes. 466 *Et voila*, you now got all requests that resulted in an error nicely sorted and clustered. 467 <br />Limit yourself to the non-exotic status codes and you can store the HTTP verb along with the status code. 468 In that single byte. Suddenly even the partition (if it's tied to a machine/cluster) gains relevant semantics, 469 as you've gained a timeseries of requests that started fail-cascading in the cluster. Constrain yourself even 470 further to just one bit for `OK` or `ERROR` and you made room to also store information about the operation that 471 was requested (think resource endpoint). 472 473 - How about storing a (immutable) bitmask along with the ID? Save some 7 bytes of bools by doing so and have the 474 flags readily available during an efficient sequential key traversal using your storage engine of choice. 475 476 - Want to version-control a `Message`? Limit yourself to at most 256 versions and it becomes trivial. Take the ID 477 of the last version created, increment its metabyte - and that's it. What you now have is effectively a simplistic 478 versioning schema, where the IDs of all possible versions can be inferred without lookups, joins, indices and whatnot. 479 And many databases will just store them *close* to each other. Locality is a thing. 480 <br />How? The only part that changed was the metabyte. All other components remained the same, but we ended up with 481 a new ID pointing to the most recent version. Admittedly the timestamp lost its default semantics of 482 *moment of creation* and instead is *moment of creation of first version*, but you'd store a `revisedAt` timestamp 483 anyways, wouldn't you?<br />And if you *really* wanted to support more versions - the IDs have certain properties 484 that can be (ab)used for this. Increment this, decrement that... 485 486 - Sometimes a single byte is all the data that you actually need to store, along with the time 487 *when something happened*. Batch processing succeeded? `sno.New(0)`, done. Failed? `sno.New(1)`, done. You now 488 have a uniquely identifiable event, know *when* and *where* it happened, what the outcome was - and you still 489 had 7 spare bits (for higher precision time, maybe?) 490 491 - Polymorphism has already been covered. Consider not just data storage, but also things like (un)marshaling 492 polymorphic types efficiently. Take a JSON of `{id: "aaaaaaaa55aaaaaa", foo: "bar", baz: "bar"}`. 493 The 8-th and 9-th (0-indexed) characters of the ID contain the encoded bits of the metabyte. Decode that 494 (use one of the utilities provided by the library) and you now know what internal type the data should unmarshal 495 to without first unmarshaling into an intermediary structure (nor rolling out a custom decoder for this type). 496 There are many approaches to tackle this - an ID just happens to lend itself naturally to solve it and is easily 497 portable. 498 499 - 2 bytes for partitions not enough for your needs? Use a fixed byte as the metabyte -- you have extended the 500 fixed partition to 3 bytes. Wrap a generator with a custom one to apply that metabyte for you each time you use it. 501 The metabyte is, after all, part of the partition. It's just separated out for semantic purposes but its actual 502 semantics are left to you. 503 </p> 504 </details> 505 506 <br /> 507 508 ## Encoding 509 510 The encoding is a **custom base32** variant stemming from base32hex. Let's *not* call it *sno32*. 511 A canonically encoded **sno** is a regexp of `[2-9a-x]{16}`. 512 513 The following alphabet is used: 514 515 ``` 516 23456789abcdefghijklmnopqrstuvwx 517 ``` 518 519 This is 2 contiguous ASCII ranges: `50..57` (digits) and `97..120` (*strictly* lowercase letters). 520 521 On `amd64` encoding/decoding is vectorized and **[extremely fast](./benchmark#encodingdecoding)**. 522 523 <br /> 524 525 ## Alternatives 526 527 | Name | Binary (bytes) | Encoded (chars)* | Sortable | Random** | Metadata | nsec/ID 528 |------------:|:--------------:|:----------------:|:---------:|:---------:|:--------:|--------: 529 | [UUID] | 16 | 36 | ![no] | ![yes] | ![no] | ≥36.3 530 | [KSUID] | 20 | 27 | ![yes] | ![yes] | ![no] | 206.0 531 | [ULID] | 16 | 26 | ![yes] | ![yes] | ![no] | ≥50.3 532 | [Sandflake] | 16 | 26 | ![yes] | ![meh] | ![no] | 224.0 533 | [cuid] | ![no] | 25 | ![yes] | ![meh] | ![no] | 342.0 534 | [xid] | 12 | 20 | ![yes] | ![no] | ![no] | 19.4 535 | **sno** | 10 | **16** | ![yes] | ![no] | ![yes] | **8.8** 536 | [Snowflake] | **8** | ≤20 | ![yes] | ![no] | ![no] | 28.9 537 538 539 [UUID]: https://github.com/gofrs/uuid 540 [KSUID]: https://github.com/segmentio/ksuid 541 [cuid]: https://github.com/lucsky/cuid 542 [Snowflake]: https://github.com/bwmarrin/snowflake 543 [Sonyflake]: https://github.com/sony/sonyflake 544 [Sandflake]: https://github.com/celrenheit/sandflake 545 [ULID]: https://github.com/oklog/ulid 546 [xid]: https://github.com/rs/xid 547 548 [yes]: ./.github/ico-yes.svg 549 [meh]: ./.github/ico-meh.svg 550 [no]: ./.github/ico-no.svg 551 552 \* Using canonical encoding.<br /> 553 \** When used with a proper CSPRNG. The more important aspect is the distinction between entropy-based and 554 coordination-based IDs. [Sandflake] and [cuid] do contain entropy, but not sufficient to rely on entropy 555 alone to avoid collisions (3 bytes and 4 bytes respectively).<br /> 556 557 For performance results see ➜ [Benchmark](./benchmark). `≥` values given for libraries which provide more 558 than one variant, whereas the fastest one is listed. 559 560 561 <br /><br /> 562 563 ## Attributions 564 565 **sno** is both based on and inspired by [xid] - more so than by the original Snowflake - but the changes it 566 introduces are unfortunately incompatible with xid's spec. 567 568 ## Further reading 569 570 - [Original Snowflake implementation](https://github.com/twitter-archive/snowflake/tree/snowflake-2010) and 571 [related post](https://blog.twitter.com/engineering/en_us/a/2010/announcing-snowflake.html) 572 - [Mongo ObjectIds](https://docs.mongodb.com/manual/reference/method/ObjectId/) 573 - [Instagram: Sharding & IDs at Instagram](https://instagram-engineering.com/sharding-ids-at-instagram-1cf5a71e5a5c) 574 - [Flickr: Ticket Servers: Distributed Unique Primary Keys on the Cheap](http://code.flickr.net/2010/02/08/ticket-servers-distributed-unique-primary-keys-on-the-cheap/) 575 - [Segment: A brief history of the UUID](https://segment.com/blog/a-brief-history-of-the-uuid/) - about KSUID and the shortcomings of UUIDs. 576 - [Farfetch: Unique integer generation in distributed systems](https://www.farfetchtechblog.com/en/blog/post/unique-integer-generation-in-distributed-systems) - uint32 utilizing Cassandra to coordinate. 577 578 Also potentially of interest: 579 - [Lamport timestamps](https://en.wikipedia.org/wiki/Lamport_timestamps) (vector/logical clocks) 580 - [The Bloom Clock](https://arxiv.org/pdf/1905.13064.pdf) by Lum Ramabaja