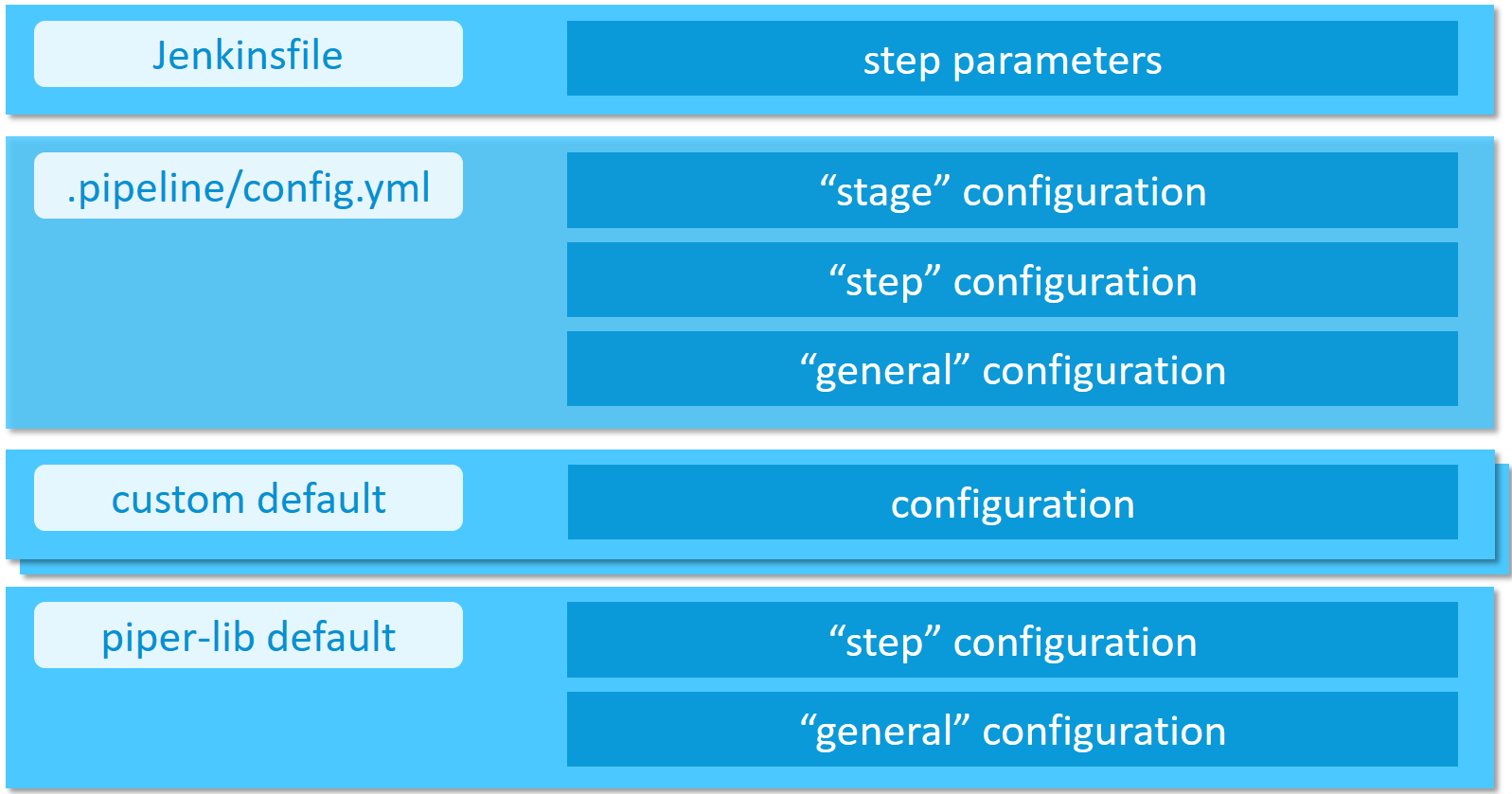

# Configuration

Configure your project through a YAML file located at `.pipeline/config.yml` in the **master branch** of your source code repository.

Your configuration inherits from the default configuration located at [https://github.com/SAP/jenkins-library/blob/master/resources/default_pipeline_environment.yml](https://github.com/SAP/jenkins-library/blob/master/resources/default_pipeline_environment.yml).

!!! caution "Adding custom parameters"
    Please note that adding custom parameters to the configuration is at your own risk.
    We may introduce new parameters at any time which may clash with your custom parameters.

Configuration of the project "Piper" steps, as well as project "Piper" templates, can be done in a hierarchical manner.
The levels are listed from highest to lowest precedence:

1. Directly passed step parameters always take precedence over other configuration values and defaults
1. Stage configuration parameters define a Jenkins pipeline stage-dependent set of parameters (e.g. deployment options for the `Acceptance` stage)
1. Step configuration defines how steps behave in general (e.g. step `cloudFoundryDeploy`)
1. General configuration parameters define parameters which are available across step boundaries
1. Custom default configuration provided by the user through a reference in the `customDefaults` parameter of the project configuration
1. Default configuration comes with the project "Piper" library and is always available

## Collecting telemetry data

To improve this Jenkins library, we are collecting telemetry data.
Data is sent using [`com.sap.piper.pushToSWA`](https://github.com/SAP/jenkins-library/blob/master/src/com/sap/piper/Utils.groovy)

For example, the following (non-personal) data is collected:

* Hashed job URL, e.g.
  `4944f745e03f5f79daf0001eec9276ce351d3035` (the hash is calculated on your Jenkins server and no original values are transmitted)
* Name of the library step which has been executed, e.g. `artifactSetVersion`
* Certain parameters of the executed steps, e.g. `buildTool=maven`

**We store the telemetry data for no longer than 6 months on premises of SAP SE.**

!!! note "Disable collection of telemetry data"
    If you do not want to send telemetry data, you can easily deactivate this.

    This can be done in either of the following two ways:

    1. General deactivation in your `.pipeline/config.yml` file by setting the configuration parameter `general -> collectTelemetryData: false` (the default setting can be found in the [library defaults](https://github.com/SAP/jenkins-library/blob/master/resources/default_pipeline_environment.yml)).

        **Please note: this will only take effect in all steps if you run `setupCommonPipelineEnvironment` at the beginning of your pipeline**

    2. Individual deactivation per step by passing the parameter `collectTelemetryData: false`, e.g. `setVersion script:this, collectTelemetryData: false`

## Example configuration

```yaml
general:
  gitSshKeyCredentialsId: GitHub_Test_SSH

steps:
  cloudFoundryDeploy:
    deployTool: 'cf_native'
    cloudFoundry:
      org: 'testOrg'
      space: 'testSpace'
      credentialsId: 'MY_CF_CREDENTIALSID_IN_JENKINS'
  newmanExecute:
    newmanCollection: 'myNewmanCollection.file'
    newmanEnvironment: 'myNewmanEnvironment'
    newmanGlobals: 'myNewmanGlobals'
```

## Sending log data to the SAP Alert Notification service for SAP BTP

The SAP Alert Notification service for SAP BTP allows users to define
certain delivery channels, for example, e-mail or triggering of HTTP
requests, to receive notifications from pipeline events.
If the alert
notification service service-key is properly configured in "Piper", any "Piper"
step implemented in golang will send log data to the alert notification
service backend for log levels of warning and above, i.e. warning, error,
fatal and panic.

The SAP Alert Notification service event properties are defined depending on the
log entry content as follows:

- `eventType`: the type of event (defaults to 'Piper', but can be
  overwritten with the event template)
- `eventTimestamp`: the time of the log entry
- `severity` and `category`: the event severity and the event category
  depend on the log level:

    | log level | severity | category  |
    |-----------|----------|-----------|
    | info      | INFO     | NOTICE    |
    | debug     | INFO     | NOTICE    |
    | warn      | WARNING  | ALERT     |
    | error     | ERROR    | EXCEPTION |
    | fatal     | FATAL    | EXCEPTION |
    | panic     | FATAL    | EXCEPTION |

- `subject`: short description of the event (defaults to 'Step <step_name> sends <severity>', but
  can be overwritten with the event template)
- `body`: the log message
- `priority`: (optional) an integer number in the range [1:1000] (not set by
  "Piper", but can be set with the event template)
- `tags`: optional key-value pairs.
  The following are set by "Piper":

    - `ans:correlationId`: a unique correlation ID of the pipeline run
      (defaults to the URL of that pipeline run, but can be overwritten with the
      event template)
    - `ans:sourceEventId`: also set to the "Piper" correlation ID (can also be
      overwritten with the event template)
    - `cicd:stepName`: the "Piper" step name
    - `cicd:logLevel`: the "Piper" log level
    - `cicd:errorCategory`: the "Piper" error category, if available

- `resource`: the following default properties are set by "Piper":

    - `resourceType`: resource type identifier (defaults to 'Pipeline', but
      can be overwritten with the event template)
    - `resourceName`: unique resource name (defaults to 'Pipeline', can be
      overwritten with the event template)
    - `resourceInstance`: (optional) resource instance identifier (not set by
      "Piper", can be set with the event template)
    - `tags`: optional key-value pairs.

The following event properties cannot be set and are instead set by the SAP
Alert Notification service:
`region`, `regionType`, `resource.globalAccount`, `resource.subAccount` and
`resource.resourceGroup`

For more information and an example of the structure of an alert
notification service event, see
[SAP Alert Notification Service Events](https://help.sap.com/viewer/5967a369d4b74f7a9c2b91f5df8e6ab6/Cloud/en-US/eaaa37e6ff62486ebb849507dc33abc6.html)
in the SAP Help Portal.

### SAP Alert Notification service configuration

There are two options that can be configured: the mandatory service-key and
the optional event template.

#### Service-Key

The SAP Alert Notification service service-key needs to be present in the
environment, where the "Piper" binary is run.
See the
[Credential Management guide](https://help.sap.com/docs/ALERT_NOTIFICATION/5967a369d4b74f7a9c2b91f5df8e6ab6/80fe24f86bde4e3aac2903ac05511835.html?locale=en-US)
in the SAP Help Portal on how to retrieve an alert notification service
service-key. The environment variable used is: `PIPER_ansHookServiceKey`.

If Jenkins is used to run "Piper", you can use the Jenkins credential store
to store the alert notification service service-key as a "Secret Text"
credential. Provide the credential ID in a custom defaults file
([described below](#custom-default-configuration)) as follows:

```yaml
hooks:
  ans:
    serviceKeyCredentialsId: 'my_ANS_Service_Key'
```

!!! warning
    It is **not** possible to configure the above in the project configuration file, i.e. in `.pipeline/config.yml`

#### Event template

You can also create an event template in JSON format to overwrite
or add event details to the default. To do this, provide the JSON string
directly in the environment where the "Piper" binary is run. The environment
variable used in this case is: `PIPER_ansEventTemplate`.

For example, on Unix:

```bash
export PIPER_ansEventTemplate='{"priority": 999}'
```

The event body, timestamp, severity and category cannot be set via the
template. They are always set from the log entry.

## Collecting telemetry and logging data for Splunk

Splunk gives the ability to analyze any kind of logging information and to visualize the retrieved information in dashboards.
To do so, we support sending telemetry information, as well as logging information in case of a failed step, to a Splunk HTTP Event Collector (HEC) endpoint.

The following data will be sent to the endpoint if activated:

* Hashed pipeline URL
* Hashed Build URL
* StageName
* StepName
* ExitCode
* Duration (of each step)
* ErrorCode
* ErrorCategory
* CorrelationID (not hashed)
* CommitHash (Head commit hash of current build.)
* Branch
* GitOwner
* GitRepository

The information will be sent to the Splunk endpoint specified in the config file. By default, the Splunk mechanism is
deactivated. It is only activated if you add a `hooks` -> `splunk` section to your config, as in the following example:

```yaml
general:
  gitSshKeyCredentialsId: GitHub_Test_SSH

steps:
  cloudFoundryDeploy:
    deployTool: 'cf_native'
    cloudFoundry:
      org: 'testOrg'
      space: 'testSpace'
      credentialsId: 'MY_CF_CREDENTIALSID_IN_JENKINS'
hooks:
  splunk:
    dsn: 'YOUR SPLUNK HEC ENDPOINT'
    token: 'YOURTOKEN'
    index: 'SPLUNK INDEX'
    sendLogs: true
```

`sendLogs` is a boolean. If set to `true`, the Splunk hook will send the collected logs in case of a failure of the step.
If no failure occurred, no logs will be sent.

### What does the sent data look like

In case of a failure, we send the collected messages in the field `messages` and the telemetry information in `telemetry`. By default, piper sends the log messages in batches. The default batch size is `1000` messages. As an example:
If you encounter an error in a step that created `5k` log messages, piper will send five messages, each containing a batch of log messages and the telemetry information.
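
The batching arithmetic described above can be illustrated with a small Python sketch. This is not the actual implementation (the Piper steps are written in golang); the function name `batch_messages` and its `batch_size` parameter are hypothetical and only mirror the default of `1000` stated in the text.

```python
from typing import Iterator, List


def batch_messages(messages: List[str], batch_size: int = 1000) -> Iterator[List[str]]:
    """Yield consecutive slices of at most `batch_size` log messages.

    Hypothetical helper for illustration only, not part of Piper.
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(messages), batch_size):
        yield messages[start:start + batch_size]


# A failed step that produced 5000 log messages results in five batches.
logs = [f"log line {i}" for i in range(5000)]
batches = list(batch_messages(logs))
print(len(batches))  # 5
```

Under these assumptions, each batch would be sent as its own event together with the same telemetry block.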

Each payload has the following structure, shown here with a single log message:

```json
{
  "messages": [
    {
      "time": "2021-04-28T17:59:19.9376454Z",
      "message": "Project example pipeline exists...",
      "data": {
        "library": "",
        "stepName": "checkmarxExecuteScan"
      }
    }
  ],
  "telemetry": {
    "PipelineUrlHash": "73ece565feca07fa34330c2430af2b9f01ba5903",
    "BuildUrlHash": "ec0aada9cc310547ca2938d450f4a4c789dea886",
    "StageName": "",
    "StepName": "checkmarxExecuteScan",
    "ExitCode": "1",
    "Duration": "52118",
    "ErrorCode": "1",
    "ErrorCategory": "undefined",
    "CorrelationID": "https://example-jaasinstance.corp/job/myApp/job/microservice1/job/master/10/",
    "CommitHash": "961ed5cd98fb1e37415a91b46a5b9bdcef81b002",
    "Branch": "master",
    "GitOwner": "piper",
    "GitRepository": "piper-splunk"
  }
}
```

## Access to the configuration from custom scripts

Configuration is loaded into `commonPipelineEnvironment` during step [setupCommonPipelineEnvironment](steps/setupCommonPipelineEnvironment.md).

You can access the configuration values via `commonPipelineEnvironment.configuration`, which returns the complete configuration map.

For example, the following expression accesses `gitSshKeyCredentialsId` from the `general` section:

```groovy
commonPipelineEnvironment.configuration.general.gitSshKeyCredentialsId
```

## Access to configuration in custom library steps

Within library steps the `ConfigurationHelper` object is used.

You can see its usage in all the Piper steps, for example [newmanExecute](https://github.com/SAP/jenkins-library/blob/master/vars/newmanExecute.groovy#L23).

## Custom default configuration

For projects that are composed of multiple repositories (microservices), it might be desired to provide custom default configurations.
To do that, create a YAML file which is accessible from your CI/CD environment and configure it in your project configuration.
For example, the custom default configuration can be stored in a GitHub repository and accessed via the "raw" URL:

```yaml
customDefaults: ['https://my.github.local/raw/someorg/custom-defaults/master/backend-service.yml']
general:
  ...
```

Note, the parameter `customDefaults` is required to be a list of strings and needs to be defined as a separate section of the project configuration.
In addition, the item order in the list implies the precedence, i.e., the last item of the `customDefaults` list has the highest precedence.

It is important to ensure that the HTTP response body is proper YAML, as the pipeline will attempt to parse it.

Anonymous read access to the `custom-defaults` repository is required.

The custom default configuration is merged with the project's `.pipeline/config.yml`.
Note, the project's config takes precedence, so you can override the custom default configuration in your project's local configuration.
This might be useful to provide a default value that needs to be changed only in some projects.
An overview of the configuration hierarchy is given at the beginning of this page.

If you have different types of projects, they might require different custom default configurations.
For example, you might not require all projects to have a certain code check (like Whitesource, etc.) active.
This can be achieved by having multiple YAML files in the _custom-defaults_ repository.
Configure the URL to the respective configuration file in the projects as described above.
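
To make the precedence rules concrete, here is a minimal Python sketch of how the layers described on this page could combine. It is an illustration, not the library's merge logic: the function names `deep_merge` and `resolve_configuration` are hypothetical, the sample values (`sharedOrg`, `dev`, `backend`) are made up, and it assumes that nested maps are merged key by key. Stage configuration and directly passed step parameters are left out.

```python
from typing import Any, Dict, List

Config = Dict[str, Any]


def deep_merge(base: Config, override: Config) -> Config:
    """Return a new map in which values from `override` win over `base`.

    Assumption for this sketch: nested maps are merged key by key.
    """
    merged = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = value
    return merged


def resolve_configuration(library_defaults: Config,
                          custom_defaults: List[Config],
                          project_config: Config) -> Config:
    """Apply layers from lowest to highest precedence (hypothetical helper)."""
    result = library_defaults
    for layer in custom_defaults:  # the last list item has the highest precedence
        result = deep_merge(result, layer)
    return deep_merge(result, project_config)  # the project config wins


library_defaults = {
    "general": {"collectTelemetryData": True},
    "steps": {"cloudFoundryDeploy": {"deployTool": "cf_native"}},
}
custom_defaults = [
    {"steps": {"cloudFoundryDeploy": {"cloudFoundry": {"org": "sharedOrg", "space": "dev"}}}},
    {"steps": {"cloudFoundryDeploy": {"cloudFoundry": {"space": "backend"}}}},
]
project_config = {
    "general": {"collectTelemetryData": False},
    "steps": {"cloudFoundryDeploy": {"cloudFoundry": {"org": "testOrg"}}},
}

effective = resolve_configuration(library_defaults, custom_defaults, project_config)
print(effective["steps"]["cloudFoundryDeploy"]["cloudFoundry"])
# {'org': 'testOrg', 'space': 'backend'}
```

In this sketch, `space` comes from the second custom defaults file because it is listed last, while `org` comes from the project configuration because it overrides both custom defaults.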