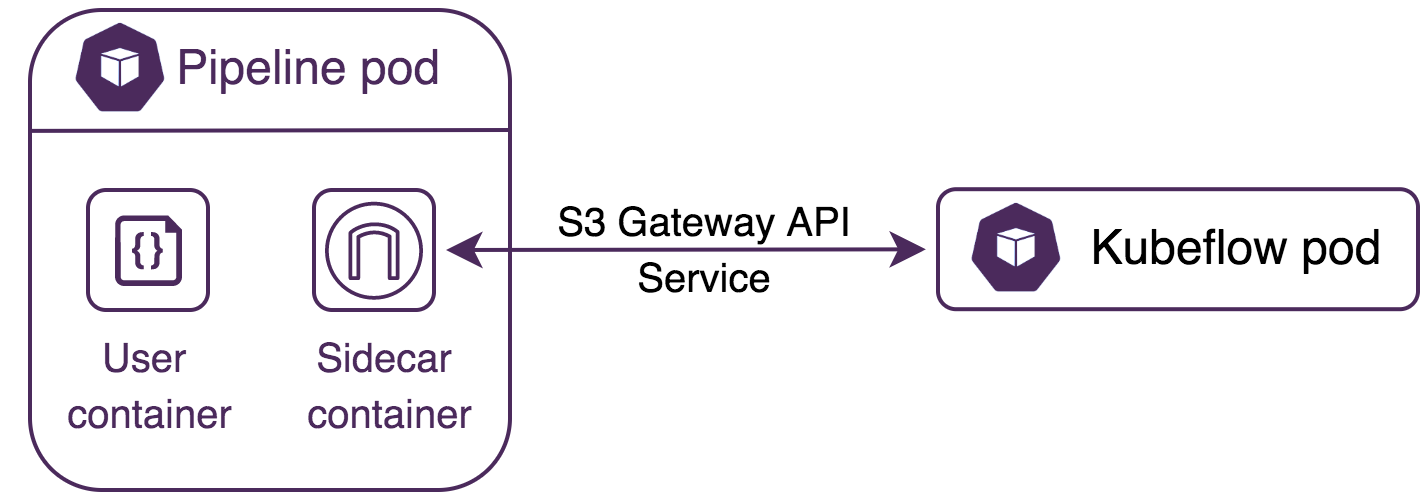

github.com/pachyderm/pachyderm@v1.13.4/doc/docs/1.10.x/deploy-manage/manage/s3gateway/deploy-s3gateway-sidecar.md (about) 1 # Create an S3-enabled Pipeline 2 3 If you want to use Pachyderm with such platforms like Kubeflow or 4 Apache™ Spark, you need to create an S3-enabled Pachyderm pipeline. 5 Such a pipeline ensures that data provenance of the pipelines that 6 run in those external systems is properly preserved and is tied to 7 corresponding Pachyderm jobs. 8 9 Pachyderm can deploys the S3 gateway in the `pachd` pod. Also, 10 you can deploy a separate S3 gateway instance as a sidecar container 11 in your pipeline worker pod. The former is 12 typically used when you need to configure an ingress or egress with 13 object storage tooling, such as MinIO, boto3, and others. The latter 14 is needed when you use Pachyderm with external data processing 15 platforms, such as Kubeflow or Apache Spark, that interact with 16 object stores but do not work with local file systems. 17 18 The master S3 gateway exists independently and outside of the 19 pipeline lifecycle. Therefore, if a 20 Kubeflow pod connects through the master S3 gateway, the Pachyderm pipelines 21 created in Kubeflow do not properly maintain data provenance. When the 22 S3 functionality is exposed through a sidecar instance in the 23 pipeline worker pod, Kubeflow can access the files stored in S3 buckets 24 in the pipeline pod, which ensures the provenance is maintained 25 correctly. The S3 gateway sidecar instance is created together with the 26 pipeline and shut down when the pipeline is destroyed. 27 28 The following diagram shows communication between the S3 gateway 29 deployed in a sidecar and the Kubeflow pod. 30 31  32 33 ## Limitations 34 35 Pipelines exposed through a sidecar S3 gateway have the following limitations: 36 37 * As with a standard Pachyderm pipeline, in which the input repo is read-only 38 and output is write-only, the same applies to using the S3 gateway within 39 pipelines. The input bucket(s) are read-only and the output bucket that 40 you define using the `s3_out` parameter is write-only. This limitation 41 guarantees that pipeline provenance is preserved just as it is with normal 42 Pachyderm pipelines. 43 44 * The `glob` field in the pipeline must be set to `"glob": "/"`. All files 45 are processed as a single datum. In this configuration, already processed 46 datums are not skipped which 47 could be an important performance consideration for some processing steps. 48 49 * Join and union inputs are not supported, but you can create a cross or 50 a single input. 51 52 * You can create a cross of an S3-enabled input with a non-S3 input. 53 For a non-S3 input in such a cross you can still specify a glob pattern. 54 55 * Statistics collection for S3-enabled pipelines is not supported. If you 56 set `"s3_out": true`, you need to disable the `enable_stats` 57 parameter in your pipeline. 58 59 ## Expose a Pipeline through an S3 Gateway in a Sidecar 60 61 When you work with platforms like Kubeflow or Apache Spark, you need 62 to spin up an S3 gateway instance that runs alongside the pipeline worker 63 pod as a sidecar container. To do so, set the `s3` parameter in the `input` 64 part of your pipeline specification to `true`. When enabled, this parameter 65 mounts S3 buckets for input repositories in the S3 gateway sidecar instance 66 instead of in `/pfs/`. You can set this property for each PFS input in 67 a pipeline. The address of the input repository will be `s3://<input_repo>`. 68 69 You can also expose the output repository through the same S3 gateway 70 instance by setting the `s3_out` property to `true` in the root of 71 the pipeline spec. If set to `true`, Pachyderm creates another S3 bucket 72 on the sidecar, and the output files will be written there instead of 73 `/pfs/out`. By default, `s3_out` is set to `false`. The address of the 74 output repository will be `s3://<output_repo>`, which is always the name 75 of the pipeline. 76 77 You can connect to the S3 gateway sidecar instance through its Kubernetes 78 service. To access the sidecar instance and the buckets on it, you need 79 to know the address of the buckets. Because PPS handles all permissions, 80 no special authentication configuration is needed. 81 82 The following text is an example of a pipeline exposed through a sidecar 83 S3 gateway instance: 84 85 ```json 86 { 87 "pipeline": { 88 "name": "test" 89 }, 90 "input": { 91 "pfs": { 92 "glob": "/", 93 "repo": "s3://images", 94 "s3": "true" 95 } 96 }, 97 "transform": { 98 "cmd": [ "python3", "/edges.py" ], 99 "image": "pachyderm/opencv" 100 }, 101 "s3_out": true 102 } 103 ``` 104 105 !!! note "See Also:" 106 - [Pipeline Specification](../../../../reference/pipeline_spec/#input-required) 107 - [Configure Environment Variables](../../../deploy/environment-variables/)