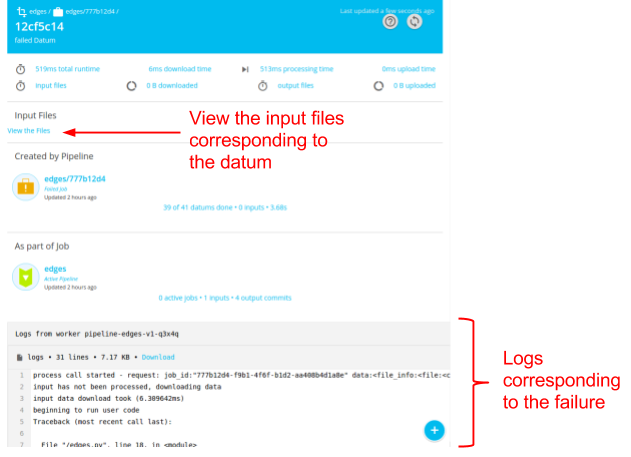

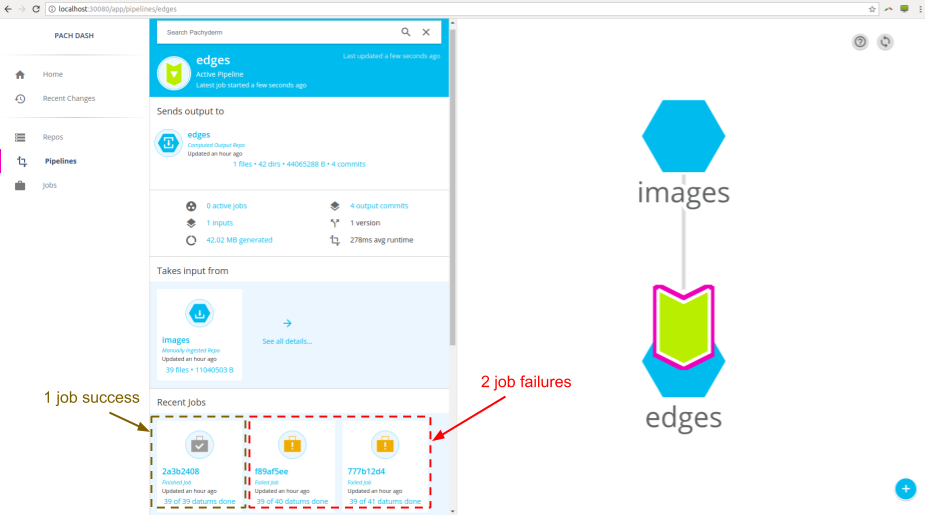

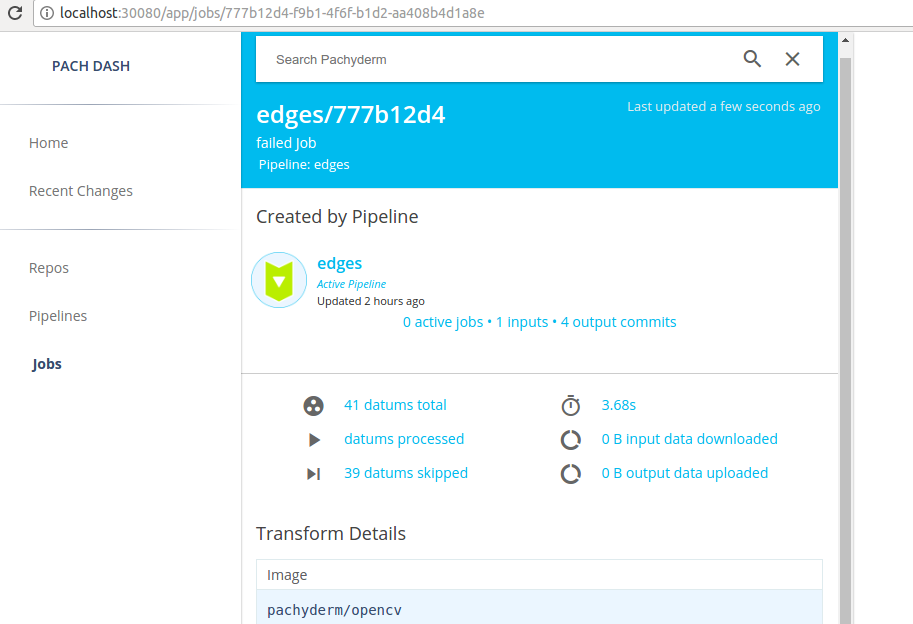

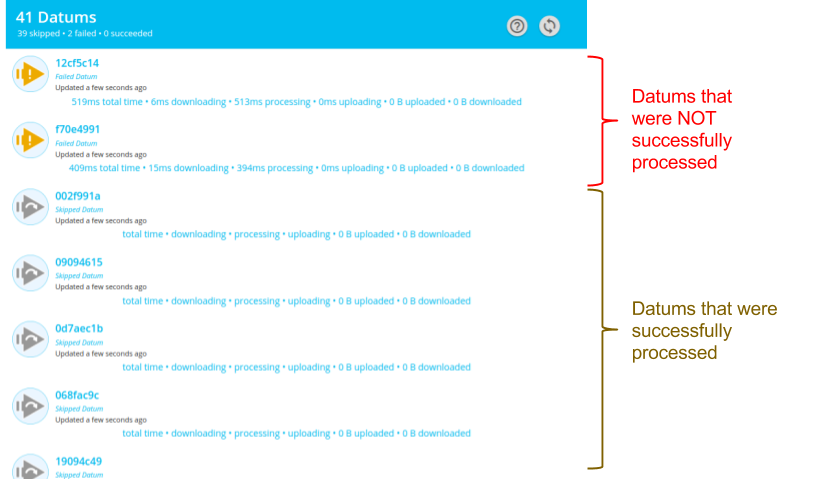

github.com/pachyderm/pachyderm@v1.13.4/doc/docs/1.10.x/enterprise/stats.md (about) 1 # Advanced Statistics 2 3 To use the advanced statistics features in Pachyderm, you need to: 4 5 1. Run your pipelines on a Pachyderm cluster. 6 2. Enable stats collection in your pipelines by including 7 `"enable_stats": true` in your [pipeline specification](https://docs.pachyderm.com/latest/reference/pipeline_spec/#enable-stats-optional). 8 9 Advanced statistics provides the following information for any jobs 10 corresponding to your pipelines: 11 12 - The amount of data that was uploaded and downloaded during the job and on a per-datum 13 level. 14 - The time spend uploading and downloading data on a per-datum level. 15 - The amount of data uploaded and downloaded on a per-datum level. 16 - The total time spend processing on a per-datum level. 17 - Success/failure information on a per-datum level. 18 - The directory structure of input data that was seen by the job. 19 20 The primary and recommended way to view this information is via the 21 Pachyderm Enterprise dashboard. However, the same information is 22 available through the `pachctl inspect datum` and `pachctl list datum` 23 commands or through their language client equivalents. 24 25 !!! note 26 Pachyderm recommends that you enable stats for all of your pipelines 27 and only disabling the feature for very stable, long-running pipelines. 28 In most cases, the debugging and maintenance benefits of the stats data 29 outweigh any disadvantages of storing the extra data associated with 30 the stats. Also note, none of your data is duplicated in producing the stats. 31 32 ## Enabling Stats for a Pipeline 33 34 As mentioned above, enabling stats collection for a pipeline is as simple as 35 adding the `"enable_stats": true` field to a pipeline specification. For 36 example, to enable stats collection for the [OpenCV demo pipeline](../getting_started/beginner_tutorial.md), 37 modify the pipeline specification as follows: 38 39 !!! example 40 41 ```shell 42 { 43 "pipeline": { 44 "name": "edges" 45 }, 46 "input": { 47 "pfs": { 48 "glob": "/*", 49 "repo": "images" 50 } 51 }, 52 "transform": { 53 "cmd": [ "python3", "/edges.py" ], 54 "image": "pachyderm/opencv" 55 }, 56 "enable_stats": true 57 } 58 ``` 59 60 ## Listing Stats for a Pipeline 61 62 Once the pipeline has been created and you have use it to process data, 63 you can confirm that stats are being collected with `list file`. There 64 should now be stats data in the output repo of the pipeline under a 65 branch called `stats`: 66 67 !!! example 68 69 ```shell 70 pachctl list file edges@stats 71 ``` 72 73 **System response:** 74 75 ```shell 76 NAME TYPE SIZE 77 002f991aa9db9f0c44a92a30dff8ab22e788f86cc851bec80d5a74e05ad12868 dir 342.7KiB 78 0597f2df3f37f1bb5b9bcd6397841f30c62b2b009e79653f9a97f5f13432cf09 dir 1.177MiB 79 068fac9c3165421b4e54b358630acd2c29f23ebf293e04be5aa52c6750d3374e dir 270.3KiB 80 0909461500ce508c330ca643f3103f964a383479097319dbf4954de99f92f9d9 dir 109.6KiB 81 ... 82 ``` 83 84 ## Accessing Stats through the command line 85 86 To view the stats for a specific datum you can use a `list file`: 87 88 !!! example 89 90 ```shell 91 pachctl list file edges@stats:/002f991aa9db9f0c44a92a30dff8ab22e788f86cc851bec80d5a74e05ad12868 92 ``` 93 94 **System response:** 95 96 ```shell 97 NAME TYPE SIZE 98 /002f991aa9db9f0c44a92a30dff8ab22e788f86cc851bec80d5a74e05ad12868/index file 1B 99 /002f991aa9db9f0c44a92a30dff8ab22e788f86cc851bec80d5a74e05ad12868/job:e448275f92604db0aa77770bddf24610 file 0B 100 /002f991aa9db9f0c44a92a30dff8ab22e788f86cc851bec80d5a74e05ad12868/logs file 0B 101 /002f991aa9db9f0c44a92a30dff8ab22e788f86cc851bec80d5a74e05ad12868/pfs dir 115.9KiB 102 /002f991aa9db9f0c44a92a30dff8ab22e788f86cc851bec80d5a74e05ad12868/stats file 136B 103 ``` 104 105 The files: index, job, logs and stats are metadata files that can be accessed using a `get file`: 106 107 !!! example 108 109 ```shell 110 pachctl get file edges@stats:/002f991aa9db9f0c44a92a30dff8ab22e788f86cc851bec80d5a74e05ad12868/stats 111 ``` 112 113 **System response:** 114 115 ```shell {"downloadTime":"0.211353702s","processTime":"0.474949018s","uploadTime":"0.567586547s","downloadBytes":"80588","uploadBytes":"38046"} 116 ``` 117 118 The pfs directory has both the input and the output data that was committed in this datum: 119 120 !!! example 121 122 ```shell 123 pachctl list file edges@stats:/002f991aa9db9f0c44a92a30dff8ab22e788f86cc851bec80d5a74e05ad12868/pfs 124 ``` 125 126 **System response:** 127 128 ```shell NAME TYPE SIZE 129 /002f991aa9db9f0c44a92a30dff8ab22e788f86cc851bec80d5a74e05ad12868/pfs/images dir 78.7KiB 130 /002f991aa9db9f0c44a92a30dff8ab22e788f86cc851bec80d5a74e05ad12868/pfs/out dir 37.15KiB 131 ``` 132 133 ## Accessing Stats Through the Dashboard 134 135 If you have deployed and activated the Pachyderm Enterprise 136 Edition, you can explore advanced statistics through the dashboard. For example, if you 137 navigate to the `edges` pipeline, you might see something similar to this: 138 139  140 141 In this example case, you can see that the pipeline has 1 recent successful 142 job and 2 recent job failures. Pachyderm advanced stats can be very helpful 143 in debugging these job failures. When you click on one of the job failures, 144 can see general stats about the failed job, such as total time, total data 145 upload/download, and so on: 146 147  148 149 To get more granular per-datum stats, click on the `41 datums total`, to get 150 the following information: 151 152  153 154 You can identify the exact datums that caused the pipeline to fail, as well 155 as the associated stats: 156 157 - Total time 158 - Time spent downloading data 159 - Time spent processing 160 - Time spent uploading data 161 - Amount of data downloaded 162 - Amount of data uploaded 163 164 If we need to, you can even go a level deeper and explore the exact details 165 of a failed datum. Clicking on one of the failed datums reveals the logs 166 that corresponds to the datum processing failure along with the exact input 167 files of the datum: 168 169