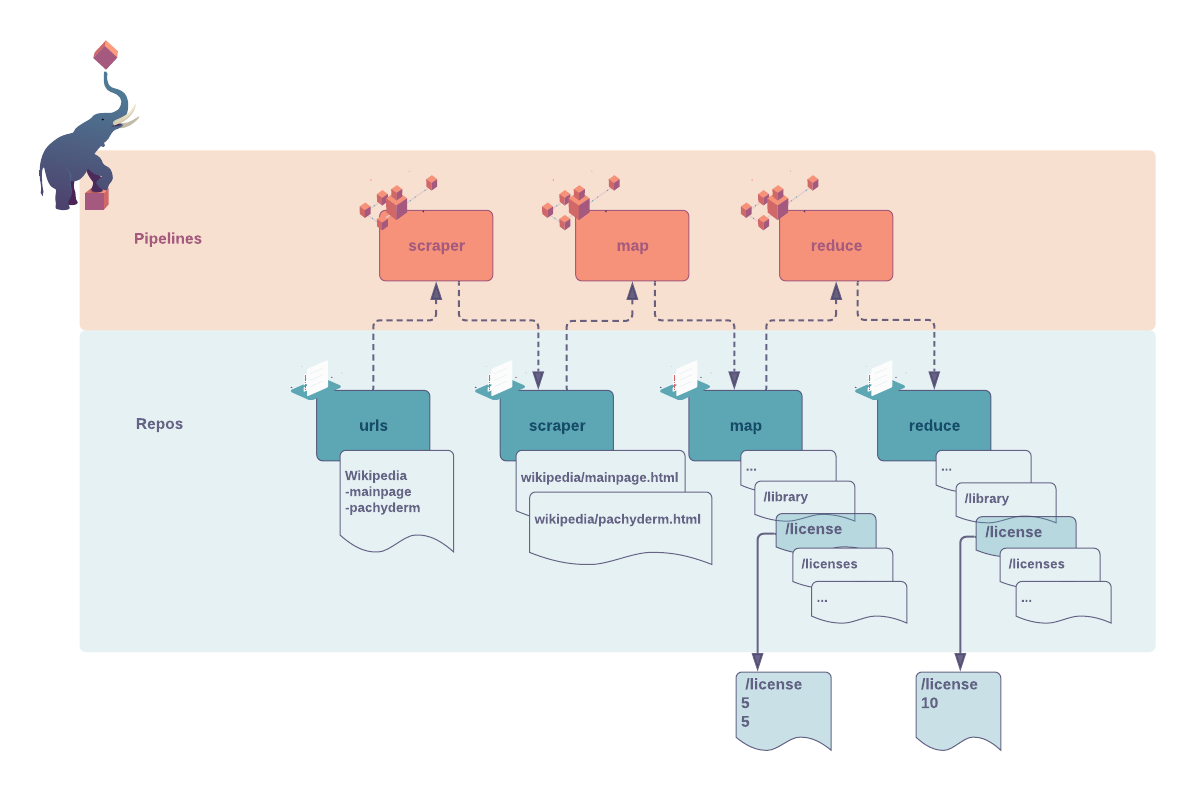

github.com/pachyderm/pachyderm@v1.13.4/examples/word_count/README.md (about) 1 > INFO - Pachyderm 2.0 introduces profound architectural changes to the product. As a result, our examples pre and post 2.0 are kept in two separate branches: 2 > - Branch Master: Examples using Pachyderm 2.0 and later versions - https://github.com/pachyderm/pachyderm/tree/master/examples 3 > - Branch 1.13.x: Examples using Pachyderm 1.13 and older versions - https://github.com/pachyderm/pachyderm/tree/1.13.x/examples 4 # Pachyderm Word Count - Map/Reduce 101 5 > New to Pachyderm? Start with the [beginner tutorial](https://docs.pachyderm.com/1.13.x/getting_started/beginner_tutorial/). 6 7 In this guide, we will write a classic MapReduce word count application in Pachyderm.A MapReduce job typically splits your input data into independent chunks that are seamlessly processed by a `map` pipeline in a parallel manner. The outputs of the maps are then input to a `reduce` pipeline which creates an aggregated content. 8 9 - In the first part of this example, we will: 10 - **Map**: Extract a list of words occurring in given web pages and create a list of text files named after each word. One line in that file represents the occurrence of the word on a page. 11 - **Reduce**: Aggregate those numbers to display the total occurrence for each word in all pages considered. 12 - In our second example, we add an additional web page and witness how the map/reduce pipelines manage our additional words. 13 14 ***Table of Contents*** 15 - [1. Getting ready](#1-getting-ready) 16 - [2. Pipelines setup](#2-pipelines-setup) 17 - [3. Example part one](#3-example-part-one) 18 - [***Step 1*** Create and populate Pachyderm's entry repo and pipelines](#step-1-create-and-populate-pachyderms-entry-repo-and-pipelines) 19 - [***Step 2*** Now, let's take a closer look at their content](#step-2-now-lets-take-a-closer-look-at-their-content) 20 - [4. Expand on the example](#4-expand-on-the-example) 21 22 23 ***Key concepts*** 24 For this example, we recommend being familiar with the following concepts: 25 - The original Map/Reduce word count example. 26 - Pachyderm's [file appending strategy](https://docs.pachyderm.com/1.13.x/concepts/data-concepts/file/#file-processing-strategies) - 27 When you put a file into a Pachyderm repository and a file by the same name already exists, Pachyderm appends the new data to the existing file by default, unless you add an `override` flag to your instruction. 28 - [Parallelism](https://docs.pachyderm.com/1.13.x/concepts/advanced-concepts/distributed_computing/) and [Glob Pattern](https://docs.pachyderm.com/1.13.x/concepts/pipeline-concepts/datum/glob-pattern/) to fine tune your performances. 29 30 ## 1. Getting ready 31 ***Prerequisite*** 32 - A workspace on [Pachyderm Hub](https://docs.pachyderm.com/1.13.x/hub/hub_getting_started/) (recommended) or Pachyderm running [locally](https://docs.pachyderm.com/1.13.x/getting_started/local_installation/). 33 - [pachctl command-line ](https://docs.pachyderm.com/1.13.x/getting_started/local_installation/#install-pachctl) installed, and your context created (i.e. you are logged in) 34 35 ***Getting started*** 36 - Clone this repo. 37 - Make sure Pachyderm is running. You should be able to connect to your Pachyderm cluster via the `pachctl` CLI. 38 Run a quick: 39 ```shell 40 $ pachctl version 41 42 COMPONENT VERSION 43 pachctl 1.12.0 44 pachd 1.12.0 45 ``` 46 Ideally, have your pachctl and pachd versions match. At a minimum, you should always use the same major & minor versions of pachctl and pachd. 47 - You can run this example as is. You can also decide to build, tag, and push your own image to your Docker Hub. 48 If so, make sure to update `CONTAINER_TAG` in the `Makefile` accordingly 49 as well as your pipelines' specifications, 50 then run `make docker-image`. 51 52 ## 2. Pipelines setup 53 ***Goal*** 54 In this example, we will have three successive processing stages (`scraper`, `map`, `reduce`) defined by three pipelines: 55 56  57 58 1. **Pipeline input repositories**: The `urls` in which we will commit files containing URLS. 59 Each file is named for the site we want to scrape with the content being the URLs of the pages considered. 60 61 1. **Pipelines**: 62 - [scraper.json](./pipelines/scraper.json) will first retrieve the .html content of the pages linked to the given urls. 63 - [map](./pipelines/map.json) will then tokenize the words from each page in parallel and extract each word occurrence. 64 - finally,[reduce](./pipelines/reduce.json) will aggregate the total counts for each word across all pages. 65 66 3 pipelines, including `reduce`, can be run in a distributed fashion to maximize performance. 67 68 1. **Pipeline output repository**: The output repo `reduce` will contain a list of text files named after the words identified in the pages and their cumulative occurrence. 69 70 ## 3. Example part one 71 ### ***Step 1*** Create and populate Pachyderm's entry repo and pipelines 72 73 In the `examples/word_count` directory, run: 74 ```shell 75 $ make wordcount 76 ``` 77 or create the entry repo `urls`, add the Wikipedia file: 78 ```shell 79 $ pachctl create repo urls 80 $ cd data && pachctl put file urls@master -f Wikipedia 81 ``` 82 The input data [`Wikipedia`](./data/Wikipedia) contains 2 URLs refering to 2 Wikipedia pages. 83 84 ... then create your 3 pipelines: 85 ```shell 86 $ pachctl create pipeline -f pipelines/scraper.json 87 $ pachctl create pipeline -f pipelines/map.json 88 $ pachctl create pipeline -f pipelines/reduce.json 89 ``` 90 91 The entry repository of the first pipeline already contains data to process. 92 Therefore, the pipeline creation will trigger a list of 3 jobs. 93 94 You should be able to see your jobs running: 95 ```shell 96 $ pachctl list job 97 ``` 98 ```shell 99 ID PIPELINE STARTED DURATION RESTART PROGRESS DL UL STATE 100 101 ba77c94678ae401db1a2b58528b74d78 reduce 42 seconds ago - 0 0 + 0 / 1488 0B 0B running 102 7e2ed8e0a8dd49a18f1e45d62942c7ee map 43 seconds ago Less than a second 0 2 + 0 / 2 5.218MiB 4.495KiB success 103 bc3031c8076c4f91aa1d8e6ba450b096 map_build 50 seconds ago 2 seconds 0 1 + 0 / 1 1.455KiB 2.557MiB success 104 badd3d81d3ce46358d91bedbb34dd0ed scraper 59 seconds ago 16 seconds 0 1 + 0 / 1 81B 103.4KiB success 105 ``` 106 107 Let's have a look at your repos and check that all entry/output repos have been created: 108 ```shell 109 $ pachctl list repo 110 ``` 111 ```shell 112 NAME CREATED SIZE (MASTER) DESCRIPTION 113 114 reduce 13 minutes ago 4.62KiB Output repo for pipeline reduce. 115 map 13 minutes ago 4.495KiB Output repo for pipeline map. 116 scraper 13 minutes ago 103.4KiB Output repo for pipeline scraper. 117 urls 13 minutes ago 81B 118 ``` 119 120 ### ***Step 2*** Now, let's take a closer look at their content 121 - Scraper content 122 ```shell 123 $ pachctl list file scraper@master 124 ``` 125 ```shell 126 NAME TYPE SIZE 127 /Wikipedia dir 103.4KiB 128 ``` 129 ```shell 130 $ pachctl list file scraper@master:/Wikipedia 131 ``` 132 ```shell 133 NAME TYPE SIZE 134 /Wikipedia/Main_Page.html file 77.53KiB 135 /Wikipedia/Pachyderm.html file 25.88KiB 136 ``` 137 We have successfully retrieved 2 .html pages corresponding to the 2 URLs provided. 138 139 - Map content 140 141 The `map` pipeline counts the number of occurrences of each word it encounters in each of the scraped webpages (see [map.go](./src/map.go)). 142 The filename for each word is the name of the word itself. 143 144 ```shell 145 $ pachctl list file map@master 146 ``` 147 ```shell 148 NAME TYPE SIZE 149 ... 150 /liberated file 2B 151 /librarians file 2B 152 /library file 2B 153 /license file 4B 154 /licenses file 4B 155 ... 156 ``` 157 For every word on those pages, there is a separate file. 158 Each file contains the numeric value for how many times that word appeared for each page. 159 160 ```shell 161 $ pachctl get file map@master:/license 162 5 163 5 164 ``` 165 It looks like the word `license` appeared 5 times on each of the 2 pages considered. 166 167 By default, Pachyderm will spin up the same number of workers as the number of nodes in your cluster. 168 This can be changed. 169 For more info on controlling the number of workers, check the [Distributed Computing](https://docs.pachyderm.com/1.13.x/concepts/pipeline-concepts/distributed_computing/#controlling-the-number-of-workers) page. 170 171 - Reduce content 172 173 The final pipeline, `reduce`, aggregates the total count per word. 174 Take a look at the words counted: 175 176 ```shell 177 $ pachctl list file reduce@master 178 ``` 179 ```shell 180 NAME TYPE SIZE 181 ... 182 /liberated file 2B 183 /librarians file 2B 184 /library file 2B 185 /license file 3B 186 /licenses file 2B 187 ... 188 ``` 189 For the word `license`, let's confirm that the total occurrence is the sum of the 2 previous numbers above 5+5. 190 191 ```shell 192 $ pachctl get file reduce@master:/license 193 10 194 ``` 195 ## 4. Expand on the example 196 197 Now that we have a full end-to-end scraper and word count use case set up, let's add more to it. Go ahead and add one more site to scrape. 198 199 ```shell 200 $ cd data && pachctl put file urls@master -f Github 201 ``` 202 Your scraper should automatically get started pulling the new site (it won't scrape Wikipedia again). 203 That will then automatically trigger the `map` and `reduce` pipelines 204 to process the new data and update the word counts for all the sites combined. 205 206 - Scraper content 207 ```shell 208 $ pachctl list file scraper@master 209 ``` 210 ```shell 211 NAME TYPE SIZE 212 /Github dir 195.1KiB 213 /Wikipedia dir 103.4KiB 214 ``` 215 ```shell 216 $ pachctl list file scraper@master:/Github 217 ``` 218 ```shell 219 NAME TYPE SIZE 220 /Github/pachyderm.html file 195.1KiB 221 ``` 222 The scraper has added one additional .html page following the URL provided in the new Github file. 223 224 - Map content 225 ```shell 226 $ pachctl list file map@master 227 ``` 228 | Then | **NOW** | 229 |------|-----| 230 |NAME - TYPE - SIZE|NAME - TYPE - SIZE| 231 |...|...| 232 |/liberated file 2B|/liberated file 2B| 233 |/librarians file 2B|/librarians file 2B| 234 | |**/libraries file 2B**| 235 |/library file 2B|/library file 2B| 236 |/license file 4B|/license file **7B**| 237 |/licenses file 4B|/licenses file 4B| 238 |...|...| 239 240 241 We have highlighted the newcomer (the new word `libraries`) and the change in the size of the `license` file. 242 243 ```shell 244 $ pachctl get file map@master:/license 245 23 246 5 247 5 248 ``` 249 It looks like the word `license` appeared 23 times on our new github page. 250 251 - Reduce content 252 253 ```shell 254 $ pachctl list file reduce@master 255 ``` 256 ```shell 257 NAME TYPE SIZE 258 ... 259 /liberated file 2B 260 /librarians file 2B 261 /libraries file 2B 262 /library file 2B 263 /license file 3B 264 /licenses file 2B 265 ... 266 ``` 267 Let's see if the total sum of all occurrences in now 23+5+5 = 33. 268 269 ```shell 270 $ pachctl get file reduce@master:/license 271 33 272 ``` 273 274 275 > By default, pipelines spin up one worker for each node in your cluster, 276 but you can choose to set a [different number of workers](https://docs.pachyderm.com/1.13.x/concepts/advanced-concepts/distributed_computing/) in your pipeline specification. 277 Further, 278 the pipelines are already configured to spread computation across the various workers with `"glob": "/*/*"`. Check out our [Glob Pattern](https://docs.pachyderm.com/1.13.x/concepts/pipeline-concepts/datum/glob-pattern/) to learn more. 279 280