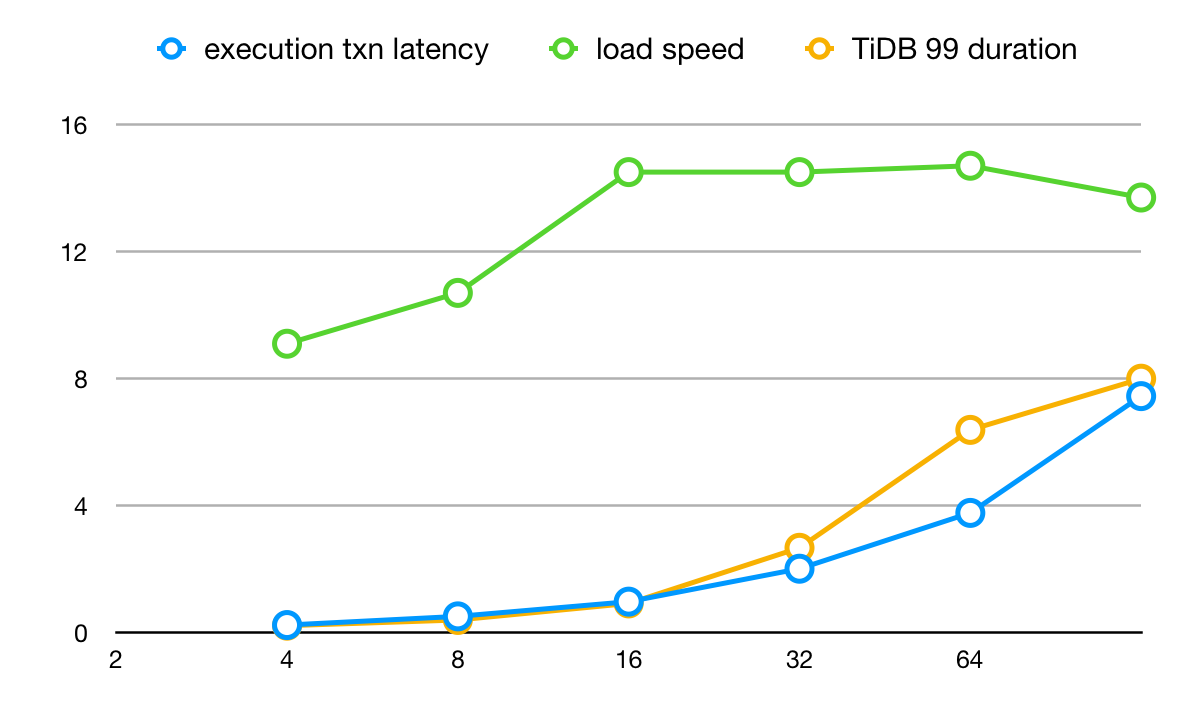

github.com/pingcap/tiflow@v0.0.0-20240520035814-5bf52d54e205/dm/docs/RFCS/20190906_flow_control.md (about) 1 # Proposal: Add flow control for data replication to downstream 2 3 - Author(s): [yangfei](https://github.com/amyangfei) 4 - Last updated: 2019-09-18 5 6 ## Abstract 7 8 Too fast import data or replication in DM may lead to downstream TiDB too busy to serve as normal. This proposal aims to find a way to detect the congestion of import or replication data flow and introduces a framework of dynamic concurrency control, which will help to control the import and replication data flow. 9 10 Table of contents: 11 12 - [Background](#Background) 13 - [Implementation](#Implementation) 14 - [Congestion definition](#Congestion-definition) 15 - [How to measure the key indicators](#How-to-measure-the-key-indicators) 16 - [How to detect and measure congestion](#How-to-detect-and-measure-congestion) 17 - [Congestion control framework](#Congestion-control-framework) 18 - [The congestion detect component](#The-congestion-detect-component) 19 - [The data flow control component](#The-data-flow-control-component) 20 - [The concurrency control component](#The-concurrency-control-component) 21 22 ## Background 23 24 Firstly we will discuss the key factor that affects the import or replication speed between DM data processing unit and downstream TiDB. 25 26 - In the full data import procedure, data import speed is based on the SQL batch size and SQL executor concurrency. The SQL batch size is mainly determined by the single statement size of dump data, which can be controlled with the `mydumper` configuration. The SQL executor concurrency equals to the worker count of load unit, which is configurated by loader `pool-size` in task config. 27 - In the incremental data replication procedure, replication speed relates to SQL job batch size and SQL executor concurrency. The binlog replication unit gets data from relay log consumer (assuming the relay log consume speed is fast enough), and distributes SQL jobs to multiple executors, the executor count is configurated by `worker-count` in task config. When the cached SQL job count exceeds SQL batch size (SQL batch size is configurated by `batch` in task config) or every 10ms, all SQLs in these jobs will be executed to downstream in a transaction. 28 29 In the full data import scenario, downstream congestion may happen if we have too many DM-workers or much high executor concurrency. 30 31 While in incremental data replication scenario the congestion does not happen often, but there still exists some use scenario that may lead to congestion, including 32 33 - User pauses replication task for a while and a lot of relay log is accumulated, and the replication node has high concurrency. 34 - In shard scenario and there are too many upstream shard instances replicating to downstream at the same time. 35 - In none shard scenario, but there are many tasks replicating to downstream from multiple instances at the same time. 36 37 When we encounter these abnormal scenario, we often pause part of the tasks or decrease the SQL executor concurrency. The key point of solving the congestion problem is to reduce the data flow speed. Currently DM has no data flow control, we need to build a data flow control framework which will make data import or replication more smooth, more automotive and as fast as possible. The data flow control framework should provide the following features: 38 39 - Downstream congestion definition and auto detection 40 - Import or replication speed auto control 41 42 ## Implementation 43 44 ### Congestion definition 45 46 There exists some key concepts in a data import/replication: 47 48 - Transaction failure: Each database transaction execution failure is treated as one transaction failure, the failure includes every kind of database execution failure that no data is written to downstream, partial data written error does not include. 49 - Bandwidth: Bandwidth describes the maximum data import/replication rate from a DM-worker to downstream, this can be measured by TPS from DM-worker (or TPS in downstream, but should only include the data traffic from this DM-worker). 50 - Latency: Latency is the amount of time it takes for data to replicate from DM-worker to downstream. 51 52 The congestion usually means the quality of service decreases because of the service node or data link is carrying more data than it can handle. In the data import/replication scenario, the consequence of congestion can be partial downstream database execution timeout, downstream qps decrease or SQL execution latency increase. We can use these three indicators to determine whether congestion happens and measure the degree of congestion. 53 54 #### How to measure the key indicators 55 56 Before we can measure the congestion, we should find a way to estimate the transaction failure, the bandwidth and the latency. 57 58 - Transaction failure: can be collected in DM-worker itself, and we often use it as transaction failure rate, which means the count of sqls in all failed transaction divided by the count sqls in all transactions that need to be processed. 59 - Latency: equals to the transaction executed latency, can be collected in DM-worker itself. 60 - Bandwidth: this is a little complicated, but we can estimate it by adjusting the concurrency and find the maximum downstream TPS for this DM-worker, which can be used as the bandwidth of the data import/replication link. 61 62 Take the [benchmark result in DM 1.0-GA](https://pingcap.com/docs/v3.0/benchmark/dm-v1.0-ga/#benchmark-result-with-different-pool-size-in-load-unit) as an example, we extract the result in load unit test, use load unit pool size as X-axis, latency and load speed as Y-axis. 63 64  65 66 From the benchmark result we can draw the following conclusions: 67 68 - The latency grows exponentially as the concurrency increases. 69 - The TPS grows linely as the concurrency increases in a specific range, in this case the liner range is between 0-16. 70 - After the TPS reaches the maximum value, it remains the same even the concurrency increases. The maximum value of TPS can be used as the bandwidth. 71 72 #### How to detect and measure congestion 73 74 Congestion can be exposed in different ways and have different effect on key indicators. We have the following predictive indicators change that may reflect congestion. 75 76 - If TPS doesn't increase alongside with the concurrency increase (assuming the dumped data or relay log stream is fast enough), we can believe the congestion is occurring. 77 - If concurrency doesn't change, but TPS decreases and latency increases significantly (maybe TPS halves and latency doubles), we can believe the congestion is occurring. 78 - If concurrency doesn't change, TPS and latency don't change too much, but transaction failure rate increases significantly (however in most cases if transaction failure rate increases, we often find TPS decreases and latency increases), we can believe the congestion is occurring. 79 80 The congestion degree can be measured by the three indicators, but the workflow in the real world is often complicated, we won't introduce an estimate model in this proposal, as we only need to known whether the congestion is occurring in our congestion control model. 81 82 ### Congestion control framework 83 84 The congestion control framework is separated into three parts, the congestion detect component, the data flow control component and the concurrency control component. 85 86 #### The congestion detect component 87 88 The congestion detect component will collect the key indicators in DM-worker as following: 89 90 - transaction failure rate: we will add a sliding window to record the count of downstream resource busy error (such as `tmysql.ErrPDServerTimeout`, `tmysql.ErrTiKVServerTimeout`, `tmysql.ErrTiKVServerBusy` etc) and the count of all dispatched transactions. The transaction failure rate will be calculated with these two counters. 91 - TPS detection: we will add a sliding window to record the count of successfully executed transactions, and calculate TPS with this value. 92 - latency: transaction execution latency is already recorded in load and sync unit, we can use it directly. 93 94 #### The data flow control component 95 96 This component is responsible to give a reasonable concurrency value based on the data flow stage, current and historical data flow indicators. Firstly we define some stage, which is used to represent the concurrency change stage. 97 98 ```go 99 // CongestionStage represents the stage in a congestion control algorithm 100 type CongestionStage int 101 102 // Congestion stage list 103 const ( 104 // When the task starts with a low concurrency, the concurrency can be increased fast in this stage. 105 StageSlowStart CongestionStage = iota + 1 106 // When the concurrency exceeds a threshold, we recommend to increase slowly, which is often called the avoidance stage. 107 StageAvoidance 108 // The steady stage means the data flow TPS reaches the bandwidth, the latency keeps steady and no transaction failure. 109 StageSteady 110 // When congestion happens and the concurrency is turned down, we enter the recovery stage. 111 StageRecovery 112 ) 113 ``` 114 115 We can use different congestion algorithm, just to implement the `FlowControl` interface. 116 117 ```go 118 type CongestionIndicator struct { 119 DataLossRate float64 120 TPS int 121 Latency float64 122 } 123 124 type FlowControl interface { 125 // NextConcurrency gives the recommended concurrency value 126 NextConcurrency(stage CongestionStage, history []*CongestionIndicator, current *CongestionIndicator) int 127 } 128 ``` 129 130 #### The concurrency control component 131 132 This component is used to control the concurrency of DM work unit. Currently the code in load unit and sync unit don't support changing `pool-size` or `worker-count` dynamically very well. We have two ways to solve the problem. 133 134 1. re-design the concurrent work model and support dynamically changing real worker count. 135 2. create enough workers when the load/sync unit initializes, and control the concurrency by controlling the working worker number. 136 137 We prefer to use the second method, which is more straight forward and less invasive to concurrent framework. The original `pool-size` and `worker-count` configuration will be used as the upper bound of concurrency.