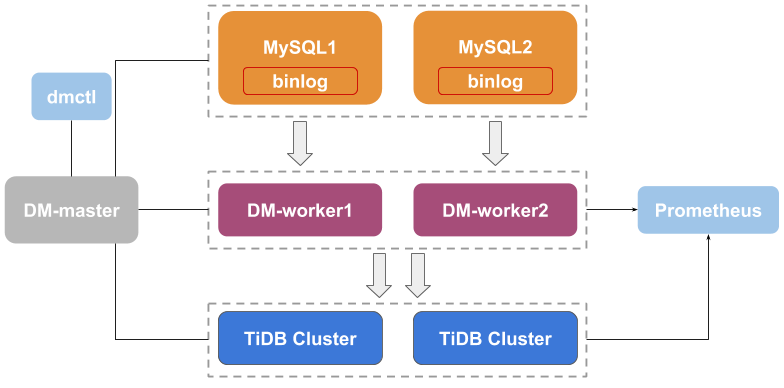

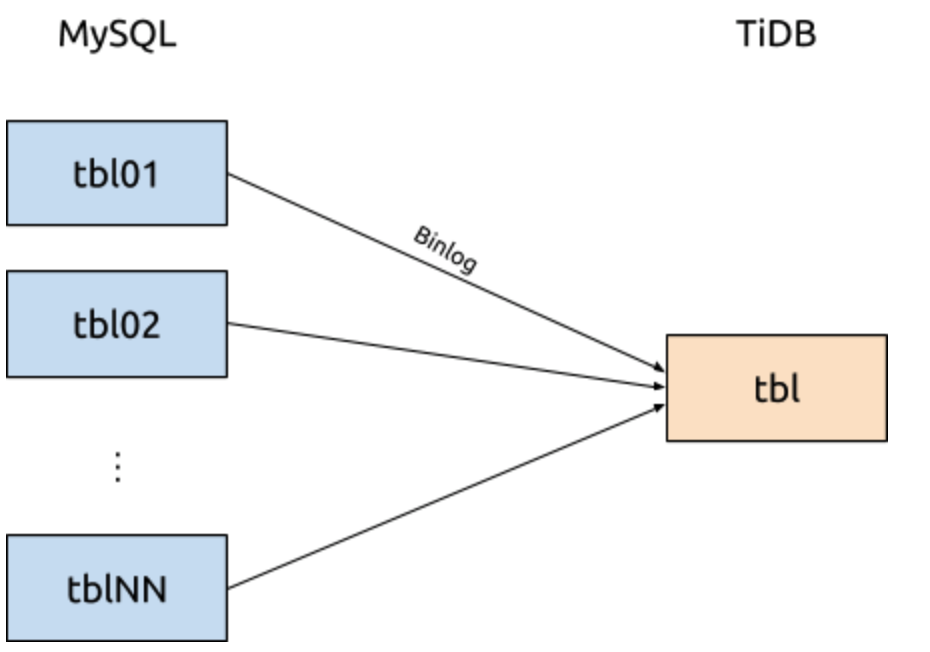

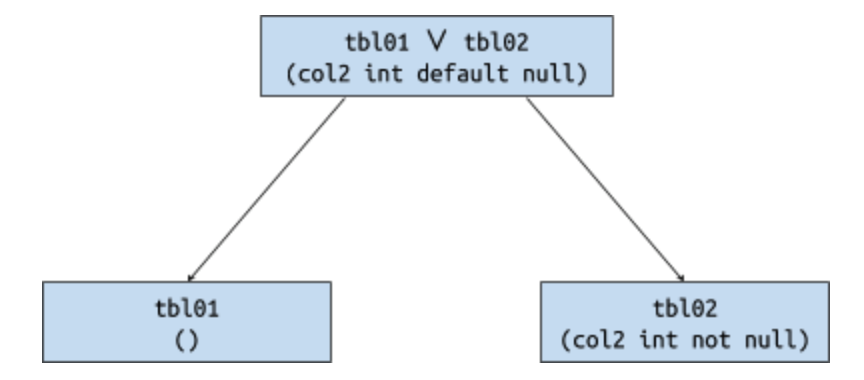

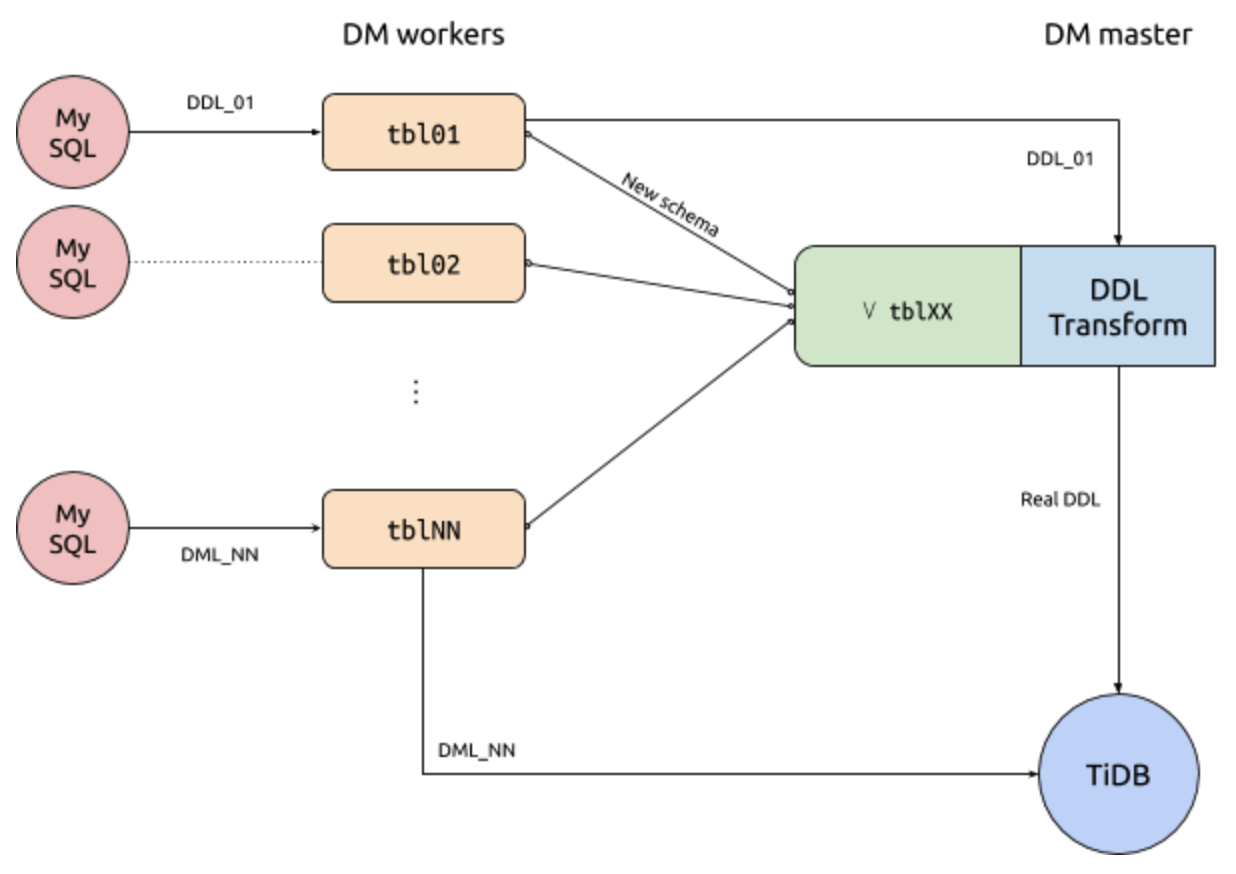

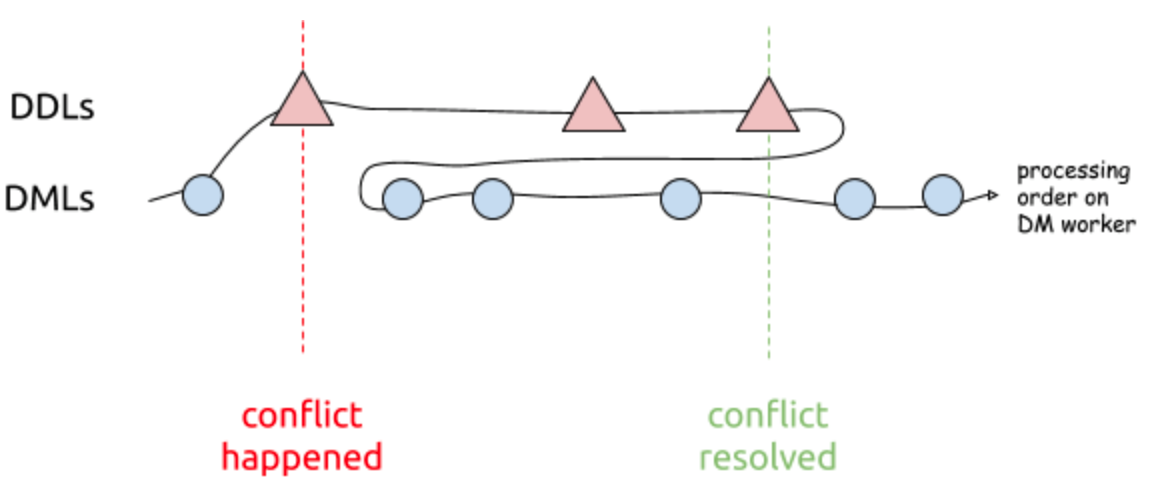

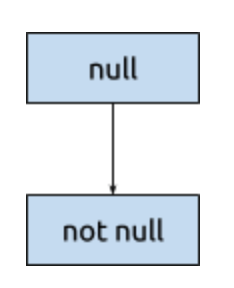

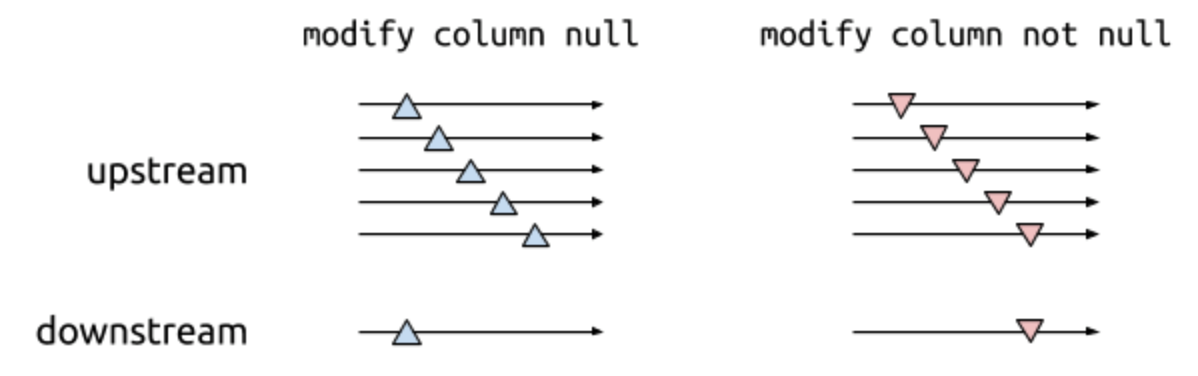

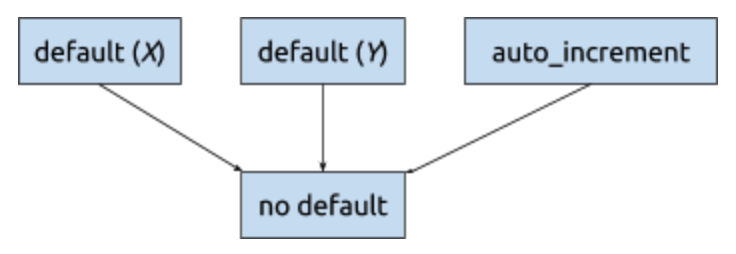

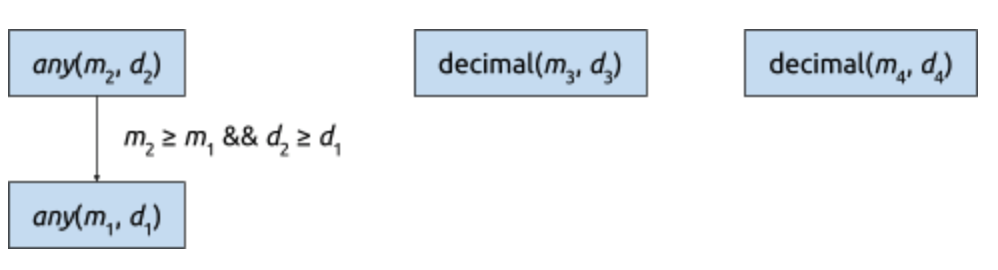

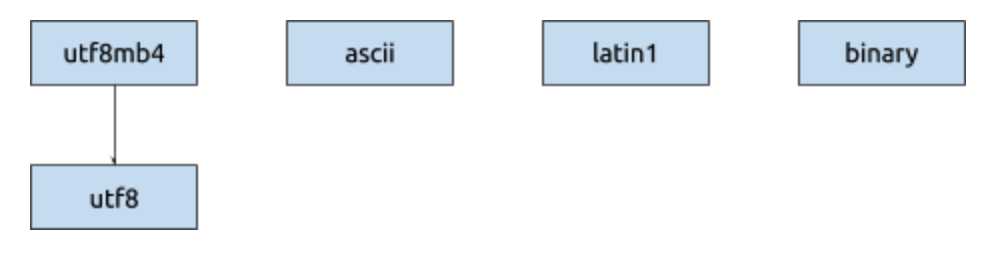

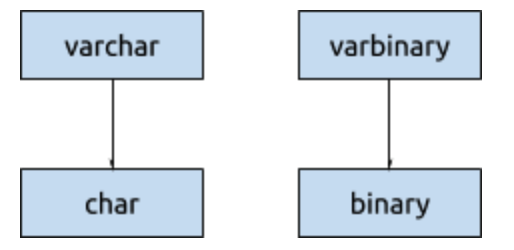

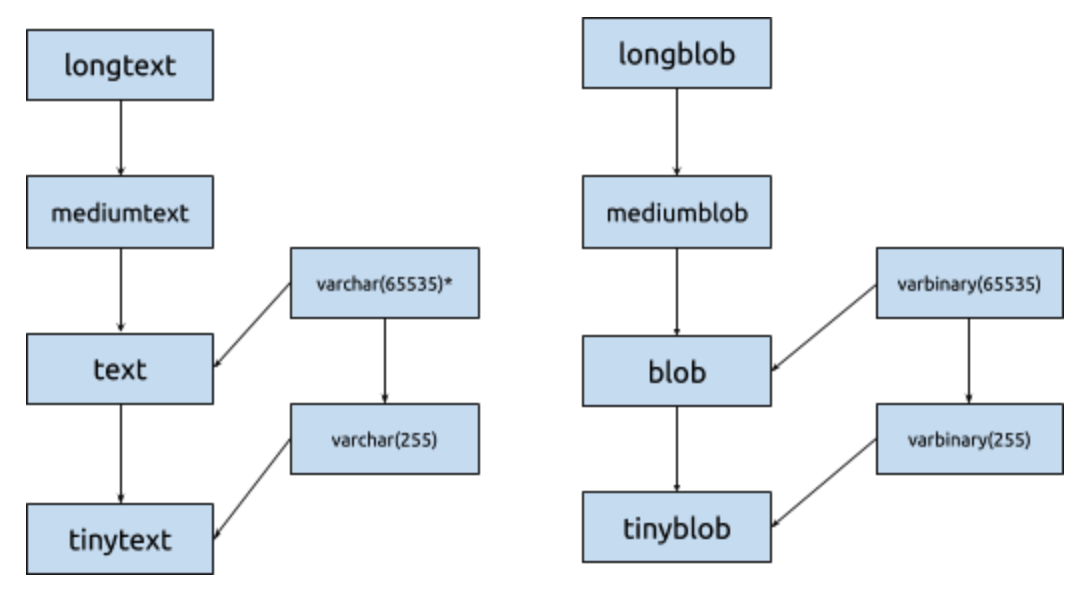

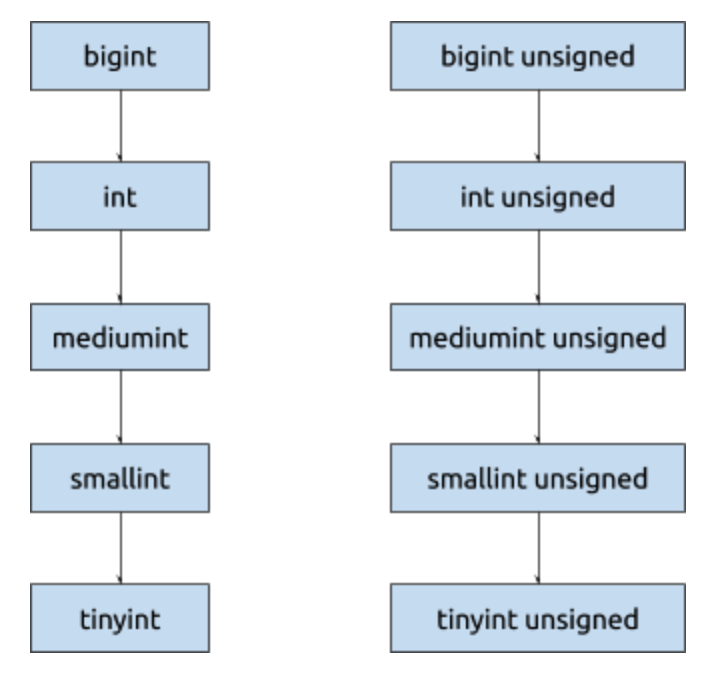

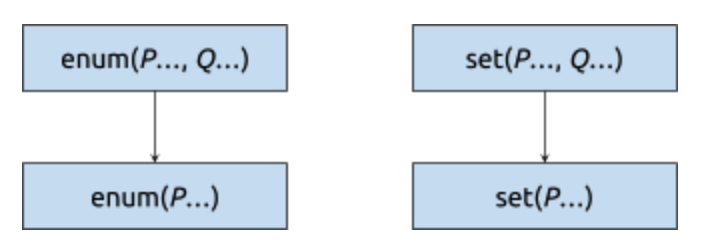

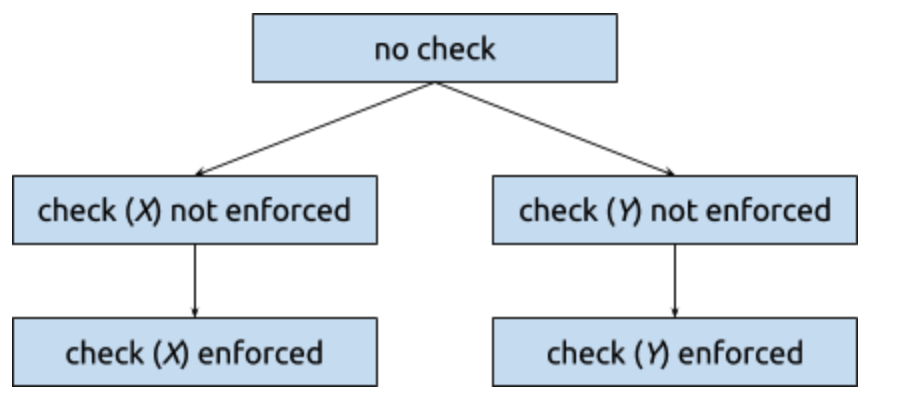

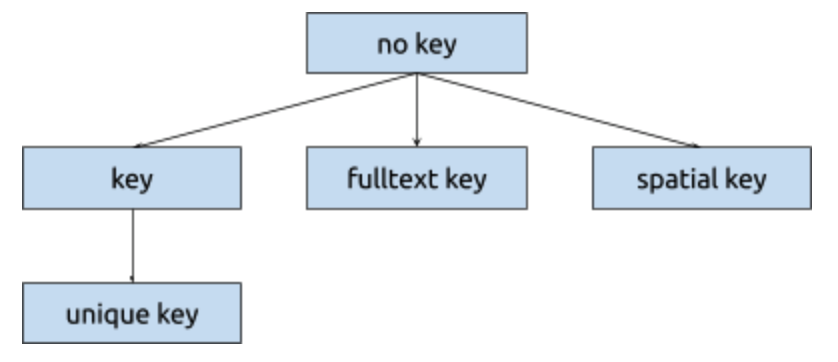

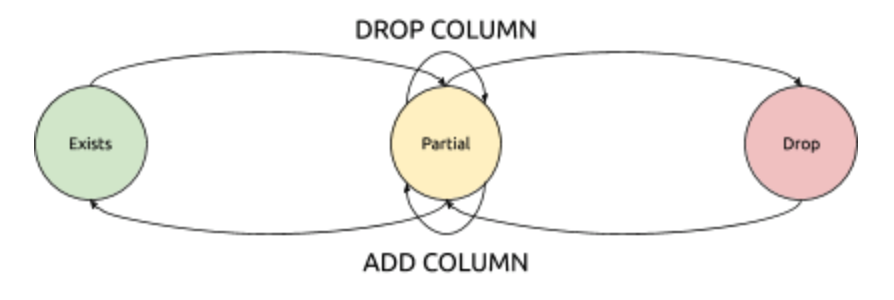

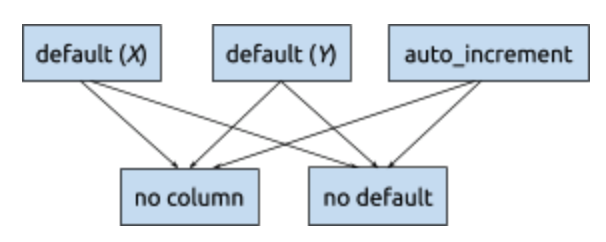

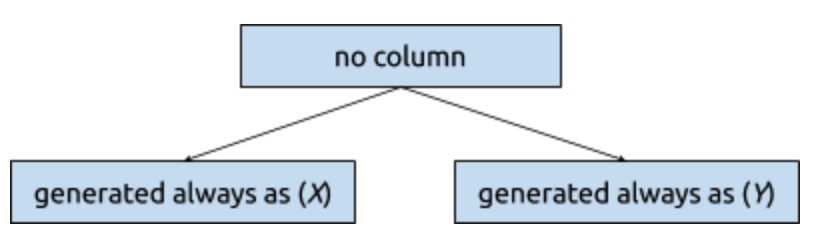

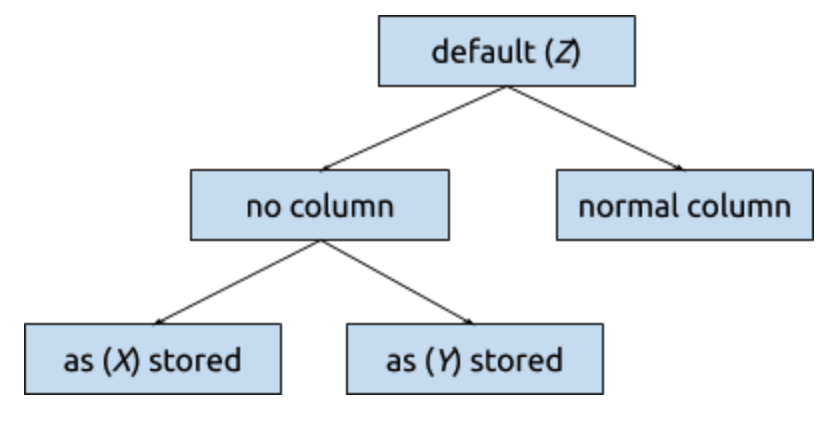

github.com/pingcap/tiflow@v0.0.0-20240520035814-5bf52d54e205/dm/docs/RFCS/20191209_optimistic_ddl.md (about) 1 # Proposal: Manage DDLs on Sharded Tables by Maximizing Schema Compatibility 2 3 - Author(s): [kennytm](https://github.com/kennytm) 4 - Last updated: 2019-12-09 5 6 ## Abstract 7 8 After installing Schema Tracker on DM workers, the DM master can compare schemas from all shards and produce a joined schema compatible with all DMLs produced by all shards. By diffing the joined schema before and after applying an upstream DDL, the DM master can produce the real DDL to be executed downstream. 9 10 ## Background 11 12 DM is a tool for synchronizing upstream MySQL databases into a downstream TiDB cluster via [row-based replication](https://dev.mysql.com/doc/refman/8.0/en/replication-formats.html). Whenever an SQL statement is executed on MySQL, some binlog events will be sent to the DM worker, which then translates them back into SQL and executed on TiDB. 13 14  15 16 One major feature of DM is [merging manually sharded tables](https://pingcap.com/docs/dev/reference/tools/data-migration/features/shard-merge/) into a single table. 17 18  19 20 The design difficulty on merging sharded tables is handling schema change (DDLs). Currently, DM resolves this with pessimistic replication: if a DDL statement is executed on tbl01, a [barrier](https://en.wikipedia.org/wiki/Barrier_%28computer_science%29) (known as sharding DDL lock) would be set up to pause all binlogs from tbl01 (including this DDL). The same is applied on all other tables, until the same DDL statement is executed on all of tbl01 to tblNN, where the barrier would be unlocked. 21 22 This approach ignores the actual action done by the DDL statement, e.g. even if executing ddl_1; ddl_2; is equivalent to ddl_3; ddl_4;, DM cannot recognize this and will not automatically unlock the barrier, and [require human intervention](https://pingcap.com/docs/dev/reference/tools/data-migration/features/manually-handling-sharding-ddl-locks/). This makes the whole feature feel risky and error-prone. 23 24 Even strictly following DDL execution, if the user takes a long time to execute all DDLs, it would create a very long backlog and thus cripples DM’s responsiveness. 25 26 ## Principles 27 28 To prevent the drawbacks mentioned above to make DM more user-friendly, we want to create a barrier-free algorithm (w.r.t. DMLs) for reconciling DDLs on sharded tables. 29 30 Note: This work assumes each DM worker already tracks the upstream schema independently from the downstream, so that column order is not a concern. 31 32 ### DDL awareness 33 34 The reason DM chooses pessimistic replication (barrier) is because it is a general solution for all DDL kinds. DM did not need to special case. A barrier-free solution must require DM to understand the meaning of each DDL, however. As an example, blindly propagating a “drop column” DDL from tbl01 would cause error when replicating data from tbl02. 35 36 ```sql 37 alter table tbl01 drop column col2; 38 insert into tbl01 (col1, col3) values (11, 13); 39 insert into tbl02 (col1, col2, col3) values (21, 22, 23); 40 ``` 41 42 In our case, we should at least recognize these DDL statements 43 44 - `alter table add column [first / after]` 45 46 - `alter table drop column` 47 48 - `create index` 49 50 - `drop index` 51 52 - `alter table modify column [first / after]` 53 54 and know that some changes are inverse of another (e.g. adding a column and then dropping the same column is equivalent to no-op). 55 56 ### CRDTs 57 58 The opposite of using a barrier is [optimistic replication](https://en.wikipedia.org/wiki/Optimistic_replication), where replicas (schemas of different shards) are allowed to diverge. The cost to this is having to make up a conflict resolution algorithm. 59 60 Here we borrow the idea of [conflict-free replicated data type (CRDTs)](https://en.wikipedia.org/wiki/Conflict-free_replicated_data_type). This data structure is conflict-free by defining a commutative, associative and idempotent “merge” function between all states. For shard merging, we use each shard’s schema as the state, and we define the merge function below. 61 62 Given a table schema S, We define its set of DMLs C(S) as all DML statements which can be successfully executed in that schema. For instance, with the table schema 63 64 ```sql 65 create table S1 (a int not null default 1); 66 ``` 67 68 the following DML statements are members of C(S1) 69 70 ```sql 71 insert into S1 (a) values (5); 72 insert into S1 () values (); 73 ``` 74 75 while the following is not a member of C(S1) 76 77 ```sql 78 insert into S1 (a) values (null); 79 ``` 80 81 Subset relationships of set of DMLs form a partial order. We define S1 ≤ S2 iff C(S1) ⊆ C(S2), i.e. S2 can accept more DML statements (producing the same effect) than S1. We call S2 more compatible than S1. For instance, the table schema is strictly more compatible than S1, which accepts the null value. 82 83 ```sql 84 create table S2 (a int null default 1); 85 ``` 86 87 To merge schemas S1, …, Sn, we take the [join](https://en.wikipedia.org/wiki/Join_and_meet) (supremum) in the semilattice formed by compatibility among all schemas. The result is a schema which can accept all DMLs from all shards, no matter how they become. 88 89 Why is this concept important? Suppose we drop a column col2 from table tbl01, leaving two different schemas in the same sharding group. 90 91 ```sql 92 create table tbl01 (col1 int not null, col3 int not null); 93 create table tbl02 (col1 int not null, col2 int not null, col3 int not null); 94 ``` 95 96 The binlog events are not aware of the downstream schema. The merged sharded table tbl must now accept these two DMLs 97 98 ```sql 99 insert into tbl01 (col1, col3) values (11, 13); 100 insert into tbl02 (col1, col2, col3) values (21, 22, 23); 101 ``` 102 103 Therefore, the downstream schema needs to be changed to tbl01 ∨ tbl02 like 104 105 ```sql 106 create table tbl (col1 int not null, col2 int default null, col3 int not null); 107 ``` 108 109 This suggests that this particular “drop column” DDL propagated downstream should be changed to 110 111 ```sql 112 -- transform from: alter table tbl drop column col2; 113 alter table tbl modify column col2 int default null; 114 ``` 115 116 Note that the intended result of running the DML is also important in determining compatibility. Consider the following two schemas: 117 118 ```sql 119 create table S5 (a int not null default 5); 120 create table S6 (a int not null default 6); 121 ``` 122 123 Although both accept the DML 124 125 ```sql 126 insert into S () values (); 127 ``` 128 129 For S5 this will insert a row with a = 5, while for S6 this will insert a row with a = 6. As the results are different, S5 and S6 are incompatible with each other. 130 131 In the rest of this document, we would express compatibility relationships using DAGs like this, where properties at the top are more compatible. 132 133  134 135 ### From schema diffs to DDL 136 137 The idea of CRDTs give us what the merged schema should look like, but it does not tell us how to construct SQL statements to reach that schema. In fact, there may be multiple pathways to the same schema. For instance, to change from T1 to T2, 138 139 ```sql 140 create table T1 (a int, b int, c int); 141 create table T2 (b int, c int, d int); 142 ``` 143 144 one could either rename column a to d and reorder it, or drop a and then add d, or successively rename a → b → c → d, etc. 145 146 ```sql 147 alter table T change column a d int after c; 148 alter table T drop column a, add column d int; 149 alter table T rename column a to b, rename column b to c, rename column c to d; 150 ``` 151 152 While all of these produce the same schema, the actual data in each would be wildly different. Therefore, even though there are tools like [migra](https://djrobstep.com/docs/migra) which can automatically compute the necessary DDLs from the start and end schemas alone, we must rely on which upstream DDL statements executed to improve the accuracy. 153 154 Additionally, some schemas can indeed have conflict because no DDLs exist to move between different schema. As an example 155 156 ```sql 157 create table S3 (a int, b float not null); 158 create table S4 (a int, b datetime not null); 159 ``` 160 161 In the joined schema S3 ∨ S4, the column b would have type varchar(24). But TiDB does not support changing the type of a column (nor does this join really make sense), and hence DM shall emit an error and forces S4 to pause synchronization. 162 163 ## Design 164 165 ### DM master 166 167 When a DM worker of a sharded table receives a DDL binlog event, it must upload the DDL and the schema after applying the DDL to DM master, instead of executing it on TiDB directly. The DM master must ensure the DDLs are sequentially and atomically managed. DML statements are still executed directly by DM worker without consulting DM master. 168 169  170 171 #### Error handling 172 173 DM master should ensure only one DDL from the sharding group is processed at a time. If the DDL from tbl01 leads to errors, the master should reply to tbl01 that the DDL is unacceptable. Example: 174 175 ```sql 176 -- Original schema: create table tbl (a int); 177 alter table tbl02 add column b float; 178 -- Current schema: create table tbl (a int, b float default null); 179 alter table tbl01 add column b datetime; 180 -- Error! Column b cannot be both float and datetime 181 insert into tbl02 (a, b) values (1, -2.5); 182 insert into tbl01 (a, b) values (3, now()); 183 -- ^ one of these two rows must be lost 184 ``` 185 186 In this case, the row from tbl01 can never be synchronized. 187 188 When DM worker received an error notification from DM master, it needs to pause synchronization of DMLs. User can perform DDLs upstream on tbl01 or any other shards (all DDLs should continued to be sent to DM master), until DM master finds the conflict is resolved, who will actively tell DM worker that the DMLs may be resumed. 189 190 To fix this, we could either 191 192 - `drop column b on tbl02, add column b with type datetime` 193 194 - `drop column b on tbl01, add column b with type float` 195 196 In the first case, the conflict of tbl01 is resolved non-intrusively (not touching tbl01’s data and schema). The paused DML events become valid, and can continue to be propagated. 197 198 In the second case, the conflict of tbl01 is resolved intrusively (touching tbl01’s data or schema). The DMLs during the conflict are invalid, and should be disposed of. 199 200 Intrusively resolving the conflict does not always lead to invalid DMLs though. 201 202 ```sql 203 -- Original schema: create table tbl (a int); 204 alter table tbl02 add column c text; 205 -- Current schema: create table tbl (a int, c text default null); 206 alter table tbl01 add column c datetime; 207 -- Error! Column b cannot be both text and timestamp 208 insert into tbl02 (a, c) values (1, 'content'); 209 insert into tbl01 (a, c) values (3, now()); 210 ``` 211 212 We could resolve the conflict by alter table tbl01 modify column c text;. Because MySQL is weakly typed, a datetime value can be inserted directly into a text column, meaning the DML is still synchronizable. 213 214 It is difficult to programmatically decide when a DML is valid or not. Therefore, after the conflict is resolved, we choose to let DM syncer continue processing binlogs from where it was first paused. 215 216  217 218 If certain DMLs are truly invalid, it should pause the DM worker again, and this offending binlog event could be got rid of by [dmctl’s sql-skip --binlog-pos feature](https://pingcap.com/docs/dev/reference/tools/data-migration/skip-replace-sqls/#sql-skip). The requirement of human intervention here is unfortunate but unavoidable. 219 220 ### Modifying column types 221 222 When receiving one of these DDL statements: 223 224 ```sql 225 alter table tbl01 alter column col2 set default expr; 226 alter table tbl01 alter column col2 drop default; 227 alter table tbl01 change column col2 col2 col_spec; 228 alter table tbl01 modify column col2 col_spec; 229 ``` 230 231 Compute the most compatible column type new_col_spec among all shards, then modify the column using new_col_spec instead. 232 233 #### Nullability 234 235  236 237 A null column is strictly more compatible than a non null column. 238 239 Effectively, as soon as the column of one shard becomes null, this should be propagated downstream immediately. On the other hand, to convert a column to non null, we need to wait until all shards are modified. 240 241  242 243 #### Defaultness 244 245  246 247 Having a default value is similar. However, columns with different default value are incompatible and should raise error. 248 249 #### Precision 250 251  252 253 TiDB only allows increasing precision. Additionally, the precision of the decimal type cannot be changed on TiDB, so these always result in error too. 254 255 #### Character sets 256 257  258 259 Only utf8 < utf8mb4 is supported. All other character sets cannot be joined. 260 261 #### Character types 262 263  264 265 Note that char(n) charset binary is equivalent to binary(n). 266 267  268 269 varchar(65535) only exists for latin1 and ascii character sets. For utf8 and utf8mb4, the types text and varchar(255) cannot be joined. 270 271 #### Integer types 272 273  274 275 TiDB does not support changing signedness. 276 277 #### Sets and enums 278 279  280 281 Changing enums and sets are compatible only if members are appended to the end. 282 283 #### Other types 284 285 The remaining (year, date, time, datetime, timestamp, float, double, decimal, bit, json) are independent and cannot be converted to or from other types. 286 287 ### Constraints and indices 288 289 A table with less constraints are more compatible. Therefore, a DDL adding constraints should be executed last, while a DDL removing constraints should be executed first. 290 291 #### Checks 292 293  294 295 When encountering DDLs modifying checks, either propagate the DDL or drop it depending whether the check (with the same name) still exists in the joined schema. 296 297 ```sql 298 alter table tbl01 add check (expr); 299 alter table tbl01 drop check name; 300 alter table tbl01 alter check name [not] enforced; 301 ``` 302 303 (Note that, in MySQL, “check” clauses in a “modify column” DDL is ignored.) 304 305 #### Indices 306 307  308 309 When encountering DDLs adding or removing indices, either propagate the DDL or drop it depending whether the index (with the same name) still exists in the joined schema. DM master should reply to DM worker as soon as possible, without waiting for TiDB to complete adding the indices. 310 311 ```sql 312 alter table tbl01 add [spatial | fulltext | unique] key name (cols); 313 alter table tbl01 alter check name [not] enforced; 314 alter table tbl01 drop key name; 315 create [spatial | fulltext | unique] index name on tbl01 (cols); 316 drop index name on tbl01; 317 alter table tbl01 modify column col02 col_spec unique; 318 alter table tbl01 rename key old_name to new_name; 319 ``` 320 321 #### Foreign keys 322 323 (TODO; Not supported by TiDB) 324 325 ### Adding and dropping columns 326 327 Columns can be in one of three states: 328 329 - Exists on all shards 330 331 - Partially exists 332 333 - Dropped from all shards 334 335  336 337 Since DM master has a copy of all sharded schemas, it can simply count how many times this column exists on each shard to determine the column’s state in the joined schema. 338 339 #### Adding column on first shard (dropped → partial) 340 341  342 343 When adding a column which did not exist before, we should change the specification to include a default value if not given. 344 345 | Type | Default | Type | Default | 346 | ---- | ------- | ---- | ------- | 347 | Any nullable type | null | year | '0000' | 348 | int | 0 | date | '0000-00-00' | 349 | float/double | 0.0 | time | '00:00:00' | 350 | decimal | 0 | datetime | '0000-00-00 00:00:00' | 351 | bit | 0 | timestamp | '0000-00-00 00:00:00' | 352 | char/varchar/text | '' | enum/set | 0 | 353 | binary/varbinary/blob | '' | json | 'null' | 354 355 For instance, these 3 DDL statements 356 357 ```sql 358 alter table tbl01 add column col4 int; 359 alter table tbl01 add column col5 int not null unique; 360 alter table tbl01 add column col6 int not null default 3; 361 ``` 362 363 should be converted to these in downstream 364 365 ```sql 366 alter table tbl add column col4 int default null; 367 alter table tbl add column col5 int not null default 0; 368 alter table tbl add column col6 int not null default 3; 369 ``` 370 371 Note that if the new column contains any “unique” or “check” constraints, they would be ignored as described in the [Constraints and indices](#constraints-and-indices) section. 372 373 #### Adding column on remaining shards (partial → partial) 374 375 Executing “add column” on the remaining shards should be translated to “modify column” on downstream, e.g. if we further execute 376 377 ```sql 378 alter table tbl02 add column col4 bigint; 379 ``` 380 381 DM master should turn this into 382 383 ```sql 384 alter table tbl modify column col4 bigint default null; 385 ``` 386 387 As usual, if the type is not changed nothing should executed. 388 389 #### Adding column on last shard (partial → exists) 390 391 When the last shard has completed adding the column, 392 393 ```sql 394 alter table tblNN add column col5 int not null unique; 395 ``` 396 397 DM master should drop the default value (if needed), and also populate any associated checks and indices. 398 399 ```sql 400 alter table tbl alter column col5 drop default; 401 alter table tbl add unique key col5 (col5); 402 ``` 403 404 #### Dropping column on first shard (exists → partial) 405 406 Dropping a column reverses the action done by adding column. 407 408 ```sql 409 alter table tbl01 drop column col7; 410 ``` 411 412 DM master would keep the column but add a default. In addition, all indices and checks associated with this column should be immediately dropped, before receiving any DML events from tbl01. 413 414 ```sql 415 alter table tbl alter column col7 drop default; 416 alter table tbl drop key col7; 417 alter table tbl drop check tbl_chk_7; 418 ``` 419 420 #### Dropping column on remaining shards (partial → partial) 421 422 At this stage dropping the same column just become no-op. 423 424 #### Dropping column on last shard (partial → dropped) 425 426 When the last shard has dropped the column, the DDL can be finally be propagated to downstream. 427 428 ```sql 429 alter table tbl drop column col7; 430 ``` 431 432 ### Renaming columns 433 434 **Important**: TiDB does not support renaming columns yet. 435 436 #### Standard procedures 437 438 ```sql 439 alter table tbl01 rename column a to b; 440 ``` 441 442 Schematically, renaming a column is equivalent to adding a new column and dropping the old one. Therefore, the treatment would be similar to adding and removing columns. The differences are that 443 444 1. The new column should store all data from the old column. 445 446 2. Values inserted/updated in the old column from tbl02 should eventually appear in the new column in downstream tbl. 447 448 Therefore, this DDL at the beginning (old exists → partial; new dropped → partial), we should rename the column, then recreate the old column to capture values from tbl02: 449 450 ```sql 451 alter table tbl rename column a to b; 452 -- ^ rename the column 453 alter table tbl alter column b set default 0; 454 -- ^ make new column optional 455 alter table tbl add column a int default null; 456 -- ^ make old column available (and must be converted to nullable) 457 ``` 458 459 Renaming the columns in the remaining shards (old partial → partial; new partial → partial) should be no-op. 460 461 After the last shard renamed (old partial → dropped; new partial → exists), it should copy all those values from the old columns back to the new column before dropping. 462 463 ```sql 464 alter table tbl alter column b drop default; 465 -- ^ finalize new column 466 update tbl set b = a where a is not null; 467 -- ^ transfer stale old column content to new column 468 alter table tbl drop column a; 469 -- ^ finalize old column 470 ``` 471 472 Similar to the case of adding and dropping columns, checks and indices involving the renamed column would be temporarily dropped. 473 474 (TODO: Investigate non-standard column rename procedure) 475 476 ### Generated columns 477 478 Unlike normal columns, generated columns are absent from DML events, and thus have no “partial” state. Nevertheless, a generated column may contain expressions which is invalid for some values. 479 480 ```sql 481 alter table tbl01 add column b int as (100 / a); 482 insert into tbl02 (a) values (0); 483 ``` 484 485 Therefore, we consider a generated column missing be more compatible, i.e. generated columns should be added last and dropped first. 486 487  488 489 #### Stored generated columns 490 491  492 493 A stored generated column can be changed to a normal column, and vice-versa on MySQL. TiDB does not support changing a normal column to a stored generated column though. 494 495 ```sql 496 -- Original schema: create table tbl (a int, b int as (a + 1) stored); 497 alter table tbl01 modify column b int; 498 -- ^ no longer generated 499 alter table tbl01 modify column b int as (a - 1) stored; 500 -- ^ again generated and overwrites everything (not supported by TiDB) 501 ``` 502 503 Changing a stored generated column to a normal column is effectively the same as adding the column, since the generated column is filtered out when reconstructing the SQL from binlog event. 504 505 Hence, the above DDL statements should be translated as 506 507 ```sql 508 -- generated → normal, first shard 509 alter table tbl modify column b int default null; 510 -- generated → normal, last shard 511 alter table tbl alter column b drop default; 512 -- normal → generated, first shard 513 alter table tbl alter column b set default null; 514 -- normal → generated, last shard 515 alter table tbl modify column b int as (a - 1) stored; 516 ``` 517 518 ### Partitions 519 520 (TODO: Fill in. But is anyone sane enough to manually shard partitioned tables?) 521 522 ### Creating and dropping tables 523 524 ```sql 525 create table tbl99 (…); 526 drop table tbl02; 527 recover table tbl02; 528 rename table tbl01 to irrelevant_table; 529 rename table irrelevant_table to tbl01; 530 ``` 531 532 When we create or drop a table, it would move into or out of the sharding group. This could have gazillions of effects on the group’s joined schema. For instance, all partially many added/dropped/renamed columns may suddenly be all confirmed due to losing a member. 533 534 DM would try its best to deduce the necessary DDL statements to reproduce the large diff, but some context can be lost (like add/drop vs rename). 535 536 (Note: currently DM ignores “drop table” and “drop database” DDLs involving sharding groups. We may continue to do so.) 537 538 ### Idempotent DDLs 539 540 Some DDL statements do not have any noticeable effect on the schema. These DDLs, if proved to be idempotent, could be propagated directly downstream. Examples: 541 542 - Reordering columns 543 544 - Changing table’s options (default character set, auto-increment ID, comment, etc.) 545 546 - Changing column’s options (comment) 547 548 - Truncating the table (really?) 549 550 ### Unsupported DDLs 551 552 DDL statements which the Schema Tracker does not understand or DM master cannot join should pause DM worker. User can sql-skip these statements and resume. 553 554 ## Restrictions 555 556 DM had some [restriction](https://pingcap.com/docs/dev/reference/tools/data-migration/features/shard-merge/#restrictions) when managing sharded tables. With the design above, these restrictions still remain: 557 558 - DDLs not recognized by TiDB are not supported, including but not limited to: 559 560 - Renaming columns 561 562 - Incompatibly changing column types (e.g. integer <-> char) 563 564 - Adding or removing primary keys 565 566 - Renaming, creating and dropping table should only be performed when the schemas among all shards are consistent. 567 568 - Schemas of the shards should not be diverged in a way that result in conflict, e.g. you should not 569 570 - add a column on two shards with same name but different types 571 572 - add a column on two shards with same name but different default values 573 574 - add a generated column to two shards with same name but different generated expressions 575 576 - add an index on two shards with same name but different key parts 577 578 - (we could try to explore further cases by fuzzing with generated DDLs and DMLs) 579 580 - (the effect of violating these cases should be documented) 581 582 - Upstream and downstream should have the same sql_mode. 583 584 Risks: 585 586 - All schemas of every shards should be consistent before and after running a batch of DDL statements. (← please further clarify) 587 588 - or, no DMLs before the schemas could become inconsistent 589 590 - worst-case fix: re-synchronize from the point where all schemas were still consistent. 591 592 Some restrictions are lifted, e.g. 593 594 - You can now execute DDLs in different order or combination, as long as the final result converges. 595 596 - The upstream MySQL schemas and downstream TiDB schema does not need to exactly match; ensuring upstream ≤ downstream is enough. 597 598 ## Task management 599 600 Programs can go wrong, and we may need to fix the states manually. We will need the following additional commands: 601 602 - disable-ddl-propagation 603 604 - Disallows DM master sending DDLs to downstream. 605 606 - enable-ddl-propagation 607 608 - Allows DM master sending DDLs to downstream. The schema diff during the period while DDL propagation was disabled are to be executed immediately. 609 610 - set-schema --worker 192.168.1.10:8262 --table '`db01`.`tbl02`' 611 612 - Forcibly replaces a table’s schema by a custom “create table” statement. 613 614 Commands involving DDL locks (show-ddl-locks, unlock-ddl-lock, break-ddl-lock) can be removed. 615 616 ## Scratch pad (please ignore) 617 618 --- 619 620 [VMware Tungsten Replicator](https://pubs.vmware.com/continuent/tungsten-replicator-5.0-oss/index.html) supports replicating MySQL binlog or Oracle CDC to any custom downstream database. The scenario closest to shard merging is [fan-in topology](https://pubs.vmware.com/continuent/tungsten-replicator-5.0-oss/deployment-fanin.html), where multiple masters replicate data to the same slave. 621 622 However, in face of online schema change, TR simply recommends [pausing replication to the slave](https://pubs.vmware.com/continuent/tungsten-replicator-5.0-oss/operations-schemachanges.html) when performing the DDLs, which isn’t a useful solution to us. 623 624 --- 625 626 A prior art for this is [Citus](http://docs.citusdata.com/en/v8.3/develop/reference_ddl.html), which only officially supports automatic handling of some special DDL kinds on a distributed table, such as “add column”, “drop index”, etc. Other statements must be [executed manually](http://docs.citusdata.com/en/v8.3/develop/reference_propagation.html#manual-prop) on the shards. 627 628 --- 629 630 We cannot support renaming where a column is both the source and target 631 632 ```sql 633 alter table tbl01 rename column d to e; 634 alter table tbl01 rename column c to d; 635 ``` 636 637 This would require downstream to accept the DML `insert into tbl (d) values (...);` with potentially two different types. DM master can prevent such situation by reporting error on the second DDL, by examining the column’s state transition. 638 639 | old \ new | dropped → partial | partial → partial | partial → exists | 640 | --------- | ----------------- | ----------------- | ---------------- | 641 | exists → partial | first shard | ? | ? | 642 | partial → partial | ? | remaining shard | ? | 643 | partial → dropped | ? | ? | last shard |