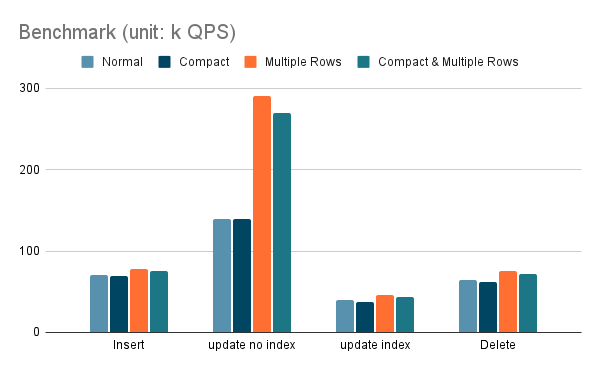

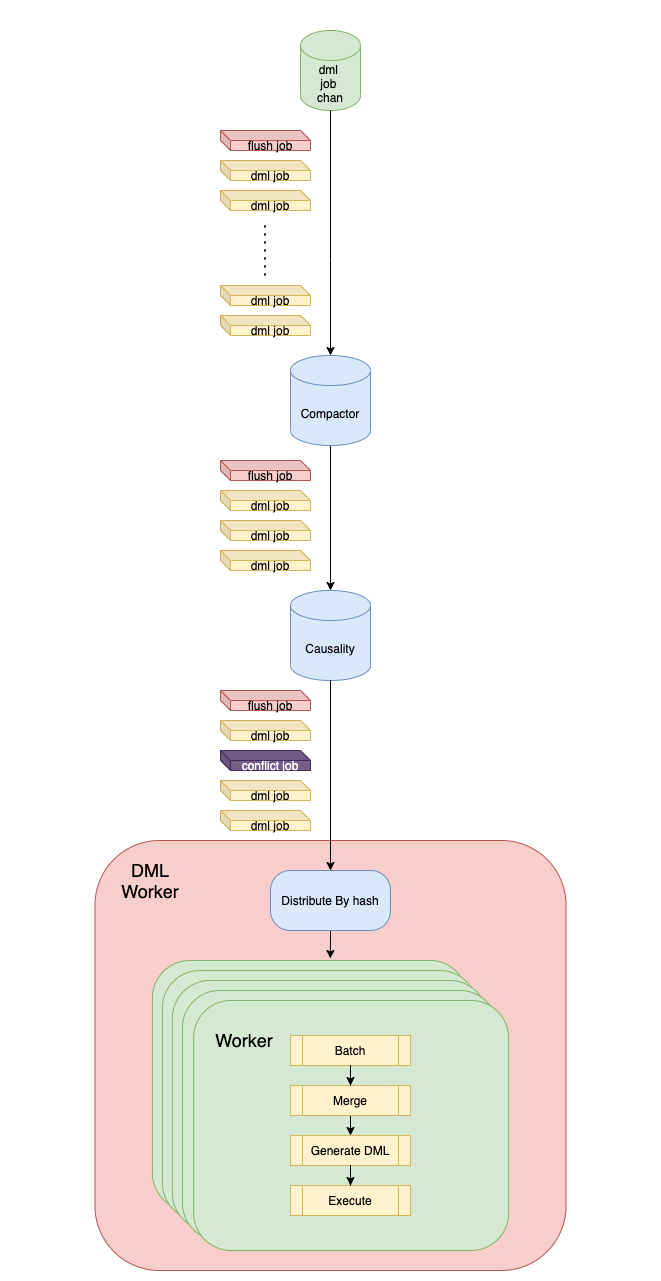

github.com/pingcap/tiflow@v0.0.0-20240520035814-5bf52d54e205/dm/docs/RFCS/20211008_dml_execution_optimization.md (about) 1 # Proposal: SQL Execution Optimization 2 3 - Author(s): [gmhdbjd](https://github.com/gmhdbjd) 4 - Last updated: 2021-10-08 5 6 ## Background 7 8 When the user replicates sharding task and the upstream has high-write workload, the downstream may have write bottleneck, resulting in the inability to catch up with the upstream or high synchronization latency. By analyzing the write logic, we try to optimize dml execution logic to maximize catching up with the upstream write speed when TiDB reaches the performance bottleneck. 9 10 ## Design 11 12 ### Compactor 13 14 Some users' write logic is: one INSERT statement followed by multiple UPDATE statements in a short time. We consider merging these DMLs into one INSERT statement, which can reduce the number of SQL synchronized to downstream. 15 16 ``` 17 # Assuming that UPDATE statement does not update the primary key. If no primary key, choose a UNIQUE NOT NULL Key. 18 # If UPDATE statement update the primary key or unique key, split it into one DELETE and one INSERT statement. 19 # We list two successive DMLs of one row and the result that can be compacted. X means this situation will not happen. 20 INSERT + INSERT => X 21 INSERT + UPDATE => INSERT 22 INSERT + DELETE => DELETE 23 UPDATE + INSERT => X 24 UPDATE + UPDATE => UPDATE 25 UPDATE + DELETE => DELETE 26 DELETE + INSERT => REPLACE(INSERT ON DUPLICATE KEY IGNORE) 27 DELETE + UPDATE => X 28 DELETE + DELETE => X 29 ``` 30 31 Now we can then compact all DMLs with the same primary key to a single DML (INSERT/UPDATE/DELETE), and output them in the original order. 32 33 > **Notice** 34 > 35 > We can only compact DMLs for those tables with PK or Not NULL UK. 36 > If a table only has a unique key which can be NULL, we cannot compact it. 37 > e.g. 38 > ``` 39 > // column `a` is UNIQUE KEY DEFAULT NULL 40 > INSERT INTO tb(a,b) VALUES(NULL,1); 41 > FLUSH COMPACTOR 42 > // cannot compact these two to INSERT because it update two rows 43 > INSERT INTO tb(a,b) VALUES(NULL,1); 44 > UPDATE tb SET a=NULL, b=2 WHERE a IS NULL AND b=1; 45 > ``` 46 > But we can still detect causality for it as we do now, because NULL can be regarded as a special value and can be hashed to a special worker. 47 > 48 49 ### Merge 50 51 Users may use single SQL statement to update a large amount of data, because DM is a row-based replication, it will turn the upstream range update into multiple row update, such as one `UPDATE WHERE id>=1 and id<=10` statement will be converted into ten `update where id=x` statemnets, which has a big impact on the write performance, so consider merging multiple single value DMLs into one multi-value DML. By generating SQL with multiple values, we expect to reduce TiDB parsing statement time, network interaction latency, TiDB CPU and TiKV gRPC usage. 52 53 ``` 54 # Assuming `a` is the primary key and UPDATE statement does not update the primary key. 55 56 INSERT tb(a,b) VALUES(1,1); 57 + INSERT tb(a,b) VALUES(2,2); 58 = INSERT tb(a,b) VALUES(1,1),(2,2); 59 60 UPDATE tb SET a=1, b=1 WHERE a=1; 61 + UPDATE tb SET a=2, b=2 WHERE a=2; 62 = INSERT tb(a,b) VALUES(1,1),(2,2) ON DUPLICATE UPDATE a=VALUES(a), b=VALUES(b); 63 64 DELETE tb WHERE a=1 65 + DELETE tb WHERE a=2 66 = DELETE tb WHERE (a) IN (1),(2); 67 ``` 68 69 By combining multiple update statements into a single `INSERT ON DUPLICATE UPDATE` statement, TiDB can use batch get rather than point get, which will reduct gRPC usage. 70 71 ### DML Flow 72 73 Now, all the DMLs compacted by compactor will be sent to causality, and then we can execute DMLs by hash in parallel. 74 75 So the processing flow of DML will look like the following diagram. 76 77  78 79 - Compactor compacts DMLs, output a ***batch*** of DML jobs in original order and flush job(receive from input channel) 80 - Causality detects conflict for DMLs, output DML jobs, conflict job(conflict detected) and flush job(receive from input channel) in ***streaming*** 81 - DMLWorker receives DML jobs from Causality in streaming, distribute them to the Workers by hash key, each Worker executes batch jobs and wait for next batch of jobs. 82 83 > **Notice** 84 > 85 > According to the principle of latency first, all components must give priority to output DML jobs when the downstream load is low, which means compactor should flush buffer when ouput channel is empty even if the buffer is not full and DML workers should execute jobs when previous jobs are executed even if the batch is not full. 86 > 87 88 ## Configuration 89 90 Two configuration items will be added to the task configuration file, and their default values will both be false. 91 ``` 92 syncers: 93 global: 94 compact: true 95 multiple-rows: true 96 ``` 97 98 ### Benchmark 99 100 Through a demo, it is measured that under a specific environment, merge multiple DMLs into a multi value DML has 9%, 100%, 15%, 18% row QPS improvement respectively in bulk insert, no index update, index update and delete. 101 102 | unit: k QPS | Normal | Compact | Multiple Rows | Compact & Multiple Rows | 103 | :-----------: | :----: | :-----: | :-----------: | :---------------------: | 104 | INSERT | 71 | 69 | 78 | 75 | 105 | UPDATE NO INDEX | 140 | 140 | 290 | 270 | 106 | UPDATE INDEX | 40 | 37 | 46 | 43 | 107 | DELETE | 64 | 62 | 77 | 72 | 108 109