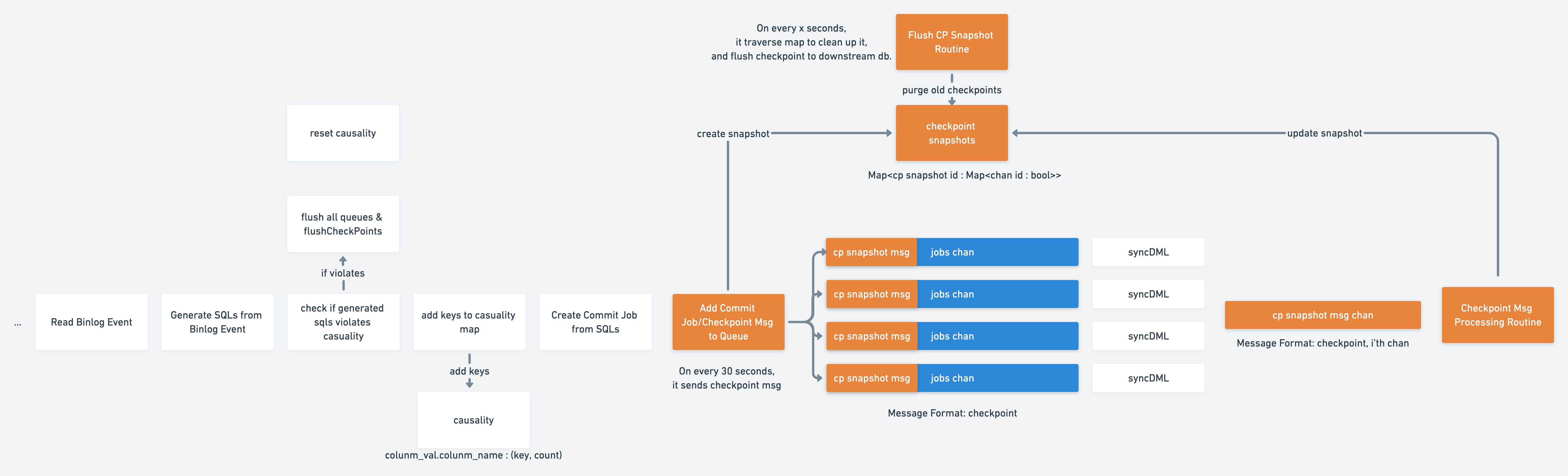

github.com/pingcap/tiflow@v0.0.0-20240520035814-5bf52d54e205/dm/docs/RFCS/20211012_async_checkpoint_flush.md (about) 1 # Proposal: Async Checkpoint Flush 2 3 - Author(s): [will](https://github.com/db-will) 4 - Last updated: 2021-10-12 5 6 ## Abstract 7 This proposal introduces an asynchronous mechanism to flush checkpoint in order to improve DM incremental replication performance. 8 9 Table of contents: 10 11 - [Background](#Background) 12 13 ## Background 14 In an incremental replication of DM, a checkpoint corresponds to the binlog position and other information of a binlog event that is successfully parsed and migrated to the downstream. Currently, a checkpoint is updated after the DDL operation is successfully migrated or 30 seconds after the last update. 15 16 For every 30 seconds, DM will synchronously flush checkpoints to the downstream database. The synchronous flushing blocks DM to pull and parse upstream binlog events, and so it impacts overall latency and throughput of DM. 17 18 Since checkpoint wasn’t designed to provide a very accurate replication progress info(thanks to [safe mode](https://docs.pingcap.com/tidb-data-migration/stable/glossary?_ga=2.227219742.2051636884.1631070434-1987700333.1624974822#safe-mode)), it’s only necessary to guarantee all upstream binlog events before saved checkpoint are replicated. Therefore, instead of synchronously flushing the checkpoint every 30 seconds, we can asynchronously flush the checkpoint without blocking DM to pull or process upstream binlog events. With async checkpoint flush, it can improve DM’s incremental replication throughput and latency. 19 20 Previously, [gmh](https://github.com/GMHDBJD) has conducted a performance testing to compare DM qps when checkpoint flush is enabled and disabled. It shows that there could be a 15% ~ 30% qps increase when checkpoint flush disabled. [glorv](https://github.com/glorv) created the initial proposal for aynsc checkpoint flush. On community side, there is a stale pr for [WIP: async flush checkpoints](https://github.com/pingcap/dm/pull/1627). But it hasn’t been updated for a very long time. 21 22 ## Goals 23 With asynchronous checkpoint flush: 24 - we should be able to see improvements on DM incremental replication qps and latency. 25 - it should guarantee that all events before checkpoint have been flushed. 26 - it should be upgradable and compatible with previous versions. 27 28 ## Current Implementation - synchronously checkpoints flush 29  30 31 As shown in the above diagram, the current bottleneck is the red box - “Add Commit Job to Queue”, where it will block further pulling and parsing upstream binlog events every 30 seconds when a flush checkpoint is needed. 32 33 ### How Causality Works With Checkpoint 34 Here is an example about how dml generate causality relations: 35 ``` 36 create table t1 (id int not null primary key, username varchar(60) not null unique key, pass varchar(60) not null unique key); 37 38 insert into t1 values (3, 'a3', 'b3'); 39 insert into t1 values (5, 'a5', 'b5'); 40 insert into t1 values (7, 'a7', 'b7'); 41 ``` 42 43 DM causality relations: 44 ``` 45 { 46 "3.id.`only_dml`.`t1`":"3.id.`only_dml`.`t1`", 47 "5.id.`only_dml`.`t1`":"5.id.`only_dml`.`t1`", 48 "7.id.`only_dml`.`t1`":"7.id.`only_dml`.`t1`", 49 "a3.username.`only_dml`.`t1`":"3.id.`only_dml`.`t1`", 50 "a5.username.`only_dml`.`t1`":"5.id.`only_dml`.`t1`", 51 "a7.username.`only_dml`.`t1`":"7.id.`only_dml`.`t1`", 52 "b3.pass.`only_dml`.`t1`":"3.id.`only_dml`.`t1`", 53 "b5.pass.`only_dml`.`t1`":"5.id.`only_dml`.`t1`", 54 "b7.pass.`only_dml`.`t1`":"7.id.`only_dml`.`t1`", 55 } 56 ``` 57 58 For each DML generated from upstream binlog event, DM will create a list of causality keys from primary key and unique key related to the DML, and then those keys will be added into global causality relations map. If a DML contains unique keys, those unique keys' value will point to the primary key as described in above causality relation map example. 59 60 Causality is used to prevent dmls related to the same primary key or unique key from being executed out of order. For example: 61 ``` 62 create table t1(id int primary key, sid varchar) 63 update t1 set sid='b' where id = 1 64 update t1 set sid='c' where id = 1 65 update t1 set sid='d' where id = 1 66 ``` 67 68 With a causality mechanism, we are guaranteed that these dmls will be executed in the same order as the upstream database does. 69 70 71 ## Proposed Implementation - asynchronously checkpoints flush 72  73 The component colored with orange are new components added for async checkpoint flush. 74 75 Instead of blocking to flush checkpoint, the idea of asynchronous flush checkpoint is to add a checkpoint snapshot message into each job channel, and forward it to the checkpoint snapshot message processing unit, then it will continuously update a central checkpoint map; At the same time, we periodically scan the central checkpoint snapshots map to flush the most recent checkpoint snapshot that already received all jobs chan’s checkpoint snapshot flush message, and clean up flushed checkpoints in the map. 76 77 The central checkpoint snapshot map is a map that uses a checkpoint snapshot id as key, a map of channel id mapped to bool as value. 78 79 The checkpoint snapshot id is a specific format of time(eg. 0102 15:04:05) representing when it’s created, and so we can easily sort them based on when it’s created. 80 81 The job channel id to bool map indicates which job channel’s checkpoint snapshot message has been received. As long as we have received all job channel’s checkpoint snapshot messages, we can flush the checkpoint snapshot. 82 83 However, the missing part is that we are leaving the causality structure filled with flushed jobs causality keys, and hence it could falsely trigger checkpoint flush even if the conflicting key’s jobs have been executed/flushed. We still need to explore the workload potentially causing a serious performance impact with the async checkpoint flush. 84 85 ### Causality Optimization 86  87 The component colored with orange are new components added for async checkpoint flush. 88 89 At the time of executing a batch of sql jobs in syncDML, we can collect those causality keys from these executed sql jobs, and so we can collect causality keys to delete in a separate data structure(TBD). There is a seperate routine running in background to purge causality keys to delete and update current causality on certain time interval. 90 91 Whenever we detect causality conflicts during processing upstream binlog event, we can update the current out-of-date causality with those causality-to-delete keys, and then recheck if it still violates causality rules. If still so, we will need to pause and flush all jobs in the channel. 92 93 This optimization is designed for keeping causality up to date and hence avoid stale causality keys conflicts caused checkpoint flush. 94 95 ## Testing 96 A separate testing doc needs to be created to fully design the testing cases, configurations and workload, failure points etc. In this section, we describe key points we need to evaluate the performance and compatibility of asynchronous checkpoints flush feature. 97 98 ### Performance 99 For performance testing, we would like to evaluate the asynchronous flush checkpoint feature from following aspects: 100 - Compared to the synchronous one, what’s the performance gain? 101 - Find workload/config easily violates causality check, and how does that impact performance? Does asynchronous perform better than the existing synchronous method? 102 - Try find workload/config cause checkpoint message queue busy/full, and check if perf is impacted? 103 - Check the actual performance impact with different checkpoint message interval and checkpoint flush interval 104 105 ### Compatibility 106 For compatibility testing, we would like to evaluate async checkpoint flush compatibility with DM operations, safe mode, and previous DM versions. 107 - Evaluate if pause & restart DM at different point, DM will still continue works well 108 - Evaluate if upgrading DM from lower DM version to current DM version will work 109 - Evaluate if async checkpoint compatible with safe mode DM 110 111 ## Metrics & Logs 112 Since extra components are introduced with asynchronous checkpoint flush, we need to add related metrics & logs to help us observe the DM cluster. The following design will subject to change in the development phase. 113 114 To get a better view of these new metrics and their impact on performance, we can add the [new Grafana dashboard](https://github.com/pingcap/dm/blob/master/dm/dm-ansible/scripts/DM-Monitor-Professional.json). 115 116 ### Checkpoint 117 #### Mertics 118 With following two metrics, we can conclude the time span of checkpoint snapshot msg: 119 - To understand start time of checkpoint snapshot msg, we record the checkpoint position when it’s pushed into the job chan 120 - To understand end time of checkpoint snapshot msg, we record the checkpoint pos when [checkpoint msg processing routine] find out a recent checkpoint is ready to flush at the time it process the received checkpoint msg from a channel 121 - To understand flush time of checkpoint, we record the checkpoint when it flushed in [checkpoint flush routine] 122 #### Logs 123 Log checkpoint when it’s pushed into job channel(need to evaluate its perf impact due to large amount of log generated) 124 Log checkpoint when it’s ready to flush after received all job chan msg(need to evaluate its perf impact due to large amount of log generated) 125 Log flushed and purged checkpoints 126 ### Causality 127 #### Mertics 128 To understand how many causality keys are added, we record the number of causality keys added into central causality structure and we can use sum to see the total number of causality keys added so far 129 130 To understand how many causality keys are resetted, we record the number of causality keys resetted. 131 132 Combine above two metrics, it will give us the number of causality keys at a certain moment. 133 134 Similar methods are applied to causality to delete structure, and we need to monitor the size of causality to delete. 135 #### Logs 136 Log information whenever causality or causality-to-delete are resetted. 137 Log causality keys when it’s added into causality or causality-to-delete data structure. 138 139 Both two pieces of information could be used for further debugging issues related to causality. 140 141 ## Milestones 142 ### Milestone 1 - Asynchronous Flush Checkpoint 143 For our first milestone, we will work on asynchronous flush checkpoint implementation, and measure the impact of causality keys conflicts. 144 145 In this stage, we focus on delivering code fast and test its performance to understand its impact for performance. 146 147 There are major work needed to be done for this milestone: 148 Asynchronous flush checkpoint implementation 149 Checkpoint related metrics and logs 150 Performance metrics of asynchronous flush checkpoint(especially when workload easily trigger causality conflicts) 151 No need to do comprehensive performance here, we focus on find out potential performance bottlenecks/impacts 152 Control memory consumption when no causality conflicts for a long time. 153 154 ### Milestone 2 - Asynchronous Flush Checkpoint With Causality Optimization 155 For our second milestone, if we find there are serious impacts on async flush checkpoint performance under causality conflicts, we will work on causality optimization. 156 157 In this stage, we focus on the quality of code of async flush checkpoint code. There are major work needed to done at this milestone: 158 Asynchronous flush checkpoint implementation with causality optimization 159 Causality related metrics and logs 160 Performance metrics of the implementation with causality optimization(especially when workload easily trigger causality conflicts) 161 No need to do comprehensive performance here, we focus on find out potential performance bottlenecks/impacts 162 Control memory consumption when no causality conflicts for a long time. 163 164 ### Milestone 3 - Testing 165 At this final stage, we will focus on comprehensive performance, compatibility, functionality testing, integration testing of asynchronous flush checkpoint. 166 There are major work needed to done at this milestone: 167 Testing design doc of asynchronous flush checkpoint 168 Configurable checkpoint flush interval config, need to support dynamically update 169 Conduct various testing on the feature 170 Optional, try DM with mock customer workload 171