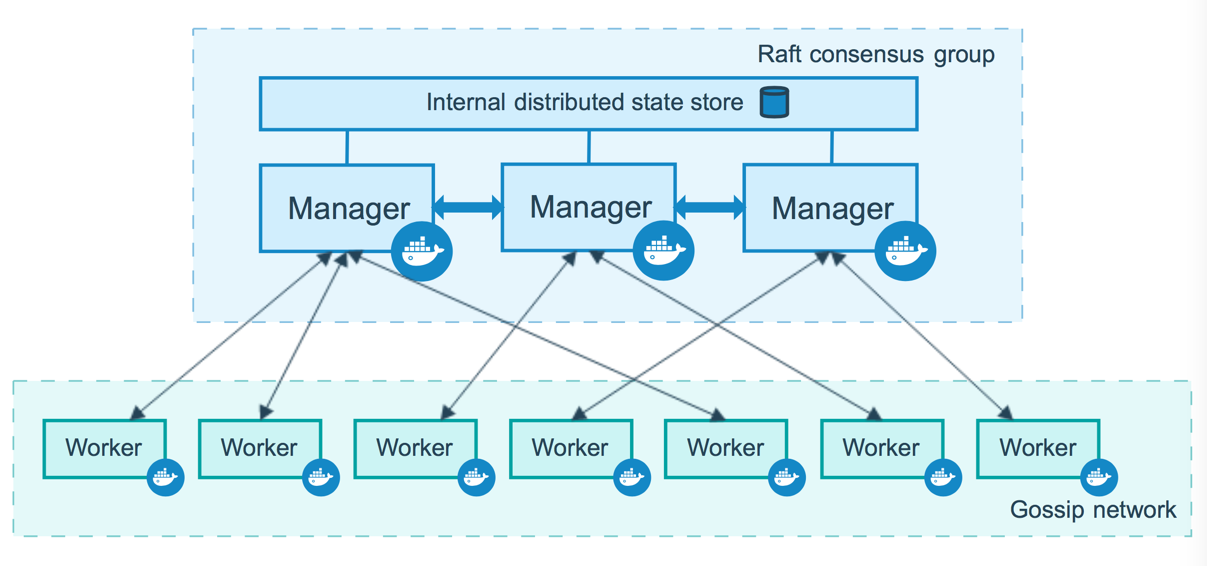

<!--[metadata]>
+++
aliases = [
"/engine/swarm/how-swarm-mode-works/"
]
title = "How nodes work"
description = "How swarm nodes work"
keywords = ["docker, container, cluster, swarm mode, node"]
[menu.main]
identifier="how-nodes-work"
parent="how-swarm-works"
weight="3"
+++
<![end-metadata]-->

# How nodes work

Docker Engine 1.12 introduces swarm mode, which enables you to create a
cluster of one or more Docker Engines called a swarm. A swarm consists
of one or more nodes: physical or virtual machines running Docker
Engine 1.12 or later in swarm mode.

There are two types of nodes: [**managers**](#manager-nodes) and
[**workers**](#worker-nodes).

If you haven't already, read through the [swarm mode overview](../index.md) and [key concepts](../key-concepts.md).

## Manager nodes

Manager nodes handle cluster management tasks:

* maintaining cluster state
* scheduling services
* serving swarm mode [HTTP API endpoints](../../reference/api/index.md)

Using a [Raft](https://raft.github.io/raft.pdf) implementation, the managers
maintain a consistent internal state of the entire swarm and all the services
running on it. For testing purposes it is OK to run a swarm with a single
manager. If the manager in a single-manager swarm fails, your services will
continue to run, but you will need to create a new cluster to recover.

To take advantage of swarm mode's fault-tolerance features, Docker recommends
you implement an odd number of manager nodes according to your organization's
high-availability requirements. When you have multiple managers you can recover
from the failure of a manager node without downtime.

* A three-manager swarm tolerates a maximum loss of one manager.
* A five-manager swarm tolerates a maximum simultaneous loss of two
manager nodes.
* An `N` manager cluster will tolerate the loss of at most
`(N-1)/2` managers (rounded down when `N` is even).
* Docker recommends a maximum of seven manager nodes for a swarm.

>**Important Note**: Adding more managers does NOT mean increased
scalability or higher performance. In general, the opposite is true.

## Worker nodes

Worker nodes are also instances of Docker Engine whose sole purpose is to
execute containers. Worker nodes don't participate in the Raft distributed
state, make scheduling decisions, or serve the swarm mode HTTP API.

You can create a swarm of one manager node, but you cannot have a worker node
without at least one manager node. By default, all managers are also workers.
In a single manager node cluster, you can run commands like `docker service
create` and the scheduler will place all tasks on the local Engine.

To prevent the scheduler from placing tasks on a manager node in a multi-node
swarm, set the availability for the manager node to `Drain`. The scheduler
gracefully stops tasks on nodes in `Drain` mode and schedules the tasks on an
`Active` node. The scheduler does not assign new tasks to nodes with `Drain`
availability.

Refer to the [`docker node update`](../../reference/commandline/node_update.md)
command line reference to see how to change node availability.

## Changing roles

You can promote a worker node to be a manager by running `docker node promote`.
For example, you may want to promote a worker node when you
take a manager node offline for maintenance. See [node promote](../../reference/commandline/node_promote.md).

You can also demote a manager node to a worker node. See
[node demote](../../reference/commandline/node_demote.md).

## What's Next

* Read about how swarm mode [services](services.md) work.
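
The fault-tolerance figures listed under "Manager nodes" follow from the `(N-1)/2` rule given there. As a rough illustration, the sketch below computes the tolerated losses for a few manager counts. It assumes the usual Raft requirement that a majority of managers must remain available; the function names `max_manager_failures` and `quorum_size` are hypothetical helpers written for this example and are not part of Docker or its API.

```python
def quorum_size(managers: int) -> int:
    """Smallest number of managers that forms a majority (assumed Raft quorum)."""
    if managers < 1:
        raise ValueError("a swarm needs at least one manager")
    return managers // 2 + 1


def max_manager_failures(managers: int) -> int:
    """Managers that can be lost while a majority survives: (N-1)/2, rounded down."""
    if managers < 1:
        raise ValueError("a swarm needs at least one manager")
    return (managers - 1) // 2


if __name__ == "__main__":
    # Hypothetical manager counts chosen for illustration only.
    for n in (1, 3, 4, 5, 7):
        print(
            f"{n} manager(s): quorum of {quorum_size(n)}, "
            f"tolerates {max_manager_failures(n)} failure(s)"
        )
```

Under these assumptions, four managers tolerate no more failures than three, which is consistent with the recommendation to run an odd number of managers.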