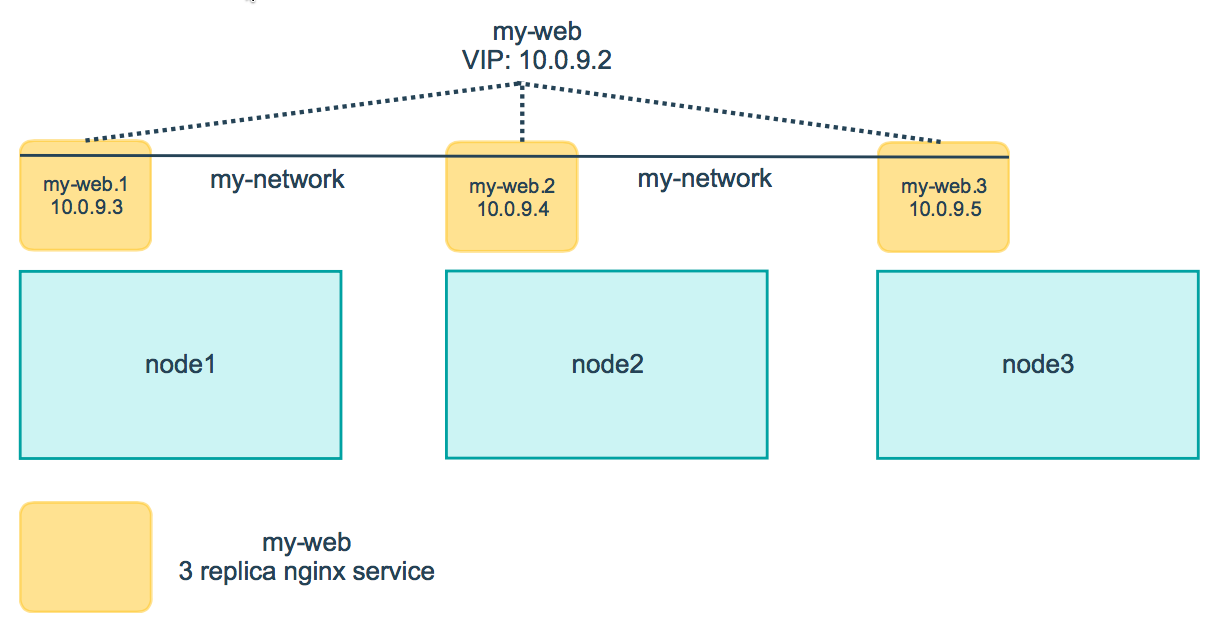

<!--[metadata]>
+++
title = "Attach services to an overlay network"
description = "Use swarm mode networking features"
keywords = ["guide", "swarm mode", "swarm", "network"]
[menu.main]
identifier="networking-guide"
parent="engine_swarm"
weight=16
+++
<![end-metadata]-->

# Attach services to an overlay network

Docker Engine swarm mode natively supports **overlay networks**, so you can
enable container-to-container networks. When you use swarm mode, you don't need
an external key-value store. Features of swarm mode overlay networks include the
following:

* You can attach multiple services to the same network.
* By default, **service discovery** assigns a virtual IP address (VIP) and DNS
entry to each service in the swarm, making it available by its service name to
containers on the same network.
* You can configure the service to use DNS round-robin instead of a VIP.

To use overlay networks in the swarm, you need to have the following
ports open between the swarm nodes before you enable swarm mode:

* Port `7946` TCP/UDP for container network discovery.
* Port `4789` UDP for the container overlay network.

## Create an overlay network in a swarm

When you run Docker Engine in swarm mode, you can run `docker network create`
from a manager node to create an overlay network. For instance, to create a
network named `my-network`:

```
$ docker network create \
  --driver overlay \
  --subnet 10.0.9.0/24 \
  --opt encrypted \
  my-network

273d53261bcdfda5f198587974dae3827e947ccd7e74a41bf1f482ad17fa0d33
```

By default, nodes in the swarm encrypt traffic between themselves and other
nodes. The optional `--opt encrypted` flag enables an additional layer of
encryption in the overlay driver for VXLAN traffic between containers on
different nodes.
For more information, refer to [Docker swarm mode overlay network security model](../userguide/networking/overlay-security-model.md).

The `--subnet` flag specifies the subnet for use with the overlay network. When
you don't specify a subnet, the swarm manager automatically chooses a subnet and
assigns it to the network. On some older kernels, including kernel 3.10,
automatically assigned addresses may overlap with another subnet in your
infrastructure. Such overlaps can cause connectivity issues or failures with
containers connected to the network.

Before you attach a service to the network, the network only extends to manager
nodes. You can run `docker network ls` to view the network:

```bash
$ docker network ls

NETWORK ID          NAME                DRIVER              SCOPE
f9145f09b38b        bridge              bridge              local
..snip..
bd0befxwiva4        my-network          overlay             swarm
```

The `swarm` scope indicates that the network is available for use with services
deployed to the swarm. After you create a service attached to the network, the
swarm only extends the network to worker nodes where the scheduler places tasks
for the service. On workers without tasks running for a service attached to the
network, `docker network ls` does not display the network.

## Attach a service to an overlay network

To attach a service to an overlay network, pass the `--network` flag when you
create a service. For example, to create an nginx service attached to a
network called `my-network`:

```bash
$ docker service create \
  --replicas 3 \
  --name my-web \
  --network my-network \
  nginx
```

>**Note:** You have to create the network before you can attach a service to it.

The containers for the tasks in the service can connect to one another on the
overlay network. The swarm extends the network to all the nodes with `Running`
tasks for the service.
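
The note above implies an ordering: the network must exist before
`docker service create --network` can reference it. The following is a minimal
sketch of a guard for scripts, assuming it runs on a manager node. The function
name `ensure_overlay_network` is hypothetical and not part of the Docker CLI;
the sketch relies only on `docker network inspect` exiting with a nonzero status
when the network is not found.

```bash
# Hypothetical helper: create the overlay network only if it is missing.
# Usage: ensure_overlay_network <network-name>
ensure_overlay_network() {
  name="$1"
  # `docker network inspect` fails when the network does not exist.
  if docker network inspect "$name" >/dev/null 2>&1; then
    echo "network $name already exists"
  else
    # Discard the printed network ID; report a short status line instead.
    docker network create --driver overlay "$name" >/dev/null &&
      echo "network $name created"
  fi
}
```

You could call `ensure_overlay_network my-network` before the
`docker service create` command shown above. The sketch does not pass
`--subnet` or `--opt encrypted`; add them if your network needs them.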

From a manager node, run `docker service ps <SERVICE>` to view the nodes where
tasks are running for the service:

```bash
$ docker service ps my-web

ID                         NAME      IMAGE  NODE   DESIRED STATE  CURRENT STATE               ERROR
63s86gf6a0ms34mvboniev7bs  my-web.1  nginx  node1  Running        Running 58 seconds ago
6b3q2qbjveo4zauc6xig7au10  my-web.2  nginx  node2  Running        Running 58 seconds ago
66u2hcrz0miqpc8h0y0f3v7aw  my-web.3  nginx  node3  Running        Running about a minute ago
```

You can inspect the network from any node with a `Running` task for a service
attached to the network:

```bash
$ docker network inspect <NETWORK>
```

The network information includes a list of the containers on the node that are
attached to the network. For instance:

```bash
$ docker network inspect my-network
[
    {
        "Name": "my-network",
        "Id": "7m2rjx0a97n88wzr4nu8772r3",
        "Scope": "swarm",
        "Driver": "overlay",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": null,
            "Config": [
                {
                    "Subnet": "10.0.9.0/24",
                    "Gateway": "10.0.9.1"
                }
            ]
        },
        "Internal": false,
        "Containers": {
            "404d1dec939a021678132a35259c3604b9657649437e59060621a17edae7a819": {
                "Name": "my-web.1.63s86gf6a0ms34mvboniev7bs",
                "EndpointID": "3c9588d04db9bc2bf8749cb079689a3072c44c68e544944cbea8e4bc20eb7de7",
                "MacAddress": "02:42:0a:00:09:03",
                "IPv4Address": "10.0.9.3/24",
                "IPv6Address": ""
            }
        },
        "Options": {
            "com.docker.network.driver.overlay.vxlanid_list": "257"
        },
        "Labels": {}
    }
]
```

In the example above, the container `my-web.1.63s86gf6a0ms34mvboniev7bs` for the
`my-web` service is attached to the `my-network` network on node1.

## Use swarm mode service discovery

By default, when you create a service attached to a network, the swarm assigns
the service a VIP.
The VIP maps to a DNS alias based upon the service name.
Containers on the network share DNS mappings for the service via gossip, so any
container on the network can access the service via its service name.

You don't need to expose service-specific ports to make the service
available to other services on the same overlay network. The swarm's internal
load balancer automatically distributes requests to the service VIP among the
active tasks.

You can inspect the service to view the virtual IP. For example:

```bash
$ docker service inspect \
  --format='{{json .Endpoint.VirtualIPs}}' \
  my-web

[{"NetworkID":"7m2rjx0a97n88wzr4nu8772r3","Addr":"10.0.9.2/24"}]
```

The following example shows how you can add a `busybox` service on the same
network as the `nginx` service so that the busybox service can access `nginx`
using the DNS name `my-web`:

1. From a manager node, deploy a busybox service to the same network as
`my-web`:

    ```bash
    $ docker service create \
      --name my-busybox \
      --network my-network \
      busybox \
      sleep 3000
    ```

2. Look up the node where `my-busybox` is running:

    ```bash
    $ docker service ps my-busybox

    ID                         NAME          IMAGE    NODE   DESIRED STATE  CURRENT STATE          ERROR
    1dok2cmx2mln5hbqve8ilnair  my-busybox.1  busybox  node1  Running        Running 5 seconds ago
    ```

3. From the node where the busybox task is running, open an interactive shell to
the busybox container:

    ```bash
    $ docker exec -it my-busybox.1.1dok2cmx2mln5hbqve8ilnair /bin/sh
    ```

    You can deduce the container name as `<TASK-NAME>.<ID>`. Alternatively,
    you can run `docker ps` on the node where the task is running.

4. From inside the busybox container, query the DNS to view the VIP for the
`my-web` service:

    ```bash
    $ nslookup my-web

    Server:    127.0.0.11
    Address 1: 127.0.0.11

    Name:      my-web
    Address 1: 10.0.9.2 ip-10-0-9-2.us-west-2.compute.internal
    ```

    >**Note:** the examples here use `nslookup`, but you can use `dig` or any
    available DNS query tool.

5. From inside the busybox container, query the DNS using the special name
`tasks.<SERVICE-NAME>` to find the IP addresses of all the containers for the
`my-web` service:

    ```bash
    $ nslookup tasks.my-web

    Server:    127.0.0.11
    Address 1: 127.0.0.11

    Name:      tasks.my-web
    Address 1: 10.0.9.4 my-web.2.6b3q2qbjveo4zauc6xig7au10.my-network
    Address 2: 10.0.9.3 my-web.1.63s86gf6a0ms34mvboniev7bs.my-network
    Address 3: 10.0.9.5 my-web.3.66u2hcrz0miqpc8h0y0f3v7aw.my-network
    ```

6. From inside the busybox container, run `wget` to access the nginx web server
running in the `my-web` service:

    ```bash
    $ wget -O- my-web

    Connecting to my-web (10.0.9.2:80)
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    ...snip...
    ```

    The swarm load balancer automatically routes the HTTP request sent to the
    service's VIP to an active task. It distributes subsequent requests to
    other tasks using round-robin selection.

## Use DNS round-robin for a service

You can configure the service to use DNS round-robin directly without using a
VIP by setting `--endpoint-mode dnsrr` when you create the service. DNS
round-robin is useful in cases where you want to use your own load balancer.
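
If you feed task addresses to your own load balancer, you need them as a plain
list rather than as `nslookup` output. The following is a minimal sketch,
assuming busybox-style `nslookup` output like the `tasks.my-web` result shown
earlier in this guide. The function name `task_ips` is hypothetical, and other
DNS tools format their output differently.

```bash
# Hypothetical helper: read busybox-style nslookup output on stdin and
# print one task IP address per line.
task_ips() {
  # Address lines before "Name:" describe the DNS server, so skip them.
  awk '/^Name:/ { seen = 1; next }
       seen && /^Address [0-9]+:/ { print $3 }'
}
```

From inside a container on the network, you could run
`nslookup my-dnsrr-service | task_ips`, where `my-dnsrr-service` is the example
service name used in this section.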

The following example shows a service with `dnsrr` endpoint mode:

```bash
$ docker service create \
  --replicas 3 \
  --name my-dnsrr-service \
  --network my-network \
  --endpoint-mode dnsrr \
  nginx
```

When you query the DNS for the service name, the DNS service returns the IP
addresses for all the task containers:

```bash
$ nslookup my-dnsrr-service
Server:    127.0.0.11
Address 1: 127.0.0.11

Name:      my-dnsrr-service
Address 1: 10.0.9.8 my-dnsrr-service.1.bd3a67p61by5dfdkyk7kog7pr.my-network
Address 2: 10.0.9.10 my-dnsrr-service.3.0sb1jxr99bywbvzac8xyw73b1.my-network
Address 3: 10.0.9.9 my-dnsrr-service.2.am6fx47p3bropyy2dy4f8hofb.my-network
```

## Confirm VIP connectivity

In general, we recommend you use `dig`, `nslookup`, or another DNS query tool to
test access to the service name via DNS. Because a VIP is a logical IP, `ping`
is not the right tool to confirm VIP connectivity.

## Learn more

* [Deploy services to a swarm](services.md)
* [Swarm administration guide](admin_guide.md)
* [Docker Engine command line reference](../reference/commandline/index.md)
* [Swarm mode tutorial](swarm-tutorial/index.md)