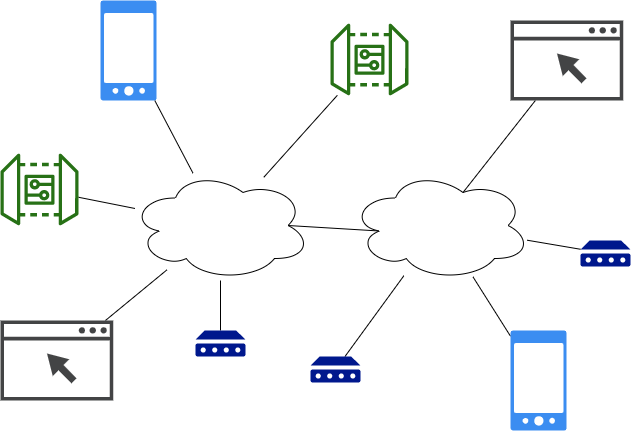

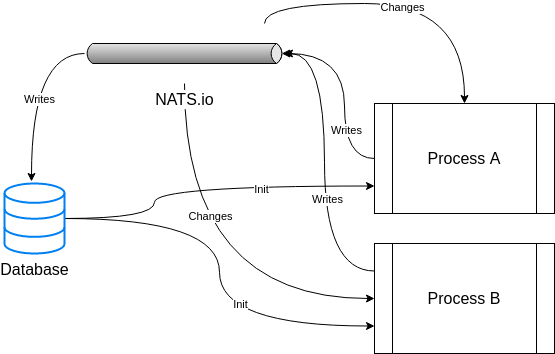

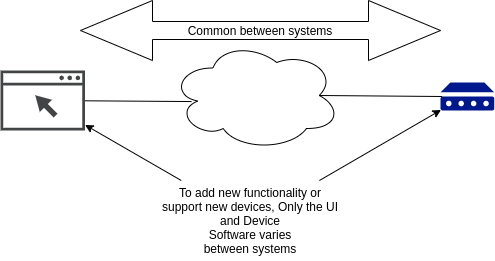

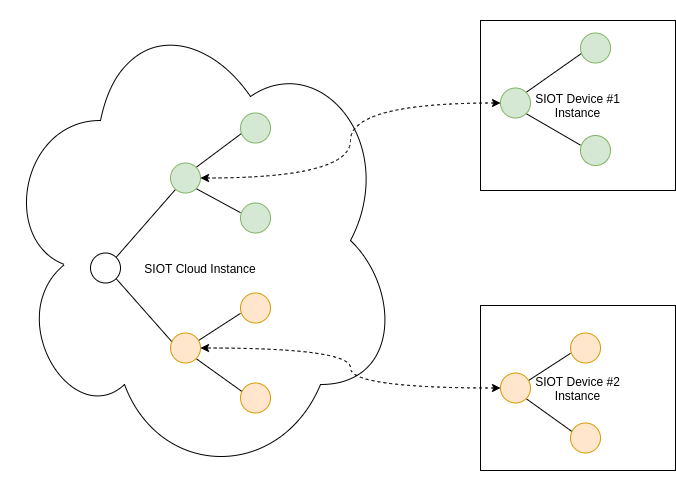

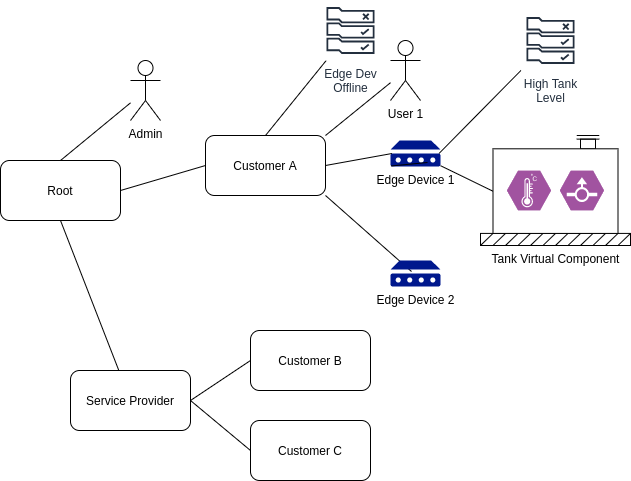

# System Architecture

**Contents**

<!-- toc -->

## IoT Systems are distributed systems

IoT systems are inherently distributed: data needs to be synchronized between a
number of different systems, including:

1. Cloud (one to several instances depending on the level of reliability
   desired)
2. Edge devices (many instances)
3. User Interface (phone, browser)

Typically, the cloud instance stores all the system data, and the edge, browser,
and mobile devices access a subset of the system data.

## Extensible architecture

Any `siot` app can function as a standalone instance, a client, a server, or
both a client and a server. For example, `siot` can function as both an edge
app (client) and a cloud app (server).

- full client: full siot node that initiates and maintains a connection with
  another siot instance on a server. Can be behind a firewall, NAT, etc.
- server: needs to be on a network that is accessible by clients

We also need the concept of a lean client, where an effort is made to minimize
the application size to facilitate updates over IoT cellular networks where data
is expensive.

## Device communication and messaging

In an IoT system, data from sensors is continually streaming, so we need some
type of messaging system to transfer the data between the various instances in
the system. This project uses NATS.io for messaging. Some reasons:

- allows us to [push realtime data](https://youtu.be/REZ6DKvRVv0) to an edge
  device behind a NAT, on a cellular network, etc -- no public IP address, VPN,
  etc required.
- is more efficient than HTTP as it shares one persistent TCP connection for all
  messages. The overhead and architecture are similar to MQTT, which is proven
  to be a good IoT solution. It may also use fewer resources than something like
  observing resources in CoAP systems, where each observation requires a
  separate persistent connection.
- can scale out with multiple servers to provide redundancy or more capacity.
- is written in Go, so it is possible to embed the server to make deployments
  simpler for small systems. Also, Go services are easy to manage as there are
  no dependencies.
- focuses on simplicity -- its values fit this project.
- has a good security model.

For systems that only need to send one value several times a day, CoAP is
probably a better solution than NATS. Initially we are focusing on systems that
send more data -- perhaps 5-30 MB/month. There is no reason we can't support
CoAP as well in the future.

## Data modification

Where possible, modifying data (especially nodes) should be initiated over NATS
rather than through direct database calls. This ensures anything in the system
can have visibility into data changes. Eventually we may want to hide database
operations that do writes to force them to be initiated through a NATS message.

## Simple, Flexible data structures

As we work on IoT systems, data structures (types) tend to emerge. Common data
structures allow us to develop common algorithms and mechanisms to process data.
Instead of defining a new data type for each type of sensor, define one type
that will work with all sensors. Then the storage (both static and time-series),
synchronization, charting, and rule logic can stay the same, and adding
functionality to the system typically only involves changing the edge
application and the frontend UI. Everything between these two end points can
stay the same. This is a very powerful and flexible model as it is trivial to
support new sensors and applications.

See [Data](data.md) for more information.

## Node Tree

The same Simple IoT application can run in both the cloud and device instances.
The node tree in a device would then become a subset of the nodes in the cloud
instance. Changes can be made to nodes in either the cloud or the device, and
data is synchronized in both directions.

The following diagram illustrates how nodes might be arranged in a typical
system.

A few notes on this data structure:

- A user has access to its child nodes, parent nodes, and parent node
  descendants (parents, children, siblings, nieces/nephews).
- Likewise, a rule node processes points from nodes using the same relationships
  described above.
- A user can be added to any node. This allows permissions to be granted at any
  level in the system.
- A user can be added to multiple nodes.
- A node admin user can configure nodes under it. This allows a service provider
  to configure the system for their own customers.
- If a point changes, it triggers rules of upstream nodes to run (perhaps paced
  to some reasonable interval).
- The _Edge Dev Offline_ rule will fire if any of the Edge devices go offline.
  This allows us to write this rule only once to cover many devices.
- When a rule triggers a notification, the rule node and any upstream nodes can
  optionally notify their users.

The distributed parts of the system include the following instances:

- **Cloud** (could be multiple for redundancy). The cloud instances would
  typically store and synchronize the root node and everything under it.
- **Edge Devices** (typically many instances (1000s) connected via low
  bandwidth cellular data). Edge instances would store and synchronize the edge
  node instance and its descendants (e.g., Edge Device 1).
- **Web UI** (potentially dozens of instances connected via a higher bandwidth
  browser connection).

As this is a distributed system where nodes may be created on any number of
connected systems, node IDs need to be unique. A unique serial number or UUID is
recommended.
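
As a minimal sketch of the UUID recommendation above, the following Go program generates random (version 4) UUIDs using only the standard library. The `newNodeID` helper is a hypothetical name used for illustration and is not taken from the Simple IoT code base; a real deployment might use a device serial number or a UUID library instead.

```go
package main

import (
	"crypto/rand"
	"fmt"
)

// newNodeID is a hypothetical helper (not part of Simple IoT) that returns a
// random version 4 UUID string suitable for use as a node ID.
func newNodeID() (string, error) {
	var b [16]byte
	// Fill all 16 bytes from the operating system's secure random source.
	if _, err := rand.Read(b[:]); err != nil {
		return "", err
	}
	b[6] = (b[6] & 0x0f) | 0x40 // set the version field to 4 (random)
	b[8] = (b[8] & 0x3f) | 0x80 // set the RFC 4122 variant bits
	// Format as the usual 8-4-4-4-12 hexadecimal groups.
	return fmt.Sprintf("%x-%x-%x-%x-%x",
		b[0:4], b[4:6], b[6:8], b[8:10], b[10:16]), nil
}

func main() {
	// A cloud instance and an edge instance can each create node IDs
	// independently, without asking a central authority for the next ID.
	cloudID, err := newNodeID()
	if err != nil {
		panic(err)
	}
	edgeID, err := newNodeID()
	if err != nil {
		panic(err)
	}
	fmt.Println("node created in cloud:", cloudID)
	fmt.Println("node created on edge: ", edgeID)
}
```

Because each ID is drawn from 122 random bits, independently created nodes are very unlikely to collide when the trees are later synchronized, which is the property the paragraph above asks for.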