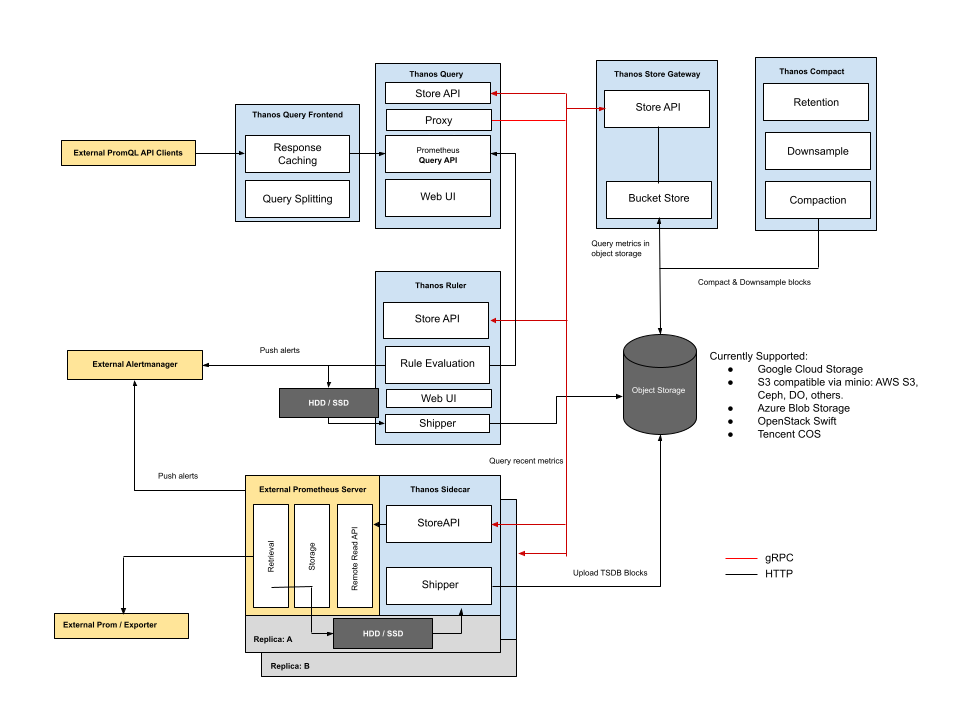

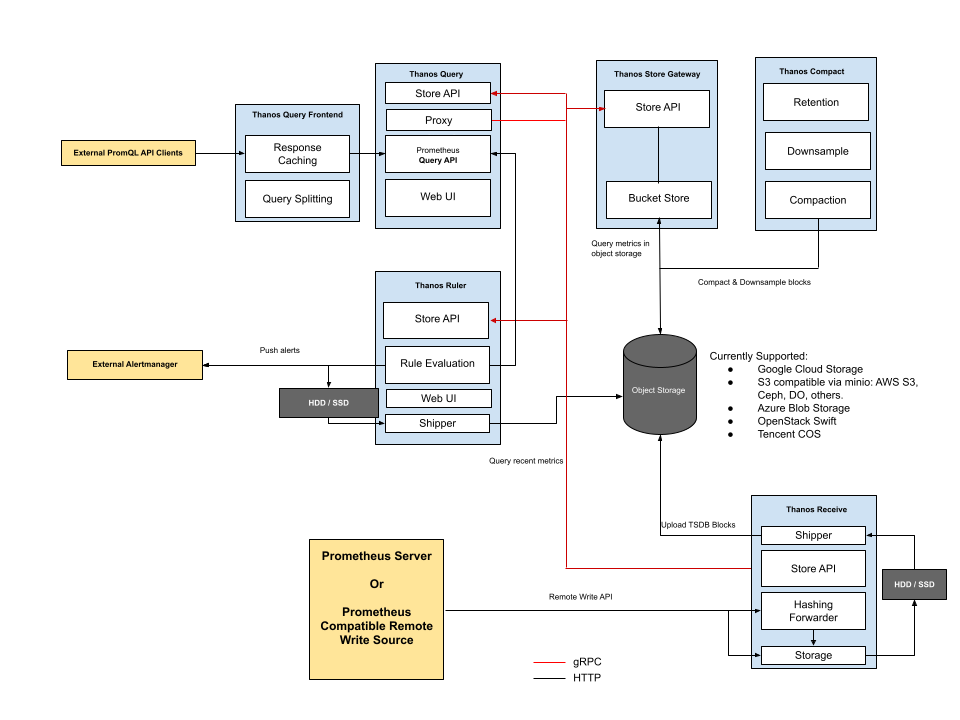

# Quick Tutorial

Check out the free, in-browser interactive tutorial [Killercoda Thanos course](https://killercoda.com/thanos). We will be progressively updating our Killercoda course with more scenarios.

On top of this, find our quick tutorial below.

## Prometheus

Thanos is based on Prometheus. With Thanos, Prometheus always remains as an integral foundation for collecting metrics and alerting using local data.

Thanos bases itself on vanilla [Prometheus](https://prometheus.io/). We plan to support *all* Prometheus versions beyond v2.2.1.

NOTE: It is highly recommended to use Prometheus v2.13.0+ due to its remote read improvements.

Always make sure to run Prometheus as recommended by the Prometheus team:

* Put Prometheus in the same failure domain. This means in the same network and in the same geographic location as the monitored services.
* Use a persistent disk to persist data across Prometheus restarts.
* Use local compaction for longer retentions.
* Do not change the minimum TSDB block durations.
* Do not scale out Prometheus unless necessary. A single Prometheus instance is already efficient.

We recommend using Thanos when you need to scale out your Prometheus instance.

## Components

Following the [KISS](https://en.wikipedia.org/wiki/KISS_principle) and Unix philosophies, Thanos is comprised of a set of components where each fulfills a specific role.

* Sidecar: connects to Prometheus, reads its data for query and/or uploads it to cloud storage.
* Store Gateway: serves metrics inside of a cloud storage bucket.
* Compactor: compacts, downsamples and applies retention on the data stored in the cloud storage bucket.
* Receiver: receives data from Prometheus's remote write write-ahead log, exposes it, and/or uploads it to cloud storage.
* Ruler/Rule: evaluates recording and alerting rules against data in Thanos for exposition and/or upload.
* Querier/Query: implements Prometheus's v1 API to aggregate data from the underlying components.
* Query Frontend: implements Prometheus's v1 API to proxy it to Querier while caching the response and optionally splitting it by queries per day.

Deployment with Thanos Sidecar for Kubernetes:

<!---
Source file to copy and edit: https://docs.google.com/drawings/d/1AiMc1qAjASMbtqL6PNs0r9-ynGoZ9LIAtf0b9PjILxw/edit?usp=sharing
-->

Deployment via Receive in order to scale out or integrate with other remote write-compatible sources:

<!---
Source file to copy and edit: https://docs.google.com/drawings/d/1iimTbcicKXqz0FYtSfz04JmmVFLVO9BjAjEzBm5538w/edit?usp=sharing
-->

### Sidecar

Thanos integrates with existing Prometheus servers as a [sidecar process](https://docs.microsoft.com/en-us/azure/architecture/patterns/sidecar#solution), which runs on the same machine or in the same pod as the Prometheus server.

The purpose of Thanos Sidecar is to back up Prometheus's data into an object storage bucket, and give other Thanos components access to the Prometheus metrics via a gRPC API.

Sidecar makes use of Prometheus's `reload` endpoint. Make sure it's enabled with the flag `--web.enable-lifecycle`.

[Sidecar component documentation](components/sidecar.md)

### External Storage

The following configures Sidecar to write Prometheus's data into a configured object storage bucket:

```bash
# --tsdb.path: TSDB data directory of Prometheus
# --prometheus.url: be sure that Sidecar can use this URL!
# --objstore.config-file: storage configuration for uploading data
thanos sidecar \
    --tsdb.path /var/prometheus \
    --prometheus.url "http://localhost:9090" \
    --objstore.config-file bucket_config.yaml
```

The exact format of the YAML file depends on the provider you choose.
Configuration examples and an up-to-date list of the storage types that Thanos supports are available [here](storage.md).

Rolling this out has little to no impact on the running Prometheus instance. This allows you to ensure you are backing up your data while figuring out the other pieces of Thanos.

If you are not interested in backing up any data, the `--objstore.config-file` flag can simply be omitted.

* *[Example Kubernetes manifests using Prometheus operator](https://github.com/coreos/prometheus-operator/tree/master/example/thanos)*
* *[Example Deploying Sidecar using official Prometheus Helm Chart](../tutorials/kubernetes-helm/README.md)*
* *[Details & Config for other object stores](storage.md)*

### Store API

The Sidecar component implements and exposes a gRPC *[Store API](https://github.com/thanos-io/thanos/blob/main/pkg/store/storepb/rpc.proto#L27)*. This implementation allows you to query the metric data stored in Prometheus.

Let's extend the Sidecar from the previous section to connect to a Prometheus server, and expose the Store API:

```bash
# --objstore.config-file: bucket config file to send data to
# --prometheus.url: location of the Prometheus HTTP server
# --http-address: HTTP endpoint for collecting metrics on Sidecar
# --grpc-address: gRPC endpoint for Store API
thanos sidecar \
    --tsdb.path /var/prometheus \
    --objstore.config-file bucket_config.yaml \
    --prometheus.url http://localhost:9090 \
    --http-address 0.0.0.0:19191 \
    --grpc-address 0.0.0.0:19090
```

* *[Example Kubernetes manifests using Prometheus operator](https://github.com/coreos/prometheus-operator/tree/master/example/thanos)*

### Uploading Old Metrics

When Sidecar is run with the `--shipper.upload-compacted` flag, it will sync all older existing blocks from Prometheus local storage on startup.

NOTE: This assumes you have never run the Sidecar with block uploading against this bucket. Otherwise, you must manually remove overlapping blocks from the bucket.
Those mitigations will be suggested in the sidecar verification process.

### External Labels

Prometheus allows the configuration of "external labels" of a given Prometheus instance. These are meant to globally identify the role of that instance. As Thanos aims to aggregate data across all instances, providing a consistent set of external labels becomes crucial!

Every Prometheus instance must have a globally unique set of identifying labels. For example, in Prometheus's configuration file:

```yaml
global:
  external_labels:
    region: eu-west
    monitor: infrastructure
    replica: A
```

## Querier/Query

Now that we have set up Sidecar for one or more Prometheus instances, we want to use Thanos's global [Query Layer](components/query.md) to evaluate PromQL queries against all instances at once.

The Querier component is stateless and horizontally scalable, and can be deployed with any number of replicas. Once connected to Thanos Sidecar, it automatically detects which Prometheus servers need to be contacted for a given PromQL query.

Thanos Querier also implements Prometheus's official HTTP API and can thus be used with external tools such as Grafana. It also serves a derivative of Prometheus's UI for ad-hoc querying and checking the status of the Thanos stores.

Below, we will set up a Thanos Querier to connect to our Sidecars, and expose its HTTP UI:

```bash
# --http-address: HTTP endpoint for Thanos Querier UI
# --endpoint: static gRPC Store API address for the query node to query;
#             repeatable, and supports DNS A & SRV records
thanos query \
    --http-address 0.0.0.0:19192 \
    --endpoint 1.2.3.4:19090 \
    --endpoint 1.2.3.5:19090 \
    --endpoint dnssrv+_grpc._tcp.thanos-store.monitoring.svc
```

Go to the configured HTTP address, which should now show a UI similar to that of Prometheus. You can now query across all Prometheus instances within the cluster.
You can also check out the Stores page, which shows all of your stores.

[Query documentation](components/query.md)

### Deduplicating Data from Prometheus HA Pairs

The Querier component is also capable of deduplicating data collected from Prometheus HA pairs. This requires configuring Prometheus's `global.external_labels` configuration block to identify the role of a given Prometheus instance.

A typical configuration uses the label name "replica" with whatever value you choose. For example, you might set up the following in Prometheus's configuration file:

```yaml
global:
  external_labels:
    region: eu-west
    monitor: infrastructure
    replica: A
# ...
```

In a Kubernetes stateful deployment, the replica label can also be the pod name.

Ensure your Prometheus instances have been reloaded with the configuration you defined above. Then, in Thanos Querier, we will define `replica` as the label we want to enable deduplication on:

```bash
# --query.replica-label: replica label for deduplication;
#                        repeatable to support multiple replica labels
thanos query \
    --http-address 0.0.0.0:19192 \
    --endpoint 1.2.3.4:19090 \
    --endpoint 1.2.3.5:19090 \
    --query.replica-label replica \
    --query.replica-label replicaX
```

Go to the configured HTTP address, and you should now be able to query across all Prometheus instances and receive deduplicated data.

* *[Example Kubernetes manifest](https://github.com/thanos-io/kube-thanos/blob/master/manifests/thanos-query-deployment.yaml)*

### Communication Between Components

The only required communication between nodes is for a Thanos Querier to be able to reach the gRPC Store APIs that you provide. Thanos Querier periodically calls the info endpoint to collect up-to-date metadata as well as check the health of a given Store API. That metadata includes the information about time windows and external labels for each node.
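
To make the role of that metadata concrete, here is a minimal Python sketch of how a querier could use time windows and external labels to decide which Store APIs are worth contacting for a query. It is an illustration under stated assumptions, not Thanos's actual implementation: `StoreInfo`, `select_stores`, the millisecond timestamps, the equality-only matchers, and the third address `1.2.3.6:19090` are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class StoreInfo:
    """Metadata a querier might cache per Store API (hypothetical shape)."""
    address: str
    min_time: int  # oldest timestamp served, in milliseconds
    max_time: int  # newest timestamp served, in milliseconds
    external_labels: Dict[str, str] = field(default_factory=dict)


def select_stores(
    stores: List[StoreInfo],
    query_start: int,
    query_end: int,
    label_matchers: Dict[str, str],
) -> List[StoreInfo]:
    """Keep stores whose time window overlaps the query range and whose
    external labels do not contradict the equality matchers."""
    selected = []
    for store in stores:
        overlaps = store.min_time <= query_end and query_start <= store.max_time
        # A label the store does not declare cannot rule the store out,
        # because it may still be present on individual series.
        labels_ok = all(
            store.external_labels.get(name, value) == value
            for name, value in label_matchers.items()
        )
        if overlaps and labels_ok:
            selected.append(store)
    return selected


# Hypothetical metadata for three Store APIs.
stores = [
    StoreInfo("1.2.3.4:19090", 1_000, 2_000, {"region": "eu-west", "replica": "A"}),
    StoreInfo("1.2.3.5:19090", 1_000, 2_000, {"region": "us-east", "replica": "A"}),
    StoreInfo("1.2.3.6:19090", 0, 500, {"region": "eu-west", "replica": "B"}),
]
selected = select_stores(stores, 1_500, 1_800, {"region": "eu-west"})
print([s.address for s in selected])  # prints ['1.2.3.4:19090']
```

In this sketch the second store is skipped because its `region` label contradicts the matcher, and the third because its time window ends before the query range starts.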

There are various ways to tell Thanos Querier about the Store APIs it should query data from. The simplest way is to use a static list of well-known addresses to query. These are repeatable, so you can add as many endpoints as you need. You can also put a DNS domain prefixed by `dns+` or `dnssrv+` to have a Thanos Querier do an `A` or `SRV` lookup to get all the required IPs it should communicate with.

```bash
# --http-address: endpoint for Thanos Querier UI
# --grpc-address: gRPC endpoint for Store API
# --endpoint: static gRPC Store API address for the query node to query; repeatable.
#             With the dns+ prefix, a DNS lookup returns all registered IPs as separate Store APIs.
thanos query \
    --http-address 0.0.0.0:19192 \
    --grpc-address 0.0.0.0:19092 \
    --endpoint 1.2.3.4:19090 \
    --endpoint 1.2.3.5:19090 \
    --endpoint dns+rest.thanos.peers:19092
```

Read more details [here](service-discovery.md).

* *[Example Kubernetes manifests using Prometheus operator](https://github.com/coreos/prometheus-operator/tree/master/example/thanos)*

## Store Gateway

As Thanos Sidecar backs up data into the object storage bucket of your choice, you can decrease Prometheus's retention in order to store less data locally. However, we need a way to query all that historical data again. Store Gateway does just that, by implementing the same gRPC data API as Sidecar, but backing it with data it can find in your object storage bucket. Just like sidecars and query nodes, Store Gateway exposes a Store API and needs to be discovered by Thanos Querier.

```bash
# --data-dir: disk space for local caches
# --objstore.config-file: bucket to fetch data from
# --http-address: HTTP endpoint for collecting metrics on the Store Gateway
# --grpc-address: gRPC endpoint for Store API
thanos store \
    --data-dir /var/thanos/store \
    --objstore.config-file bucket_config.yaml \
    --http-address 0.0.0.0:19191 \
    --grpc-address 0.0.0.0:19090
```

Store Gateway uses a small amount of disk space for caching basic information about data in the object storage bucket. This rarely exceeds a few gigabytes and is used to improve restart times. It is useful but not required to preserve it across restarts.

* *[Example Kubernetes manifest](https://github.com/thanos-io/kube-thanos/blob/master/manifests/thanos-store-statefulSet.yaml)*

[Store Gateway documentation](components/store.md)

## Compactor

A local Prometheus installation periodically compacts older data to improve query efficiency. Since Sidecar backs up data into an object storage bucket as soon as possible, we need a way to apply the same process to data in the bucket.

Thanos Compactor simply scans the object storage bucket and performs compaction where required. At the same time, it is responsible for creating downsampled copies of data in order to speed up queries.

```bash
# --data-dir: temporary workspace for data processing
# --objstore.config-file: bucket where compacting will be performed
# --http-address: HTTP endpoint for collecting metrics on the compactor
thanos compact \
    --data-dir /var/thanos/compact \
    --objstore.config-file bucket_config.yaml \
    --http-address 0.0.0.0:19191
```

Compactor is not in the critical path of querying or data backup. It can either be run as a periodic batch job or be left running to always compact data as soon as possible. It is recommended to provide 100-300GB of local disk space for data processing.
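
As a rough picture of why downsampled copies speed up queries, the following Python sketch groups raw samples into fixed windows and keeps only a few aggregates per window. It is a toy illustration of the general idea: the `downsample` helper, the choice of aggregates, the 5-minute window, and the sample data are assumptions made for this example and do not describe Thanos's block format or algorithm.

```python
from collections import defaultdict


def downsample(samples, window_ms):
    """Group (timestamp_ms, value) samples into fixed windows and keep
    per-window aggregates instead of every raw sample."""
    buckets = defaultdict(list)
    for timestamp_ms, value in samples:
        # Align each sample to the start of the window it falls into.
        window_start = timestamp_ms - (timestamp_ms % window_ms)
        buckets[window_start].append(value)
    return {
        start: {
            "count": len(values),
            "sum": sum(values),
            "min": min(values),
            "max": max(values),
        }
        for start, values in sorted(buckets.items())
    }


# Hypothetical raw samples as (timestamp in ms, value) pairs.
raw = [(0, 1.0), (15_000, 3.0), (30_000, 2.0), (300_000, 5.0)]
downsampled = downsample(raw, window_ms=300_000)  # 5-minute windows
print(downsampled[0])  # prints {'count': 3, 'sum': 6.0, 'min': 1.0, 'max': 3.0}
```

A query over a long time range can then read one aggregate record per window rather than every raw sample, at the cost of resolution.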

*NOTE: Compactor must be run as a **singleton** and must not run when manually modifying data in the bucket.*

* *[Example Kubernetes manifest](https://github.com/thanos-io/kube-thanos/blob/master/examples/all/manifests/thanos-compact-statefulSet.yaml)*

[Compactor documentation](components/compact.md)

## Ruler/Rule

In case Prometheus running with Thanos Sidecar does not have enough retention, or if you want to have alerts or recording rules that require a global view, Thanos has just the component for that: the [Ruler](components/rule.md), which does rule and alert evaluation on top of a given Thanos Querier.

[Rule documentation](components/rule.md)