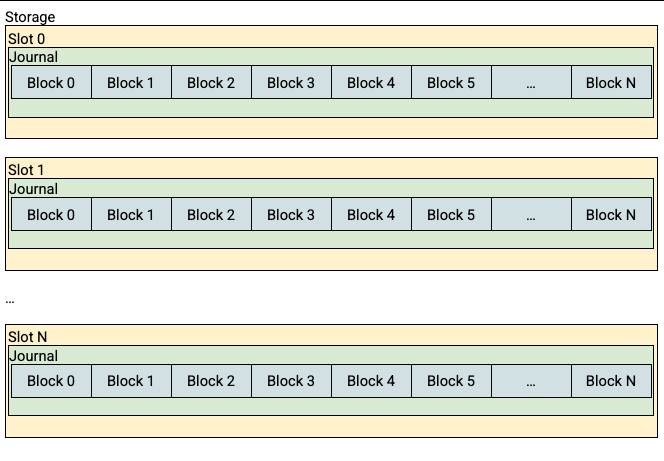

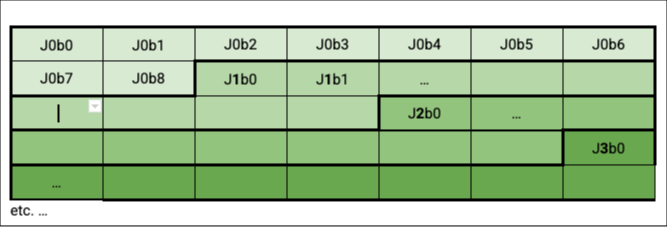

# Storage

This directory contains a work-in-progress storage system for use with
the ArmoredWitness.
It should be considered experimental and subject to change!

Some details on the requirements and design of the storage system are below.

### Requirements

* Allow the witness unikernel to persist small amounts of data: think multiple independent records of up to a few MB each.
* Use the eMMC as storage.
* Avoid artificially shortening the life of the storage hardware (flash).
* Persisted state should be resilient to corruption from power failure/reboot during writes.

#### Nice-to-haves

* Be somewhat reusable for other ArmoredWitness use cases we may have.
  * This probably means being able to store different types of data in specified locations.

#### Non-requirements

* While we're ultimately limited by the performance of the storage hardware, it's not a priority to achieve the lowest possible latency or highest possible throughput for writes.
* Integration with Go's `os.Open()` style APIs (this _would_ be great, but would require upstream work in TamaGo so is explicitly out of scope for now).

#### Out-of-scope

Some things are explicitly out of scope for this design:

* Protecting against an attacker modifying the data on the storage in some out-of-band fashion.
* Hardware failure resulting in previously readable data becoming unreadable/corrupted.
* Supporting easy discovery / enumeration of data on disk, or preventing duplicate data from being written. Higher level code should be responsible for understanding what data should be in which slots.

### Design

The design is a relatively simple storage API which offers a fixed number of "storage slots", to each of which a representation of state can be written.
Slot storage will be allocated a range of the underlying storage, starting at a known byte offset and with a known length. This slot storage is also preconfigured with the number of slots that it should allocate (or alternatively/equivalently, the number of bytes to be reserved per-slot).

Each slot is backed by a fixed size "journal" stored across _N_ eMMC blocks.

Logically it can be thought of as shown in the first image at the top of this document.

Physically it may look like the second image on the MMC block device itself (9 blocks per journal is just an example).

#### API

The API tries to be as simple as possible to use and implement for now. For example, since we're only intending this to be used for O(MB) of data, it's probably fine to pass this to/from the storage layer as a straight `[]byte` slice.

However, if necessary, we could try to make the API more like Go's `io` framework, with `Reader` and `Writer`.

```go
// Partition describes the extent and layout of a single contiguous region
// of underlying block storage.
type Partition struct {}

// Open opens the specified slot, returns an error if the slot is out of bounds.
func (p *Partition) Open(slot int) (*Slot, error)

// Slot represents the current data in a slot.
type Slot struct {}

// Read returns the last data successfully written to the slot, along with
// a token which can be used with CheckAndWrite.
func (s *Slot) Read() ([]byte, uint32, error)

// Write stores the provided data to the slot.
// Upon successful completion, this data will be returned by future calls
// to Read until another successful Write call is made.
// If the call to Write fails, future calls to Read will return the
// previous successfully written data, if any.
func (s *Slot) Write(p []byte) error

// CheckAndWrite behaves like Write, with the exception that it will
// immediately return an error if the slot has been successfully written
// to since the Read call which produced the passed-in token.
func (s *Slot) CheckAndWrite(token uint32, p []byte) error
```

#### Internal structures

Data stored in the slot is represented by an _"update record"_ written to the journal.

The update record contains:

Field Name   | Type                          | Notes
-------------|-------------------------------|------------------------------------
`Magic`      | `[4]byte{'T', 'F', 'J', '0'}` | Magic record header, v0
`Revision`   | `uint32`                      | Incremented with each write to slot
`DataLen`    | `uint64`                      | `len(RecordData)`
`Checksum`   | `[32]byte{}`                  | `SHA256` of `RecordData`
`RecordData` | `[DataLen]byte{}`             | Application data

An update record is considered _valid_ if its:

* `Magic` is correct
* `Checksum` is correct for the data in `RecordData[:DataLen]`

The first time `Open` is called for a given slot, the slot's journal will be scanned from the beginning to look for the valid update record with the largest `Revision`. The `RecordData` from this record is the data associated with the slot. It could potentially be cached in RAM at this point if it's small enough.

If no such record exists, then the slot has not yet been successfully written to and there is no data associated with the slot.
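The record layout, the validity check, and the scan for the newest record can be sketched roughly as below. This is only an illustration under stated assumptions, not the applet's actual code: the 512-byte block size, the big-endian field encoding, and the helper names `marshal`, `parse` and `latest` are all hypothetical and do not come from this README.

```go
package main

import (
	"bytes"
	"crypto/sha256"
	"encoding/binary"
	"fmt"
)

const (
	blockSize  = 512            // assumed block size, not specified above
	headerSize = 4 + 4 + 8 + 32 // Magic + Revision + DataLen + Checksum
)

var magic = []byte{'T', 'F', 'J', '0'}

// marshal encodes an update record: header fields followed by RecordData.
// Big-endian encoding is an assumption made for this sketch.
func marshal(rev uint32, data []byte) []byte {
	buf := make([]byte, headerSize+len(data))
	copy(buf[0:4], magic)
	binary.BigEndian.PutUint32(buf[4:8], rev)
	binary.BigEndian.PutUint64(buf[8:16], uint64(len(data)))
	sum := sha256.Sum256(data)
	copy(buf[16:48], sum[:])
	copy(buf[headerSize:], data)
	return buf
}

// parse decodes the record at the start of b, reporting ok=false unless
// the Magic matches and the Checksum is correct for RecordData[:DataLen].
func parse(b []byte) (rev uint32, data []byte, ok bool) {
	if len(b) < headerSize || !bytes.Equal(b[0:4], magic) {
		return 0, nil, false
	}
	rev = binary.BigEndian.Uint32(b[4:8])
	n := binary.BigEndian.Uint64(b[8:16])
	if n > uint64(len(b)-headerSize) {
		return 0, nil, false
	}
	data = b[headerSize : headerSize+int(n)]
	sum := sha256.Sum256(data)
	if !bytes.Equal(sum[:], b[16:48]) {
		return 0, nil, false
	}
	return rev, data, true
}

// latest scans a journal from its first block. Until a valid record is
// found it advances one block at a time; after that it stops at the first
// invalid record or the first record with a lower Revision.
func latest(journal []byte) (rev uint32, data []byte, found bool) {
	for off := 0; off+headerSize <= len(journal); {
		r, d, ok := parse(journal[off:])
		switch {
		case !ok && !found:
			off += blockSize
			continue
		case !ok || r < rev:
			return rev, data, found
		}
		rev, data, found = r, d, true
		// Records start on block boundaries, so skip to the block
		// following the extent of this record.
		off += (headerSize + len(d) + blockSize - 1) / blockSize * blockSize
	}
	return rev, data, found
}

func main() {
	journal := make([]byte, 10*blockSize)
	copy(journal, marshal(1, []byte("one")))
	copy(journal[blockSize:], marshal(2, []byte("two")))
	rev, data, ok := latest(journal)
	fmt.Println(rev, string(data), ok)
}
```

Here the journal is an in-memory byte slice standing in for the eMMC blocks; a real implementation would read blocks from the device instead.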
An update to the slot causes an update record to be written to the journal starting at either:

* The first byte of the block following the extent of the "current" update record (i.e. every block contains header/data for at most 1 record), if there is sufficient space remaining in the journal to accommodate the entire update record without wrapping around to the first blocks, or
* The first byte of the first block in the journal, if there is no current record or the update record will not fit in the remaining journal space.

Following a successful write to storage, the metadata associated with the slot (i.e. `Revision`, current header location, location for next write, etc.) is updated.

The diagram below shows a sequence of several update record writes of varying data sizes. These writes are taking place in a single journal, which you'll remember comprises several blocks.

The grey boxes represent blocks containing old/previous data; green represents blocks holding the latest successful write.

The numbers indicate a header with a particular `Revision`, blocks with `…` contain follow-on `RecordData`, and an x indicates an invalid record header:

```
⬛⬛⬛⬛⬛⬛⬛⬛⬛⬛ - Initial state, nothing written
🟩🟩🟩⬛⬛⬛⬛⬛⬛⬛ - First record (rev=1) has been successfully stored
⬜⬜⬜🟩🟩⬛⬛⬛⬛⬛ - Next record (rev=2) is stored with the next available block
⬜⬜⬜⬜⬜🟩🟩🟩⬛⬛ - Same again.
🟩🟩🟩⬜⬜⬜⬜⬜⬛⬛ - The 4th record will not fit in the remaining space, so is written starting at the zeroth block, overwriting old revision(s) - note it does not wrap around.
⬜⬜⬜🟩🟩🟩⬜⬜⬛⬛ - Subsequent revisions continue in this vein.
```

Since record revisions should always be increasing as we scan left-to-right through the slot storage, we can assume we've found the newest update record when we've either reached the end of the storage space or, after having read at least 1 _good_ update record, we find a record with a lower `Revision` than the previous record, or one with an invalid `Magic` or `Checksum`.

#### Failed/interrupted writes

For a failed write to the storage to have any permanent effect at all, it must have succeeded in writing at least the 1st block of the update record, and so the checksum stored in the header will not match the incompletely written record data. This allows the failure to be detected with high probability when reading back.

The maximum permitted `RecordData` size is restricted to `(TotalSlotSize/3) - len(Header)`. This prevents a failed write from obliterating all or part of the previous successful write, so unless the failed write is the first attempt to write to the slot, there will always be a valid previous record available (modulo storage fabric failure).

Adding records with failed writes:

```
⬛⬛⬛⬛⬛⬛⬛⬛⬛ - Initial state, nothing written
🟩🟩⬜⬜⬜⬜⬜⬜⬛ - First record (rev=1) stored successfully
⬜⬜🟩🟩🟩⬜⬜⬜⬛ - Second write (rev=2) is successful too.
⬜⬜⬜⬜⬜🟥🟥🟥⬛ - Third write fails
⬜⬜⬜⬜⬜🟩🟩🟩⬛ - Application retries, record (rev=3) is written successfully this time.
🟩🟩⬜⬜⬜⬜⬜⬜⬛ - Application successfully retries and writes (rev=4)
⬜⬜🟩🟩🟩⬜⬜⬜⬛ - and (rev=5)
⬜⬜⬜⬜⬜🟩🟩🟩⬛ - and (rev=6), too
🟥🟥🟥⬜⬜🟩🟩🟩⬛ - Attempt to write (rev=7), located at the zeroth block, fails, corrupting (rev=4) and (rev=5), but rev=6, the current good record, is intact.
```

#### Other properties

This journal-style approach affords a couple of additional nice properties given the environment and use case:

1. The API can provide _check-and-set_ semantics: _"Write an update record with revision X, iff the current record is revision X-1"_.
2. A very basic notion of "wear levelling" is provided since writes are spread out across most blocks. Note that this is less important here as the ArmoredWitness has eMMC storage, which mandates that the integrated controller performs wear levelling transparently.
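
The placement rule for new update records, the `RecordData` size limit, and the check-and-set property described above can be sketched together as follows. This is a hedged illustration rather than the real implementation: the `slotState` type, its fields, the error values, the 512-byte block size, and the idea that the token returned by `Read` is simply the current `Revision` are all assumptions made for the example.

```go
package main

import (
	"errors"
	"fmt"
)

const (
	blockSize  = 512 // assumed block size
	headerSize = 48  // Magic(4) + Revision(4) + DataLen(8) + Checksum(32)
)

var (
	errStale    = errors.New("slot has been written since the token was read")
	errTooLarge = errors.New("record data exceeds maximum permitted size")
)

// blocksFor returns how many whole blocks a record with n data bytes occupies.
func blocksFor(n int) int { return (headerSize + n + blockSize - 1) / blockSize }

// maxDataLen applies the limit (TotalSlotSize/3) - len(Header).
func maxDataLen(journalBlocks int) int { return journalBlocks*blockSize/3 - headerSize }

// slotState is hypothetical in-memory metadata for one slot.
type slotState struct {
	journalBlocks int
	revision      uint32 // 0 means the slot has never been written
	nextBlock     int    // block following the extent of the current record
}

// place returns the block at which a record with n data bytes should start:
// the block after the current record if the whole record fits without
// wrapping, otherwise the first block of the journal.
func (s *slotState) place(n int) (int, error) {
	if n > maxDataLen(s.journalBlocks) {
		return 0, errTooLarge
	}
	if s.revision == 0 || s.nextBlock+blocksFor(n) > s.journalBlocks {
		return 0, nil
	}
	return s.nextBlock, nil
}

// checkAndWrite refuses the write unless token matches the current revision.
// It returns the start block chosen for the new record.
func (s *slotState) checkAndWrite(token uint32, n int) (int, error) {
	if token != s.revision {
		return 0, errStale
	}
	start, err := s.place(n)
	if err != nil {
		return 0, err
	}
	// A real implementation would write the record to storage here and
	// update the metadata only once that write has succeeded.
	s.revision++
	s.nextBlock = start + blocksFor(n)
	return start, nil
}

func main() {
	s := &slotState{journalBlocks: 10}
	for _, n := range []int{1000, 500, 1000, 1000, 1000} {
		start, err := s.checkAndWrite(s.revision, n)
		fmt.Println(start, err)
	}
}
```

With a 10-block journal and records occupying 3, 2, 3, 3 and 3 blocks, the chosen start blocks follow the first diagram above: the fourth record no longer fits after block 8 and so starts again at the zeroth block.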