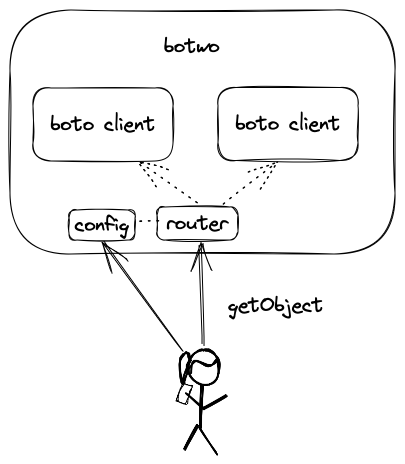

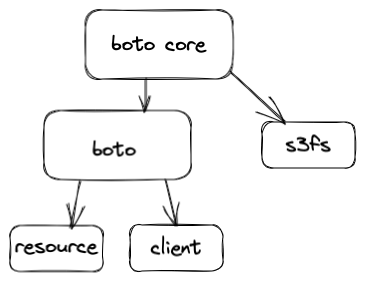

# Design: Co-Existing with Boto

## Problem Description

Existing code bases where paths are scattered throughout the code (which is rather common for DE/DS code) have a hard time incorporating the lakeFS S3 gateway, for several reasons:
1. Clients (namely, boto3 and pandas) don't support multiple S3 endpoints with differing credentials. This makes it hard to migrate only a subset of the data to lakeFS, because the client, once initialized, can talk to either lakeFS or S3 but not both.
2. Once data is migrated from S3 to lakeFS, even if the repo has the same name as the previous bucket, it's still not a drop-in replacement, because in lakeFS you also need to specify the version ref (branch/commit/tag) you're using, as a prefix.

Solving 1 is hard because it requires a big infrastructure change: instead of using a single initialized client (sometimes a default or global one), multiple clients need to be introduced (one for S3, one for lakeFS), with custom logic for when to use which one.
Solving 2 is hard because it involves changing many paths scattered throughout the code base: some are dynamically generated by the code, some come from env vars or configuration files, and it's not always easy to ensure coverage.

## Goals
* Run two boto clients side by side with minimal changes to the user's existing code
* Users should make the minimal set of code changes needed to integrate lakeFS

## Non Goals
* Provide new features that weren't previously possible through lakeFS

## Value Propositions
When a user with existing Python code that uses a boto3 client to access S3 wants to move only part of their buckets/prefixes to lakeFS, botwo allows using lakeFS and S3 together with minimal code changes.

## Proposed Design

The botwo package provides a boto3-like client backed by two underlying boto3 clients.
It handles several things for the user:
* Holds two boto clients and routes requests between them according to the bucket/prefix configuration.
  For example: the user can route all S3 requests for `Bucket` to lakeFS under `Bucket/main/` (Bucket = repository name).
* Maps 1:1 with the boto AWS service API. All botwo client AWS functions are generated on the fly from the boto3 client AWS functions.
* Gives special treatment to functions that operate on multiple buckets, like `upload_part_copy`. If more such functions are added to S3 in the future, they will need to be implemented as well.
* Other functionality, such as the event system, is implemented by delegating to one of its clients (the default one).

## Alternatives Considered

### Route the requests to lakefs endpoint by the prefix
```
import boto3

s3 = boto3.client('s3')
if bucket == "bucket_a" and prefix == "my_path":
    # Intended: repoint the existing client at lakeFS
    s3.endpoint_url = 'https://lakefs.example.com'
```
Does not work because once you initialize a boto client with parameters, you can't change them at run time.

### Method Overriding

Monkey patching / overriding base class functions in boto3 with inheritance.
Does not work because boto3 functions are generated on the fly from service definitions, so there is no static `put_object` function to inherit from.

### Creating custom resource-class using the events system

When extending a resource class using the [events system][Extensibility guide], there is no inheritance relationship between the base class and the custom class. The result is a base class enriched with the functions and attributes of the custom class.
This only allows replacing the bucket and prefix names.
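
To illustrate the limitation noted above, here is a minimal sketch of what an event handler can do: rewrite the `Bucket` and `Key` parameters of a request before it is sent. The helper name `make_rewriter` and the bucket names are hypothetical, and the handler is plain Python so it can be exercised without AWS access. With boto3 it would presumably be registered through `client.meta.events.register` on an event such as `before-parameter-build.s3.GetObject`; that registration is shown only as a comment.

```python
from typing import Any, Callable, Dict


def make_rewriter(bucket: str, mapped_bucket: str, key_prefix: str) -> Callable[..., None]:
    """Build a handler that rewrites Bucket/Key in place (hypothetical helper)."""

    def rewrite(params: Dict[str, Any], **kwargs: Any) -> None:
        # The call's parameters arrive as `params`; any other event
        # arguments arrive as keyword arguments and are ignored here.
        if params.get("Bucket") != bucket:
            return
        params["Bucket"] = mapped_bucket
        if "Key" in params:
            params["Key"] = key_prefix + params["Key"]

    return rewrite


handler = make_rewriter("example-old-bucket", "example-repo", "main/")

# Registration on a real client (not executed here; requires boto3):
#   s3 = boto3.client("s3")
#   s3.meta.events.register("before-parameter-build.s3.GetObject", handler)

params = {"Bucket": "example-old-bucket", "Key": "test/object.txt"}
handler(params)
print(params)
```

The handler changes names only. The endpoint and credentials remain those of the client it is registered on, which is why this approach alone cannot route between S3 and lakeFS.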

### Route all the requests to lakefs server and implement the bucket configuration there
This can put unnecessary load on the server, because requests like `put_object` could otherwise go directly to S3.

### Wrap boto core fs
Possible but complex, because we would need to implement the botocore interface, which has a lot of functionality.

## Usage

Before introducing the new client, the user interacts only with S3:
```
import boto3

s3 = boto3.client('s3')

s3.get_object(Bucket="test-bucket", Key="test/object.txt") # in a lot of places
```

With the new client, a change is needed in every place in the code where a boto S3 client is initialized:

```
import boto3
import botwo

client1 = boto3.client('s3')
client2 = boto3.client('s3', endpoint_url='https://lakefs.example.com')

mapping = [
    {
        "bucket_name": "bucket-a",
        "client": "lakefs",
        "key_prefix": "dev/"
    },
    {
        "bucket_name": "example-old-bucket",
        "mapped_bucket_name": "example-repo",
        "client": "lakefs",
        "key_prefix": "main/"
    },
    {
        "bucket_name": "*",  # default
        "client": "s3",
    }
]

s3 = botwo.client({"s3": client1, "lakefs": client2}, mapping)

s3.get_object(Bucket="test-bucket", Key="test/object.txt") # stays the same
```

Every time an S3 function is called, the client goes over the mapping list in order and stops at the first match.
If there is no match, the request goes to the default client (the user must provide a default client).

## Open Questions

* There are two different abstractions within the boto3 SDK for making AWS service requests: client and resource.
  * Client - provides low-level AWS service access, maps 1:1 with the AWS service API.
  * Resource - provides high-level, object-oriented API. It exposes sub-resources and collections of AWS resources and has actions (operations on resources).
  ```
  import boto3

  s3 = boto3.resource('s3')
  obj = s3.Object(bucket_name='boto3', key='test.py')
  ```
  This proposal provides a solution only for the boto client. It can be extended to resource as well, per user requests.

* Pandas: there are two ways to access S3 - Boto and the s3fs-backed pandas API (S3Fs is a Pythonic file interface to S3, built on top of botocore). The s3fs path is also not addressed by this proposal.


[Extensibility guide]: https://boto3.amazonaws.com/v1/documentation/api/latest/guide/events.html
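
The matching rule described under Usage (walk the mapping in order, stop at the first match, otherwise fall back to the default client) can be sketched as follows. This is not the botwo implementation: `resolve`, `RoutingClient`, and `RecordingClient` are hypothetical names, the stub clients stand in for real boto3 clients, and the sketch assumes that `key_prefix` is prepended to the key and that the `"*"` rule acts as the default. The source does not spell out either point. Multi-bucket operations such as `upload_part_copy` are not handled here.

```python
from typing import Any, Dict, List, Optional, Tuple

Rule = Dict[str, str]


def resolve(mapping: List[Rule], bucket: Optional[str],
            key: Optional[str]) -> Tuple[str, Optional[str], Optional[str]]:
    """Return (client name, bucket, key) from the first matching rule."""
    for rule in mapping:
        if rule["bucket_name"] in ("*", bucket):
            new_bucket = rule.get("mapped_bucket_name", bucket)
            # Assumption: key_prefix is prepended to the key (e.g. a lakeFS ref).
            new_key = rule.get("key_prefix", "") + key if key is not None else None
            return rule["client"], new_bucket, new_key
    raise LookupError("no rule matched and no default ('*') rule was provided")


class RecordingClient:
    """Stand-in for a boto3 client: echoes calls instead of sending them."""

    def __init__(self, name: str) -> None:
        self.name = name

    def __getattr__(self, op: str):
        def record(**kwargs: Any) -> Dict[str, Any]:
            return {"client": self.name, "op": op, **kwargs}
        return record


class RoutingClient:
    """Forwards any method call to the client chosen by the mapping."""

    def __init__(self, clients: Dict[str, Any], mapping: List[Rule]) -> None:
        self._clients = clients
        self._mapping = mapping

    def __getattr__(self, name: str):
        if name.startswith("_"):
            raise AttributeError(name)

        # Methods are produced on demand, mirroring how boto3 generates them.
        def call(**kwargs: Any) -> Any:
            client_name, bucket, key = resolve(
                self._mapping, kwargs.get("Bucket"), kwargs.get("Key"))
            if bucket is not None:
                kwargs["Bucket"] = bucket
            if key is not None:
                kwargs["Key"] = key
            return getattr(self._clients[client_name], name)(**kwargs)
        return call


mapping = [
    {"bucket_name": "example-old-bucket", "mapped_bucket_name": "example-repo",
     "client": "lakefs", "key_prefix": "main/"},
    {"bucket_name": "*", "client": "s3"},  # default
]
s3 = RoutingClient(
    {"s3": RecordingClient("s3"), "lakefs": RecordingClient("lakefs")}, mapping)

print(s3.get_object(Bucket="example-old-bucket", Key="test/object.txt"))
print(s3.get_object(Bucket="test-bucket", Key="test/object.txt"))
```

Using `__getattr__` keeps the wrapper independent of the list of S3 operations, at the cost of losing static method signatures.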