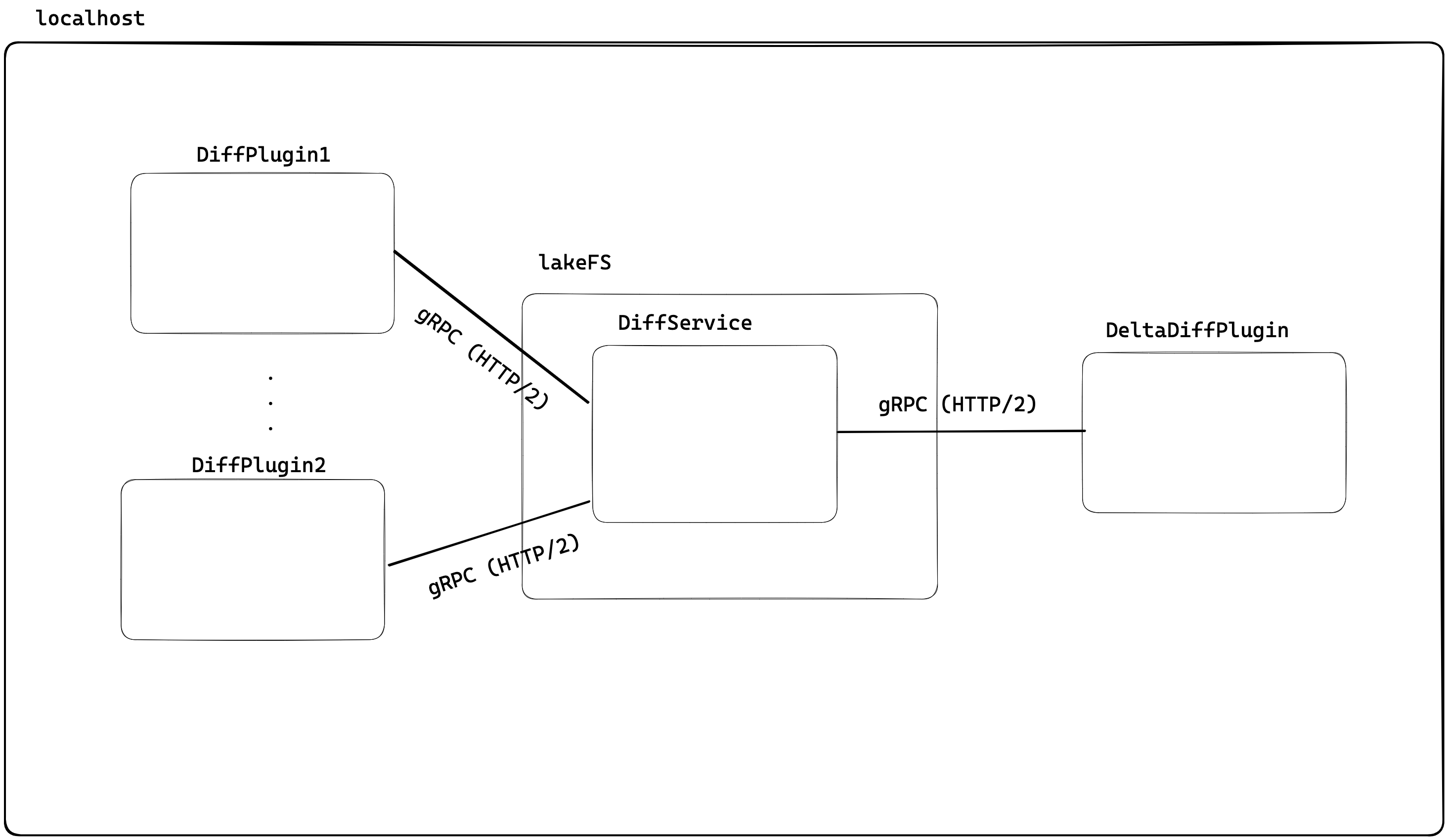

# Delta Table Differ

## User Story

Jenny the data engineer runs a new retention-and-column-remover ETL over multiple Delta Lake tables. To test the ETL before running on production, she uses lakeFS (obviously) and branches out to a dedicated branch `exp1`, and runs the ETL pointed at `exp1`.
The output is not what Jenny planned... The Delta Table is missing multiple columns and the number of rows just got bigger!
She would like to debug the ETL run. In other words, she wants to see the Delta table history changes applied in branch `exp1` since the commit that she branched from.

---

## Goals and scope

1. For the MVP, support only Delta table diff.
2. The system should be open for different data comparison implementations.
3. The diff itself will consist of metadata changes only (computed using the operation history of the two tables) and, optionally, the change in the number of rows.
4. The diff will be a "two-dots" diff (like `git log branch1..branch2`). Basically showing the log changes that happened in the topic branch and not in the base branch.
5. UI: GUI only.
6. Reduce user friction as much as possible.

---

## Non-Goals and off-scope

1. The Delta diff will be limited to the available Delta Log entries (the JSON files), which are, by default, retained
   for [30 days](https://docs.databricks.com/delta/history.html#configure-data-retention-for-time-travel).
2. Log compaction isn't supported.
3. Delta Table diff will only be supported if the table stayed where it was, e.g. if we have a Delta table on path
   `repo/branch1/delta/table` and it moved in a different branch to location `repo/branch2/delta/different/location/table`
   then it won't be "diffable".

---

## High-Level design

### Considered architectures

##### Diff as part of the lakeFS server

Implement the diff functionality within lakeFS.
Delta doesn't provide a Go SDK implementation, so we'll need to implement the Delta data model and interface ourselves, which is not something we aim to do.
In addition, this couples future diff implementations to Go (which might result in similar problems).

##### Diff as a standalone RESTful server

The diff will be implemented in one of the available Delta Lake SDK languages and will be called RESTfully from lakeFS to get the diff results.
Users will run and manage the server and add communication details to the lakeFS configuration file.
The problem with this approach is the friction added for the users. We intend to integrate a diff experience as seamlessly as possible, yet this solution adds the overhead of managing another server alongside lakeFS.

##### Diff as bundled Rust/WebAssembly in lakeFS

Using the `delta-rs` package to build the differ, and bundling it into the lakeFS binary using `cgo` (to communicate with Rust's FFI) or a WebAssembly package that will use Go as the WebAssembly runtime/engine.
Multiple languages can be compiled to WebAssembly, which is a great benefit, yet the engines needed to run WebAssembly in Go are implemented in other languages (not Go), are unstable, and raise compilation complexity and maintenance.

##### Diff as an external binary executable

We can trigger a diff binary from lakeFS using the `os/exec` package and get the output generated by that binary.
This is almost where we want to be, except that we'll need to somehow validate that the lakeFS server and the executable are using the same "API version" to communicate, so that the output of the binary matches what lakeFS expects.
In addition, I would like to reduce the amount of deserialization code needed to interpret the returned DTO (for maintainability and extensibility).

### Chosen architecture

#### The Microkernel/Plugin Architecture

The Microkernel/Plugin architecture is composed of two entities: a single "core system" and multiple "plugins".
In our case, the lakeFS server acts as the core system, and the different diff implementations, including the Delta diff implementation, will act as plugins.
We'll use `gRPC` as the transport protocol, which makes the language of choice almost immaterial (due to the use of protobufs as the transferred data format)
as long as the plugin is self-contained (theoretically a plugin could also depend on a system runtime, but the cost would be an added requirement for running lakeFS: support for that runtime).

#### Plugin system consideration

* The plugin system should be flexible enough such that it won't impose a language restriction, so that in the future we could have lakeFS users take part in plugin creation (not restricted to diffs).
* The plugin system should support multiple OSs.
* The plugin system should make it easy to write plugins.

#### HashiCorp Plugin system

HashiCorp's battle-tested [`go-plugin`](https://github.com/hashicorp/go-plugin) system is a plugin system over RPC (used in Terraform, Vault, Consul, and Packer).
Currently, it's only designed to work over a local network.
Plugins can be written and consumed in almost every major language. This is achieved by supporting [gRPC](http://www.grpc.io/) as the communication protocol.

* The plugin system works by launching subprocesses and communicating over RPC (both `net/rpc` and `gRPC` are supported).
  A single connection is made between any plugin and the core process.
  For gRPC-based plugins, the HTTP/2 protocol handles [connection multiplexing](https://freecontent.manning.com/animation-http-1-1-vs-http-2-vs-http-2-with-push/).
* The plugin and core system are separate processes, which means that a crash of a plugin won't cause the core system (lakeFS in our case) to crash.

[(excalidraw file)](../open/diagrams/microkernel-overview.excalidraw)

---

## Implementation

[(excalidraw file)](../open/diagrams/delta-diff-flow.excalidraw)

#### DiffService

The DiffService will be an internal component in lakeFS which will serve as the core system.
To discover which diff plugins are available, we shall use a new manifest section and environment variables:

```yaml
diff:
  <diff_type>:
    plugin: <plugin_name_1>
plugins:
  <plugin_name_1>: <location to the 'plugin_name_1' plugin - full path to the binary needed to execute>
  <plugin_name_2>: <location to the 'plugin_name_2' plugin - full path to the binary needed to execute>
```

1. `LAKEFS_DIFF_{DIFF_TYPE}_PLUGIN`
   The DiffService will use the plugin name provided by this env var to load the binary and perform the diff. For instance,
   `LAKEFS_DIFF_DELTA_PLUGIN=delta_diff_plugin` will search for the binary path under the manifest path
   `plugins.delta_diff_plugin` or the environment variable `LAKEFS_PLUGINS_DELTA_DIFF_PLUGIN`.
   If a given plugin path is not found in the manifest or env var (e.g. `delta_diff_plugin` in the above example),
   the binary of a plugin with the same name will be searched for under `~/.lakefs/plugins` (as the
   [plugins' default location](https://github.com/hashicorp/terraform/blob/main/plugins.go)).
2. `LAKEFS_PLUGINS_{PLUGIN_NAME}`
   This environment variable (and corresponding manifest path) will provide the path to the binary of a plugin named
   {PLUGIN_NAME}, for example, `LAKEFS_PLUGINS_DELTA_DIFF_PLUGIN=/path/to/delta/diff/binary`.
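
The lookup order described above can be sketched as follows. This is a minimal illustration rather than the DiffService implementation (which is part of the Go lakeFS server): the function name `resolve_plugin_path` and the manifest-as-dictionary representation are hypothetical, and the precedence of environment variables over the manifest is an assumption, since the design does not state which one wins.

```python
import os

# Default plugin directory named in the design: ~/.lakefs/plugins
DEFAULT_PLUGINS_DIR = os.path.join(os.path.expanduser("~"), ".lakefs", "plugins")


def resolve_plugin_path(diff_type, manifest, env=None, default_dir=DEFAULT_PLUGINS_DIR):
    """Return (plugin_name, binary_path) for a diff type (hypothetical helper).

    `manifest` is the parsed manifest as nested dicts (assumed representation).
    """
    env = os.environ if env is None else env

    # 1. Plugin name: LAKEFS_DIFF_{DIFF_TYPE}_PLUGIN, else manifest diff.<type>.plugin.
    #    Assumption: the env var takes precedence over the manifest.
    name = env.get(f"LAKEFS_DIFF_{diff_type.upper()}_PLUGIN") or (
        manifest.get("diff", {}).get(diff_type, {}).get("plugin")
    )
    if not name:
        raise LookupError(f"no plugin configured for diff type {diff_type!r}")

    # 2. Binary path: LAKEFS_PLUGINS_{PLUGIN_NAME}, else manifest plugins.<name>.
    path = env.get(f"LAKEFS_PLUGINS_{name.upper()}") or manifest.get("plugins", {}).get(name)

    # 3. Fallback: a binary with the plugin's name under the default directory.
    if not path:
        path = os.path.join(default_dir, name)
    return name, path
```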

The **type** of diff will be sent as part of the request to lakeFS as specified [here](#api).
The communication between the DiffService and the plugins, as explained above, will be through the `go-plugin` package (`gRPC`).

#### Delta Diff Plugin

Implemented using [delta-rs](https://github.com/delta-io/delta-rs) (Rust), this plugin will perform the diff operation using table paths provided by the DiffService through a `gRPC` call.
To query the Delta Table from lakeFS, the plugin will generate an S3 client (this is a constraint imposed by the `delta-rs` package) and send a request to lakeFS's S3 gateway.

* The objects requested from lakeFS will only be the `_delta_log` JSON files, as they are the files that construct a table's history.
* We shall limit the number of returned objects to 1000 (each entry is ~500 bytes).<sup>[2](#future-plans)</sup>

_The diff algorithm:_
1. Get the table version of the base common ancestor.
2. Run a variation of the Delta [HISTORY command](https://docs.delta.io/latest/delta-utility.html#history-schema)<sup>[3](#future-plans)</sup>
   (this variation starts from a given version number) on both table paths, starting from the version retrieved above.
    - If the left table doesn't include the base version (from point 1), start from the oldest version greater than the base.
3. Operate on the [returned "commitInfo" entry vector](https://github.com/delta-io/delta-rs/blob/d444cdf7588503c1ebfceec90d4d2fadbd50a703/rust/src/delta.rs#L910)
   starting from the **oldest** version of each history vector that is greater than the base table version:
    1. If one table version is greater than the other table version, increase the smaller table's version until it gets to the same version of the other table.
    2. While there are more versions available and the answer's vector size < 1001:
        1. Create a hash for the entry based on the values of the fields `timestamp`, `operation`, `operationParameters`, and `operationMetrics`.
        2. Compare the hashes of the versions.
        3. If they **aren't equal**, add the "left" table's entry to the returned history list.
        4. Traverse one version forward in both vectors.
4. Return the history list.

### Authentication<sup>[4](#future-plans)</sup>

The `delta-rs` package generates an S3 client which will be used to communicate back to lakeFS (through the S3 gateway).
In order for the S3 client to communicate with lakeFS (or S3 in general) it needs to pass an AWS access key ID and secret access key.
Since application credentials are not mandatory, a user who requests a diff might not have any, and even if they do,
they cannot send them through the GUI (which is the UI we chose to implement this feature for the MVP).
To overcome this scenario, we'll use special diff credentials as follows:
1. User makes a Delta Diff request.
2. The DiffService checks if the user has "diff credentials" in the DB:
    1. If there are such credentials, it will use them.
    2. If there aren't, it will generate the credentials and save them: `{AKIA: DIFF-<>, SAK: <>}`. The `DIFF` prefix will be used to identify "diff credentials".
3. The DiffService will pass the credentials to the Delta Diff plugin as part of the `gRPC` call.

### API

- GET `/repositories/repo/{repo}/otf/refs/{left_ref}/diff/{right_ref}?table_path={path}&type={diff_type}`
    - Tagged as experimental
    - **Response**:
      The response includes an array of operations from different versions of the specified table format, and the type of diff:
      `changed`, `created`, or `dropped`.
      It has a general structure that enables formatting the different table format operation structs.
        - `DiffType`:
            - description: the type of change
            - type: string
        - `Results`:
            - description: an array of differences
            - type: array[
                - id:
                    - type: string
                    - description: the version/snapshot/transaction id of the operation.
                - timestamp (epoch):
                    - type: long
                    - description: operation's timestamp.
                - operation:
                    - type: string
                    - description: operation's name.
                - operationContent:
                    - type: map
                    - description: an operation content specific to the table format implemented.
                - operationType:
                    - type: string
                    - description: the type of performed operation: created, updated, or deleted

              ]

**Delta Lake response example**:

```json
{
  "table_diff_type": "changed",
  "results": [
    {
      "id": "1",
      "timestamp": 1515491537026,
      "operation_type": "update",
      "operation": "INSERT",
      "operation_content": {
        "operation_parameters": {
          "mode": "Append",
          "partitionBy": "[]"
        }
      }
    },
    ...
  ]
}
```

---

### Build & Package

#### Build

The Rust diff implementation will be located within the lakeFS repo. That way the protobuf will be shared between the
"core" and "plugin" components easily. We will use `cargo` to build the artifacts for the different architectures.

###### lakeFS development

The different plugins will have their own target in the `makefile`, which will not be included as a (sub) dependency
of the `all` target. That way we'll not force lakeFS developers to include Rust (or any other plugin runtime)
in their stack.
If developers want to develop new plugins, they'll have to include the necessary runtime in their environment.

* _Contribution guide_: It also means updating the contribution guide on how to update the makefile if a new plugin is added.

###### Docker

The Dockerfile will build the plugins and will include Rust in the build stage.

#### Package

1. We will package the binary within the released lakeFS + lakectl archive. It will be located under a `plugins` directory:
   `plugins/diff/delta_v1`.
2. We will also update the Docker image to include the binary within it. The Docker image is what clients should
   use in order to get the "out-of-the-box" experience.

---

## Metrics

### Applicative Metrics

- Diff runtime
- The total number of versions read (per diff): this is the number of Delta Log (JSON) files that were read from lakeFS by the plugin.
  This is basically the size of a Delta Log. We can use it later on to optimize reading and to understand the average size of a
  Delta Log.

### Business Statistics

- The number of unique requests by installation ID: this gives a lower bound on the number of Delta Lake users.

---

## Future plans

1. Add a separate call to the plugin that merely checks that the plugin is willing to try to run on a location.
   This allows a better UX (gray out the button until you type in a good path), and will probably be helpful for performing
   auto-detection.
2. Support pagination.
3. The `delta-rs` HISTORY command is inefficient as it sends multiple requests to get all the Delta log entries.
   We might want to hold this information in our own model to make it easier for the diff (and possibly merge) to work.
4. This design's authentication method is temporary. It raises two auth requirements that we might need to implement:
    1. Support tags/policies for credentials.
    2. Allow temporary credentials.
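
As an illustration of the diff algorithm listed under "Delta Diff Plugin", here is a minimal Python sketch. It is not the plugin itself, which is planned in Rust on top of `delta-rs`. Several things are assumptions made for the example: each table's history is given as a dictionary from version number to its `commitInfo` fields, the names `entry_hash` and `diff_histories` are hypothetical, a left version with no counterpart on the right is reported as a difference, and the cap is taken as the 1000-entry limit.

```python
import hashlib
import json

MAX_RESULTS = 1000  # assumed cap, taken from the 1000-object limit in the design

# Fields the design lists as inputs to the per-entry hash.
HASHED_FIELDS = ("timestamp", "operation", "operationParameters", "operationMetrics")


def entry_hash(entry):
    """Hash only the commitInfo fields used for comparison."""
    subset = {key: entry.get(key) for key in HASHED_FIELDS}
    return hashlib.sha256(json.dumps(subset, sort_keys=True).encode()).hexdigest()


def diff_histories(left, right, base_version):
    """Return the left table's entries that differ from the right table's.

    `left` and `right` map version number -> commitInfo dict (assumed shape).
    """
    # Step 3: only versions greater than the base, oldest first.
    left_versions = sorted(v for v in left if v > base_version)
    right_versions = sorted(v for v in right if v > base_version)
    if not left_versions:
        return []

    # Step 3.1: align both histories on the same starting version.
    start = left_versions[0]
    if right_versions:
        start = max(start, right_versions[0])

    result = []
    for version in left_versions:
        if version < start:
            continue
        if len(result) >= MAX_RESULTS:
            break
        other = right.get(version)
        # Step 3.2: compare hashes; keep the left entry when they differ.
        # Assumption: a version missing on the right counts as a difference.
        if other is None or entry_hash(left[version]) != entry_hash(other):
            result.append({"version": version, **left[version]})
    return result
```

Hashing a fixed subset of fields keeps the comparison independent of any extra metadata a writer may attach to `commitInfo`.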